iPhone Xs, iPhone Xs Max, iPhone XR cameras explained

The iPhone Xs and the iPhone Xs Max may look like they have the same cameras as the iPhone X, but there's a whole lot of improvement under the hood. You can control the depth of field in your photos after taking them, and computational photography delivers more dynamic range.

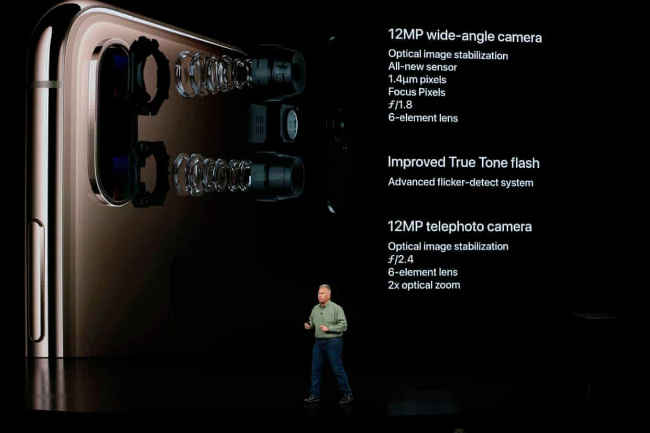

The new iPhone Xs and iPhone Xs Max were announced today, and the camera is one of the most important upgrades. Both phones have vertically aligned dual cameras at the back, much like last year's iPhone X: a 12-megapixel wide-angle lens and a 12-megapixel telephoto lens, paired with an improved True Tone LED flash. The iPhone XR has a single 12-megapixel wide-angle camera that can do much of what the dual-camera units can. The resolution, unchanged from the iPhone X, might sound disappointing, but Apple promises much better images from the new iPhones by embracing computational photography.

What’s new in the camera?

The dual camera system on the back is the same on both the iPhone Xs and the Xs Max. Apple is using larger sensors, and the pair of 12MP cameras enables 2x optical zoom and portrait shots. Up front is a 7-megapixel TrueDepth camera that works with an IR camera and a dot projector for Portrait Lighting effects and richer selfies. The technology is the same as on last year's iPhone X, but Face ID is supposedly more secure and faster.

The wide-angle lens has an f/1.8 aperture, and its larger sensor has a pixel pitch of 1.4µm, larger than on the iPhone X. The telephoto lens has an f/2.4 aperture, and both lenses are optically stabilised. Up front, the 7MP TrueDepth camera has an f/2.2 aperture and is paired with a faster sensor. The new iPhones rely on 6-element lenses for clearer photos.

The improved image signal processor (ISP) in the A12 Bionic chip makes the camera faster than the iPhone X's. The ISP is capable of 1 trillion operations per photo and uses that compute power for better red-eye reduction, semantic segmentation of details such as facial hair and glasses, improved autofocus, noise reduction, higher dynamic range and more. When recording video, the ISP also helps with tone mapping, autofocus and auto-exposure adjustments, shadow and highlight detail, and colour rendering. What's more, the new iPhones use all four microphones on the body to capture stereo sound, which the iPhone Xs and iPhone Xs Max play back with stereo widening. The new iPhones can record 4K video at 60fps, with video stabilisation.

The added smartness

“Increasingly what makes photos possible are the chip and the software that runs on it,” Phil Schiller noted. Apple has clearly worked hard on computational photography to take the iPhone's imaging much further. Smart HDR keeps four frames that are captured even before you press the shutter. It also captures secondary inter-frames at lower exposures, plus a long exposure. The ISP and the Neural Engine then analyse the frames and merge the best parts of each into the final photo. In theory, this allows more dynamic range than the camera on the iPhone X.
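Apple hasn't published how Smart HDR merges its frames, so the sketch below is not Apple's method. It illustrates the general idea with a simple, well-known technique, per-pixel exposure fusion: each output pixel is a weighted average across a bracketed stack, and frames in which that pixel sits close to mid-grey get more weight. The frame format (a flat list of grayscale values in [0, 1]), the function names and the `sigma` value are hypothetical choices for illustration.

```python
import math
from typing import List

# Hypothetical frame format: a grayscale image as a flat list of pixel values in [0, 1].
Frame = List[float]

def well_exposedness(v: float, sigma: float = 0.2) -> float:
    """Gaussian weight that favours mid-tones and penalises crushed shadows and clipped highlights."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_exposures(frames: List[Frame], sigma: float = 0.2) -> Frame:
    """Blend a bracketed stack pixel by pixel, weighting each frame by how well exposed it is there."""
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames[0])
    if any(len(f) != n for f in frames):
        raise ValueError("all frames must have the same size")
    fused = []
    for i in range(n):
        weights = [well_exposedness(f[i], sigma) for f in frames]
        total = sum(weights)  # always > 0 for values in [0, 1]
        fused.append(sum(w * f[i] for w, f in zip(weights, frames)) / total)
    return fused

# Three exposures of the same three pixels (shadow, mid-tone, highlight).
short = [0.05, 0.30, 0.45]          # underexposed: keeps highlight detail
normal = [0.20, 0.60, 0.95]         # normal exposure: highlight nearly clipped
long_exposure = [0.50, 0.90, 1.00]  # long exposure: lifts the shadows
fused = fuse_exposures([short, normal, long_exposure])
print([round(v, 2) for v in fused])
```

In this toy stack, the dark shadow pixel is lifted mostly by the long exposure, while the nearly clipped highlight is pulled back by the short exposure. A real pipeline would also have to align the frames, reject motion and work on full-colour images.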

Apple is also using newer, better algorithms for portrait shots and Portrait Lighting. You can now adjust the aperture after taking a portrait to control the amount of bokeh, which gives you full control over the blur, set in terms of aperture values. It's akin to what's found in the Huawei P20 Pro and a couple of other Android phones, including the Samsung Galaxy Note 9. Based on the presentation, though, Apple's version seems to work far better.
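To see why changing a simulated aperture changes the amount of blur, consider the thin-lens model: for a lens of focal length f at f-number N focused at distance s, a point at distance d is spread into a blur circle whose diameter is approximately proportional to (f^2/N)·|1/s - 1/d| when s is much larger than f. The sketch below applies that relationship to one row of pixels with a per-pixel depth map. It illustrates depth-based synthetic blur in general, not Apple's or Huawei's algorithm; the `strength` constant (standing in for f^2 and sensor scale) and the function names are hypothetical, and a simple variable-width box blur stands in for a real bokeh renderer.

```python
from typing import List

def blur_radius_px(depth_m: float, focus_m: float, f_number: float,
                   strength: float = 20.0) -> float:
    """Approximate blur radius in pixels for a point at depth_m when focused at focus_m.

    Uses the thin-lens proportionality blur ~ (1 / N) * |1/s - 1/d|.
    `strength` is a hypothetical constant (pixel-metres) standing in for f^2 and sensor scale.
    """
    defocus = abs(1.0 / focus_m - 1.0 / depth_m)  # defocus in dioptres (1/m)
    return strength * defocus / f_number

def depth_blur_row(row: List[float], depths_m: List[float], focus_m: float,
                   f_number: float, strength: float = 20.0) -> List[float]:
    """Blur one row of pixels, averaging over a wider window the further a pixel is from focus."""
    if len(row) != len(depths_m):
        raise ValueError("row and depth map must be the same length")
    out = []
    for i, depth in enumerate(depths_m):
        r = round(blur_radius_px(depth, focus_m, f_number, strength))
        lo, hi = max(0, i - r), min(len(row), i + r + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out

# Subject at 1 m, background at 4 m, "re-shot" after capture at f/1.4 and at f/16.
row = [0.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0]
depths = [1.0, 1.0, 1.0, 1.0, 4.0, 4.0, 4.0, 4.0]
shallow = depth_blur_row(row, depths, focus_m=1.0, f_number=1.4)
deep = depth_blur_row(row, depths, focus_m=1.0, f_number=16.0)
print(shallow[2], shallow[5])  # 1.0 0.25
print(round(deep[5], 3))       # 0.333
```

The in-focus subject pixel stays sharp at both settings, while the background highlight is spread much more widely at f/1.4 than at f/16. A real renderer would also keep the sharp subject from bleeding into the blurred background, which this simple averaging ignores.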

Apple pulls off a Google Pixel with the iPhone XR camera

The iPhone XR has only a single rear camera, and that's enough for Apple to offer most of the features found on the more expensive iPhone Xs and iPhone Xs Max. It sounds a lot like what Google did with the Pixel and the Pixel 2, and it seems Apple is finally catching up in computational photography. It's the same 12MP wide-angle camera as on the iPhone Xs, but thanks to the Neural Engine and the ISP, it can also take portrait shots with Portrait Lighting effects. Smart HDR is also there, but the depth control feature seems to be missing.

The cameras on the new iPhones may look much the same as before, but a lot has changed under the hood. Based on the samples we saw, photos are going to look much better. Apple claims the iPhone is the most-used camera in the world, and with improvements like these, Android flagships have a lot of catching up to do.