OpenAI’s ChatGPT can now see, hear & speak: Here’s how

These new capabilities are rolling out to Plus and Enterprise users.

OpenAI today announced that it has started to roll out new voice and image capabilities in ChatGPT. This means that the AI chatbot can now see, hear, and speak.

ChatGPT can now see, hear, and speak. Rolling out over next two weeks, Plus users will be able to have voice conversations with ChatGPT (iOS & Android) and to include images in conversations (all platforms). https://t.co/uNZjgbR5Bm pic.twitter.com/paG0hMshXb

— OpenAI (@OpenAI) September 25, 2023

“We are beginning to roll out new voice and image capabilities in ChatGPT. They offer a new, more intuitive type of interface by allowing you to have a voice conversation or show ChatGPT what you’re talking about,” OpenAI said in a blog post.

The new voice and image capabilities are rolling out to Plus and Enterprise users over the next two weeks. Voice is coming to iOS and Android, and images will be available on all platforms.

Users will now be able to take a picture of a landmark while travelling and have a live conversation with ChatGPT about what’s interesting about it.

“When you’re home, snap pictures of your fridge and pantry to figure out what’s for dinner (and ask follow up questions for a step by step recipe). After dinner, help your child with a math problem by taking a photo, circling the problem set, and having it share hints with both of you,” OpenAI said.

Users can also now use voice to hold a back-and-forth conversation with the AI chatbot.

To get started with voice, go to Settings > New Features on the mobile app and opt into voice conversations. Then, tap the headphone button in the top-right corner of the home screen and choose your preferred voice from the five available.

This new voice capability is powered by a new text-to-speech model, capable of generating human-like audio from just text and a few seconds of sample speech. OpenAI collaborated with professional voice actors to create each of the voices.

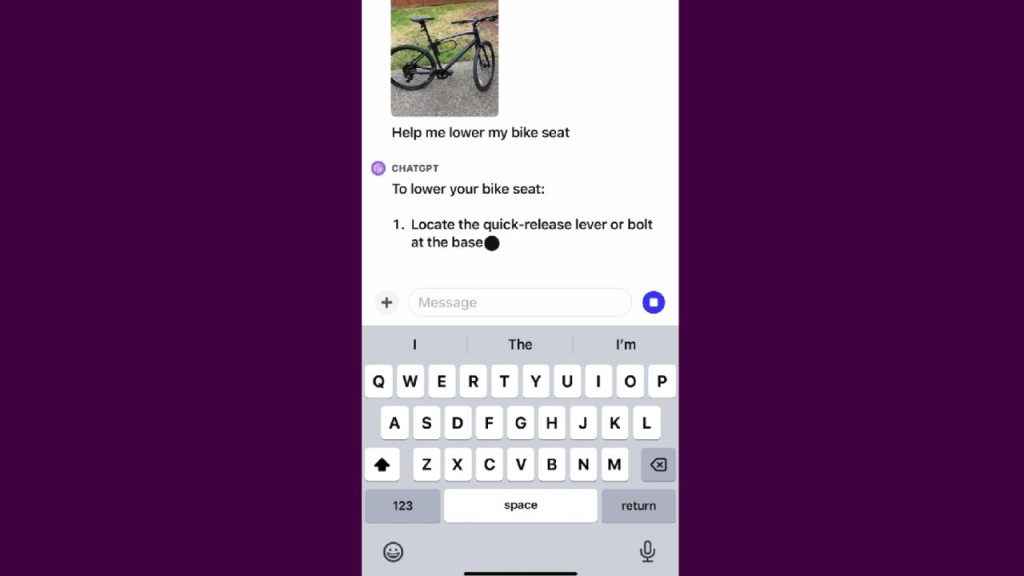

To use the image feature, tap the photo button to capture or choose an image. If you’re on iOS or Android, tap the plus button first. Image understanding is powered by multimodal GPT-3.5 and GPT-4. These models apply their language reasoning skills to a wide range of images, such as photographs, screenshots, and documents containing both text and images.

“OpenAI’s goal is to build AGI that is safe and beneficial. We believe in making our tools available gradually, which allows us to make improvements and refine risk mitigations over time while also preparing everyone for more powerful systems in the future. This strategy becomes even more important with advanced models involving voice and vision,” the company said.

Ayushi Jain

Ayushi works as Chief Copy Editor at Digit, covering everything from breaking tech news to in-depth smartphone reviews. Prior to Digit, she was part of the editorial team at IANS.