OpenAI launched GPT-4o but why can’t we access it? Find out here

OpenAI claims GPT-4o beats all current models in its ability to participate in discussions related to the images users share.

OpenAI is still rolling out this AI model in India.

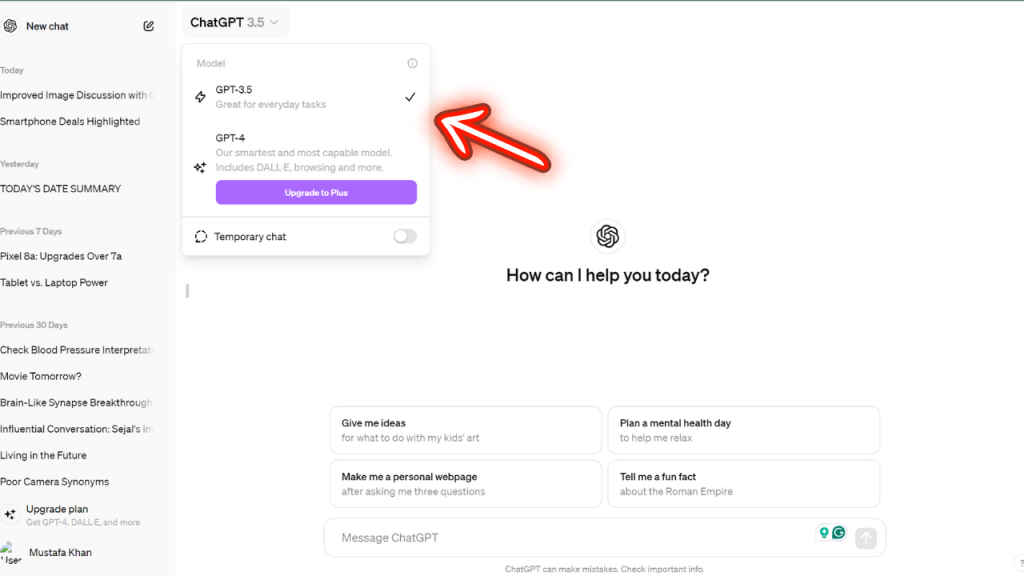

When GPT-4o is rolled out to you, it will automatically become your default AI model, replacing GPT-3.5.

OpenAI claims that GPT-4o beats all current models in its ability to comprehend and discuss the images users share. For example, using GPT-4o, you could take a picture of a menu in a foreign language and ask for a translation, explore the historical and cultural context of the dishes, and get recommendations. OpenAI is also improving the model to make it easier to have smooth, real-time voice chats and video conversations with ChatGPT.

What’s really interesting about GPT-4o is that everyone can use this new AI model, unlike GPT-4, which was limited to paying subscribers. However, many of you may have tried to access it and found that you can’t. At least, I couldn’t.


Why can’t we access GPT-4o?

When I went to try out the new model, I couldn’t find where to access it. An OpenAI support page then told me that the company is still rolling it out. So the question remains: how can we access it? The answer is that you don’t have to do anything. When GPT-4o is rolled out to you, it will automatically become your default AI model, replacing GPT-3.5. ChatGPT Plus users will get an option to switch models in the top-left corner. Basically, we just have to wait a little longer until the new model reaches everyone.


Now, what’s new?

GPT-4o is a significant upgrade: it can process and generate outputs across multiple modalities, including text, audio, and images, in real time. It does this through a single unified neural network, which makes it faster, more cost-effective, and more efficient than its predecessors.

Apart from that, GPT-4o has been rolled out in ChatGPT with initial text and image capabilities. OpenAI plans to add audio and video features later for specific partners.

Mustafa Khan

Mustafa is new on the block and is a tech geek who currently works with Digit as a News Writer. He tests the new gadgets that come on board and writes for the news desk. He has found his way with words, and you can count on him when in need of tech advice. No judgement. Based out of Delhi, he’s your person for good photos, good food recommendations, and anything GenZ.