New Smart Text Selection and Style Match features now rolling out to Google Lens – Here’s how to use them

Style Match allows Google Lens users to point their cameras at an object and bring up similar results, while Smart Text Selection allows for copying and pasting text from the real world to the phone.

At Google I/O 2018, the company demonstrated some nifty new features for Google Lens, its AI- and machine-learning-based visual search tool. Three new features were announced: Smart Text Selection, Style Match and Real-time results. At the event, Google also announced that Lens will be integrated directly into the camera app of the Pixel and a handful of other phones, making it easier for users to access it without going through Google Photos or Assistant. While we still haven’t seen Google Lens in our Pixel 2 XL’s camera app, the version of Lens available within Google Assistant has received the Smart Text Selection and Style Match updates.

Smart Text Selection lets users copy text from the camera’s view and paste it directly on their phones, whereas Style Match recognises objects such as clothing and accessories, things you might want to copy or buy, and brings up similar results from the web. Both features can now be accessed by Google Lens users.

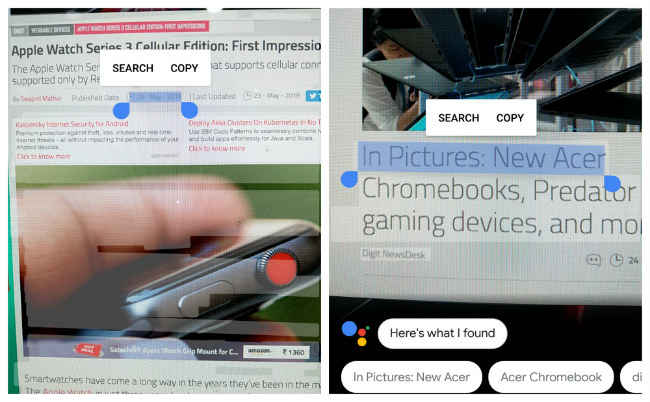

Smart Text Selection

With Smart Text Selection, copying text from the real world becomes much simpler. You no longer have to make a note of an address you spot while driving or transcribe text from a physical document; Google Lens will do it for you. To do this, open Google Assistant and tap on the Google Lens icon. Now point your camera at the text you wish to copy and tap on the text. This will select all the text in the camera’s view. Tap on the text once more to bring up the selection guides and copy the parts that you like. You can also search the web for the selected text. For instance, if you select text from a concert poster, you could search the web to find more details about the concert.

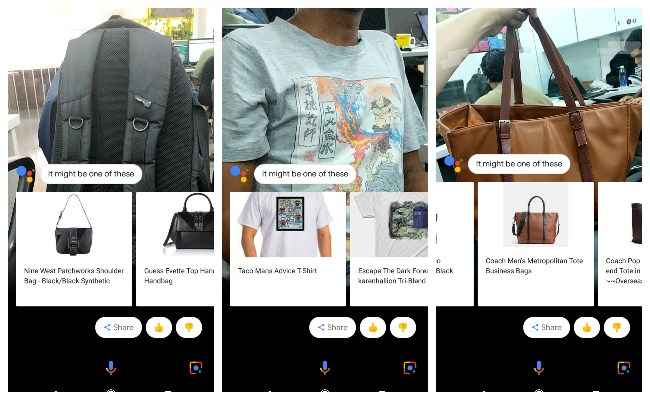

Style Match

Although the Style Match feature has rolled out to Google Lens, it does not work as well as we had imagined. Style Match could be useful for people who want to quickly search for similar objects. For instance, if you spot your friend wearing a really cool pair of shoes, you could point Google Lens at the shoes and it will bring up similar results from the web to help you find them. You can also do this for clothing items, accessories, household items, paintings and more. We tried pointing Google Lens at a backpack, but sadly it matched it with women’s purses. The result was a little closer to reality when we pointed the Lens at a T-shirt. The best matches came up when we pointed it at a generic brown tote bag. Looks like Google’s AI still needs some more training on this.

To access Style Match on Google Lens, simply point the camera at the object you want to match, tap on it, and you should see results pop up on the lower half of the app screen.

Besides these two features, we tried to check for the Real-time results feature, in which the Lens is supposed to anchor information to objects in real time using machine learning. The feature did not work for us at all and might be rolled out in the coming weeks. Google Lens is also supposed to turn a page of content into answers. For instance, when pointed at a menu, Lens will bring up images and ingredients of dishes right within the camera app to help users make a more informed choice. This also did not seem to work for us when we tried it.

We will keep tracking these new Google Lens features for you. Until then, let us know your experience with the two new Google Lens features in the comments section below.