New AI application can help you dance like a pro

Researchers have come up with a new model that uses AI and deep learning to replace the moves of a person dancing in a target video with those of a professional.

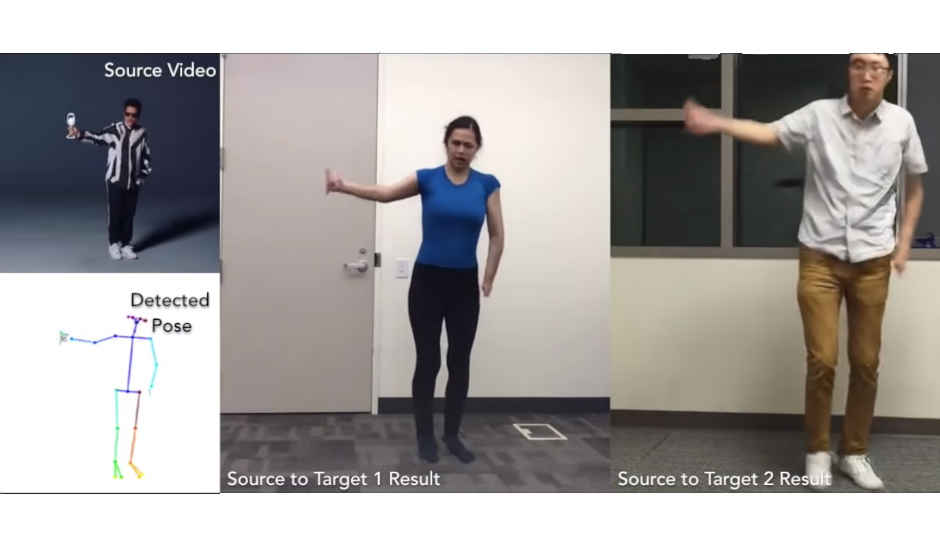

Researchers at UC Berkeley have come up with a new application for AI that will help anyone bust a move. Dubbed a deepfake for dancing, the new method was first spotted by The Verge, and the paper is published on arXiv. The research paper details how deep learning techniques can be used to transfer motion between human subjects in different videos. In simple terms, the method superimposes dance moves from a source video onto a person in a target video. However, it is more complicated than that, as it takes a series of steps to achieve good results.

The whole process of creating the dance routine can be divided into three stages: pose detection, global pose normalization, and mapping from normalized pose stick figures to the target subject. To detect poses, the system needs a source video and a target video onto which it will superimpose the routine. A sub-program then converts the movements in the videos into stick figures. However, for the target video, the program requires around 20 minutes of good-quality footage captured at 120 frames per second to get a good-quality transfer of poses to stick figures. Additionally, the system does not encode information about clothes, so the people dancing in the target videos had to wear tight clothing with minimal wrinkling.

It’s a given that the people dancing in the source video and the target video will differ in how they move and appear on screen. This is where it gets tricky and the global pose normalization comes into play. It accounts for differences between the source and target body shapes and locations within the frame. Finally, the team designed a system that uses adversarial training to learn the mapping from the normalized pose stick figures, which were created in the first step of the process, to images of the target person.
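To make the normalization idea concrete, here is a minimal sketch in Python. It is not the researchers' code: the function name `normalize_pose`, the summary values `height`, `ankle_y` and `center_x`, and the simple scale-and-shift rule are all assumptions made for illustration. It only shows how stick-figure keypoints from a source dancer could be resized and repositioned to fit a target dancer's proportions and place in the frame.

```python
import numpy as np


def normalize_pose(source_pose, source_stats, target_stats):
    """Scale and shift source keypoints to match the target's size and position.

    source_pose: sequence of (x, y) pixel coordinates, one row per joint.
    source_stats / target_stats: hypothetical per-person summaries with
        'height'   - body height in pixels,
        'ankle_y'  - vertical pixel position of the ankles,
        'center_x' - horizontal pixel position of the body center.
    """
    pose = np.asarray(source_pose, dtype=float)

    # Ratio of body heights: how much the source figure must shrink or grow.
    scale = target_stats["height"] / source_stats["height"]

    normalized = np.empty_like(pose)
    # Horizontal: measure offsets from the source's center, rescale them,
    # then place them around the target's center.
    normalized[:, 0] = (pose[:, 0] - source_stats["center_x"]) * scale + target_stats["center_x"]
    # Vertical: measure offsets from the source's ankles, rescale them,
    # then place them relative to the target's ankles.
    normalized[:, 1] = (pose[:, 1] - source_stats["ankle_y"]) * scale + target_stats["ankle_y"]
    return normalized


# Illustrative values only: a tall source dancer mapped onto a smaller target.
source_stats = {"height": 200.0, "ankle_y": 400.0, "center_x": 100.0}
target_stats = {"height": 100.0, "ankle_y": 300.0, "center_x": 50.0}
head_and_ankle = [[100.0, 200.0], [100.0, 400.0]]
print(normalize_pose(head_and_ankle, source_stats, target_stats))
```

In this sketch the rescaled figure keeps the source dancer's pose but ends up with the target's height and standing spot, which is the kind of adjustment the normalization stage is described as making.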

The whole process is aided by subroutines that smooth out the movements so that there are fewer jerks. There is also a neural network dedicated to generating the face of the person in the target video to increase realism. However, there are some instances where the AI fails to encode the dance moves. This is particularly evident when the input motion or movement speed differs between the source and the target. One can notice rendering failures and jitters when the source’s movements are too fast to be superimposed over the person dancing in the target video. Still, the results are both impressive and worrying, as they show how accurately and easily video can be manipulated with the use of AI.