MIT uses AI to teach its robot to see, feel and recognise objects

Researchers at MIT's CSAIL teach a robot arm to see, feel and recognise things using a new AI-driven optics system.

The system relies on just one simple web camera, which was used to record nearly 200 objects around the arm.

Understanding one's surroundings through sight and touch comes naturally to living beings like you and me, but not to computers and robots. To bridge that gap, researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a type of predictive artificial intelligence (AI) that can “learn to see by touching, and learn to feel by seeing.” In other words, the scientists at MIT have used AI to teach a robot to combine two senses in a more human-like way.

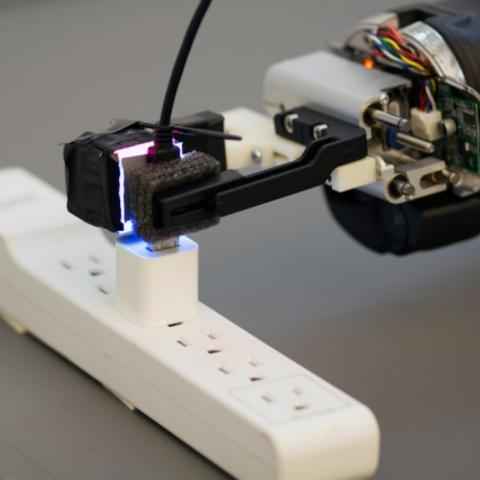

According to an MIT news release, the system created by the scientists at CSAIL can generate realistic tactile signals (a sense of touch and feel) from visual inputs. The system was tested on a KUKA robot arm fitted with a special tactile sensor called GelSight, which was designed by another group at MIT. Using just one simple web camera, the team recorded nearly 200 objects around the arm, such as tools, household products, fabrics and more.

During the tests at CSAIL, the single camera recorded these objects being touched more than 12,000 times. Each of the 12,000 resulting video clips was broken down into static frames to create “VisGel”, a dataset of more than 3 million visual/tactile-paired images. The system also uses generative adversarial networks (GANs) to better model the interaction between vision and touch, something that had been a challenge in a similar MIT project back in 2016.
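To make the dataset step more concrete, here is a minimal sketch of how video clips might be broken into paired frames. It is not CSAIL's actual VisGel pipeline: the `Clip` class and `build_paired_dataset` function are hypothetical, strings stand in for real image frames, and it assumes the webcam and GelSight streams are already aligned frame by frame. For scale, more than 3 million pairs from more than 12,000 clips works out to roughly 250 frame pairs per clip on average.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical frame type: a real pipeline would store image arrays,
# but strings keep this sketch self-contained.
Frame = str


@dataclass
class Clip:
    """One recorded touch (hypothetical structure).

    Assumes the webcam video and the GelSight video were captured
    in sync, so index i in each list refers to the same moment.
    """
    visual_frames: List[Frame]
    tactile_frames: List[Frame]


def build_paired_dataset(clips: List[Clip]) -> List[Tuple[Frame, Frame]]:
    """Break every clip into static frames and pair each visual frame
    with the tactile frame captured at the same time step."""
    pairs: List[Tuple[Frame, Frame]] = []
    for clip in clips:
        # zip stops at the shorter stream, so any unmatched
        # trailing frames are dropped rather than mispaired.
        pairs.extend(zip(clip.visual_frames, clip.tactile_frames))
    return pairs


# Usage: two toy clips, the second with one extra tactile frame.
clips = [
    Clip(["v0", "v1", "v2"], ["t0", "t1", "t2"]),
    Clip(["v0b", "v1b"], ["t0b", "t1b", "t2b"]),
]
dataset = build_paired_dataset(clips)
print(len(dataset))  # 5
```

Pairing by time step is the simplest way to express the idea of "visual/tactile-paired images"; a real system would also need to handle synchronisation and cropping, which this sketch leaves out.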

“By looking at the scene, our model can imagine the feeling of touching a flat surface or a sharp edge,” explains Yunzhu Li, a PhD student at CSAIL and lead author on a new paper about the recently developed system. “By blindly touching around, our model can predict the interaction with the environment purely from tactile feelings. Bringing these two senses together could empower the robot and reduce the data we might need for tasks involving manipulating and grasping objects.”

Cover image courtesy: MIT

Vignesh Giridharan

Progressively identifies more with the term ‘legacy device’ as time marches on.