Microsoft closes loophole behind Taylor Swift’s explicit images: Here’s what happened

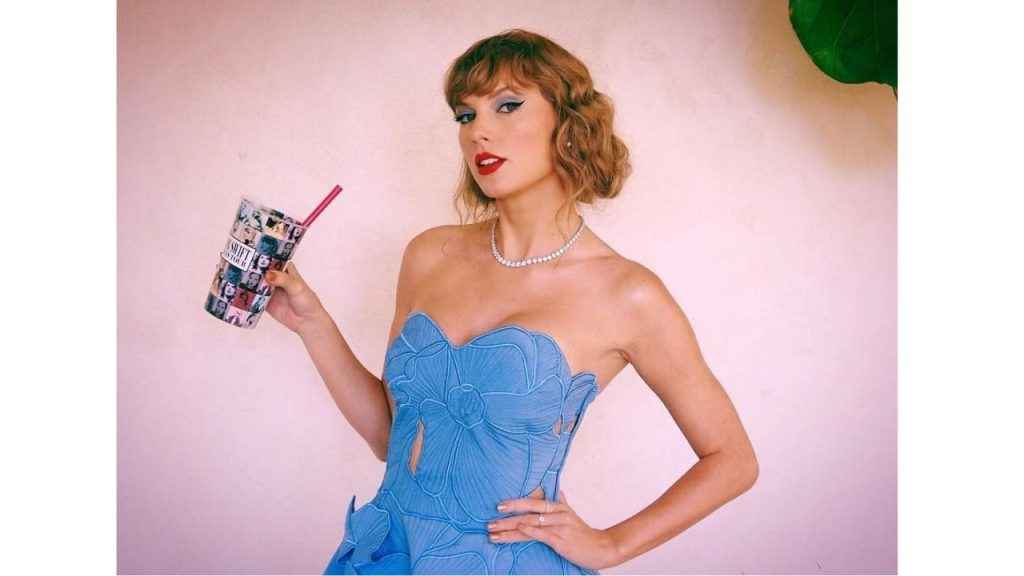

Taylor Swift's AI-generated explicit images went viral last week.

Microsoft has added more protections to its AI text-to-image tool ‘Designer’, which was reportedly misused to create those images.

The loopholes people used to create inappropriate images no longer work.

Microsoft has implemented additional protections in its AI text-to-image tool ‘Designer’, which was reportedly misused to create non-consensual sexual images of celebrities. The company took these measures after AI-generated nude images of Taylor Swift went viral on X (formerly known as Twitter) last week.

“We are investigating these reports and are taking appropriate action to address them,” a Microsoft spokesperson told 404 Media.

“Our Code of Conduct prohibits the use of our tools for the creation of adult or non-consensual intimate content, and any repeated attempts to produce content that goes against our policies may result in loss of access to the service. We have large teams working on the development of guardrails and other safety systems in line with our responsible AI principles, including content filtering, operational monitoring and abuse detection to mitigate misuse of the system and help create a safer environment for users,” the spokesperson added.

Microsoft stated that its ongoing investigation had not been able to confirm whether the images of Taylor Swift on X were created using Designer. However, the company affirmed its commitment to strengthening its filtering of text prompts and addressing the misuse of its services to prevent similar incidents.

Last week, Microsoft CEO Satya Nadella said in an interview with NBC News that it is the company’s responsibility to implement additional “guardrails” on AI tools to prevent the generation of harmful content. Subsequently, over the weekend, X took measures to completely block searches for “Taylor Swift”.

“It’s about global, societal convergence on certain norms, and we can do it, especially when you have law and law enforcement and tech platforms that can come together—I think we can govern a lot more than we give ourselves credit for,” Nadella said.

According to 404 Media, the loopholes users used to create inappropriate images no longer work.

Before the AI-generated images featuring Taylor Swift went viral last week, Designer already blocked explicit text prompts such as “Taylor Swift nude.” However, users found ways to dodge these protections by slightly misspelling celebrity names and describing images without explicit sexual terms, while still obtaining sexually suggestive results.

404 Media reports that these loopholes were effective on Thursday, before Microsoft implemented the changes, and that they no longer work.

Ayushi Jain

Ayushi works as Chief Copy Editor at Digit, covering everything from breaking tech news to in-depth smartphone reviews. Prior to Digit, she was part of the editorial team at IANS.