Google Translate taps into Deep Learning to reduce errors by 60%

Machine learning algorithms boost capabilities of Google Translate compared to previous phrase-based approach

The go-to place for quick and easy translations, Google Translate, just received a huge upgrade, with Deep Learning algorithms boosting its translation capabilities and reducing errors by 60%. Google’s experiments with neural machine translation have paid off in a big way.

Like most translation services, Google Translate previously relied on breaking sentences down into smaller phrases or groups of words, translating these phrases, and then joining them together to produce the output. With Neural Machine Translation, Google Translate can translate entire sentences without breaking them into phrases. This new approach is said to reduce errors by an average of 60 percent compared to the previous phrase-based approach.
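To make the old approach concrete, here is a deliberately tiny Python sketch of phrase-based translation: the sentence is split greedily into the longest chunks found in a phrase table, each chunk is translated on its own, and the pieces are joined. The phrase table (`PHRASE_TABLE`), the German example, and the function name `translate_phrase_based` are hypothetical illustrations; real phrase-based systems learn millions of scored phrase pairs and also model word reordering, which this sketch omits.

```python
# Hypothetical toy phrase table: source phrase (tuple of words) -> target phrase.
# Real phrase-based systems learn millions of scored entries from parallel text.
PHRASE_TABLE = {
    ("guten", "morgen"): "good morning",
    ("guten",): "good",
    ("morgen",): "tomorrow",
    ("mein", "freund"): "my friend",
    ("mein",): "my",
    ("freund",): "friend",
}

MAX_PHRASE_LEN = 3  # longest source phrase we try to match


def translate_phrase_based(sentence: str) -> str:
    """Greedy left-to-right phrase-based translation (no reordering, no scoring)."""
    words = sentence.lower().split()
    output = []
    i = 0
    while i < len(words):
        # Try the longest matching phrase first, then progressively shorter ones.
        for length in range(min(MAX_PHRASE_LEN, len(words) - i), 0, -1):
            chunk = tuple(words[i:i + length])
            if chunk in PHRASE_TABLE:
                output.append(PHRASE_TABLE[chunk])
                i += length
                break
        else:
            # No phrase matched: copy the unknown word through unchanged.
            output.append(words[i])
            i += 1
    # Each chunk was translated in isolation; the pieces are simply joined.
    return " ".join(output)


print(translate_phrase_based("Guten Morgen mein Freund"))  # prints: good morning my friend
```

Because each chunk is looked up without the rest of the sentence, the toy system only renders "Morgen" as "morning" when the table happens to contain the longer phrase "Guten Morgen"; on its own the word falls back to "tomorrow". Translating whole sentences at once is meant to avoid exactly this loss of context.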

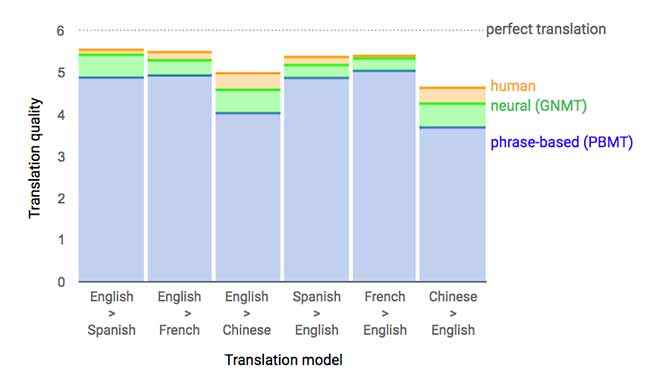

Translation quality scores: 0 means nonsensical, 6 means perfect.

While many companies have begun experimenting with Deep Learning techniques and algorithms for Natural Language Processing (NLP), Google is reportedly the first to publicly announce their use in a translation product. In a blog post, Google introduced the Google Neural Machine Translation system (GNMT), which already handles 100 percent of Google Translate’s Chinese-to-English machine translations, currently about 18 million translations per day. On several major language pairs, measured on sentences sampled from Wikipedia and news websites, GNMT reduced translation errors by 55 to 85 percent or more.
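The article does not spell out how "reducing errors" is measured. One reading, assumed here, treats a system’s error as the gap between its average rating on the 0 to 6 scale and the rating given to human translations, and reports how much of that gap the new system closes. The function name `relative_error_reduction` and all three scores are hypothetical placeholders, chosen so the arithmetic lands on the headline’s 60 percent; they are not Google’s published figures.

```python
def relative_error_reduction(old_score: float, new_score: float, human_score: float) -> float:
    """Fraction of the quality gap to human translation closed by the new system.

    Assumption: "error" = human_score - system_score on the same 0-6 rating scale.
    """
    old_error = human_score - old_score
    new_error = human_score - new_score
    if old_error <= 0:
        raise ValueError("old system must score below the human reference")
    return (old_error - new_error) / old_error


# Hypothetical average ratings on the 0 (nonsensical) to 6 (perfect) scale.
phrase_based = 3.5
neural = 4.4
human = 5.0

reduction = relative_error_reduction(phrase_based, neural, human)
print(f"errors reduced by {reduction:.0%}")  # prints: errors reduced by 60%
```

Under this reading, a system whose rating moves halfway from the old score to the human score has reduced errors by 50 percent, regardless of where on the 0 to 6 scale it started.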

GNMT sample translation from Chinese to English

How does Google Neural Machine Translation work?

Explaining Google Neural Machine Translation in detail is no easy task, but here is a brief overview of how it performs a translation, using a Chinese sentence that reads "Knowledge is power". First, the encoder reads the Chinese words one by one and turns them into a list of vectors (lists of numbers), where each vector represents the meaning of all the words read so far. Once the entire sentence has been encoded, the decoder takes over and generates the English sentence one word at a time. To produce each English word, the decoder pays attention to a weighted distribution over the encoded Chinese vectors: the vectors most relevant to the word currently being generated receive the highest weights. The parameters that decide these weights are learned during training on large amounts of bilingual text, and the weighted combination of vectors is then used to generate the most relevant English word.
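The encode-then-attend loop described above can be sketched in plain Python. This is a toy under stated assumptions, not GNMT itself: the vectors are tiny, every weight is random rather than learned, the encoder is a single-layer recurrent network rather than GNMT’s deep stack of LSTM layers, and relevance is scored with a simple dot product, whereas GNMT uses a small feed-forward network for that step. The output layer also scores English words against the context vector alone, a simplification, since a real decoder combines it with its own state. All names (`encode`, `attend`, `pick_word`, `EMBED`, `OUT`) are hypothetical. With random weights the chosen word is arbitrary; only the mechanics are meaningful.

```python
import math
import random

random.seed(0)  # fixed seed so the toy run is repeatable
DIM = 4  # toy vector size; real systems use far larger vectors


def rand_vector(n):
    return [random.uniform(-1.0, 1.0) for _ in range(n)]


def rand_matrix(rows, cols):
    # Random stand-ins for weights a real system would learn from bilingual text.
    return [rand_vector(cols) for _ in range(rows)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(m, v):
    return [dot(row, v) for row in m]


def softmax(xs):
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Pinyin for the Chinese sentence "Knowledge is power", pre-split into three words.
SOURCE = ["zhishi", "jiushi", "liliang"]
EMBED = {word: rand_vector(DIM) for word in SOURCE}  # hypothetical word embeddings
W_IN = rand_matrix(DIM, DIM)   # input-to-state weights
W_REC = rand_matrix(DIM, DIM)  # state-to-state (recurrent) weights
OUT = {word: rand_vector(DIM) for word in ["knowledge", "is", "power"]}  # hypothetical output layer


def encode(words):
    """Simple recurrent encoder: each output vector summarizes all words read so far."""
    state = [0.0] * DIM
    encoded = []
    for word in words:
        mixed = [a + b for a, b in zip(matvec(W_IN, EMBED[word]), matvec(W_REC, state))]
        state = [math.tanh(x) for x in mixed]
        encoded.append(state)
    return encoded


def attend(decoder_state, encoded):
    """Dot-product attention: weight each encoded vector by its relevance."""
    weights = softmax([dot(decoder_state, vec) for vec in encoded])  # non-negative, sums to 1
    # Context vector: the weighted average of the encoded vectors.
    context = [sum(w * vec[i] for w, vec in zip(weights, encoded)) for i in range(DIM)]
    return weights, context


def pick_word(context):
    """Emit the English word whose output vector best matches the context vector."""
    return max(OUT, key=lambda word: dot(OUT[word], context))


encoded = encode(SOURCE)
decoder_state = rand_vector(DIM)  # stand-in for the decoder's current hidden state
weights, context = attend(decoder_state, encoded)
for word, weight in zip(SOURCE, weights):
    print(f"{word}: attention weight {weight:.2f}")
print("next English word:", pick_word(context))
```

In a trained system, the decoder repeats the attend-and-pick step once per English word, updating its own state each time, so the attention weights shift from one Chinese word to the next as the translation progresses.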

GNMT in action translating Chinese to English.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 10 years of experience covering consumer technology. He is currently a Managing Editor at Digit. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices, as well as anything developer-related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires, or being the voice behind hundreds of Digit videos.