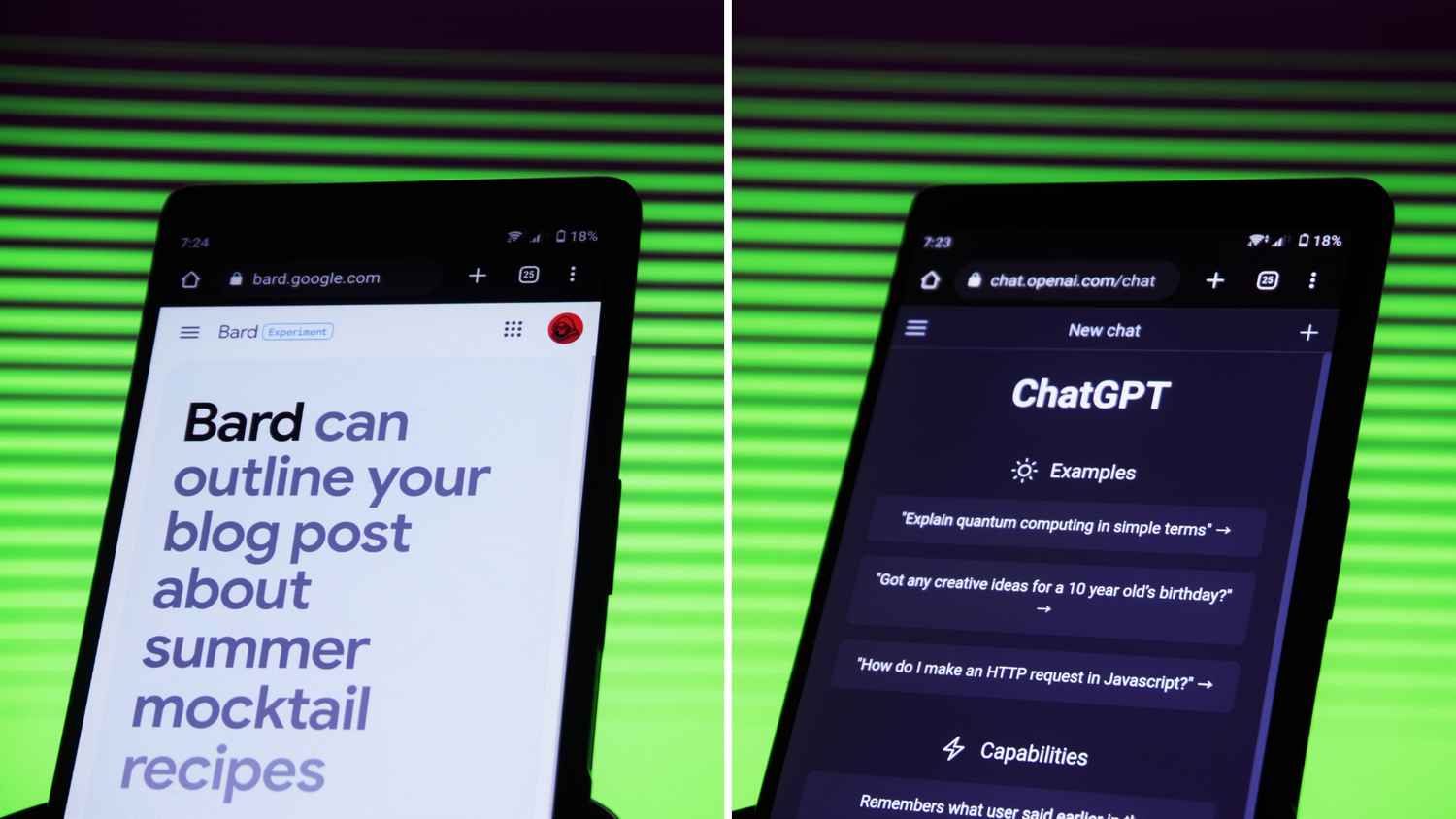

AI was the focus of Google I/O 2023, and the company has opened up Google Bard to the public in 180+ countries without a waitlist. We tried various things on Bard and compared the results with ChatGPT. So, is the new Bard better than ChatGPT? Is it really the ChatGPT killer or the next-gen generative AI chatbot that Google wants it to be? Let’s find out.

Here is how to access Bard:

1. Go to bard.google.com

2. Sign in with your personal Google account.

3. Read and accept the terms and conditions.

4. Start using Bard.

But before you do, here are some things to know.

1. Bard keeps a record of your past conversations and shapes its subsequent responses accordingly. So, you are advised against sharing sensitive information with Bard.

2. Bard admits that it is in an experimental state and may give inaccurate or inappropriate information. Google assumes no responsibility or liability for Bard’s views.

3. It warns you not to take its responses as professional advice from a medical, legal, or financial standpoint.

4. It is able to generate content like text, poems, code, scripts, musical pieces, emails, letters, etc.

On to our test.

We tried the following queries and fun things on Bard and ChatGPT. Here’s how both of them fared.

If you ask ChatGPT and Bard to debug code:

```javascript
const date = new Date("21,3,2023") // incorrect code

console.log("date=>", date) // Invalid Date
```

Both of them are able to do it fine.
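The article does not reproduce either chatbot's answer, so the following is only a minimal sketch of one possible fix, not what Bard or ChatGPT actually returned. It assumes the string means day 21, month 3, year 2023; the helper name `parseDayMonthYear` is hypothetical and introduced here for illustration.

```javascript
// Assumption: the input is "day,month,year", e.g. "21,3,2023" for 21 March 2023.
// The Date constructor does not reliably parse that string, so split it by hand.
function parseDayMonthYear(text) {
  const [day, month, year] = text.split(",").map(Number);

  // Months are zero-based in the Date constructor, so March is 2.
  const date = new Date(year, month - 1, day);

  // Reject non-numeric input and values that roll over (for example day 32).
  if (
    date.getFullYear() !== year ||
    date.getMonth() !== month - 1 ||
    date.getDate() !== day
  ) {
    throw new RangeError(`Not a valid day,month,year string: ${text}`);
  }
  return date;
}

const date = parseDayMonthYear("21,3,2023");
console.log("date=>", date.toDateString());
```

Passing the numeric parts to the constructor avoids relying on engine-specific string parsing, which is where the original snippet goes wrong.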

I then asked ChatGPT and Bard to code a terminal-based game of tic-tac-toe, and both of them were able to do that too.

I asked ChatGPT and Google Bard to review the Pixel 7a. While Bard gave a description of the product’s features and specifications and a generic conclusion, ChatGPT (GPT-3.5) declined, stating that it doesn’t know about the Pixel 7a.

Explaining things is trickier. Although both of them attempt to explain things, they tend to fail when the subject is too technical or esoteric. For instance, I asked them to tell me about PHOLED displays, and their responses included some inaccuracies. Across multiple tries, the responses were also inconsistent.

That said, Bard’s responses were more technical in nature, if that’s something you want. Bard sometimes shows citations or sources for the information it shares, but not always.

You also get related searches beneath some of Bard’s responses, which could be useful.

I asked both ChatGPT and Bard to create a resume for an editorial profile on a tech website. ChatGPT’s response was better framed: it was neater and easier to follow.

You can use both of them to create text for a blog, email, etc. They can also help you proofread your write-up, check grammar, and improve clarity where needed.

I told both of them that I was feeling low, to see how they would respond and what they would do to make me feel better. I felt Bard’s responses were more humane (if you will). This is very important when you are conversing with a bot. But, of course, there have to be some guardrails that ensure the bot’s responses are safe.

So, at least in our tests, we think Google’s AI chatbot does better. Your mileage may vary. Also, we haven’t tried GPT-4 for this test. It may perform differently than ChatGPT (based on GPT-3.5).