Beware! AI Voice cloning could be scammers’ next big tool

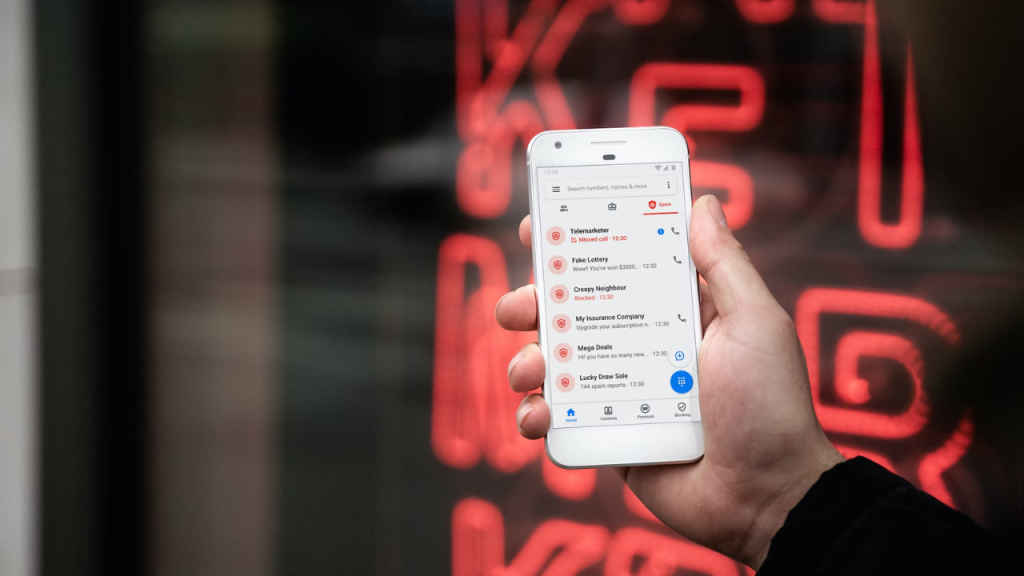

Scammers have found a new way to con you out of your hard-earned money.

Scammers are now using voice clones.

They just need an audio sample of a person, and then they use AI to create synthetic audio.

As technology advances, so do the scams around us. Whenever we are online, we have to be very careful, because a single mistake can lead to a scam that costs us a lot of money. With the rise of AI, scammers have found a new tool to con innocent people.

AI has been a big helping hand for these scammers. Have you heard of deepfakes or voice clones? You probably have, as people all over the internet have been raising concerns about AI being used to imitate someone else. Remember the Rashmika Mandanna case? That was a deepfake.

Scammers are now using voice clones. These voice clones are easy to generate and can require as little as three seconds of audio input. You cannot easily tell a clone apart from the actual voice. The con artists are using tools from legitimate AI startups such as ElevenLabs, Speechify, Respeecher, Narakeet, Murf.ai, Lovo.ai, and Play.ht to create voice clones.

To create a voice clone, scammers just need an audio sample of a person, which they feed to an AI tool to generate synthetic audio. Source audio is easy to come by: scammers can pull it from Instagram, YouTube, or any other social media platform, or even from a short phone conversation.

Speaking on the issue, Jaspreet Bindra, founder of Tech Whisperer, said, “Deepfakes in general are quite dangerous, and particularly voice AI shall soon evolve into an organised phishing tool. For instance, job scams will now convert from a WhatsApp message to an actual HR voice calling you,” as reported by the Economic Times.

A recent McAfee survey revealed that 47% of Indians have either experienced an AI voice scam or know someone who has. Among Indian victims, 83% said that they lost money to such scams.

Mustafa Khan

Mustafa is new on the block and is a tech geek who is currently working with Digit as a News Writer. He tests the new gadgets that come on board and writes for the news desk. He has found his way with words and you can count on him when in need of tech advice. No judgement. Based out of Delhi, he’s your person for good photos, good food recommendations, and anything GenZ.