This tutorial explains how to use Intel INDE Media Pack for Android to add video capturing capability to Unity applications.

Prerequisites:

- Unity 4.3.0 (Pro). Capturing is implemented as a full-screen image post-processing effect, and in Unity 4.x such effects are available only in Unity Pro.

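- Android SDK with the Android 4.3 (API level 18) platform installed; both the manifest and the build script below target API level 18.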

This tutorial shows how to create and compile your own Unity plugin for Android. Let's start.

Open Unity and create a new project. In the Project window, create a folder named Plugins under Assets, and inside it a folder named Android, so that you end up with /Assets/Plugins/Android/.

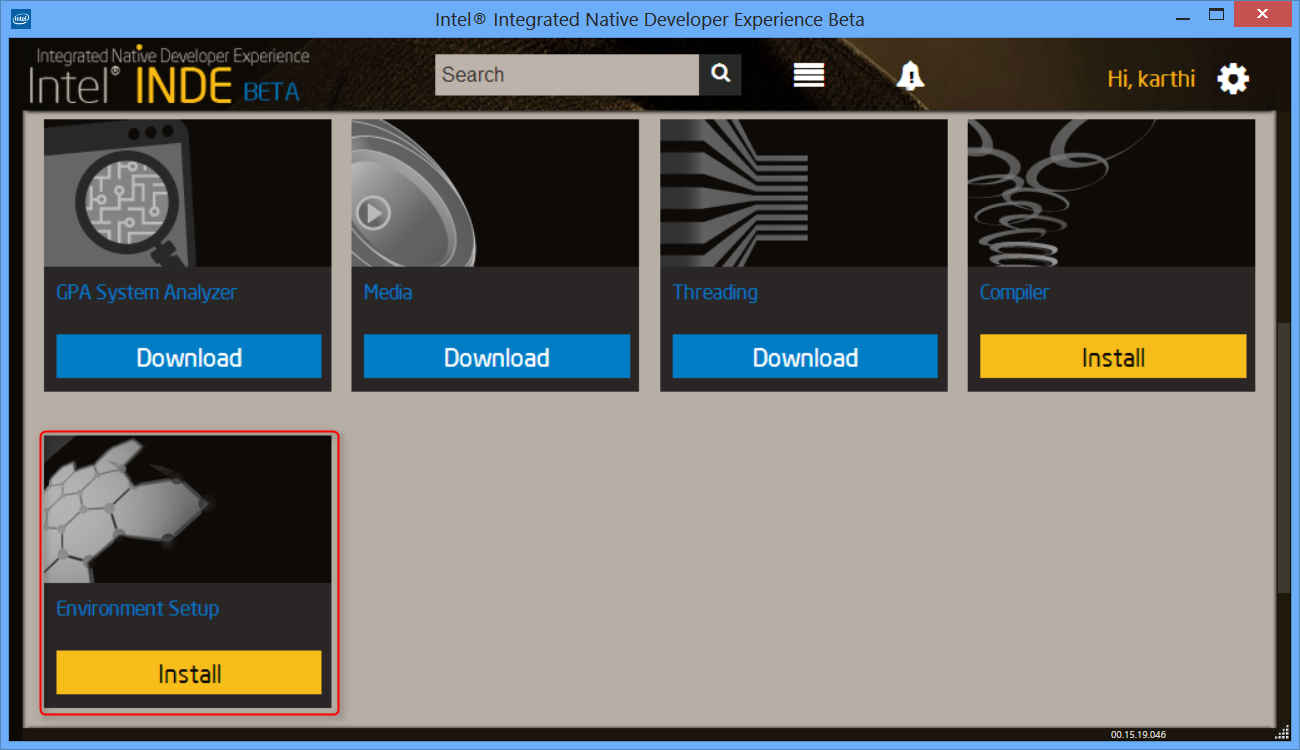

Download and install Intel INDE. After installing it, choose to download and install the Media Pack for Android. For additional help, visit the Intel INDE forum.

Go to the libs folder of the Media Pack for Android installation and copy the two jar files, android-<version>.jar and domain-<version>.jar, to your /Assets/Plugins/Android/ folder.
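
Because the build script later in this tutorial names these jars explicitly, it can help to check which version you copied. The sketch below is a hypothetical helper (MediaPackJars is not part of the Media Pack); it assumes only the android-<version>.jar and domain-<version>.jar naming shown above and lists the versions it finds in a folder.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: reports which Media Pack jars (and versions) are in a folder.
public class MediaPackJars {
    // Matches names such as "android-1.1.1493.jar" and "domain-1.1.1493.jar".
    private static final Pattern JAR_NAME = Pattern.compile("(android|domain)-(.+)\\.jar");

    /** Splits a file name into {kind, version}, or returns null for other files. */
    public static String[] parse(String fileName) {
        Matcher m = JAR_NAME.matcher(fileName);
        return m.matches() ? new String[] { m.group(1), m.group(2) } : null;
    }

    /** Maps "android" and "domain" to the version found in pluginDir. */
    public static Map<String, String> find(Path pluginDir) {
        Map<String, String> versions = new TreeMap<>();
        try (DirectoryStream<Path> jars = Files.newDirectoryStream(pluginDir, "*.jar")) {
            for (Path jar : jars) {
                String[] parsed = parse(jar.getFileName().toString());
                if (parsed != null) {
                    versions.put(parsed[0], parsed[1]);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return versions;
    }

    public static void main(String[] args) {
        Path dir = Paths.get(args.length > 0 ? args[0] : "Assets/Plugins/Android");
        Map<String, String> versions = find(dir);
        if (versions.containsKey("android") && versions.containsKey("domain")) {
            System.out.println("Found Media Pack jars: " + versions);
        } else {
            System.out.println("Missing Media Pack jar(s) in " + dir + "; found " + versions);
        }
    }
}
```

Run it with the path to your /Assets/Plugins/Android/ folder as the argument; if the versions it reports differ from the ones written in build.xml, update the classpath entries there.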

In the same folder, create a Java* file named Capturing.java with the following code:

```java
package com.intel.inde.mp.samples.unity;

import com.intel.inde.mp.IProgressListener;
import com.intel.inde.mp.android.graphics.FullFrameTexture;

import android.os.Environment;
import android.content.Context;
import android.util.Log;

import java.io.IOException;
import java.io.File;

public class Capturing
{
    private static final String TAG = "Capturing";

    // Draws a texture over the whole frame (used between beginCaptureFrame() and endCaptureFrame())
    private static FullFrameTexture texture;
    private VideoCapture videoCapture;

    // Receives encoding status callbacks from the Media Pack; all handlers are left empty here
    private IProgressListener progressListener = new IProgressListener() {
        @Override
        public void onMediaStart() {
        }

        @Override
        public void onMediaProgress(float progress) {
        }

        @Override
        public void onMediaDone() {
        }

        @Override
        public void onMediaPause() {
        }

        @Override
        public void onMediaStop() {
        }

        @Override
        public void onError(Exception exception) {
        }
    };

    public Capturing(Context context)
    {
        videoCapture = new VideoCapture(context, progressListener);
        texture = new FullFrameTexture();
    }

    // Returns the public DCIM directory, ending with a path separator
    public static String getDirectoryDCIM()
    {
        return Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM) + File.separator;
    }

    // Sets the output video format; call before startCapturing()
    public void initCapturing(int width, int height, int frameRate, int bitRate)
    {
        VideoCapture.init(width, height, frameRate, bitRate);
    }

    public void startCapturing(String videoPath)
    {
        if (videoCapture == null) {
            return;
        }
        synchronized (videoCapture) {
            try {
                videoCapture.start(videoPath);
            } catch (IOException e) {
                Log.e(TAG, "Cannot start capturing to " + videoPath, e);
            }
        }
    }

    // Called from Unity's render thread with the native ID of the frame's texture
    public void captureFrame(int textureID)
    {
        if (videoCapture == null) {
            return;
        }
        synchronized (videoCapture) {
            videoCapture.beginCaptureFrame();
            texture.draw(textureID);
            videoCapture.endCaptureFrame();
        }
    }

    public void stopCapturing()
    {
        if (videoCapture == null) {
            return;
        }
        synchronized (videoCapture) {
            if (videoCapture.isStarted()) {
                videoCapture.stop();
            }
        }
    }
}
```

Then create another Java file in the same directory. Name it VideoCapture.java and put the following contents in it:

```java
package com.intel.inde.mp.samples.unity;

import android.content.Context;
import android.util.Log;

import com.intel.inde.mp.*;
import com.intel.inde.mp.android.AndroidMediaObjectFactory;
import com.intel.inde.mp.android.AudioFormatAndroid;
import com.intel.inde.mp.android.VideoFormatAndroid;

import java.io.IOException;

public class VideoCapture
{
    private static final String TAG = "VideoCapture";

    private static final String Codec = "video/avc";
    private static int IFrameInterval = 1;

    private static final Object syncObject = new Object();
    private static volatile VideoCapture videoCapture;

    private static VideoFormat videoFormat;
    private static int videoWidth;
    private static int videoHeight;

    private GLCapture capturer;

    private boolean isConfigured;
    private boolean isStarted;
    private long framesCaptured;

    private Context context;
    private IProgressListener progressListener;

    public VideoCapture(Context context, IProgressListener progressListener)
    {
        this.context = context;
        this.progressListener = progressListener;
    }

    // Describes the H.264 ("video/avc") output stream
    public static void init(int width, int height, int frameRate, int bitRate)
    {
        videoWidth = width;
        videoHeight = height;

        videoFormat = new VideoFormatAndroid(Codec, videoWidth, videoHeight);
        videoFormat.setVideoFrameRate(frameRate);
        videoFormat.setVideoBitRateInKBytes(bitRate);
        videoFormat.setVideoIFrameInterval(IFrameInterval);
    }

    public void start(String videoPath) throws IOException
    {
        if (isStarted())
            throw new IllegalStateException(TAG + " already started!");

        capturer = new GLCapture(new AndroidMediaObjectFactory(context), progressListener);
        capturer.setTargetFile(videoPath);
        capturer.setTargetVideoFormat(videoFormat);

        // Target audio format: AAC ("audio/mp4a-latm"), 44.1 kHz, 2 channels
        AudioFormat audioFormat = new AudioFormatAndroid("audio/mp4a-latm", 44100, 2);
        capturer.setTargetAudioFormat(audioFormat);

        capturer.start();

        isStarted = true;
        isConfigured = false;
        framesCaptured = 0;
    }

    public void stop()
    {
        if (!isStarted())
            throw new IllegalStateException(TAG + " not started or already stopped!");

        try {
            capturer.stop();
        } catch (Exception ex) {
            Log.e(TAG, "Error while stopping capture", ex);
        }
        // Reset the state even if stop() failed, so that capturing can be restarted
        isStarted = false;
        capturer = null;
        isConfigured = false;
    }

    // Sets the capture surface size once, before the first frame
    private void configure()
    {
        if (isConfigured())
            return;

        try {
            capturer.setSurfaceSize(videoWidth, videoHeight);
            isConfigured = true;
        } catch (Exception ex) {
            Log.e(TAG, "Cannot set surface size", ex);
        }
    }

    public void beginCaptureFrame()
    {
        if (!isStarted())
            return;

        configure();
        if (!isConfigured())
            return;

        capturer.beginCaptureFrame();
    }

    public void endCaptureFrame()
    {
        if (!isStarted() || !isConfigured())
            return;

        capturer.endCaptureFrame();
        framesCaptured++;
    }

    public boolean isStarted()
    {
        return isStarted;
    }

    public boolean isConfigured()
    {
        return isConfigured;
    }
}
```
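
Both Java classes are thin wrappers: VideoCapture only calls into GLCapture while its isStarted and isConfigured flags allow it. To make that state handling easy to reason about, and to test it off-device, the sketch below models it in plain Java. FrameSink and RecordingSink are hypothetical stand-ins for GLCapture, not Media Pack APIs, and the model clears its flags in a finally block when stopping, so a failed stop does not leave it marked as started.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of the state handling in VideoCapture.java. The encoder is
// replaced by a hypothetical FrameSink interface so the logic runs off-device.
public class CaptureStateModel {
    /** Hypothetical stand-in for the GLCapture calls VideoCapture makes. */
    public interface FrameSink {
        void start();
        void setSurfaceSize(int width, int height);
        void beginCaptureFrame();
        void endCaptureFrame();
        void stop();
    }

    /** Test double that records the name of every call it receives. */
    public static class RecordingSink implements FrameSink {
        public final List<String> calls = new ArrayList<>();
        public void start() { calls.add("start"); }
        public void setSurfaceSize(int width, int height) { calls.add("setSurfaceSize"); }
        public void beginCaptureFrame() { calls.add("begin"); }
        public void endCaptureFrame() { calls.add("end"); }
        public void stop() { calls.add("stop"); }
    }

    private final FrameSink sink;
    private final int width;
    private final int height;
    private boolean isStarted;
    private boolean isConfigured;
    private long framesCaptured;

    public CaptureStateModel(FrameSink sink, int width, int height) {
        this.sink = sink;
        this.width = width;
        this.height = height;
    }

    public void start() {
        if (isStarted) throw new IllegalStateException("already started!");
        sink.start();
        isStarted = true;
        isConfigured = false; // the surface size is applied lazily on the first frame
        framesCaptured = 0;
    }

    public void beginCaptureFrame() {
        if (!isStarted) return;                 // frames outside a recording are ignored
        if (!isConfigured) {
            sink.setSurfaceSize(width, height); // done once per recording
            isConfigured = true;
        }
        sink.beginCaptureFrame();
    }

    public void endCaptureFrame() {
        if (!isStarted || !isConfigured) return;
        sink.endCaptureFrame();
        framesCaptured++;
    }

    public void stop() {
        if (!isStarted) throw new IllegalStateException("not started or already stopped!");
        try {
            sink.stop();
        } finally {
            // Reset even if stop() throws, so a new recording can be started.
            isStarted = false;
            isConfigured = false;
        }
    }

    public long framesCaptured() { return framesCaptured; }
}
```

In this model, frames submitted before start() are ignored, setSurfaceSize() is called once per recording, and a second start() without stop() throws IllegalStateException, mirroring the checks in VideoCapture.java.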

Important: note the package name com.intel.inde.mp.samples.unity. It has to be the same as the Bundle Identifier in Unity's Player Settings.

You also have to use this name in the C# script that calls the Java class, where it appears as com/intel/inde/mp/samples/unity/Capturing. If the names don't match, the VM cannot find your class definition and the application crashes at launch.

Next, set up a simple 3D scene for the test application. You can also integrate Intel INDE Media Pack for Android into an existing project instead; it's up to you. Either way, make sure something in your scene is moving.

Now, as for any other Android application, we need a manifest file. The manifest declares, among other things, which activity is launched and which permissions the application requests. We can start from the default Unity AndroidManifest.xml located in C:/Program Files (x86)/Unity/Editor/Data/PlaybackEngines/androidplayer. Create a file named AndroidManifest.xml under /Plugins/Android and place the following content in it:

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.intel.inde.mp.samples.unity"
    android:installLocation="preferExternal"
    android:theme="@android:style/Theme.NoTitleBar"
    android:versionCode="1"
    android:versionName="1.0">

    <uses-sdk android:minSdkVersion="18" />

    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
    <uses-permission android:name="android.permission.INTERNET"/>

    <!-- Microphone permissions-->
    <uses-permission android:name="android.permission.RECORD_AUDIO" />

    <!-- Require OpenGL ES >= 2.0. -->
    <uses-feature android:glEsVersion="0x00020000" android:required="true"/>

    <application android:icon="@drawable/app_icon"
        android:label="@string/app_name"
        android:debuggable="true">
        <activity android:name="com.unity3d.player.UnityPlayerNativeActivity"
            android:label="@string/app_name">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
            <meta-data android:name="unityplayer.UnityActivity" android:value="true" />
            <meta-data android:name="unityplayer.ForwardNativeEventsToDalvik" android:value="false" />
        </activity>
    </application>
</manifest>
```

Notice the following important line; the package name must match the package of the Java files:

package="com.intel.inde.mp.samples.unity"
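
Since this package name has to agree with the Java package and with the class path used from C#, a quick automated check can save you a crash at launch. The following is a hypothetical helper, not part of Unity or the Media Pack: it uses the JDK's DOM parser to read the package attribute and android:minSdkVersion, and prints the FindClass string you would expect, assuming the Java package equals the manifest package as this tutorial requires.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Hypothetical helper: reads the manifest values that must agree with the
// Java package name and the SDK level used elsewhere in this tutorial.
public class ManifestCheck {
    private static final String ANDROID_NS = "http://schemas.android.com/apk/res/android";

    /** Returns the package attribute of the root <manifest> element. */
    public static String packageName(String manifestXml) {
        return parse(manifestXml).getDocumentElement().getAttribute("package");
    }

    /** Returns android:minSdkVersion from <uses-sdk>, or -1 if it is not declared. */
    public static int minSdkVersion(String manifestXml) {
        NodeList usesSdk = parse(manifestXml).getElementsByTagName("uses-sdk");
        if (usesSdk.getLength() == 0) return -1;
        String value = ((Element) usesSdk.item(0)).getAttributeNS(ANDROID_NS, "minSdkVersion");
        return value.isEmpty() ? -1 : Integer.parseInt(value);
    }

    private static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // needed to resolve the android: prefix
            return factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("Cannot parse manifest", e);
        }
    }

    public static void main(String[] args) throws IOException {
        String path = args.length > 0 ? args[0] : "Assets/Plugins/Android/AndroidManifest.xml";
        String xml = new String(Files.readAllBytes(Paths.get(path)), StandardCharsets.UTF_8);
        String pkg = packageName(xml);
        System.out.println("package       = " + pkg);
        System.out.println("minSdkVersion = " + minSdkVersion(xml));
        // Assumes the Java package equals the manifest package
        System.out.println("FindClass     = " + pkg.replace('.', '/') + "/Capturing");
    }
}
```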

Now we have our AndroidManifest.xml and our Java files under /Plugins/Android. Instead of typing a long javac command line with classpaths and so on, we will simplify the whole process with an Apache Ant* build script. Ant lets you quickly script tasks such as creating folders, running executables or, as in our case, compiling classes. You can also import an Ant script into Eclipse*. Note: if you use other classes or libraries, you will need to adapt the following script (see the Apache Ant manual). The script below is intended only for this tutorial.

Create a file named build.xml under /Plugins/Android/ with the following content:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project name="UnityCapturing">
    <!-- Change this in order to match your configuration -->
    <property name="sdk.dir" value="C:\Android\sdk"/>
    <property name="target" value="android-18"/>
    <property name="unity.androidplayer.jarfile" value="C:\Program Files (x86)\Unity\Editor\Data\PlaybackEngines\androiddevelopmentplayer\bin\classes.jar"/>

    <!-- Source directory -->
    <property name="source.dir" value="\ProjectPath\Assets\Plugins\Android" />
    <!-- Output directory for .class files-->
    <property name="output.dir" value="\ProjectPath\Assets\Plugins\Android\classes"/>
    <!-- Name of the jar to be created. Please note that the name should match the name of the class and the name placed in the AndroidManifest.xml-->
    <property name="output.jarfile" value="Capturing.jar"/>

    <!-- Creates the output directories if they don't exist yet. -->
    <target name="-dirs" depends="message">
        <echo>Creating output directory: ${output.dir} </echo>
        <mkdir dir="${output.dir}" />
    </target>

    <!-- Compiles this project's .java files into .class files. -->
    <target name="compile" depends="-dirs" description="Compiles project's .java files into .class files">
        <javac encoding="ascii" target="1.6" debug="true" destdir="${output.dir}" verbose="${verbose}" includeantruntime="false">
            <src path="${source.dir}" />
            <classpath>
                <pathelement location="${sdk.dir}\platforms\${target}\android.jar"/>
                <pathelement location="${source.dir}\domain-1.1.1493.jar"/>
                <pathelement location="${source.dir}\android-1.1.1493.jar"/>
                <pathelement location="${unity.androidplayer.jarfile}"/>
            </classpath>
        </javac>
    </target>

    <target name="build-jar" depends="compile">
        <zip zipfile="${output.jarfile}" basedir="${output.dir}" />
    </target>

    <target name="clean-post-jar">
        <echo>Removing post-build-jar-clean</echo>
        <delete dir="${output.dir}"/>
    </target>

    <target name="clean" description="Removes output files created by other targets.">
        <delete dir="${output.dir}" verbose="${verbose}" />
    </target>

    <target name="message">
        <echo>Android Ant Build for Unity Android Plugin</echo>
        <echo> message: Displays this message.</echo>
        <echo> clean: Removes output files created by other targets.</echo>
        <echo> compile: Compiles project's .java files into .class files.</echo>
        <echo> build-jar: Compiles project's .class files into .jar file.</echo>
    </target>
</project>
```

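Adjust the properties to match your setup: sdk.dir, target and unity.androidplayer.jarfile must point to your Android SDK and Unity installation, and source.dir and output.dir to the /Assets/Plugins/Android folder of your project (and its classes subfolder). If your Media Pack jars have a version other than 1.1.1493, also update the two jar names in the classpath. You can change the name of the output jar (output.jarfile) as well.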

If you don't have Ant, download it from the Apache Ant home page and install it. Add <ant_home>/bin to your PATH, and before calling Ant, set the JAVA_HOME environment variable to the folder where the Java Development Kit (JDK) is installed.

Run the Windows* Command Processor (cmd.exe), change the current directory to the /Plugins/Android folder and type the following command to launch the build script:

ant build-jar clean-post-jar

After a few seconds, Ant should report BUILD SUCCESSFUL.

You've compiled your jar! Notice the new file Capturing.jar in the directory.
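
To confirm that the jar really contains the plugin classes, you can list its entries, for example with jar tf Capturing.jar. The sketch below does the same check programmatically; JarCheck is a hypothetical name, and it assumes the layout produced by the zip task in build.xml, where classes are stored under their package path (com/intel/inde/mp/samples/unity/...).

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;

// Hypothetical post-build check: are the plugin classes inside the jar Ant produced?
public class JarCheck {
    /** Returns the expected .class entries that are missing from the archive read from in. */
    public static List<String> missingClasses(InputStream in, String packageName, String... classNames) {
        Set<String> entries = new HashSet<>();
        try (ZipInputStream zip = new ZipInputStream(in)) {
            ZipEntry entry;
            while ((entry = zip.getNextEntry()) != null) {
                entries.add(entry.getName());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Class files are stored under their package path, e.g. com/example/Foo.class
        String prefix = packageName.replace('.', '/') + "/";
        List<String> missing = new ArrayList<>();
        for (String name : classNames) {
            String expected = prefix + name + ".class";
            if (!entries.contains(expected)) {
                missing.add(expected);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        String jar = args.length > 0 ? args[0] : "Capturing.jar";
        try (InputStream in = Files.newInputStream(Paths.get(jar))) {
            List<String> missing = missingClasses(in, "com.intel.inde.mp.samples.unity", "Capturing", "VideoCapture");
            System.out.println(missing.isEmpty() ? "OK: all plugin classes found" : "Missing: " + missing);
        }
    }
}
```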

Switch to Unity. Create a Capture.cs script with the following code:

```csharp
using UnityEngine;
using System.Collections;
using System.IO;
using System;

[RequireComponent(typeof(Camera))]
public class Capture : MonoBehaviour
{
    public int videoWidth = 720;
    public int videoHeight = 1094;
    public int videoFrameRate = 30;
    public int videoBitRate = 3000;

    private string videoDir;
    public string fileName = "game_capturing-";

    private float nextCapture = 0.0f;
    public bool inProgress { get; private set; }

    private AndroidJavaObject playerActivityContext = null;

    private static IntPtr constructorMethodID = IntPtr.Zero;
    private static IntPtr initCapturingMethodID = IntPtr.Zero;
    private static IntPtr startCapturingMethodID = IntPtr.Zero;
    private static IntPtr captureFrameMethodID = IntPtr.Zero;
    private static IntPtr stopCapturingMethodID = IntPtr.Zero;

    private static IntPtr getDirectoryDCIMMethodID = IntPtr.Zero;

    private IntPtr capturingObject = IntPtr.Zero;

    void Start()
    {
        if (!Application.isEditor) {
            // First, obtain the current activity context
            using (AndroidJavaClass jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer")) {
                playerActivityContext = jc.GetStatic<AndroidJavaObject>("currentActivity");
            }

            // Search for our class
            IntPtr classID = AndroidJNI.FindClass("com/intel/inde/mp/samples/unity/Capturing");

            // Search for its constructor
            constructorMethodID = AndroidJNI.GetMethodID(classID, "<init>", "(Landroid/content/Context;)V");

            // Look up our instance methods
            initCapturingMethodID = AndroidJNI.GetMethodID(classID, "initCapturing", "(IIII)V");
            startCapturingMethodID = AndroidJNI.GetMethodID(classID, "startCapturing", "(Ljava/lang/String;)V");
            captureFrameMethodID = AndroidJNI.GetMethodID(classID, "captureFrame", "(I)V");
            stopCapturingMethodID = AndroidJNI.GetMethodID(classID, "stopCapturing", "()V");

            // Look up and call our static method
            getDirectoryDCIMMethodID = AndroidJNI.GetStaticMethodID(classID, "getDirectoryDCIM", "()Ljava/lang/String;");
            jvalue[] args = new jvalue[0];
            videoDir = AndroidJNI.CallStaticStringMethod(classID, getDirectoryDCIMMethodID, args);

            // Create Capturing object
            jvalue[] constructorParameters = AndroidJNIHelper.CreateJNIArgArray(new object [] { playerActivityContext });
            IntPtr local_capturingObject = AndroidJNI.NewObject(classID, constructorMethodID, constructorParameters);
            if (local_capturingObject == IntPtr.Zero) {
                Debug.LogError("Can't create Capturing object");
                AndroidJNI.DeleteLocalRef(classID);
                return;
            }

            // Keep a global reference to it
            capturingObject = AndroidJNI.NewGlobalRef(local_capturingObject);
            AndroidJNI.DeleteLocalRef(local_capturingObject);

            AndroidJNI.DeleteLocalRef(classID);
        }
        inProgress = false;
        nextCapture = Time.time;
    }

    void OnRenderImage(RenderTexture src, RenderTexture dest)
    {
        if (inProgress && Time.time > nextCapture) {
            CaptureFrame(src.GetNativeTextureID());
            nextCapture += 1.0f / videoFrameRate;
        }
        Graphics.Blit(src, dest);
    }

    public void StartCapturing()
    {
        if (capturingObject == IntPtr.Zero)
            return;

        jvalue[] videoParameters = new jvalue[4];
        videoParameters[0].i = videoWidth;
        videoParameters[1].i = videoHeight;
        videoParameters[2].i = videoFrameRate;
        videoParameters[3].i = videoBitRate;
        AndroidJNI.CallVoidMethod(capturingObject, initCapturingMethodID, videoParameters);

        // 24-hour clock (HH) so that morning and afternoon recordings get different names
        DateTime date = DateTime.Now;
        string fullFileName = fileName + date.ToString("ddMMyy-HHmmss.fff") + ".mp4";
        jvalue[] args = new jvalue[1];
        args[0].l = AndroidJNI.NewStringUTF(videoDir + fullFileName);
        AndroidJNI.CallVoidMethod(capturingObject, startCapturingMethodID, args);
        AndroidJNI.DeleteLocalRef(args[0].l);

        // Restart the frame-rate throttle from the current time
        nextCapture = Time.time;
        inProgress = true;
    }

    private void CaptureFrame(int textureID)
    {
        if (capturingObject == IntPtr.Zero)
            return;

        jvalue[] args = new jvalue[1];
        args[0].i = textureID;
        AndroidJNI.CallVoidMethod(capturingObject, captureFrameMethodID, args);
    }

    public void StopCapturing()
    {
        inProgress = false;

        if (capturingObject == IntPtr.Zero)
            return;

        jvalue[] args = new jvalue[0];
        AndroidJNI.CallVoidMethod(capturingObject, stopCapturingMethodID, args);
    }
}
```
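
The third argument of each AndroidJNI.GetMethodID call above is a JNI type descriptor: parameter types in parentheses, followed by the return type, with I for int, V for void and L<class>; for object types. The constructor is looked up under the special name <init>. The hypothetical JniDescriptors helper below derives these strings from Java type names, which may be handy if you add methods to Capturing.java; running javap -s on the compiled class prints the same descriptors.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: builds the JNI strings passed to FindClass and GetMethodID.
public class JniDescriptors {
    private static final Map<String, String> PRIMITIVES = new HashMap<>();
    static {
        PRIMITIVES.put("void", "V");
        PRIMITIVES.put("boolean", "Z");
        PRIMITIVES.put("byte", "B");
        PRIMITIVES.put("char", "C");
        PRIMITIVES.put("short", "S");
        PRIMITIVES.put("int", "I");
        PRIMITIVES.put("long", "J");
        PRIMITIVES.put("float", "F");
        PRIMITIVES.put("double", "D");
    }

    /** "com.example.Foo" becomes "com/example/Foo", the form FindClass expects. */
    public static String className(String binaryName) {
        return binaryName.replace('.', '/');
    }

    /** "int" becomes "I", "java.lang.String" becomes "Ljava/lang/String;", "int[]" becomes "[I". */
    public static String type(String javaType) {
        if (javaType.endsWith("[]")) {
            return "[" + type(javaType.substring(0, javaType.length() - 2));
        }
        String primitive = PRIMITIVES.get(javaType);
        return primitive != null ? primitive : "L" + className(javaType) + ";";
    }

    /** Builds "(parameters)return", e.g. ("void", "int", "int") gives "(II)V". */
    public static String method(String returnType, String... paramTypes) {
        StringBuilder sb = new StringBuilder("(");
        for (String p : paramTypes) {
            sb.append(type(p));
        }
        return sb.append(')').append(type(returnType)).toString();
    }

    public static void main(String[] args) {
        System.out.println("class            " + className("com.intel.inde.mp.samples.unity.Capturing"));
        System.out.println("<init>           " + method("void", "android.content.Context"));
        System.out.println("initCapturing    " + method("void", "int", "int", "int", "int"));
        System.out.println("startCapturing   " + method("void", "java.lang.String"));
        System.out.println("getDirectoryDCIM " + method("java.lang.String"));
    }
}
```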

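Attach this script to your Main Camera. Before you start capturing, configure the video format through the public fields videoWidth, videoHeight, videoFrameRate and videoBitRate (the bit rate is passed to setVideoBitRateInKBytes() in VideoCapture.java). You can tweak them directly in the Unity Editor's Inspector.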

We won't focus on the Start(), StartCapturing() and StopCapturing() methods; they are straightforward if you are familiar with the Java Native Interface (JNI). Let's go deeper and look at the OnRenderImage() method. Unity calls OnRenderImage() after all rendering is complete: the incoming image is the source render texture, and the result should end up in the destination render texture. This lets you modify the final image by processing it with shader-based filters. We only want to copy the source texture into the destination render texture by calling Graphics.Blit(), without any special effects. Before that, we pass the native ("hardware") texture handle to the captureFrame() method of our Capturing.java class, throttled by nextCapture to roughly videoFrameRate frames per second.
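
The throttling in OnRenderImage() can be modelled outside Unity. The sketch below is a plain-Java simulation (FrameThrottle is a hypothetical name, and time is a double in seconds rather than Unity's Time.time): a frame is captured only when the current time has passed nextCapture, which then advances by 1 / videoFrameRate. It also shows why nextCapture should be reset when recording begins: if it still holds an old time, every rendered frame is captured until the schedule catches up.

```java
// Models the frame throttle in Capture.OnRenderImage(): capture only when the
// current time has passed nextCapture, then schedule the next capture one
// frame interval (1 / frameRate seconds) later.
public class FrameThrottle {
    private final double interval;
    private double nextCapture;

    public FrameThrottle(int frameRate, double now) {
        this.interval = 1.0 / frameRate;
        this.nextCapture = now;
    }

    /** Restarts the schedule, e.g. when recording begins. */
    public void reset(double now) {
        nextCapture = now;
    }

    /** Called once per rendered frame; returns true if this frame should be captured. */
    public boolean shouldCapture(double now) {
        if (now > nextCapture) {
            nextCapture += interval;
            return true;
        }
        return false;
    }

    /** Counts captured frames over one second rendered at renderFps, starting at start. */
    public int countCaptures(double start, int renderFps) {
        int captured = 0;
        for (int k = 0; k < renderFps; k++) {
            // Offset by half a frame so render times never coincide exactly with the schedule
            if (shouldCapture(start + (k + 0.5) / renderFps)) {
                captured++;
            }
        }
        return captured;
    }

    public static void main(String[] args) {
        FrameThrottle stale = new FrameThrottle(30, 0.0); // schedule created at t = 0 s
        System.out.println("no reset:   " + stale.countCaptures(10.0, 60));
        FrameThrottle fresh = new FrameThrottle(30, 0.0);
        fresh.reset(10.0);                                // recording starts at t = 10 s
        System.out.println("with reset: " + fresh.countCaptures(10.0, 60));
    }
}
```

Rendering at 60 fps and capturing at 30 fps, main reports 60 captured frames for the first second without a reset and 30 with one. When the game renders slower than videoFrameRate, every frame is captured, so the throttle can only lower the capture rate, never raise it.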

The StartCapturing() and StopCapturing() methods are public, so you can call them from another script. Let's create one more C# script, CaptureGUI.cs, that toggles recording with an on-screen button:

```csharp
using UnityEngine;
using System.Collections;

public class CaptureGUI : MonoBehaviour
{
    public Capture capture;
    private GUIStyle style = new GUIStyle();

    void Start()
    {
        style.fontSize = 48;
        style.alignment = TextAnchor.MiddleCenter;
    }

    void OnGUI()
    {
        style.normal.textColor = capture.inProgress ? Color.red : Color.green;
        if (GUI.Button(new Rect(10, 200, 350, 100), capture.inProgress ? "[Stop Recording]" : "[Start Recording]", style)) {
            if (capture.inProgress) {
                capture.StopCapturing();
            } else {
                capture.StartCapturing();
            }
        }
    }
}
```

Attach this script to any object in your scene, and don't forget to assign your Capture component to its public capture field in the Inspector.

That's all you need to know to add video capturing to Unity applications. Now Build & Run your test application for the Android platform. Recorded videos are saved to the DCIM folder of the device's external storage (for example /mnt/sdcard/DCIM/). Another tutorial may help you explore the logic of the Capturing.java and VideoCapture.java code in more detail.
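
Each recording gets a time-stamped name built in StartCapturing(). The sketch below reproduces that naming in Java for illustration (CaptureFileName is a hypothetical helper); it assumes the directory ends with a separator, as getDirectoryDCIM() returns it. In .NET format strings hh is the 12-hour clock and HH the 24-hour one; the sketch uses the 24-hour field (HH in java.time as well), so morning and afternoon recordings cannot get the same name.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper mirroring the file naming in Capture.StartCapturing().
public class CaptureFileName {
    // java.time equivalent of the .NET pattern "ddMMyy-HHmmss.fff" (24-hour clock, milliseconds)
    private static final DateTimeFormatter STAMP = DateTimeFormatter.ofPattern("ddMMyy-HHmmss.SSS");

    /** directory is expected to end with a separator, as getDirectoryDCIM() returns it. */
    public static String build(String directory, String prefix, LocalDateTime time) {
        return directory + prefix + time.format(STAMP) + ".mp4";
    }

    public static void main(String[] args) {
        System.out.println(build("/mnt/sdcard/DCIM/", "game_capturing-", LocalDateTime.now()));
    }
}
```

For a recording started at 13:30:05.123 on 5 March 2014 with the default fileName, the result is /mnt/sdcard/DCIM/game_capturing-050314-133005.123.mp4.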

Known issues:

- This approach cannot capture the Unity GUI layer, because the GUI is drawn after OnRenderImage() runs. A workaround based on the OnPreRender() and OnPostRender() methods exists, but it doesn't work with drop shadows, deferred shading or full-screen post-effects.

For more such Android resources and tools from Intel, please visit the Intel® Developer Zone