Intel India Leaders on the AI PC Revolution: “The Tipping Point Is Now”

At the launch of the Intel Core Ultra (Series 2) mobile processors, also known as Lunar Lake, we spoke with Gokul V Subramaniam, President of Intel India, and Santosh Viswanathan, Managing Director of Intel India Region. They offered compelling insights into how artificial intelligence is reshaping the personal computing landscape. From the surge of AI-enabled PCs and evolving enterprise refresh cycles to Intel’s focus on delivering effective AI performance, the conversation highlighted the company’s vision for the future of computing and its impact on markets like India. Excerpts from the interaction below:

Digit – We’ve heard from the competition that they’re targeting an ASP (Average Selling Price) of USD 799 and above with the release of the new chips. Most of their designs will start from that price point and go higher and there are very few SKUs. However, Intel has a much wider portfolio, so what kind of opportunities do you perceive, especially in markets like India where the ASP is usually around INR 60,000-to-70,000?

Santosh – We’ve already shipped 20 million odd PCs of the 1st Generation. We think that as we get into the next year 2025-26 and beyond, more than half of the PC stack is going to be an AI PC. So, eventually I think the volume economics is something that will start to waterfall at all different price points. You’ve already started seeing that with the 1st Gen i.e. with the Intel Core Ultra (Series One) processors. Now with the Intel Core Ultra (Series Two) processors, you’ll see a breadth of offers that are going to come into the market as well. So, I think it’s a question of, you know, the scale that you’ll start to see, the innovation that our systems partners come up with, and then what the software builds on top of it. That’s going to go make this available at different price points.

Digit – And in terms of capturing markets, there are usually these ‘refresh’ years or periods wherein companies want to get their products out in time to target the major IT companies who buy 10,000-20,000 units in each country. So when is the next inflection point or major refresh cycle from your perspective and what kind of processors or which architectures are you targeting for that?

Santosh – I think the major inflection, or the major refresh point is now, right? Why do I say that? Because I just think that the AI PC is just another tipping point for us in many ways, because it redefines the way you work. It redefines the way data can be used, right? As we said, everything need not be processed in big, large data centers, you can really use your local data and harness that. So, it redefines many of the use cases that enterprises have today with their data. I think that the most important part for the industry as well as for enterprises is that it is a moment that doesn’t come very often. It’s a moment that is here now where the PC is getting reimagined. It’s a whole new way of looking at the PC and how it becomes an AI engine for you and the use cases are just starting off. So, it’s a great time for us to jump in and refresh and give it in the hands of the users, give it in the hands of your employees. Because then the innovation cycle begins. That’s what happened when you just imagine the old days, when you moved from fixed computing to Wi-Fi. And then you started to see the whole innovation cycle that you could work anywhere, and you could start to build stuff anywhere. And it freed you and you saw creativity being on wheels. This is a moment where intelligence is going to be on wheels in many ways. And it spurs up models and usages that are going to be very different from what we’ve done in the past.

Gokul – The other big thing is the enterprise sector always looks for security and manageability, and the kind of things that come with the OS, and the kind of applications that they build on top of the AI infrastructure. So, making sure that you have the right combination of platform, operating system and the software assets that they’re building on AI, is also another big decision point for the enterprise. And clearly, we’re strongly positioned there with our enterprise SKUs. And Microsoft Copilot might drive some. And a lot of the GSIs who are building their own assets, how they manage large deployments, and how they service that with AI is going to be a big one riding on top of it, as well as what the silicon provides. Like Santosh said, I think all of them are coming together now.

Santosh – And traditional cycles are getting redefined. We used to always have a cadence like every four years there might be a refresh or every six years there might be a refresh. The refresh cycle is tied to your innovation cycle, right? Here’s something new that can dramatically change and solve my problem, increase my productivity, make my output better for my customers. Then you refresh now, right? And if we go back and do something very different next year and we bring in innovation, then the refresh cycle shortens even further. I think both are closely tied and there’s no fixed cycle of innovation. If it’s so compelling, you will do it. Otherwise, you won’t be in business, right? So that’s the whole cycle that we have to keep up with.

Digit – True, but companies tend to have budget allocations for two-to-three years, after which they tend to refresh. And that’s usually what major OEMs tend to target.

Santosh – Yeah, absolutely. And I feel that it’s changing for our industry. Many years ago I used to work for an OEM and you’re right. It used to be that a major software update would drop and then something doesn’t work any longer and then you do a refresh. The tendency is to go for a delayed refresh cycle, right? Like I want to stretch the asset as far as I can so that I can go and refresh only when needed. So that my capital allocation is not stuck on that.

I think that model is slowly shifting because of two things. First, it is tied to the different models in which financing is done today. Even from your books, you’re starting to see companies innovate on device-as-a-service or any of the other financial models in which they are giving it as an asset. Second, these companies realise that if there is a big innovation and they’re not part of it, the cost of delay is a cost of business. If my competitors are going in, it’s no longer a spend. It’s more a business essential: if I’m not there quick enough, then I’m going to miss out on the opportunity to go make revenue on it. So it’s eventually a cost of business. I think you’re going to start to see some of this change even more rapidly in the years ahead.

Digit – We are in this AI TOPS race where everyone’s gunning for that magical number of getting Microsoft Copilot+ certification, and then racing further and further. How long would it be until it becomes enough? Once you hit that baseline threshold where all your day-to-day productivity applications are just handled because you have, let’s say 80 TOPS? Then there’s no need to worry about marketing it. After that point it becomes sort of like a commoditised aspect.

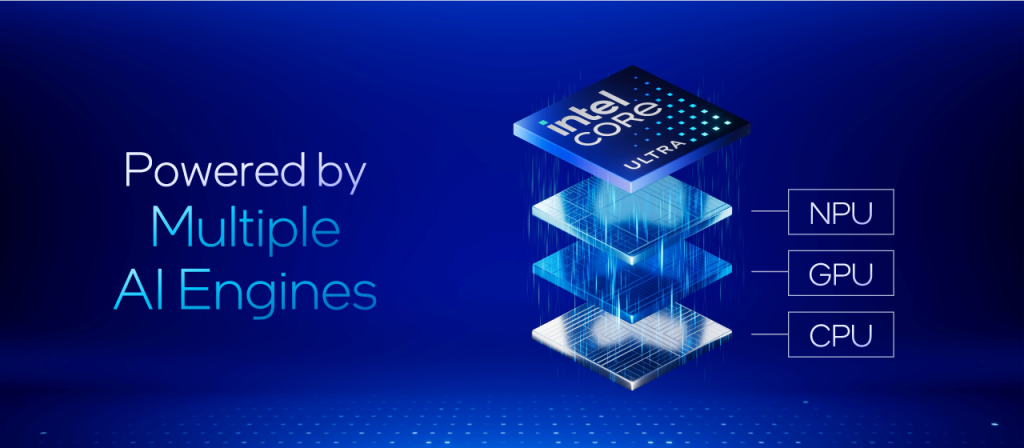

Gokul – TOPS is right now looked at as a number but the effectiveness of the TOPS is more important. How much performance can you eke out of every one of those? Let’s say, on paper, you have the TOPS and if you can’t even load the application, then that TOPS is just sitting there unused when the app can’t even run, right? So, you have to look at that. We’ve made sure that our developers who are building are actually tuning and making it more effective rather than fumbling with getting their basic app working. So that’s kind of fundamental now. “How much is enough?” is a function of “as it gets deployed and more and more people use it, you’re going to figure that out.” It’s similar to the transition that phones had from dual core to octa core and all of that stuff. And then it started to kind of plateau after a point. We’re probably going to go through that.

There is a rush to think that there’s a lot needed. And there is a diversity of workload that’s needed for AI. So, you want to build the diversity bit into your architecture first. You know the burst, the media, the sustain. How much of each one of them you need right now is what matters. Everybody is rushing towards the max and I think it’s important to go for max, but more importantly, once the max is achieved, can you use it versus trying to figure out how to even get it up and running? I think that’s kind of where we feel it’s going.

Digit – So would that mean that later down the line, Intel would also look at a P-Core + E-Core kind of hybrid approach wherein you have small cores and big cores for different types of AI models that are being utilized?

Gokul – It can run on any one of our IP blocks depending on where it’s most effective. There’s a performance-per-Watt and energy efficiency tradeoff for all of them.

Santosh – I think the most important part to recognize is that you forget about TOPS and the speeds and the clocks. What do the users want? Is the performance enough? It’s never enough. If someone had predicted that you’d only need this many transistors on a chip today, then everyone would stop developing stuff. Our CEO has spoken about a trillion transistors on a single chip by 2030. So I think the need for performance, the need for us to be better across all the different vectors that Gokul mentioned, that’s a constant rise because the compute is never enough for that demand. So I think the cycle of innovation will continue. As for the race for one particular parameter that you’re measuring as success today, will that parameter remain the same? I don’t think it’s going to be one static parameter. Just like gigahertz or number of cores and all of those were parameters that were used as proxies, the parameters keep changing because our workloads change and our usages change. And therefore, parameters will start to change, but the sheer need for building world class performance on the chip and making it smaller, faster, better, that is definitely going to continue.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 10 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.