Human eye-inspired technology called “AMI-EV” could revolutionise smartphone cameras!

If you have ever taken a deep dive into the camera technology around us, you would know that the basic operating principle of today's cameras is based on the human eye, and modern imaging technology closely mirrors how the eye works. However, it turns out that the human eye has additional attributes that could significantly improve the way we capture images. A team led by researchers at the University of Maryland’s College of Computer, Mathematical, and Natural Sciences has incorporated ‘microsaccades’ into a new camera mechanism, dubbed the “Artificial Microsaccade-Enhanced Event Camera (AMI-EV)”, which could significantly improve our imaging technology. Its applications go beyond better image capture in smartphones and robots. The findings were published in the article “Microsaccade-inspired event camera for robotics” in Science Robotics, Vol. 9, Issue 90.

Read along to find out how the Artificial Microsaccade-Enhanced Event Camera (AMI-EV) could pave the way for clearer digital imaging.

Micromovements for the win!

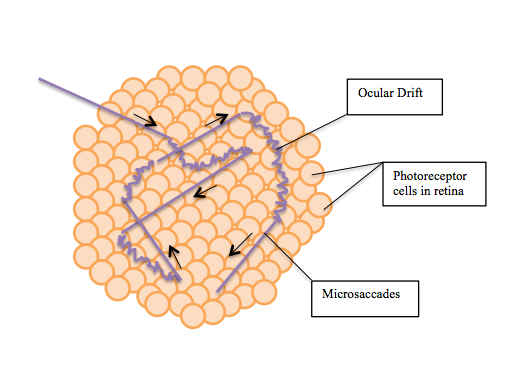

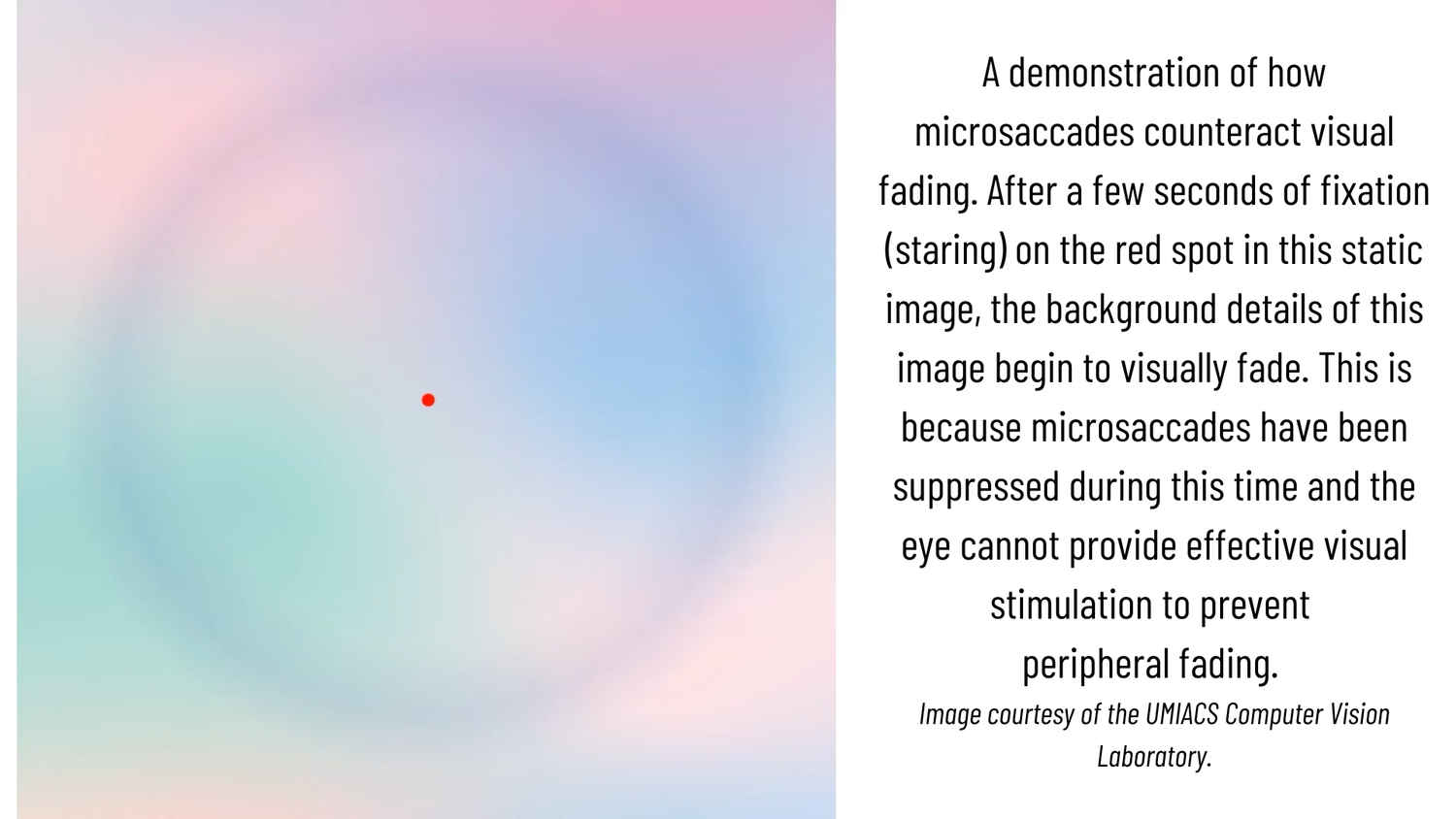

Let’s start with microsaccades, the attribute of the human eye on which this innovation is built. Put simply, microsaccades are small, involuntary movements the eye makes while trying to fixate on a stationary subject.

A more formal definition appears in the 2009 research paper “Microsaccades: A Neurophysiological Analysis”, which describes these movements as “…the largest and fastest of the fixational eye movements, which are involuntary eye movements produced during attempted visual fixation.”

When the UMD researchers began their work, their primary aim was to build this mechanism into a camera that could capture images without the motion blur that today's cameras tend to suffer from. “Event cameras are a relatively new technology better at tracking moving objects than traditional cameras, but today’s event cameras struggle to capture sharp, blur-free images when there’s a lot of motion involved,” said the paper’s lead author Botao He, a computer science Ph.D. student at UMD, describing the limitations of current cameras.

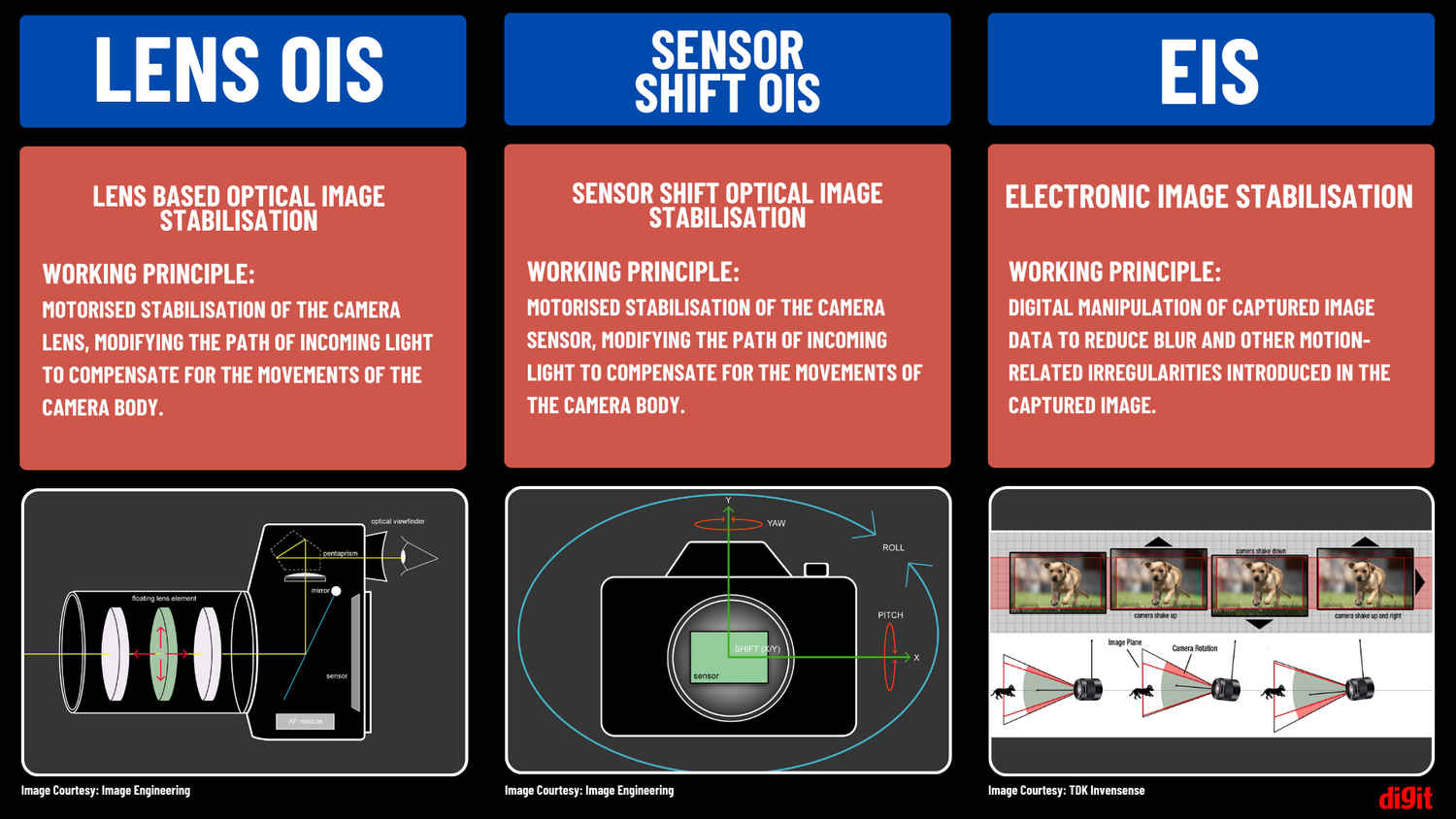

Over the years, researchers across the world have been trying to perfect digital imaging technology by borrowing more and more from the human eye. Technologies such as sensor-shift stabilisation, optical image stabilisation (OIS) and electronic image stabilisation (EIS) have made their way into cameras across the board, alongside accessories like gimbals, all aimed at tackling blurry photos and videos. Yet however much brands refine these systems, limitations remain that keep them from producing images as flawless as what the human eye perceives. AMI-EV may be the answer to this.

How does AMI-EV work?

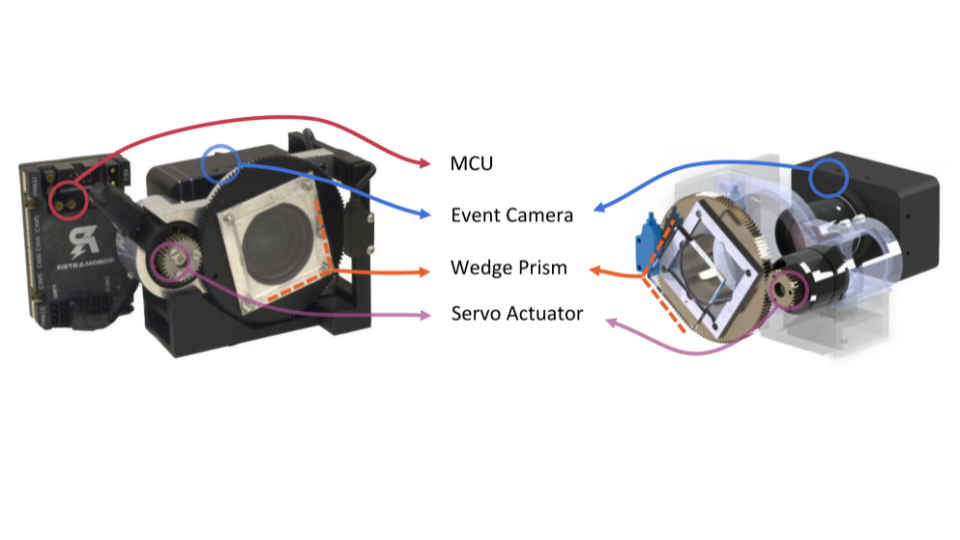

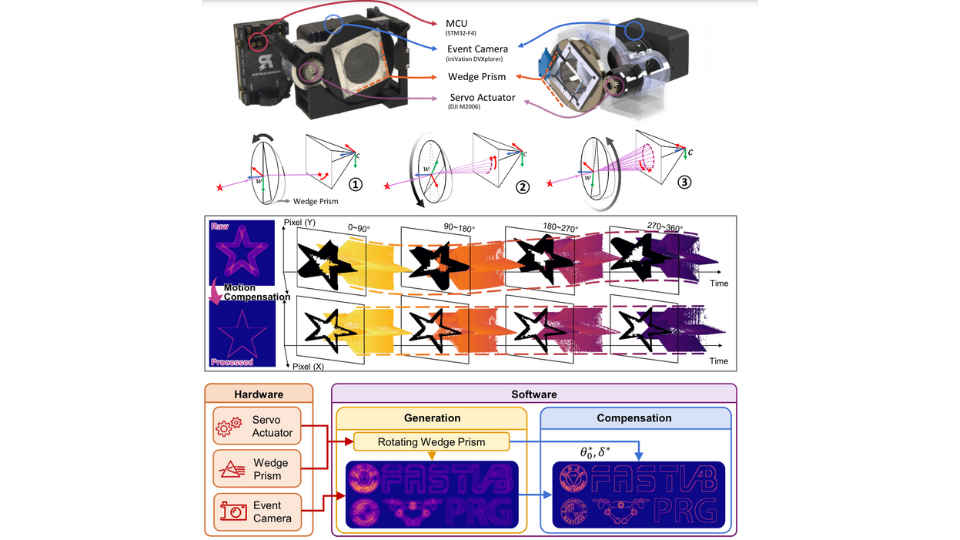

The underlying principle was simple: add a mechanism for stabilisation or extra information collection whose output software could then process into clear, crisp images. To accomplish this, the researchers placed a rotating wedge prism right in front of the aperture of an event camera. As the prism spins, it keeps redirecting the path of the incoming light, which in turn triggers events, much like the eye's microsaccades. For the uninitiated, event cameras are specialised cameras whose pixels operate independently: instead of capturing full frames at fixed intervals, each pixel responds to incoming light and reports changes in brightness as soon as they occur. These cameras are also called neuromorphic cameras, silicon retinas, or dynamic vision sensors.
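To make the idea of pixels that report brightness changes on the fly more concrete, here is a minimal Python sketch of an idealised event pixel. It assumes the textbook dynamic-vision-sensor model, in which a pixel fires an event whenever the logarithm of its brightness has changed by more than a fixed contrast threshold since its last event. The `Event` record, the `simulate_pixel` function and the 0.2 threshold are hypothetical illustrations, not details of the AMI-EV hardware.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = brighter, -1 = darker


def simulate_pixel(x, y, samples, threshold=0.2):
    """Turn (time, intensity) samples for one pixel into events.

    Idealised model (an assumption, not AMI-EV's actual sensor): the pixel
    remembers the log intensity at its last event and fires again whenever
    the current log intensity differs from that reference by >= threshold.
    """
    events = []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)
    for t, intensity in samples[1:]:
        log_i = math.log(intensity)
        # A large brightness jump can cross the threshold several times.
        while abs(log_i - reference) >= threshold:
            polarity = 1 if log_i > reference else -1
            reference += polarity * threshold
            events.append(Event(t, x, y, polarity))
    return events


# A steady pixel stays silent; a 50% brightness jump crosses the 0.2
# log-threshold twice (ln 1.5 is about 0.405), giving two "brighter" events.
print(simulate_pixel(0, 0, [(0.0, 100.0), (0.002, 100.0)]))  # []
print(len(simulate_pixel(0, 0, [(0.0, 100.0), (0.001, 150.0)])))  # 2
```

In this simplified model, a pixel whose brightness never changes produces no events at all, which is why continuously shifting the incoming light, as the rotating wedge prism does, keeps edges in the scene generating events.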

With the mechanics figured out and the hardware in place, the next challenge was developing software that could process this data, which the team also accomplished. According to a UMD article covering the work, the primary aim of the software was “to compensate for the prism’s movement within the AMI-EV to consolidate stable images from the shifting lights”.
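As a rough illustration of what compensating for the prism’s movement can mean, the Python sketch below assumes that a wedge prism rotating at a constant angular speed shifts the image along a circle of known radius, and that the prism angle at every instant is known. Under those assumptions, subtracting the predicted shift from each event’s coordinates brings events caused by the same scene point back to a single location. The functions `prism_offset`, `compensate` and `accumulate` and their parameters are hypothetical; this is not the algorithm published by the UMD team.

```python
import math


def prism_offset(t, radius_px, omega, phase=0.0):
    """Hypothetical model: a wedge prism spinning at `omega` rad/s deflects
    the image by a fixed amount, so the shift traces a circle of
    `radius_px` pixels around the true position."""
    angle = omega * t + phase
    return radius_px * math.cos(angle), radius_px * math.sin(angle)


def compensate(events, radius_px, omega, phase=0.0):
    """Subtract the predicted prism shift from (t, x, y, polarity) events."""
    corrected = []
    for t, x, y, polarity in events:
        dx, dy = prism_offset(t, radius_px, omega, phase)
        corrected.append((t, x - dx, y - dy, polarity))
    return corrected


def accumulate(events, width, height):
    """Sum event polarities per pixel, rounding coordinates to the nearest
    pixel and ignoring events that fall outside the sensor."""
    image = [[0] * width for _ in range(height)]
    for _, x, y, polarity in events:
        col, row = round(x), round(y)
        if 0 <= col < width and 0 <= row < height:
            image[row][col] += polarity
    return image


# Simulate one scene point at pixel (5, 5) seen through a prism spinning
# at 10 revolutions per second, sampled at 8 evenly spaced instants.
radius, omega = 2.0, 2 * math.pi * 10
raw = []
for k in range(8):
    t = k * 0.0125
    dx, dy = prism_offset(t, radius, omega)
    raw.append((t, 5 + dx, 5 + dy, 1))

stable = accumulate(compensate(raw, radius, omega), 10, 10)
print(stable[5][5])  # 8: every event lands back on the true pixel
```

Without compensation, the same eight events are smeared around a circle of neighbouring pixels; with the predicted offset removed, they stack on one pixel, which is the intuition behind consolidating a stable image from shifting light.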

Using this setup, the researchers accurately captured and displayed movement in a variety of contexts, including detecting a human pulse and identifying the shapes of rapidly moving subjects. The most astonishing result was the frame rate. As stated in the research article, AMI-EV was able to capture tens of thousands of frames per second, significantly more than commercially available cameras today, which capture anywhere between 30 and 1,000 frames per second.
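Event cameras output an asynchronous stream of events rather than frames, so a frame rate depends on how those events are grouped in time. One common approach, assumed here purely for illustration since the article does not describe how AMI-EV's frames were formed, is to bin events into fixed windows of 1/fps seconds. The sketch also converts the frame rates mentioned above into time per frame: 30 fps leaves about 33 ms per frame, 1,000 fps leaves 1 ms, and 10,000 fps leaves just 0.1 ms. The `frame_interval_ms` and `bin_events` helpers are hypothetical.

```python
def frame_interval_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def bin_events(timestamps, fps):
    """Group event timestamps (in seconds) into consecutive windows of
    1/fps seconds; returns {frame_index: [timestamps]}. Empty windows are
    simply absent from the dictionary."""
    window = 1.0 / fps
    frames = {}
    for t in timestamps:
        frames.setdefault(int(t // window), []).append(t)
    return frames


for fps in (30, 1000, 10000):
    print(f"{fps} fps -> {frame_interval_ms(fps):.3f} ms per frame")
# 30 fps -> 33.333 ms per frame
# 1000 fps -> 1.000 ms per frame
# 10000 fps -> 0.100 ms per frame

print(sorted(bin_events([0.00005, 0.00015, 0.00016, 0.00035], 10000)))  # [0, 1, 3]
```

Because an event sensor timestamps each brightness change individually, the window length can be chosen after the fact; shorter windows give higher effective frame rates at the cost of fewer events per frame.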

Where does the road lead?

If you think about it, AMI-EV could have applications well beyond what first comes to mind. The researchers hope that this new imaging technology will enable breakthroughs not only in smartphones and robotics but in other fields as well. Commenting on this, Cornelia Fermüller, a senior author of the paper, said, “With their unique features, event sensors and AMI-EV are poised to take center stage in the realm of smart wearables.”

Fermüller added, “They have distinct advantages over classical cameras—such as superior performance in extreme lighting conditions, low latency and low power consumption. These features are ideal for virtual reality applications, for example, where a seamless experience and the rapid computations of head and body movements are necessary.”

It was further stated that AMI-EV could find its way into self-driving cars, enabling faster and more efficient recognition of objects on the road. For smartphones, the mechanism's claimed power efficiency, combined with its ability to capture clearer, crisper photos and videos and its improved low-light performance, could make AMI-EV an option that manufacturers worldwide seriously consider. The technology could also enhance security imaging and improve how astronomers capture images of space.

The possibilities are vast, and AMI-EV clearly has potential. It is now up to researchers around the world to probe further and experiment with its applications to see how much it can improve the way we capture visual information.

Satvik Pandey

Satvik Pandey is a self-professed Steve Jobs (not Apple) fanboy, a science and tech writer, and a sports addict. At Digit, he works as Deputy Features Editor and manages the daily functioning of the magazine. He also reviews audio products (speakers, headphones, soundbars, etc.), smartwatches, projectors, and everything else he can get his hands on. A media and communications graduate, Satvik is also an avid shutterbug, and when he's not working or gaming, he can be found fiddling with any camera within reach and helping produce videos, which means he spends an awful lot of time in our studio. His game of choice is Counter-Strike, and he's still attempting to turn pro. He can talk your ear off about the game, and we'd strongly advise you to steer clear of the topic unless you too are a CS junkie.