Wikipedia’s Tamil Superhero added 22,500 articles himself!

Wikipedia has become a necessary touchpoint for online research, whether you like it or not: it’s the fourth most visited online destination in the world, behind only Facebook, YouTube and Google. But where Google has Sundar Pichai, Facebook has Mark Zuckerberg, and other big tech platforms have familiar faces, Wikipedia’s brand isn’t personified by any one individual. On the contrary, Wikipedia’s ubiquity is built on the shoulders of anonymous community contributors like Neechalkaran, who’s carving out a Tamil wiki niche unlike any other.

Originally from Madurai, Tamil Nadu, Neechalkaran started reading and writing in Tamil after he joined Infosys, a job that involved relocating to Pune, Maharashtra. “Since I was far away from my native Tamil community, I kept in touch with Tamil by reading online Tamil blogs at the time, and eventually started my own Tamil blog on Blogspot,” explained Neechalkaran, who even started writing Tamil poetry.

For the love of Tamil

Soon he discovered Tamil Wikipedia and started contributing articles there in his free time, registering on it in 2010. A year or two down the line, he discovered a problem. “People who read my articles on Tamil Wikipedia and even my own blog started commenting that my articles had lots of grammatical errors,” admitted Neechalkaran candidly. But far from getting disheartened, Neechal took it as a challenge to solve through tech.

“That was truly the turning point for me, when I devoted some time to learning Tamil grammar and built Naavi, a Tamil spell checker specifically related to Sandhi rule,” Neechal recalled. Tamil readers from around the world, including a few professors, appreciated the spell checker, he said. This boosted his confidence further, prompting him to learn not only JavaScript but also Python and C#, and to focus on solving some of the problems faced by Tamil Wikipedia’s volunteers. At the time, Tamil Wikipedia contributors were able to write articles but struggled with housekeeping activities, such as changing the same value across multiple pages or making other bulk edits: a lot of small tasks that needed to be automated, according to Neechalkaran.

In 2015-16, the Tamil Nadu government was looking to digitize and release some village-level data, and the Tamil Wikipedia community got involved in the project, Neechalkaran said. “The Tamil Wikipedia community had several discussions with government officials on how best to publish over 13,000 raw data points related to panchayat-level: it included assembly names, population, demographics, literacy rates, number of buildings in an area, etc,” said Neechal. “The entire data was in Excel format, no sentences whatsoever. So internally, within the Tamil Wikipedia community we discussed and we formed a template with appropriate grammar, automating NLP-related tasks for singular and plural words (for example), and arrived at a perfect article template. We uploaded only 12,000 data points into articles, rejecting the other raw data points due to discrepancy in data,” he explained.
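The template approach described above can be illustrated with a minimal Python sketch. It is not the community's actual template: the column names, the English wording and the rejection rule are all assumptions made for illustration, and the real articles were written in Tamil, where number agreement is more involved than picking between two word forms.

```python
from typing import Optional

# Hypothetical column names; the article does not describe the real spreadsheet layout.
REQUIRED_FIELDS = ("panchayat", "assembly", "population", "buildings")


def pluralize(count: int, singular: str, plural: str) -> str:
    """Format a count with the word form that agrees with it."""
    return f"{count:,} {singular if count == 1 else plural}"


def render_article(row: dict) -> Optional[str]:
    """Return article text for one spreadsheet row, or None if the row is unusable."""
    # Reject rows with missing values instead of publishing a broken sentence.
    if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
        return None
    try:
        population = int(row["population"])
        buildings = int(row["buildings"])
    except (TypeError, ValueError):
        return None  # non-numeric counts are treated as a data discrepancy
    if population < 0 or buildings < 0:
        return None
    return (
        f"{row['panchayat']} is a village panchayat in the {row['assembly']} "
        f"assembly constituency. It has "
        f"{pluralize(population, 'resident', 'residents')} and "
        f"{pluralize(buildings, 'building', 'buildings')}."
    )
```

Rows that fail the checks return `None`, which mirrors the idea of leaving out records with discrepancies rather than publishing them.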

Now that they had a proof-of-concept of automating government records on Tamil Wikipedia, Neechalkaran and the community further automated and published temple records of the Hindu Religious And Charitable Endowments Board containing 24,000 articles related to Hindu temple names, addresses, location, deity name, and more, for the state of Tamil Nadu in 2016-17.

Building the bot

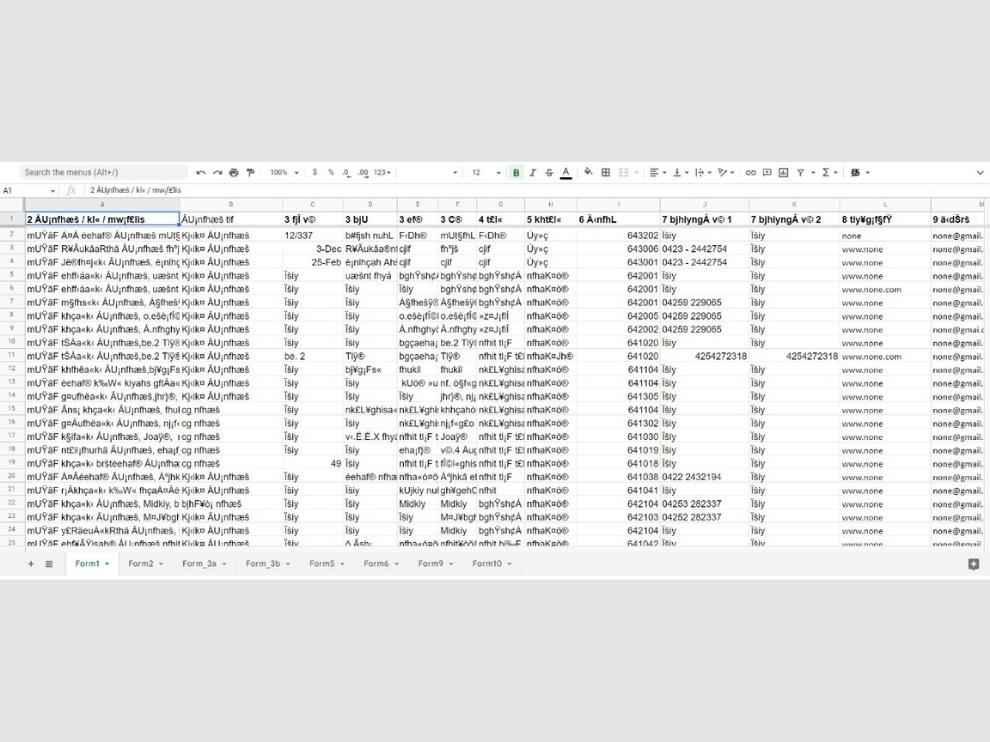

Raw data in non-Unicode format in Google Sheets

Neechalkaran highlighted how before publishing any of the data received from the Tamil Nadu government, it first had to be sanitized to fit data standards compatible with Wikipedia. He had to build a tool for converting the incoming data into Unicode and saving it on his Google Drive. He also shared the links to his Google Drive with the entire Tamil Wikipedia community, which Neechal said consisted of Tamil expats from Sri Lanka, Malaysia and other places contributing their time and effort, too.
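As a rough idea of what such a conversion step involves, here is a minimal Python sketch of table-driven transliteration from a legacy font encoding to Unicode. The article does not name the source encoding, so the mapping below is invented purely for illustration and is not a real TSCII or Bamini table; a real converter would also need rules such as reordering vowel signs.

```python
# Toy mapping invented for this example; NOT a real legacy Tamil encoding table.
LEGACY_TO_UNICODE = {
    "k": "க",
    "kh": "கா",
    "m": "ம",
    "mh": "மா",
}

# Try longer legacy sequences first so "kh" is not read as "k" followed by "h".
_KEYS_BY_LENGTH = sorted(LEGACY_TO_UNICODE, key=len, reverse=True)


def to_unicode(legacy_text: str) -> str:
    """Convert legacy-encoded text to Unicode using longest-match lookup."""
    out = []
    i = 0
    while i < len(legacy_text):
        for key in _KEYS_BY_LENGTH:
            if legacy_text.startswith(key, i):
                out.append(LEGACY_TO_UNICODE[key])
                i += len(key)
                break
        else:
            # Characters with no mapping (digits, spaces, punctuation) pass through.
            out.append(legacy_text[i])
            i += 1
    return "".join(out)
```

Matching the longest sequence first matters because legacy fonts often spell one Unicode syllable with several key codes.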

The next step was feeding it all into the Neechal Bot, which was built using Google Apps Script. “I used Google Apps Script because I was more comfortable in JavaScript at the time, and Google Apps Script is nothing but Google’s version of JavaScript, and the fact that it seamlessly integrated with Google Sheets data helped as well,” he explained. A lot of automation is built into Google Apps Script, which lets developers get started on a project without worrying about web hosts or server infrastructure, and everything is free to begin with.

Final Wikipedia article created by Neechal’s bot

“The bot I developed helped convert raw Google Sheets data into a readable format, and linking it to the Wikipedia API allowed me to create articles on Tamil Wikipedia,” said Neechal, explaining how he had to request API and bot access from Wikipedia. He also highlighted how he made use of the Tamil NLP library he had built a few years earlier.
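For readers curious what "linking it to the Wikipedia API" looks like, the sketch below builds the form fields for a page-creation request to the MediaWiki Action API. It is written in Python for illustration only (the bot itself was built in Google Apps Script), and the function name and edit summary are hypothetical. It makes no network call and assumes the caller has already logged in with a bot account and obtained a CSRF token.

```python
# Tamil Wikipedia's Action API endpoint.
API_URL = "https://ta.wikipedia.org/w/api.php"


def build_create_request(title: str, wikitext: str, csrf_token: str) -> dict:
    """Build the POST fields for creating a new page via action=edit."""
    return {
        "action": "edit",
        "title": title,
        "text": wikitext,
        # Hypothetical summary; real bots follow their community's conventions.
        "summary": "Bot: creating article from government data",
        "createonly": "1",  # fail instead of overwriting a page that already exists
        "bot": "1",         # mark the edit as a bot edit (requires the bot right)
        "token": csrf_token,
        "format": "json",
    }
```

In practice these fields would be sent as a POST to `API_URL` from an authenticated session, with the token fetched beforehand through `action=query&meta=tokens`.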

Then something amazing happened: the power of the community started to show. Lakhs of visitors started organically reaching the Tamil Wikipedia pages that Neechal’s automated bot had created.

“We had just created basic, simple articles in Tamil on Wikipedia, but people found enough value in them to come and modify and add more data in them. For example, most of our articles didn’t have photographs in them, but those who had contextual photographs or pictures related to the page in question, they uploaded it freely to enhance the article. They provided the ultimate validation to the basic idea of creating these articles in Wikipedia, precisely so anyone in Tamil Nadu can still contribute to these Wiki pages and enhance their own village panchayat’s or constituency’s data,” Neechal remarked.

Neechalkaran felicitated in Canada, 2015

Neechalkaran’s “Neechal Bot”, which can undertake page creation, editing and statistics collection activities, has created more than 22,500 articles with community consensus. According to a conservative estimate, it would have taken 22 humans over three years to create so many Wikipedia pages manually. The bot can also automatically perform a lot of housekeeping activities on the Tamil, Bhojpuri and Hindi Wikipedias and other Wikimedia projects. It collects periodic statistics in these languages and can update them on the corresponding Wikipedia pages.

Another brilliant tool Neechal has created is VaaniNLP, a one-of-a-kind open-source Tamil NLP Python library. This tool is being used by the startup Thiral, an AI-based Tamil news aggregator. His newest work is a Tamil chatbot for Wikidata, which has executed over 72,000 edits so far in three languages: Tamil, Hindi and Bhojpuri.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.