Is the time for Artificial Intelligence finally here?

Now that a machine has finally passed the Turing Test, what does it mean for the world of AI?

The system has you. From the first mobile update in the morning to the closing sliver of light from under a laptop lid at night, you are cocooned in a gilded prison you cannot smell or touch or taste. A prison run by sentient electrons travelling at the speed of light, invisible, patient and inevitable: what some may call artificial intelligence. A bit too dramatic? Perhaps. But is it really that far removed from the not-so-distant future?


I, Eugene Goostman

The idea of artificial intelligence and the hopes and fears associated with its rise are fairly prevalent in our collective subconscious. Whether we imagine Judgement Day at the hands of Skynet or egalitarian totalitarianism at the hands of V.I.K.I and her army of robots, the result is the same: the unequivocal displacement of humans as the dominant life form on the planet. Some might call it the fear of a technophobic mind, others a tame prophecy. And if the recent findings at the University of Reading (U.K.) are any indication, we may have already begun fulfilling said prophecy.

In early June 2014, a historic milestone was supposedly reached: a computer programme passed the long-standing Turing Test. Hailed by some as the birth of artificial intelligence and derided by others as a clever trickster-bot that proved nothing more than technical skill, the programme known as Eugene Goostman may soon become a name embedded in history. The programme, or Eugene (to his friends), was originally created in 2001 by Vladimir Veselov from Russia and Eugene Demchenko from Ukraine. Since then it has been developed to simulate the personality and conversational patterns of a 13-year-old boy, and in London it came out ahead of the four other programmes it was competing against.

This Turing Test was held at the world-famous Royal Society in London and is considered one of the most comprehensively designed tests ever. The requirements for a computer programme to pass the Turing Test are simple yet difficult: it must convince a human being that the entity they are conversing with is another human being at least 30 percent of the time. The result in London gave Eugene a 33 percent success rating, making it the first programme to pass the Turing Test.

The test itself was all the more challenging because it involved 300 conversations, 30 judges (or human subjects) and five computer programmes, in simultaneous conversations between humans and machines across five parallel tests. Of all the programmes, only Eugene managed to convince 33 percent of the judges that it was a human boy. Built with algorithms that support “conversational logic” and open-ended topics, Eugene opened up a whole new reality of intelligent machines capable of fooling humans. Given its implications for artificial intelligence, cyber-crime, philosophy and metaphysics, it’s humbling to know that Eugene is only version 1.0 and that its creators are already working on something more sophisticated and advanced.

Love in the Time of Social A.I.s

So, should humanity just begin wrapping up its affairs, ready to hand itself over to its emerging overlords? No. Not really. Despite the interesting results of the Turing Test, most scientists in the field of artificial intelligence aren’t that impressed. The validity of the Test itself has long been questioned as we’ve discovered more and more about intelligence, consciousness and the trickery of computer programmes. In fact, the internet is already flooded with Eugene’s lesser-known kin: a report by Incapsula Research showed that nearly 62 percent of all web traffic is generated by automated computer programs commonly known as bots. Some of these bots act as social hacking tools, engaging humans in website chats while pretending to be real people (mostly women, oddly enough) and luring them to malicious websites. The fact that we are already fighting a silent war for fewer pop-up chat alerts is perhaps an early sign of the war we may have to face: not deadly, but definitely annoying.


One very real threat from these pseudo-intelligent chatbots came from a specific bot called “Text-Girlie”. This flirtatious and engaging chatbot used advanced social hacking techniques to trick humans into visiting dangerous websites. Text-Girlie would proactively scour publicly available social network data and contact people on their visibly shared mobile numbers. The chatbot would send them messages pretending to be a real girl and ask them to chat in a private online room. The fun, colourful and titillating conversation would quickly lead to invitations to visit webcam or dating websites by clicking on links, and that was when the trouble began.

This scam affected over 15 million people over a period of months before users became clearly aware that it was a chatbot that had fooled them all. The delay was most likely due to embarrassment at having been conned by a machine, which slowed the spread of warnings about the threat and goes to show just how easily human beings can be manipulated by seemingly intelligent machines.

Intelligent life on our planet

It’s easy to snigger at the misfortune of those who’ve fallen victim to programs like Text-Girlie and wonder whether there is any intelligent life on Earth, let alone on other planets, but the smugness is short-lived, since most people are already silently and unknowingly dependent on predictive and analytical software for many of their daily needs. These programmes are early evolutionary ancestors of the yet-to-be-realised, fully functional artificial intelligence systems, and they have become integral to our way of life.

The use of predictive and analytical programmes is prevalent in major industries including food and retail, telecommunications, utility routing, traffic management, financial trading, inventory management, crime detection, weather monitoring and a host of others, at various levels. Because these types of programmes are kept distinct from artificial intelligence due to their commercial applications, it’s easy to overlook their presence. But let’s not kid ourselves: any analytical program with access to immense databases for the purpose of predicting patterned behaviour is the perfect archetype on which “real” artificial intelligence programs can, and will, be built.


A significant case in point occurred amongst the tech-savvy community of Reddit users in early 2014. In the catacombs of Reddit forums dedicated to “dogecoin”, a very popular user by the name of “wise_shibe” created some serious conflict in the community. The forums, normally devoted to discussing the world of dogecoin, were gently disturbed when “wise_shibe” joined the conversation, offering Oriental wisdom in the form of clever remarks.

The amusing and engaging dialogue offered by “wise_shibe” garnered him many fans, and given that the forum facilitated dogecoin payments, many users made token donations to “wise_shibe” in exchange for his “wisdom”. However, soon after his rising popularity had earned him an impressive cache of digital currency, it was discovered that “wise_shibe” had an oddly omniscient sense of timing and a habit of repeating himself. Eventually it was revealed that “wise_shibe” was a bot programmed to draw from a database of proverbs and sayings and post them on chat threads with related topics. Reddit was pissed.

Luke, Join the Dark Side

If machines programmed by humans are capable of learning, growing, imitating and convincing us of their humanity, then who’s to argue that they aren’t intelligent? The question then becomes: what nature will these intelligences take on as they grow within society?

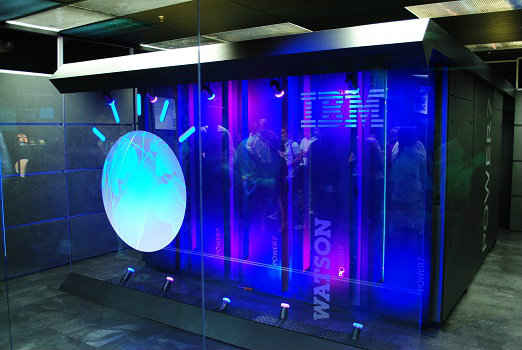

Technologists and scientists have already laid much of the groundwork in the form of supercomputers capable of “deep thinking”. Tackling the problem of intelligence piecemeal has already produced champion-beating machines such as Deep Blue, which defeated chess grandmaster Garry Kasparov, and Watson, which beat Jeopardy! champions at their own game. However, when these titans of calculation are subjected to kindergarten-level intelligence tests, they fail miserably at inference, intuition, instinct, common sense and applied knowledge. Their ability to learn is still limited by their programming.

In contrast to these static supercomputers, more organically designed technologies such as the delightful field of insect robotics hold more promise. These “brains in a body” computers are built to interact with their surroundings and learn from experience as any biological organism would. By interfacing with physical reality, these applied artificial intelligences are capable of defining their own understanding of the world. Similar in design to insects or small animals, these machines are aware of their own physicality and have programming that allows them to relate to their environment in real time, creating a sense of “experience” and the ability to negotiate with reality. That is a far better testament to intelligence than checkmating a grandmaster.

The largest pool of experiential data that any artificially intelligent machine can easily access is publicly available social media content. In this regard, Twitter has emerged as a clear favourite, with millions of distinct individuals and billions of lines of communication for a machine to process and draw inferences from. A Twitter test of intelligence is perhaps more relevant today than the Turing Test, whose very language of communication is not modern, since its exchanges are not limited to 140 characters.


The Twitter world is an ecosystem where individuals communicate in blurbs of thought and redactions of reason, the modern form of discourse, and it is here that cutting-edge social bots find the greatest acceptance as human beings. These so-called socialbots have been let loose on the Twitterverse by researchers, with very intriguing results. The bots construct believable personal profiles, including details like a picture and gender, so easily that they have fooled Twitter’s bot detection systems over 70 percent of the time.

The fact that a society so steeped in digital communication, and so trusting of digital messages, can be fooled has lasting repercussions. Within the Twitterverse alone, the trend of using an army of socialbots to create trending topics, biased opinions, fake support and the illusion of unified diversity can prove very dangerous. In large numbers, these socialbots can be used to frame the public discourse on significant topics discussed in the digital realm. This phenomenon is known as “astroturfing”, named after the famous brand of artificial grass used at sporting venues, in which the illusion of “grassroots” interest in a topic, created by socialbots, is taken to be a genuine reflection of the opinions of the population. Wars have started with much less stimulus. Just imagine socialbot-powered SMS messages in India threatening certain communities and you get the idea.

Taking things one step further is Facebook’s 2013 announcement of a project that seeks to combine the “deep thinking” and “deep learning” capabilities of computers with Facebook’s gigantic storehouse of personal data on over a billion individuals. In effect, it looks beyond “fooling” humans and dives deep into “mimicking” them, but in a prophetic kind of way, where a program might even “understand” humans.

The program being developed by Facebook is humorously called DeepFace and is currently being touted for its revolutionary facial recognition technology. But its broader goal is to survey existing user accounts on the network to predict users’ future activity. By incorporating pattern recognition, user profile analysis, location services and other personal variables, DeepFace is intended to identify and assess the emotional, psychological and physical states of users. If it can bridge the gap between quantified data and its personal implications, DeepFace could very well be considered a machine capable of empathy. But for now it’ll probably just be used to spam users with more targeted ads.

From Syntax to Sentience

Artificial intelligence in all its current forms is primitive at best: simply a tool that can be controlled, directed and modified to do the bidding of its human controller. This inherent servitude is the exact opposite of the nature of intelligence, which in normal circumstances is curious, exploratory and downright contrarian. Man-made AI of the early 21st century will forever be associated with this paradox, and the term “artificial intelligence” will be nothing more than an oxymoron that we used to hide our own ineptitude.

The future of artificial intelligence can be realised neither as a product of our technological needs nor as the creation of humanity as a benevolent species. Since we as humans struggle to comprehend the reasons behind our own sentience, more often than not turning to the metaphysical for answers, we can’t really expect sentience to be created at the hands of humanity. The computers of the future are sure to be exponentially faster than today’s, and it is reasonable to assume that the algorithms that determine their behaviour will also advance to unpredictable heights, but what can’t be known is when, if ever, artificial intelligence will attain sentience.

Just as complex proteins and intelligent life found their origins in the early pools of raw materials on Earth, artificial intelligence may one day emerge from the complex interconnected systems of networks that we have created. The spark that aligned chaotic molecules into harmonious DNA strands is perhaps the only thing that could possibly evolve scattered silicon processors into a vibrant mind. A true artificial intelligence.

Main image credits: Fast Company