Understanding real-time ray tracing: The RTX Way

Ever since NVIDIA announced its GeForce RTX platform, there's been a lot of buzz around the technology. Here's what it is really capable of.

Even a cursory glance at any marketing or promotional material for NVIDIA’s Turing architecture will tell you how heavily NVIDIA is emphasising the real-time ray tracing capabilities of its new GPUs. Ray tracing on its own isn’t a new concept. In fact, it’s been around since 1968. So why is it suddenly so popular? Put simply, ray tracing used to be extremely resource-intensive, but the new GPUs can not only handle it comfortably, they can take it a notch higher and trace rays in real time. Before we look at the ray tracing feature on NVIDIA Turing GPUs, let’s see how ray tracing evolved into its current avatar.

Tracing back in the days

Arthur Appel came up with the concept of what was then termed Ray Casting. The algorithm traced geometric rays from the observer’s eye into the scene, one per pixel, and found the nearest object each ray hit. Only the colour at that first hit was calculated; there was no recursive tracing to account for reflections, refractions and shadows. Rendering images with Ray Casting was therefore possible, but developers had to resort to visual trickery to fake reflections and refractions, and texture maps were a common way of doing this back in the day. Scanline rendering achieved similar results much more quickly, though with less detail than Ray Casting.
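To make the idea concrete, here is a minimal ray caster sketch in Python, assuming a toy scene made only of spheres. The scene format and the helpers `hit_sphere`, `cast` and `render` are hypothetical illustrations, not taken from Appel's work. One ray leaves the eye per pixel, the nearest hit wins, and its colour is returned as-is: no reflections, no refractions, no shadows.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)

def hit_sphere(origin, direction, centre, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, centre)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None                      # the ray misses the sphere
    for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
        if t > 1e-6:
            return t
    return None

def cast(origin, direction, spheres, background=(0, 0, 0)):
    """Colour of the nearest sphere along the ray: no bounces, no shadow test."""
    nearest, colour = math.inf, background
    for centre, radius, sphere_colour in spheres:
        t = hit_sphere(origin, direction, centre, radius)
        if t is not None and t < nearest:
            nearest, colour = t, sphere_colour
    return colour

def render(width, height, spheres):
    """Fire one ray from the eye at the origin through each pixel of an
    image plane at z = -1."""
    eye = (0.0, 0.0, 0.0)
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            u = (x + 0.5) / width * 2 - 1      # -1 (left) .. 1 (right)
            v = 1 - (y + 0.5) / height * 2     # 1 (top) .. -1 (bottom)
            row.append(cast(eye, normalize((u, v, -1.0)), spheres))
        image.append(row)
    return image

scene = [((0.0, 0.0, -5.0), 1.0, (0, 255, 0)),   # green sphere, further away
         ((0.0, 0.0, -3.0), 1.0, (255, 0, 0))]   # red sphere, in front
image = render(3, 3, scene)
print(image[1][1])   # (255, 0, 0): the nearer red sphere wins
print(image[0][0])   # (0, 0, 0): the corner ray misses everything
```

Because each ray stops at its first hit, the green sphere behind the red one never contributes anything, which is exactly the limitation later recursive methods addressed.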

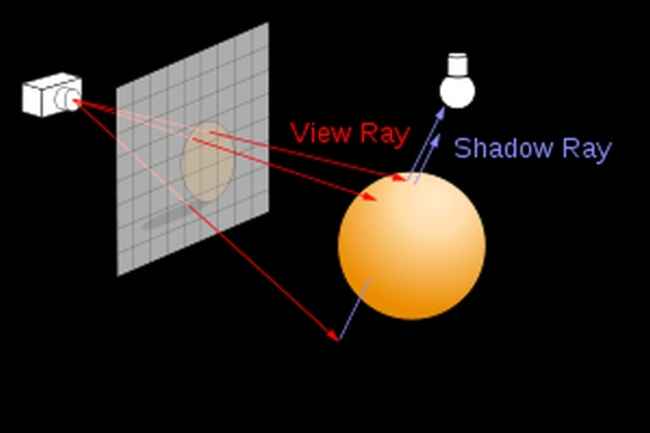

Ray tracing builds an image by extending rays into a scene

Ray Casting also relied on a few simplifying assumptions. One of them was that any surface facing a light source would be lit, with no shadows on it. So if a light source, a pencil and an orange were placed along the same line, the pencil sitting between the light and the orange would not cast a shadow on the orange. This simplification made tracing light from the source to the viewer’s eye far cheaper. It obviously lacks realism by today’s standards, but back then, Ray Casting was an eye-opener.
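The pencil-and-orange example can be checked numerically. In this minimal sketch (the geometry is hypothetical: the pencil is modelled as a small sphere, and `facing_light` and `blocked` are illustrative helpers), the facing test that Ray Casting relied on reports the top of the orange as lit, while a shadow ray aimed at the light reveals that the pencil is in the way.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def facing_light(point, normal, light):
    """The Ray Casting-era shortcut: a surface that faces the light is lit."""
    return dot(normal, sub(light, point)) > 0

def blocked(point, light, centre, radius, eps=1e-6):
    """Shadow-ray test: does a sphere (standing in for the pencil) sit
    between the surface point and the light?"""
    to_light = sub(light, point)
    dist = math.sqrt(dot(to_light, to_light))
    d = tuple(c / dist for c in to_light)        # unit direction to the light
    oc = sub(point, centre)
    b = dot(oc, d)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return False                             # the ray misses the occluder
    for t in (-b - math.sqrt(disc), -b + math.sqrt(disc)):
        if eps < t < dist:
            return True                          # hit before reaching the light
    return False

light = (0.0, 0.0, 10.0)
orange_top, normal = (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)   # top of a unit "orange"
pencil_centre, pencil_radius = (0.0, 0.0, 5.0), 0.5     # pencil as a small sphere

print(facing_light(orange_top, normal, light))                    # True: "lit"
print(blocked(orange_top, light, pencil_centre, pencil_radius))   # True: actually in shadow
```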

Video games such as Wolfenstein 3D and the Comanche series made use of Ray Casting algorithms. In Wolfenstein 3D, the world was built on a square grid of walls of uniform height, combined with solid-coloured floors and ceilings. To render a frame, a single ray is traced for every column of pixels on the screen. The distance at which the ray hits a wall determines how tall that wall slice is drawn, and the point where it hits selects the vertical slice of the wall texture to use. Since the ceiling and floor are uniformly coloured, there’s no need to trace them at all, which cuts the computational and memory overhead. The savings could then be spent on rendering the characters and objects moving around the open areas of the map. The Comanche series handled Ray Casting slightly differently. A ray is again traced for each column of screen pixels, but it is stepped across a height map of the terrain. At each step, the height map determines which pixels in that column are visible and which aren’t, and the texture map supplies the colour for each visible pixel.

Wolfenstein 3D made use of Ray Casting algorithms
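The Wolfenstein 3D approach described above can be sketched as a grid ray caster. This is a minimal illustration, assuming a hypothetical map and hypothetical helper names; it is not id Software's code. Each screen column gets one ray, which is stepped cell by cell through the grid (a DDA walk) until it enters a wall, and the wall slice is drawn with a height inversely proportional to the distance. Texture selection is left out.

```python
import math

# Hypothetical Wolfenstein-style map: '#' is a wall block, '.' is open floor.
WORLD = [
    "########",
    "#......#",
    "#......#",
    "#......#",
    "########",
]

def cast_column(px, py, angle, world=WORLD):
    """Step a ray cell by cell through the grid (a DDA walk) and return the
    distance along the ray to the first wall it enters."""
    dx, dy = math.cos(angle), math.sin(angle)
    map_x, map_y = int(px), int(py)
    # Ray length needed to cross one whole cell horizontally / vertically.
    delta_x = abs(1 / dx) if dx != 0 else math.inf
    delta_y = abs(1 / dy) if dy != 0 else math.inf
    step_x = 1 if dx > 0 else -1
    step_y = 1 if dy > 0 else -1
    # Ray length to the first vertical / horizontal grid line.
    side_x = math.inf if dx == 0 else ((map_x + 1 - px) if dx > 0 else (px - map_x)) * delta_x
    side_y = math.inf if dy == 0 else ((map_y + 1 - py) if dy > 0 else (py - map_y)) * delta_y
    while True:
        if side_x < side_y:                 # next boundary crossed is vertical
            dist, side_x, map_x = side_x, side_x + delta_x, map_x + step_x
        else:                               # next boundary crossed is horizontal
            dist, side_y, map_y = side_y, side_y + delta_y, map_y + step_y
        if world[map_y][map_x] == "#":
            return dist

def render_columns(px, py, view_angle, fov, columns, screen_height):
    """One ray per screen column; each wall slice's height is inversely
    proportional to its (fisheye-corrected) distance."""
    heights = []
    for col in range(columns):
        ray_angle = view_angle - fov / 2 + fov * (col + 0.5) / columns
        dist = cast_column(px, py, ray_angle)
        perp = dist * math.cos(ray_angle - view_angle)  # undo fisheye distortion
        heights.append(min(screen_height, int(screen_height / perp)))
    return heights

print(render_columns(1.5, 2.5, 0.0, math.pi / 3, 5, 100))   # [29, 18, 18, 18, 29]
```

The player stands in a corridor facing the far wall: the centre columns hit that distant wall and are drawn short, while the outer columns hit the nearer side walls and are drawn taller. Floors and ceilings never enter the loop at all.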

The next major advancement was Recursive Ray Tracing. Older algorithms lacked realism because they didn’t account for reflections, refractions and shadows: as we mentioned earlier, they simply calculated the colour where a ray first hit an object, and the tracing process ended then and there. In 1979, Turner Whitted decided to let the ray continue even after hitting an object. At each hit, up to three new types of rays could now be generated: one for reflection, one for refraction and one for shadows. A reflection ray bounces off the surface in the mirror direction. A refraction ray travels into the object, bent at an angle determined by the material’s refractive index. Lastly, a shadow ray is traced towards the light source; if it collides with an opaque object on the way, the point is in shadow. Repeating this process recursively for every newly spawned ray added a great deal more realism to the rendered image.
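The geometry behind Whitted's secondary rays can be sketched in a few lines. This is a minimal illustration, assuming unit-length vectors and hypothetical helper names: `reflect` gives the mirror direction, `refract` applies Snell's law (returning `None` on total internal reflection), and `rays_spawned` counts how many rays a single primary ray can trigger in the worst case, assuming every surface it hits is both reflective and transparent.

```python
import math

def dot(a, b): return sum(x * y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(v, s): return tuple(x * s for x in v)

def reflect(d, n):
    """Reflection ray direction: mirror d about the unit normal n, r = d - 2(d.n)n."""
    return add(d, scale(n, -2 * dot(d, n)))

def refract(d, n, eta):
    """Refraction ray direction via Snell's law, for unit vectors d and n with
    n facing against d. eta = n1 / n2, e.g. 1 / 1.5 going from air into glass.
    Returns None on total internal reflection."""
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1 - cos_i * cos_i)
    if sin2_t > 1:
        return None
    cos_t = math.sqrt(1 - sin2_t)
    return add(scale(d, eta), scale(n, eta * cos_i - cos_t))

def rays_spawned(depth, max_depth, lights):
    """Worst-case ray count for one primary ray if every hit surface is both
    reflective and transparent and every ray hits something."""
    if depth > max_depth:
        return 0
    total = 1 + lights                                        # this ray + shadow rays
    total += 2 * rays_spawned(depth + 1, max_depth, lights)   # reflection + refraction
    return total

for max_depth in range(5):
    print(max_depth, rays_spawned(0, max_depth, lights=1))
```

Each extra level of recursion roughly doubles the ray count in this worst case, which is why recursive ray tracing was so expensive and why practical tracers cap the recursion depth.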

So where do GPUs come in?

The introduction of highly parallel GPGPU (general-purpose GPU) computing and the use of Unified Shader architectures by both AMD and NVIDIA led to a revolution in the computing world. Since each ray can be traced independently of the others using the same repetitive steps, ray tracing is exactly the kind of workload that benefits most from the many parallel cores in an everyday GPU. However, ray tracing a static scene and ray tracing a dynamic scene with moving objects are different stories altogether. The latter is far more intensive because the scene keeps changing from frame to frame. This calls for many more parallel cores and improved algorithms that can account for the dynamic nature of the scene.
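The independence that makes ray tracing such a good fit for GPUs can be shown with a minimal sketch, assuming a toy scene of a single sphere and hypothetical helpers `trace_pixel`, `render_serial` and `render_parallel`. Each pixel depends only on its own ray and the static scene, so rows can be handed to workers in any order and the result matches a serial render exactly.

```python
import math
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 32, 24

def trace_pixel(x, y):
    """Trace one primary ray from the origin through pixel (x, y). The result
    depends only on (x, y) and the static scene: a unit sphere at z = -3."""
    u = (x + 0.5) / WIDTH * 2 - 1
    v = 1 - (y + 0.5) / HEIGHT * 2
    inv_len = 1 / math.sqrt(u * u + v * v + 1)
    dz = -inv_len                      # z component of the unit ray direction
    b = 3 * dz                         # dot(origin - centre, direction)
    disc = b * b - (9 - 1)             # b^2 - (|origin - centre|^2 - r^2)
    return 1 if disc >= 0 else 0       # 1 = hit, 0 = miss

def render_row(y):
    return [trace_pixel(x, y) for x in range(WIDTH)]

def render_serial():
    return [render_row(y) for y in range(HEIGHT)]

def render_parallel(workers=4):
    # Rows are independent work items, so they can run in any order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))

assert render_parallel() == render_serial()
```

Python threads share a single interpreter lock, so this will not actually run faster on a CPU; the point is the decomposition. A GPU applies the same idea at a much finer grain, handing individual rays to thousands of threads at once.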

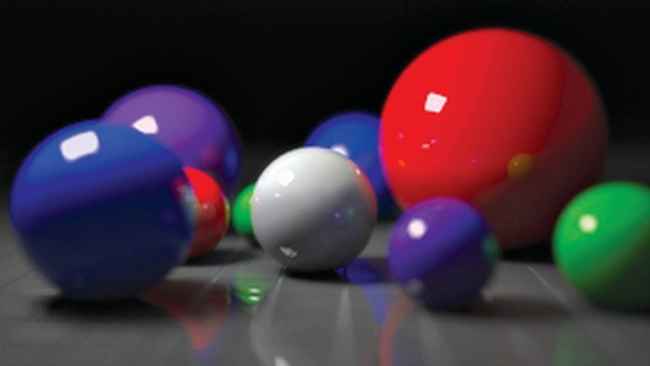

Ray tracing can simulate the effects of a camera

At SIGGRAPH 2005, the REMRT/RT tools developed by Mike Muuss in 1986 for the BRL-CAD solid modelling system were credited as the first implementation of a real-time ray tracer. The computational requirements of real-time ray tracing were so high that Mike’s REMRT/RT tools relied on a parallel network of distributed ray-tracing systems. Since 1986, the focus has been on improving the algorithms and on increasing computational power.

NVIDIA’s Turing is a milestone in this regard. Having achieved the computational power needed to drive fully-fledged VR headsets, the next big thing for GPU manufacturers was to incorporate AI alongside increasing compute power generation on generation. Several projects have focused on making real-time ray tracing happen, and Mike Muuss’ tools led many others to work on the software. Even today, the original project continues to be actively developed as open-source software.

OpenRT, OptiX, OpenRL and many other APIs have now risen to the challenge of enabling real-time ray tracing on GPUs.

Ray tracing, the NVIDIA way

With the new Turing GPUs, the compute power needed for real-time ray tracing is finally here. However, the algorithms have also grown in complexity to deliver greater realism, and the number of rays that need to be cast per pixel now depends on the scene and the objects the rays interact with. Turing’s specialised RT (Ray Tracing) Cores speed up the tracing itself, while de-noising filters reconstruct a clean image from fewer rays, bringing the number of rays needed down to reasonable levels. Think of it as a trade-off. Tracing every ray of light for each pixel would result in greater realism, but the law of diminishing returns is at play. We are looking at a two-dimensional display, and the display panel has inherent limitations of its own. If the panel cannot reproduce that outrageous level of realism, why bother? It makes more sense to scale the ray count down until the viewer can no longer perceive a loss in fidelity.
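The trade-off between rays per pixel and noise can be illustrated with a toy Monte Carlo experiment. The sketch below assumes a hypothetical flat image in which each sample ray reaches the light with probability `TRUE_VALUE`. The error of the estimate falls roughly as one over the square root of the number of rays, so quadrupling the rays only halves the noise. A simple box filter then shows why de-noising lets a renderer get away with fewer rays. This box filter is a deliberately naive stand-in, not NVIDIA's de-noiser, which has to preserve edges and detail.

```python
import random

TRUE_VALUE = 0.3   # hypothetical: fraction of sample rays that reach the light

def noisy_pixel(rng, samples):
    """Monte Carlo estimate of one pixel: each ray independently reaches the
    light with probability TRUE_VALUE, and the pixel is the fraction that did."""
    hits = sum(rng.random() < TRUE_VALUE for _ in range(samples))
    return hits / samples

def render_row(rng, width, samples):
    return [noisy_pixel(rng, samples) for _ in range(width)]

def box_denoise(row, radius=2):
    """Toy spatial denoiser: average each pixel with its neighbours. Real
    denoisers are edge-aware; plain averaging only works here because the
    underlying image is flat."""
    out = []
    for i in range(len(row)):
        window = row[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out

def rms_error(row):
    return (sum((p - TRUE_VALUE) ** 2 for p in row) / len(row)) ** 0.5

rng = random.Random(42)
for spp in (1, 4, 16, 64):
    row = render_row(rng, 1000, spp)
    print(f"{spp:>2} rays/pixel: raw error {rms_error(row):.3f}, "
          f"denoised {rms_error(box_denoise(row)):.3f}")
```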

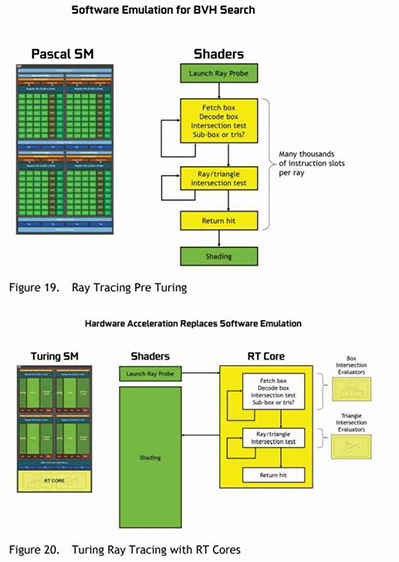

Comparison of Pascal and Turing shaders

Turing’s real-time ray tracing can be put to work in a number of ways, some of which will be familiar and some of which will be new. Not all of them come into play during the rendering stage. Regardless, here’s everything Turing’s ray tracing can be used for.

- Reflections and Refractions

- Shadows and Ambient Occlusion

- Global Illumination

- Instant and off-line lightmap baking

- Beauty shots and high-quality previews

- Primary rays for foveated VR rendering

- Occlusion Culling

- Physics, Collision Detection, Particle simulations

- Audio simulation

- AI visibility queries

- In-engine Path Tracing
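Several of the non-rendering uses in the list above, such as AI visibility queries, occlusion culling and collision detection, boil down to asking whether anything blocks the straight line between two points. The minimal sketch below assumes obstacles approximated by axis-aligned boxes and a hypothetical `can_see` helper for a game AI, and uses the standard slab test. On Turing, such queries could instead be issued as rays through a ray tracing API and accelerated by the RT cores; this is only a CPU illustration of the idea.

```python
def segment_hits_box(start, end, box_min, box_max):
    """Slab test: does the segment start->end pass through the axis-aligned box?"""
    t_enter, t_exit = 0.0, 1.0          # parameter range along the segment
    for axis in range(3):
        d = end[axis] - start[axis]
        if abs(d) < 1e-12:
            # Parallel to this pair of planes: must already lie between them.
            if not (box_min[axis] <= start[axis] <= box_max[axis]):
                return False
            continue
        t0 = (box_min[axis] - start[axis]) / d
        t1 = (box_max[axis] - start[axis]) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True

def can_see(guard, target, obstacles):
    """Hypothetical AI visibility query: the guard sees the target if no
    obstacle box intersects the segment between them."""
    return not any(segment_hits_box(guard, target, lo, hi) for lo, hi in obstacles)

wall = ((4.0, 0.0, -1.0), (5.0, 3.0, 1.0))
print(can_see((0.0, 1.0, 0.0), (10.0, 1.0, 0.0), [wall]))   # False: the wall blocks the view
print(can_see((0.0, 1.0, 0.0), (10.0, 1.0, 5.0), [wall]))   # True: the line passes beside it
```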

The RT cores accelerate Bounding Volume Hierarchy (BVH) traversal and ray/triangle intersection testing. A BVH is a tree of nested bounding boxes around the scene’s geometry: a ray is tested against the boxes first, so only the triangles inside boxes it actually passes through need to be tested. Run on shader cores alone, this traversal and the intersection tests it leads to would take thousands of instruction slots per ray before the colour of a pixel could finally be calculated. Since the RT cores are specialised to take on this load, the shader cores in the Streaming Multiprocessor (SM, a sub-section of the GPU) are free to focus on other aspects of the scene.
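The two jobs the RT cores take over can be sketched in software. The Python below is a minimal, unoptimised sketch, assuming a scene made only of triangles. It builds a simple BVH by splitting triangles at the median along the longest axis (one common heuristic; NVIDIA does not document how its drivers build BVHs, and the hardware traversal works very differently), walks it with a stack using a slab test for each bounding box, and uses the well-known Möller-Trumbore algorithm for the ray/triangle tests. All function names are hypothetical.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(orig, d, tri, eps=1e-9):
    """Moller-Trumbore ray/triangle test: distance t along the ray, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None                      # ray is parallel to the triangle
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv                  # first barycentric coordinate
    if u < 0 or u > 1:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv                  # second barycentric coordinate
    if v < 0 or u + v > 1:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None

def ray_box(orig, inv_d, lo, hi):
    """Slab test: does the ray pass through the axis-aligned box [lo, hi]?"""
    t_near, t_far = 0.0, math.inf
    for a in range(3):
        t0 = (lo[a] - orig[a]) * inv_d[a]
        t1 = (hi[a] - orig[a]) * inv_d[a]
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
    return t_near <= t_far

def bounds(tris):
    pts = [p for tri in tris for p in tri]
    return (tuple(min(p[a] for p in pts) for a in range(3)),
            tuple(max(p[a] for p in pts) for a in range(3)))

def build_bvh(tris, leaf_size=2):
    """Median split along the longest axis of the node's bounding box."""
    lo, hi = bounds(tris)
    if len(tris) <= leaf_size:
        return {"lo": lo, "hi": hi, "tris": tris}
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    tris = sorted(tris, key=lambda tri: sum(p[axis] for p in tri))  # by centroid
    mid = len(tris) // 2
    return {"lo": lo, "hi": hi,
            "left": build_bvh(tris[:mid], leaf_size),
            "right": build_bvh(tris[mid:], leaf_size)}

def intersect(bvh, orig, d):
    """Return (nearest hit distance, number of ray/triangle tests performed)."""
    # A large finite stand-in for 1/0 avoids inf * 0 = nan in the slab test.
    inv_d = tuple(1.0 / c if c != 0 else 1e30 for c in d)
    nearest, tests, stack = math.inf, 0, [bvh]
    while stack:
        node = stack.pop()
        if not ray_box(orig, inv_d, node["lo"], node["hi"]):
            continue                     # prune the whole subtree
        if "tris" in node:
            for tri in node["tris"]:
                tests += 1
                t = ray_triangle(orig, d, tri)
                if t is not None and t < nearest:
                    nearest = t
        else:
            stack.extend((node["left"], node["right"]))
    return nearest, tests

# 64 small triangles in a row on the plane z = -5 (hypothetical scene).
tris = [((2.0 * i, 0.0, -5.0), (2.0 * i + 1, 0.0, -5.0), (2.0 * i, 1.0, -5.0))
        for i in range(64)]
bvh = build_bvh(tris)
print(intersect(bvh, (74.2, 0.2, 0.0), (0.0, 0.0, -1.0)))   # (5.0, 2)
```

Even in this toy scene, the BVH reduces 64 triangle tests to 2, at the cost of a handful of cheap box tests. Moving both kinds of test into fixed-function hardware is where the RT cores earn their keep.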

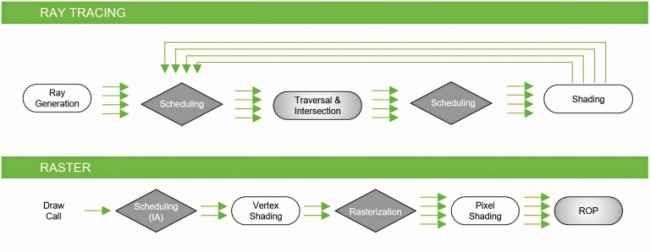

Hybrid Rendering pipeline

Without delving too much into the numbers, NVIDIA’s whitepaper states that a Pascal GPU can handle 1.1 Giga Rays/Sec, while Turing can do more than 10 Giga Rays/Sec. This is down to how specialised the RT cores are, along with the hybrid rendering pipeline used by the NVIDIA Turing architecture.
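To put those figures in perspective, a quick back-of-the-envelope calculation helps. At 1920 × 1080 and 60 frames per second, a GPU has to produce 124,416,000 pixels every second. The sketch below divides the quoted peak ray rates by that figure, assuming, unrealistically, that the GPU does nothing but trace rays; real games spend much of each frame on other work, so treat the result as a ceiling, not a budget developers actually get.

```python
def rays_per_pixel_budget(giga_rays_per_sec, width, height, fps):
    """Upper bound on rays per pixel per frame, assuming the GPU spends all of
    its time tracing rays at the quoted peak rate (real games get far less)."""
    pixels_per_second = width * height * fps
    return giga_rays_per_sec * 1e9 / pixels_per_second

for name, rate in (("Pascal", 1.1), ("Turing", 10.0)):
    budget = rays_per_pixel_budget(rate, 1920, 1080, 60)
    print(f"{name}: about {budget:.1f} rays per pixel at 1080p60")
# Pascal: about 8.8 rays per pixel at 1080p60
# Turing: about 80.4 rays per pixel at 1080p60
```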

To attain higher frame rates, NVIDIA advises developers to adopt the hybrid rendering pipeline. On Turing GPUs, the ray tracing and rasterization processes can take place simultaneously. Essentially, the ray tracing part is handled first, with rays being generated for tracing. Because this involves a huge volume of rays, a scheduler handles the sequence in which they are generated. As each traced ray interacts with objects in the scene, a shader is called in to calculate the colours. Once that’s done, the standard raster model comes into the picture and starts drawing. Soon enough, the entire scene has been calculated and is sent to the ROPs (render output units) before it gets displayed on the screen.
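The ray tracing half of that flow can be modelled loosely in code. Ray tracing APIs such as Microsoft's DirectX Raytracing (DXR) organise the work into shader stages, including ray generation, closest-hit and miss shaders, with traversal and intersection in between (the part Turing's RT cores accelerate). The Python below is only an analogy under that assumption: the `dispatch` function, the `Hit` type and the toy scene are hypothetical and are not the DXR API. Rasterization and the ROP stage are not modelled.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, Optional, Tuple

Colour = Tuple[int, int, int]

@dataclass
class Hit:
    t: float        # distance along the ray
    material: str   # hypothetical material tag

def ray_generation(width: int, height: int) -> Iterator[Tuple[int, int]]:
    """Emit one primary ray per pixel (identified here by its coordinates)."""
    for y in range(height):
        for x in range(width):
            yield x, y

def dispatch(width: int, height: int,
             traverse: Callable[[int, int], Optional[Hit]],
             closest_hit: Callable[[Hit], Colour],
             miss: Callable[[int, int], Colour]) -> Dict[Tuple[int, int], Colour]:
    """Generate rays, let traversal find the nearest hit (the RT cores' job on
    Turing), then call a shader to compute each pixel's colour."""
    image = {}
    for x, y in ray_generation(width, height):
        hit = traverse(x, y)
        image[(x, y)] = closest_hit(hit) if hit is not None else miss(x, y)
    return image

# Toy scene: the left half of the screen sees a mirror 2 units away, the rest sees sky.
def traverse(x, y):
    return Hit(2.0, "mirror") if x < 2 else None

def closest_hit(hit):
    return (200, 200, 200) if hit.material == "mirror" else (128, 128, 128)

def miss(x, y):
    return (90, 160, 255)   # sky colour

image = dispatch(4, 2, traverse, closest_hit, miss)
```

Keeping traversal separate from shading is what lets dedicated hardware take over the traversal step while the programmable shaders only run once a hit (or miss) is known.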


Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 14 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.