The self-drive knowhow: Elements that make autonomous cars tick

The heart, brain and nerves of the machines that are all set to replace human chauffeurs in the near future.

By now, we have established how autonomous cars are the future. We’ve looked at concepts of varying shapes and values, evaluated their practicality, and even put forth optimistic notions about what could be the most practical implementation of artificial intelligence at present.

But what makes these cars work? What resides inside the autonomous wizardry that would remove the need for human driving intervention and let a car travel safely from one point to another? Here, we look at the three classes of components that make autonomous cars tick, and highlight the key components from each class.

Knowing the roads

The very first element to make a car travel from point A to point B is to know the roads. Knowing involves gauging routes, learning a city’s shortcuts and localised traffic data, gathering live on-road information, and finally, making the journey. The three key elements here cover these very points.

Global Positioning System modules

GPS, or Global Positioning System, is anything but new. We’ve had integrated GPS services on our mobile phones and in our cars for a while now. With autonomous technology, however, GPS services are required to become smarter, more responsive and seamless. These advanced GPS modules are not mere beacons for the route that a car will take; they also take in real-time data, find shortcuts and work out strategies to avoid traffic, just the way humans do.

Inertial Measurement Units

Less familiar in everyday vocabulary, IMUs are crucial elements that lend directional judgement to cars when the GPS signal drops out. Many stretches of road can be ‘blind spots’, areas where the GPS receiver in a car fails to pick up signals from satellites and so cannot tell which turn to take next. IMUs are platforms fitted on a car that incorporate three high-precision gyroscopes and three accelerometers to determine the route that a car is already on, and where its motion is leading it.

Algorithms take their cue from this motion data and tally it with previously recorded GPS data to evaluate the best possible route locally, and guide the car to brake or turn as required. IMUs also gauge the car’s own speed and communicate with other systems aboard the vehicle to determine when to slow down, shift lanes and avoid collisions, making this the system that will truly know roads the way humans do.
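The idea of carrying on from the last GPS fix using motion data alone is usually called dead reckoning. The sketch below is only an illustration under simplifying assumptions: a flat, two-dimensional road, one forward accelerometer reading and one yaw-rate (gyroscope) reading per time step, and no sensor noise. The names `State` and `dead_reckon` are hypothetical and do not come from any particular vehicle platform.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float        # metres along the x axis from the last GPS fix
    y: float        # metres along the y axis from the last GPS fix
    heading: float  # radians, 0 points along +x, counter-clockwise positive
    speed: float    # metres per second


def dead_reckon(state, samples, dt):
    """Propagate the last known state through IMU samples.

    samples: iterable of (forward_accel in m/s^2, yaw_rate in rad/s)
    dt:      time between samples in seconds
    """
    x, y, heading, speed = state.x, state.y, state.heading, state.speed
    for accel, yaw_rate in samples:
        speed += accel * dt          # accelerometer updates speed
        heading += yaw_rate * dt     # gyroscope updates heading
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
    return State(x, y, heading, speed)


# One second of straight driving at 10 m/s with no GPS signal.
last_fix = State(x=0.0, y=0.0, heading=0.0, speed=10.0)
estimate = dead_reckon(last_fix, [(0.0, 0.0)] * 10, dt=0.1)
```

In practice the small errors in each reading add up over time, which is why such an estimate would be corrected again as soon as a GPS signal returns.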

Connected car platforms

Just as your peers warn you against taking a route because of an incident, connected car platforms will network a group of cars within a specific radius to share live statistics on which routes to take. Connected car platforms can store and relay live data on road conditions, thereby guiding the GPS module to choose the best possible route, and are hence a crucial element for a car to know which way to go amid heavy Friday evening traffic.

While connected car platforms do much more than relay traffic data, that function is one of the most important elements for a car to know which road to take.
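As a rough illustration of how shared reports could steer route choice, the sketch below averages the travel times reported by nearby cars and picks the quickest of the candidate routes. Everything here is an assumption made for the example: the report format, the route labels and the function name `best_route` are hypothetical, and a real platform would weigh far more than average travel time.

```python
from collections import defaultdict
from statistics import mean


def best_route(reports, candidate_routes):
    """Pick the candidate route with the lowest average reported time.

    reports:          iterable of (route_id, minutes) sent by nearby cars
    candidate_routes: route ids the GPS module is choosing between
    Returns None when no nearby car has reported on any candidate.
    """
    times = defaultdict(list)
    for route_id, minutes in reports:
        times[route_id].append(minutes)

    # Only score candidates that at least one car has reported on.
    scored = {r: mean(times[r]) for r in candidate_routes if times[r]}
    if not scored:
        return None
    return min(scored, key=scored.get)


nearby_reports = [("ring-road", 30), ("ring-road", 40), ("flyover", 20)]
choice = best_route(nearby_reports, ["ring-road", "flyover"])
```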

Seeing the roads

Once a car knows which route to take, the next objective is to make it see the roads. While this may seem pretty straightforward, it is quite the contrary. For cars to ‘see’ roads, fitting imaging equipment alone is not enough, as that would lead to heavy volumes of data to be processed in split seconds, which is far from ideal for swift on-road responses.

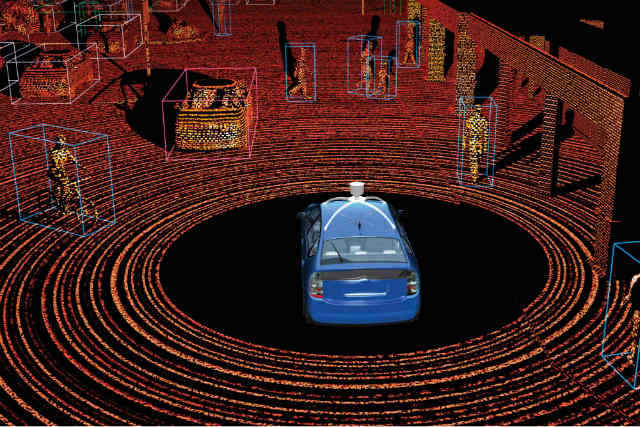

Lidars

It is this impracticality that makes lidars the choice of so many autonomous vehicles. Short for Light Detection and Ranging, lidars use laser pulses to gauge the distance to objects all around the source. In-car lidars are among the most crucial and well-documented elements, and owe their popularity partly to the science-experiment look that they lend to cars, and partly to the crucial job they do of accounting for a car’s dimensions and gauging proximity, to identify ample space for the car to drive along and prevent collisions along the way.
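A pulsed lidar typically works out distance from the time a laser pulse takes to bounce back, using distance = (speed of light × round-trip time) / 2. The sketch below shows that arithmetic and turns one return into a point around the car. The function names and the flat, two-dimensional layout are assumptions for illustration, not the interface of any real lidar unit.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_echo(round_trip_seconds):
    """Distance to the reflecting object, in metres.

    The pulse travels out and back, so the one-way distance is half
    of the total path covered in the round-trip time.
    """
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_point(angle_deg, round_trip_seconds):
    """Convert one return (beam angle, echo time) to x, y in metres."""
    r = range_from_echo(round_trip_seconds)
    angle = math.radians(angle_deg)
    return (r * math.cos(angle), r * math.sin(angle))


# An echo that arrives after 200 nanoseconds is roughly 30 m away.
distance_m = range_from_echo(200e-9)
```

Sweeping the beam through a full circle and collecting one such point per angle is what builds up the panoramic picture of nearby obstacles.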

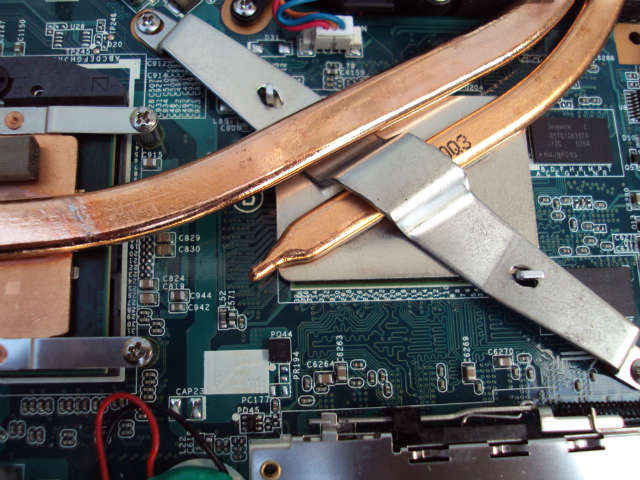

Radars and related sensors

For finer adjustments, autonomous vehicles rely on a host of other sensors, including short-range radars and proximity sensors, which form the basis of autonomous features such as advanced collision detection systems, pedestrian alerts and obstacle detection. Using radars efficiently to maneuver in bumper-to-bumper traffic involves fitting radars with an operating frequency of 77 GHz in the bumpers, a frequency known to offer reliable frequency response and high-resolution data. To keep the construction compact, many technology providers are using the subsystem PC boards of radar sensors as the radar antennae, which not only allows for seamless transmission of data but preserves aesthetics too.
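One way to see why a 77 GHz radar can be built so compactly is to work out its wavelength, which is the speed of light divided by the frequency. The short sketch below does only that arithmetic; the function name is hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def wavelength_mm(frequency_hz):
    """Free-space wavelength in millimetres for a given frequency."""
    return SPEED_OF_LIGHT / frequency_hz * 1000.0


# At 77 GHz the wavelength comes to a little under 4 mm.
radar_wavelength = wavelength_mm(77e9)
```

With waves only a few millimetres long, antenna elements can be small enough to sit on a circuit board.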

Imaging equipment

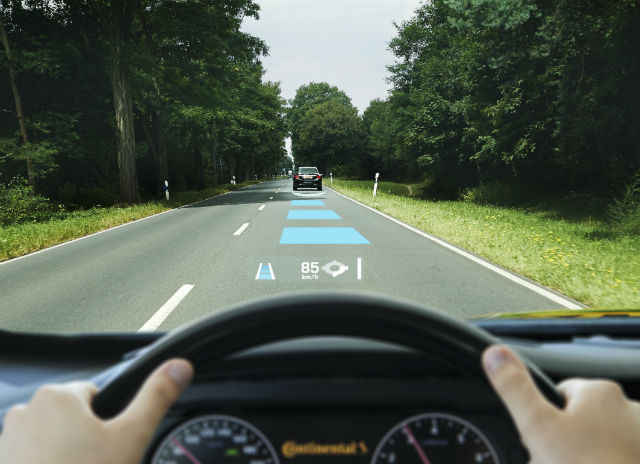

The third and most obvious inclusion in autonomous cars is imaging equipment. The plethora of cameras offer visual input for parking, driver assistance in case of emergencies, identifying lanes and gauging distance. The panoramic cameras work to provide assistance to the lidars and radars already present, thereby reducing the load of transmitting heavy imaging data to processors and making the cameras work only when needed, and in specific applications. This also helps optimise the bill-of-materials cost of a car, thereby working as a tool of efficiency rather than necessity, contrary to popular belief.

Making the move

With the routes set and the roads seen, the most vital step is to make the cars move. It is here that many of a car’s less glamorous elements come to the forefront. These are the elements that will reside alongside batteries and drivetrains to assist movement, make the crucial decisions and tie everything together.

Vision Processing Units (VPUs)

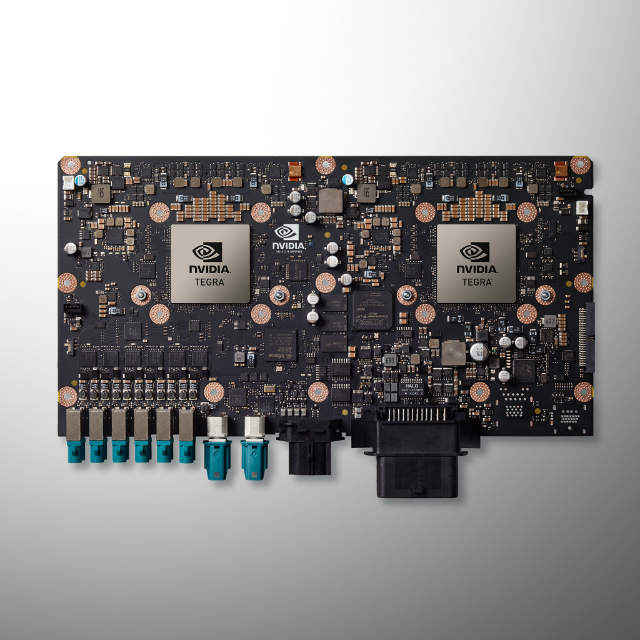

Working with deep learning algorithms and neural networks, vision processing units will process imaging data along with lidar and radar information to gauge the visual path that is to be taken. A VPU is like the visual cortex of a human brain, and instructs the drivetrain to come into operation, finally making the move. VPUs are also crucial for the multiple assistive functions, which all make use of a car’s sensors to relay as much information as possible to the central processing board.

Power management

With the human link now removed from cars, autonomous vehicles will necessarily require power management services to ensure that a car’s dynamics stay in shape. These power management systems will monitor the multiple computers on board a car, and play the crucial role of ensuring that every element at the system level gets an adequate share of power, keeps functioning properly and maintains the car’s performance.

An autonomous vehicle’s power management services will be the crucial heartbeat that governs the even distribution of power and the maintenance and working of systems, while reading heat levels of computing boards and ensuring everything remains in order. This oversight also extends to the battery, which will be the source of power for most autonomous vehicles.
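A very simplified picture of that monitoring role might look like the sketch below, which checks each computing board’s temperature and adds up the total power draw. The board names, the 85 °C limit and the 500 W budget are illustrative assumptions, not figures from any real vehicle, and `check_boards` is a hypothetical name.

```python
def check_boards(readings, max_temp_c=85.0, budget_w=500.0):
    """Summarise the health of the on-board computers.

    readings:   dict of board name -> (temperature in °C, power draw in W)
    max_temp_c: assumed temperature limit per board (illustrative)
    budget_w:   assumed total power budget (illustrative)
    """
    overheated = sorted(
        name for name, (temp_c, _) in readings.items() if temp_c > max_temp_c
    )
    total_draw_w = sum(watts for _, watts in readings.values())
    return {
        "overheated": overheated,
        "total_draw_w": total_draw_w,
        "over_budget": total_draw_w > budget_w,
    }


report = check_boards({
    "vision": (91.0, 180.0),   # running hot
    "central": (70.0, 120.0),
    "radar": (55.0, 40.0),
})
```

A real system would act on such a report, for instance by throttling a hot board, rather than just flagging it.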

The central board

Governing everything will be the central processing board, which reads input from maps, visuals, the processed visual data and power statistics, and routes every ounce of information to where it belongs. Everything is routed through the central processor of the autonomous car, which is what technology giants are aiming to perfect. This includes all the algorithms and the necessary software that will keep everything ticking. The likes of Google, Apple and others will ultimately look to provide the key software to run on standardised hardware, and that software will make for the heart of autonomous vehicles.