What powers a faster internet

Upgrades to the Internet's core network infrastructure promise wider access and faster connectivity

For everyone who grew up using it in India, the Internet hasn't been without its fair share of woes. Whether it was struggling to connect to a Yahoo chat room full of Harry Potter fans over a dial-up modem, or waiting for a much-awaited movie trailer to buffer on YouTube, our Internet experience has been full of frustrating memories and stuttering video streams.

Soon, however, many of the Internet demons we grew up with may finally be exorcised and made a thing of the past. Improving the Internet's underlying infrastructure isn't only in the interest of big Internet companies; it's also something users have been demanding for a while now. While we can't imagine life without the Internet and the many services that depend on it, remember that the Internet's core protocols are still largely the ones scientists designed back in the 1970s for a much smaller research network. By 21st-century standards, much of that technology is outdated. Surprising, isn't it?

Needless to say, a lot has changed since many of us got our first dose of the Internet in India (or around the world) back in the late 1980s and 1990s. Messaging and email were the big online activities of that time, but the digital world has moved on tremendously since then. Today, bandwidth-hungry traffic such as streaming video, backup and sync of data between the cloud and end devices, and online gaming is severely clogging our Internet pipes under the sea, on land and over the air. The biggest improvements since then have been more efficient router technology and faster wireless standards, but nothing fundamentally game-changing has happened to save the Internet from collapsing under the weight of its own largely outdated infrastructure.

With the world's appetite for the Internet only set to grow, Internet companies don't have a choice in the matter: someone somewhere has to innovate and solve the problem. And there are efforts underway to do just that. We may soon enter a new era of Internet connectivity bliss.

Akamai GIGA

If you're cursed by perennially buffering video feeds that seem to take an eternity and more to load, Akamai has a solution in the works that might be just what the doctor ordered. Managing a global network of over two lakh servers, Akamai operates one of the largest CDNs (content delivery networks) in the world right now. Companies such as Facebook, Adobe, Autodesk and several online entertainment portals use Akamai's server infrastructure to move huge volumes of data across the world very quickly. Not only that, Akamai also offers services such as local caching of content, especially for online video platforms, which significantly reduces buffering and video load times. What's more, Akamai thinks the Internet can function even more efficiently through a new technology it has developed and tested. It plans to give the Internet a massive dose of steroids, which could unlock a level of performance never seen before. Ever.
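
Akamai hasn't published how its caches work internally, so what follows is only a generic sketch of the edge-caching idea: a server close to the viewer keeps recently requested video segments and makes the slow trip back to the origin server only on a miss. The `EdgeCache` class, the `slow_origin` stand-in and the least-recently-used (LRU) eviction policy are all hypothetical choices for illustration.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Hypothetical CDN edge cache with least-recently-used (LRU) eviction."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        self.capacity = capacity                  # max number of cached objects
        self.fetch_from_origin = fetch_from_origin
        self.store = OrderedDict()                # url -> content, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self.store:
            # Cache hit: serve from the nearby edge and mark as most recently used.
            self.store.move_to_end(url)
            self.hits += 1
            return self.store[url]
        # Cache miss: fetch from the distant origin server and keep a copy.
        self.misses += 1
        data = self.fetch_from_origin(url)
        self.store[url] = data
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)        # evict the least recently used item
        return data


# Usage with a stand-in origin that records every request it receives.
origin_requests = []

def slow_origin(url: str) -> bytes:
    origin_requests.append(url)
    return f"<bytes of {url}>".encode()

cache = EdgeCache(capacity=2, fetch_from_origin=slow_origin)
for url in ["/video/seg1", "/video/seg1", "/video/seg2", "/video/seg1"]:
    cache.get(url)
print(cache.hits, cache.misses, origin_requests)
```

Only the first request for each segment travels to the origin; repeat viewers in the same region are served from the edge, which is where the reduction in buffering comes from.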

Akamai hasn't released many technical details of its GIGA protocol yet, but in tests it conducted across its global CDN servers, GIGA boosted Internet speeds for most recipients by as much as 30 per cent. In regions like India, China and Bolivia, data moved as much as 150 per cent faster thanks to GIGA, according to Akamai. These are significant numbers that could help evolve the basic infrastructure of the Internet as it functions today.

Just what exactly is GIGA? It's a new set of protocols with which Akamai aims to replace TCP completely. For those who don't know, TCP is the protocol on which most fundamental Internet communication currently takes place, and it has remained largely unchanged since it was first defined back in 1974. That's a whopping 42 years ago! GIGA proposes several improvements over TCP that can help transfer data over the Internet more efficiently. TCP is notorious for treating routes between two servers, or between a client and a server, as full or at capacity even when they're not, typically because it reads any lost packet as a sign of congestion. Also, whenever the user's Internet connection is unsteady, TCP connections are prone to stalling or dropping. According to Akamai, GIGA greatly reduces both of these inefficiencies, among other things.
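
Since GIGA's internals aren't public, the sketch below only illustrates the TCP behaviour described above. Classic TCP congestion control follows additive increase, multiplicative decrease (AIMD): the congestion window grows by roughly one segment per round trip and is halved whenever a packet is lost, because loss is taken as a sign of congestion. In this simplified model (no slow start, timeouts or fast recovery), a hypothetical link that is never actually congested but randomly drops 1 per cent of packets, say from radio interference, keeps the window far below what the link could carry.

```python
import random


def average_cwnd(rounds: int, capacity: int, loss_rate: float, seed: int = 1) -> float:
    """Average congestion window (in segments) under a simplified AIMD model.

    capacity  -- segments per round trip the link could carry (never congested here)
    loss_rate -- per-packet probability of a random, non-congestion loss
    """
    rng = random.Random(seed)                   # fixed seed for repeatable results
    cwnd = 1.0
    total = 0.0
    for _ in range(rounds):
        sent = int(cwnd)
        # Was any packet in this round trip lost (e.g. to radio noise)?
        lost = any(rng.random() < loss_rate for _ in range(sent))
        if lost:
            cwnd = max(1.0, cwnd / 2)           # multiplicative decrease: TCP assumes congestion
        else:
            cwnd = min(cwnd + 1, capacity)      # additive increase, capped at link capacity
        total += cwnd
    return total / rounds


print(f"no random loss: {average_cwnd(1000, 100, 0.0):.1f} segments per round trip")
print(f"1% random loss: {average_cwnd(1000, 100, 0.01):.1f} segments per round trip")
```

The link's capacity is identical in both runs; only the sender's interpretation of loss differs, which is the kind of misjudgement a replacement protocol would need to avoid.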

What's more, Akamai has no intention of keeping GIGA to itself: it plans to release the revamped protocol openly and hopes for broad adoption by both end-user devices and new server manufacturers. In fact, Akamai aims to combine GIGA with QUIC, a Google-developed protocol that is also geared towards speeding up the Internet.

Google QUIC

It doesn't take a genius to figure out why Google is taking the lead in trying to fix the Internet's fundamental architecture, given how much the search giant has at stake in its online business. Improving the Internet isn't just about ensuring Google's own success; it's about safeguarding the interests of the entire global online economy and spurring the next generation of innovative technology. Which is what QUIC is all about.

Short for Quick UDP Internet Connections, QUIC runs on top of UDP (User Datagram Protocol) instead of TCP (Transmission Control Protocol), the current backbone of Internet communications, so to speak. While both UDP and TCP have been core parts of the Internet protocol suite since its early days, they differ at a fundamental level. TCP requires the client and server to complete a handshake before any data flows, and it guarantees at the transport layer that every byte arrives intact and in order. UDP does away with these overheads, simply sending individual packets (datagrams) and leaving applications to handle reliability and ordering themselves, which allows for a leaner, speedier real-time connection than TCP.
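
The difference shows up directly in the standard sockets API. In this sketch, which runs entirely on the local machine (127.0.0.1) using only Python's built-in `socket` module, the TCP client has to `connect()`, which triggers the handshake, before it can send anything, while the UDP client just fires off a datagram with `sendto()` and no setup at all. The helper function names are illustrative.

```python
import socket


def tcp_send_once(message: bytes) -> bytes:
    """Send one message over TCP on localhost; connect() performs the handshake first."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))             # port 0: let the OS pick a free port
        server.listen(1)
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            client.connect(server.getsockname())  # SYN, SYN-ACK, ACK before any data
            conn, _ = server.accept()
            with conn:
                client.sendall(message)
                return conn.recv(1024)


def udp_send_once(message: bytes) -> bytes:
    """Send one UDP datagram on localhost; no connection is set up beforehand."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(2.0)                  # UDP gives no delivery guarantee
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
            sender.sendto(message, receiver.getsockname())  # fire and forget
            data, _ = receiver.recvfrom(1024)
            return data


print(tcp_send_once(b"hello over TCP"))
print(udp_send_once(b"hello over UDP"))
```

On a real network a UDP datagram can simply vanish and nothing resends it; QUIC adds acknowledgements and retransmission back in its own layer on top of UDP, which is what lets it redesign the handshake and congestion handling without changing the operating system's TCP stack.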

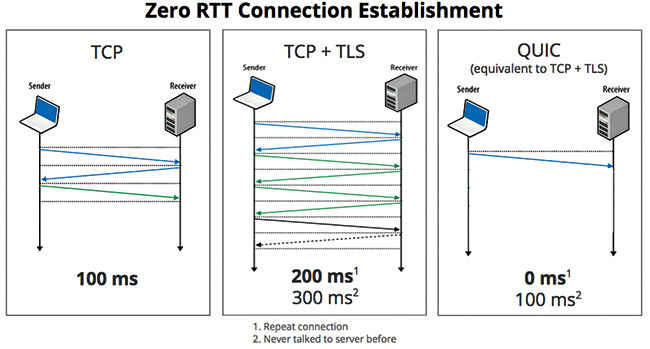

However, QUIC aims to solve both the transport-layer and application-layer problems encountered by sophisticated web applications. When you open a website in your browser or start a streaming video, you first see the whole “Waiting / Establishing connection” routine in the bottom corner of your browser. How long that takes depends partly on your connection speed, but it's also down to TCP's inefficiency: a secure TCP connection needs two to three round trips of handshaking between the client (user) and the server (destination) before it can start serving the requested data. With QUIC, a new connection needs only one round trip, while repeat connections to a server the browser has already talked to need none at all. Google calls this 0-RTT (zero round-trip time) connection setup, which means the page or video starts loading almost as soon as you hit Enter in the address bar or click a link. This is massive!
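
A back-of-the-envelope model shows why those handshake round trips matter most on slow, high-latency links. As a simplifying assumption, an HTTPS connection over TCP with TLS 1.2 spends three round trips on setup (one for TCP, two for TLS), a first QUIC connection spends one and a repeat QUIC connection spends zero; the request and its response then take one more round trip. Transfer time, server processing and packet loss are ignored, the latency figures are illustrative, and the function name is hypothetical.

```python
SETUP_ROUND_TRIPS = {
    "TCP + TLS 1.2": 3,          # TCP handshake (1) + full TLS 1.2 handshake (2)
    "QUIC, new": 1,              # transport and crypto handshake combined
    "QUIC, repeat (0-RTT)": 0,   # client reuses what it learned on an earlier visit
}


def time_to_first_byte_ms(rtt_ms: float, setup_round_trips: int) -> float:
    """Setup round trips plus one round trip for the request and its response."""
    return (setup_round_trips + 1) * rtt_ms


for rtt in (20, 300):  # e.g. a fast wired link vs a congested mobile link
    for name, trips in SETUP_ROUND_TRIPS.items():
        print(f"RTT {rtt:>3} ms  {name:<22} {time_to_first_byte_ms(rtt, trips):>6.0f} ms")
```

In this model the same handshake savings are worth only 20 to 60 ms on a 20 ms link but 300 to 900 ms on a 300 ms link, which is why the benefits show up mainly on slower networks.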

QUIC also mitigates TCP's age-old problem of misjudged congestion. When TCP wrongly assumes that the data path between a client and server is congested, even when it isn't, it slows data transmission to a trickle. QUIC feeds more information to its congestion control algorithm, reducing this kind of misreporting. Another TCP weakness is head-of-line blocking. When you watch a video, for instance, the data arrives as a series of packets. Over TCP, if any one of these packets is lost in transit, everything behind it has to wait until the missing packet is retransmitted and received successfully. QUIC closes this major gap by carrying several independent data streams over one connection at the same time (known as multiplexing), so a lost packet holds up only the stream it belongs to.
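
A toy model makes head-of-line blocking concrete. Three hypothetical streams (say, a page's HTML, CSS and an image) send packets that arrive interleaved, and packet `("css", 0)` is lost and only turns up at the end as a retransmission. The TCP-like receiver releases data in one global order, so everything behind the gap waits; the QUIC-like receiver keeps ordering per stream, so only the CSS stream waits. This illustrates the idea only and is not how either protocol is actually implemented.

```python
from typing import Dict, List, Tuple

Packet = Tuple[str, int]  # (stream name, index within that stream)

# Order in which the sender transmitted packets (global sequence = list index).
SENT: List[Packet] = [("html", 0), ("css", 0), ("img", 0),
                      ("html", 1), ("css", 1), ("img", 1)]

# ("css", 0) is lost on the first attempt and arrives last, as a retransmission.
ARRIVALS: List[Packet] = [("html", 0), ("img", 0), ("html", 1),
                          ("css", 1), ("img", 1), ("css", 0)]


def delivery_step_tcp(arrivals: List[Packet]) -> Dict[Packet, int]:
    """One ordered byte stream: a packet is released to the application only
    after every earlier-sent packet, from any stream, has arrived."""
    seq = {pkt: i for i, pkt in enumerate(SENT)}
    received, delivered, next_seq = set(), {}, 0
    for step, pkt in enumerate(arrivals):
        received.add(seq[pkt])
        while next_seq in received:
            delivered[SENT[next_seq]] = step
            next_seq += 1
    return delivered


def delivery_step_quic(arrivals: List[Packet]) -> Dict[Packet, int]:
    """Independent streams: a packet waits only for earlier packets of its own stream."""
    received: Dict[str, set] = {}
    next_index: Dict[str, int] = {}
    delivered: Dict[Packet, int] = {}
    for step, (stream, index) in enumerate(arrivals):
        received.setdefault(stream, set()).add(index)
        nxt = next_index.get(stream, 0)
        while nxt in received[stream]:
            delivered[(stream, nxt)] = step
            nxt += 1
        next_index[stream] = nxt
    return delivered


tcp, quic = delivery_step_tcp(ARRIVALS), delivery_step_quic(ARRIVALS)
for pkt in SENT:
    print(f"{pkt[0]:>4} #{pkt[1]}: TCP-like at arrival {tcp[pkt]}, QUIC-like at arrival {quic[pkt]}")
```

In the TCP-like case the HTML and image packets that arrived early sit idle until the lost CSS packet is recovered; in the QUIC-like case they reach the application as soon as they land.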

Google has been working on QUIC since 2013. Unbeknownst to many of us, Google has had QUIC support built into Chrome for a while now and has been testing its performance across a range of Google's browser-based services. On high-speed connections the gains aren't all that apparent: a 3 per cent improvement in serving highly complex search pages, for instance. However, the results are remarkable on slower networks (emerging markets, mobile cellular broadband and so on), where page load times dropped by more than a full second and YouTube videos streamed and buffered 30 per cent faster, meaning less stuttering on a poor connection, according to Google.

QUIC could be a game changer for speeding up the Internet, and the fact that Akamai's GIGA will potentially plug into it is a testament to its promise. Google plans to submit QUIC to the IETF (Internet Engineering Task Force), the body that standardises core Internet technologies, in the hope that it will be adopted as a standard protocol in the coming months. Meanwhile, Google has opened up QUIC's source code for anyone who wants to experiment with it further.

Shift to IPv6, DNSSEC, BCP-38

According to Vint Cerf, the “Father of the Internet” who co-invented the TCP/IP network protocols (pretty much the backbone of the Internet as it exists now), the Internet still hasn't widely adopted IPv6, version 6 of the Internet Protocol. If you recall, the world seemingly ran out of IPv4 addresses back in 2011 and began scrambling to shift over to IPv6. Why? Because an unprecedented number of devices were connecting to the Internet, something Vint Cerf didn't really expect back in 1974! IPv4 uses a 32-bit address scheme, which allows for roughly 4.3 billion unique IP addresses, while its successor IPv6 uses 128-bit addresses (written in hexadecimal), allowing for about 3.4 × 10^38 addresses.
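
The arithmetic behind those two figures can be checked with Python's standard `ipaddress` module: the entire IPv4 space (`0.0.0.0/0`) holds 2^32 addresses and the entire IPv6 space (`::/0`) holds 2^128. The sample address uses the documentation-only prefix 2001:db8::/32 and shows the hexadecimal notation, including the `::` shorthand for a run of zero groups.

```python
import ipaddress

ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses   # 2**32
ipv6_total = ipaddress.ip_network("::/0").num_addresses        # 2**128

print(f"IPv4: {ipv4_total:,} addresses")
print(f"IPv6: {ipv6_total:.2e} addresses")

# IPv6 addresses are eight groups of four hexadecimal digits;
# consecutive all-zero groups can be compressed to "::".
addr = ipaddress.ip_address("2001:0db8:0000:0000:0000:0000:0000:0001")
print(addr.exploded)
print(addr.compressed)
```

The first line prints 4,294,967,296 addresses and the second about 3.40e+38, matching the figures quoted above.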

Even five years after the IPv4 crunch, not enough is being done, according to Vint Cerf: only 4 to 6 per cent of Internet traffic currently runs over IPv6, which is woefully low. Among other things, IPv6 simplifies the processing of data packets in transit, which helps boost speeds. Security is another important issue troubling Vint Cerf, who believes Internet security standards should be raised by adopting DNSSEC (Domain Name System Security Extensions), which lets resolvers cryptographically verify that DNS answers are genuine. Wide-scale adoption of DNSSEC would make DNS spoofing far harder, among other things.
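
DNSSEC protects DNS answers with public-key signatures and links zones together in a chain of trust. One link of that chain can be sketched with the standard library alone: as specified in RFC 4034, a parent zone publishes a DS record holding a digest of the child zone's key (its DNSKEY record), and a validating resolver recomputes that digest to confirm the key it was served is genuine. The sketch computes a SHA-256 (digest type 2) DS digest; the key bytes are made up, `example.com` is a documentation domain, and the signature checks that complete real validation are left out.

```python
import hashlib
import struct


def wire_name(name: str) -> bytes:
    """Encode a domain name in canonical DNS wire format (lowercase labels)."""
    labels = [label for label in name.rstrip(".").lower().split(".") if label]
    out = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels)
    return out + b"\x00"                            # terminating root label


def dnskey_rdata(flags: int, protocol: int, algorithm: int, public_key: bytes) -> bytes:
    # DNSKEY RDATA: Flags (16 bits) | Protocol (8 bits) | Algorithm (8 bits) | Public key
    return struct.pack("!HBB", flags, protocol, algorithm) + public_key


def ds_digest_sha256(owner: str, rdata: bytes) -> str:
    """DS digest type 2: SHA-256 over the owner name followed by the DNSKEY RDATA."""
    return hashlib.sha256(wire_name(owner) + rdata).hexdigest()


def key_is_trusted(owner: str, served_key: bytes, ds_from_parent: str) -> bool:
    return ds_digest_sha256(owner, served_key) == ds_from_parent


# Hypothetical key-signing key (flags 257, protocol 3, algorithm 13) with fake key bytes.
child_key = dnskey_rdata(257, 3, 13, b"\x01" * 64)
# The parent zone (.com) would publish this value in its DS record for example.com.
parent_ds = ds_digest_sha256("example.com.", child_key)
# An attacker substitutes a different key in a spoofed answer.
forged_key = dnskey_rdata(257, 3, 13, b"\x02" * 64)

print(key_is_trusted("example.com.", child_key, parent_ds))
print(key_is_trusted("example.com.", forged_key, parent_ds))
```

A forged key produces a different digest, so the resolver rejects it; with real signatures on top, the same check lets a resolver trace trust from the root zone down to the answer it received.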

Apart from DNSSEC, another IETF recommendation that needs to be implemented Internet-wide is BCP-38, also known as Network Ingress Filtering, which would go a long way towards making the denial-of-service (DoS and DDoS) attacks that rely on spoofed source IP addresses, currently rampant on the Internet, a thing of the past. When Vint Cerf speaks about the Internet, the world listens. Let's hope that holds true in this case.
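
The core idea of ingress filtering fits in a few lines: at the edge of its network, a provider checks that every packet arriving from a customer carries a source address the customer was actually assigned, and drops anything else. The prefix below is a documentation range (203.0.113.0/24) standing in for a real customer allocation, and `ingress_permits` is a hypothetical illustration; in practice the check is configured on routers as access lists or reverse-path filtering.

```python
import ipaddress

# Hypothetical customer port: the ISP assigned this customer 203.0.113.0/24.
ASSIGNED_PREFIXES = [ipaddress.ip_network("203.0.113.0/24")]


def ingress_permits(source_ip: str, assigned=ASSIGNED_PREFIXES) -> bool:
    """Forward a packet from this customer only if its source address lies
    inside a prefix actually assigned to that customer."""
    src = ipaddress.ip_address(source_ip)
    return any(src in net for net in assigned)


for src in ("203.0.113.25", "198.51.100.7"):
    verdict = "forward" if ingress_permits(src) else "drop (spoofed source)"
    print(f"{src:<15} -> {verdict}")
```

Spoofed packets are stopped at the network they originate from, before they can be used to disguise or amplify an attack elsewhere, which is why BCP-38 only works well when it is deployed widely.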

Ericsson’s Gigabit LTE solution rollout

It's well known that most of the world's population that isn't yet online will connect to the Internet over a cellular smartphone network. To ensure smooth delivery of a rich variety of Internet applications and interactive media, not only does cellular bandwidth need to increase, but so does access speed. It's high time high-speed data networks were rolled out. While the maximum theoretical download speed supported over existing 3G networks is 56Mbps, even the most advanced versions of 4G LTE are ideally capped at 300Mbps. But that headroom is set to increase very shortly, thanks to new advancements proposed and rolled out by Ericsson, a company that enjoys over 30 per cent share of the world's wireless communications market and has several thousand patents to its name.

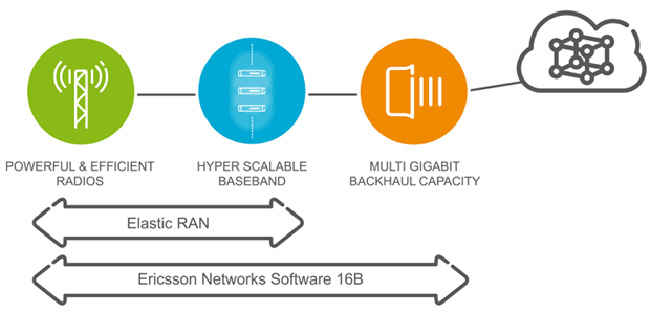

In February 2016, Ericsson publicly released a set of network and software enhancements which it claims will boost the uplink and downlink performance of indoor commercial 4G LTE networks by several notches. Ericsson's answer to congested wireless networks, called Ericsson Networks Software 16A, is made up of four features: 4×4 MIMO on 20 MHz and high bands, three component carrier aggregation (3CC), 256 Quadrature Amplitude Modulation (256-QAM) and Ericsson Lean Carrier.

The 4×4 MIMO (multiple-input, multiple-output) setup uses four antennas at each end to carry up to four parallel data streams, significantly increasing the number of uplink and downlink streams the radio can support, while 3CC combines three separate carriers into one wider pipe, boosting the 4G LTE network's bitrate and bandwidth. The 256-QAM modulation scheme lets the modem pack 8 bits into every symbol instead of the 6 bits of 64-QAM, increasing throughput on any given radio frequency, while Ericsson Lean Carrier strives to keep signal interference to the absolute minimum. Ericsson claims its new set of network infrastructure enhancements should increase uplink speeds by 200 per cent and downlink speeds by at least 30 per cent. In tests conducted by Ericsson with Australian telco Telstra, the companies achieved what they describe as the world's first peak download rate of 600Mbps! Ericsson is also rolling out IoT Network Software 16B, aimed at the IoT market, which among other things includes signal enhancements designed to minimise the battery impact on endpoint devices and sensors. Improved indoor GSM coverage promises a 20dB signal improvement so that it reaches deeply embedded devices, too. All of these are important milestones on the road to 5G, which is scheduled to arrive by 2020.
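
These features multiply rather than add. As a rough, idealised rule of thumb that ignores coding and signalling overheads, peak throughput scales with the number of aggregated carriers, the number of MIMO spatial layers and the bits carried per symbol. The sketch below starts from an assumed baseline of 150 Mbps for one 20 MHz carrier with 2x2 MIMO and 64-QAM (the LTE Category 4 peak rate); it is not Ericsson's own calculation, and the article doesn't say which combination of features produced the 600Mbps Telstra result.

```python
import math

# Assumed baseline: one 20 MHz LTE carrier, 2x2 MIMO, 64-QAM (LTE Category 4 peak).
BASE_MBPS = 150.0
BASE_LAYERS = 2
BASE_QAM = 64


def peak_mbps(carriers: int, mimo_layers: int, qam_order: int) -> float:
    """Idealised peak rate, scaled by carriers, spatial layers and bits per symbol."""
    bits_per_symbol = math.log2(qam_order)     # 64-QAM: 6 bits, 256-QAM: 8 bits
    base_bits = math.log2(BASE_QAM)
    return (BASE_MBPS * carriers
            * (mimo_layers / BASE_LAYERS)
            * (bits_per_symbol / base_bits))


print(f"baseline:                   {peak_mbps(1, 2, 64):.0f} Mbps")
print(f"+ 256-QAM:                  {peak_mbps(1, 2, 256):.0f} Mbps")
print(f"+ 3CC:                      {peak_mbps(3, 2, 256):.0f} Mbps")
print(f"+ 4x4 MIMO on all carriers: {peak_mbps(3, 4, 256):.0f} Mbps")
```

In this idealised model, 256-QAM alone adds a third (8 bits instead of 6 per symbol), three carriers triple that, and doubling the spatial layers doubles it again; real networks land below these ceilings.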

This article was first published in the March 2016 issue of Digit magazine.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.