Sapient’s RNN AI model aims to surpass ChatGPT and Gemini: Here’s how

In the fast-moving AI industry, there’s been an interesting development: Singapore-based startup Sapient Intelligence has closed its seed funding round, securing $22 million at a valuation of $200 million. The round, led by investors including Vertex Ventures, Sumitomo Group, and JAFCO, is primarily meant to advance Sapient’s mission of addressing the limitations of current AI models like OpenAI’s ChatGPT, Google’s Gemini and Meta’s Llama.

That’s right. A new AI startup wants to challenge popular incumbents like OpenAI, Meta, Google and others with a more powerful, more comprehensive AI foundation model that better understands human nuance and has longer-lasting memory.

An AI framework based on neuroscience

Today’s mainstream AI models, including GPT-4 and Gemini, primarily utilise transformer architectures that generate predictions by sequentially building upon prior outputs. Yes, this is the same architecture introduced in the 2017 research paper ‘Attention Is All You Need’, which experts all over the world consider a landmark moment in NLP and the spark that kickstarted the era of ChatGPT.

While effective for various tasks, this autoregressive method often encounters challenges with complex, multi-step reasoning, leading to issues like hallucinations – instances where the model produces incorrect or nonsensical information.
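
To make the autoregressive idea concrete, here is a minimal Python sketch. It is an illustration under loud assumptions: the `NEXT_TOKEN` lookup table and the `generate` function are hypothetical stand-ins for a trained model, and a real model conditions on the whole preceding text rather than only the last token. The point it shows is simply that each output becomes the input for the next step, so one early slip changes everything that follows.

```python
# Hypothetical lookup table standing in for a trained language model.
# Assumption: the next token depends only on the previous one.
NEXT_TOKEN = {
    "<start>": "the",
    "the": "cat",
    "cat": "sat",
    "sat": "down",
    "down": "<end>",
    "dog": "ran",
    "ran": "away",
    "away": "<end>",
}


def generate(table, max_tokens=10):
    """Greedy autoregressive decoding: each step builds on the prior output."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        next_token = table.get(tokens[-1], "<end>")
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(generate(NEXT_TOKEN))  # ['the', 'cat', 'sat', 'down']

# Simulate a single wrong prediction early in the sequence.
corrupted = dict(NEXT_TOKEN, the="dog")
print(generate(corrupted))  # ['the', 'dog', 'ran', 'away']
```

In the second run only one prediction was altered, yet every later token differs. That compounding effect is one reason long, multi-step tasks are hard for this style of generation.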

Austin Zheng, co-founder and CEO of Sapient Intelligence, elaborated on these challenges in a recent interview with VentureBeat, stating, “With current models, they’re all trained with an autoregressive method… It has a really good generalization capability, but it’s really, really difficult for them to solve complicated and long-horizon, multi-step tasks.”

To overcome these limitations, Sapient is developing a novel AI architecture that draws heavily on neuroscience and mathematics. According to Sapient, the new design integrates transformer components with recurrent neural network (RNN) structures to emulate human cognitive processes. Zheng explained, “The model will always evaluate the solution, evaluate options and give itself a reward model based on that… [It] can continuously calculate something recurrently until it gets to a correct solution.”

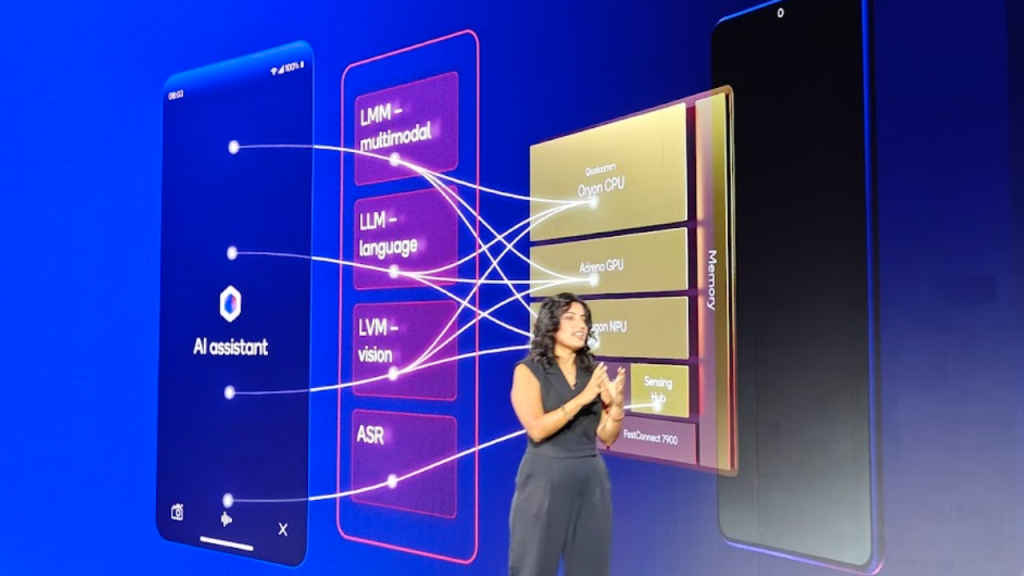

Recurrent Neural Networks (RNNs) process input sequentially, maintaining a hidden state that captures information from previous inputs. This makes them well suited to tasks where order is crucial, such as time-series forecasting. However, the same sequential processing makes it hard for RNNs to capture long-range dependencies, owing to fundamental issues like the vanishing gradient problem.

This is where transformers help. Transformer-based models utilise a self-attention mechanism, which lets them process all elements of the input sequence in parallel and relate distant elements directly, without stepping through the sequence one element at a time. This parallel processing enables transformers to handle long-range dependencies more efficiently, making them particularly effective in tasks like language translation, where understanding the context across an entire sentence is essential.
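
The contrast can be sketched in a few lines of Python. This is a toy under stated assumptions, not any production architecture: the inputs are single numbers rather than vectors, the weights `w_in` and `w_rec` are arbitrary fixed values rather than learned ones, and the function names `rnn_final_state` and `attention_weights` are made up for illustration. The attention sketch also leaves out positional information, which real transformers add.

```python
import math


def rnn_final_state(inputs, w_in=0.5, w_rec=0.5):
    """Process inputs one at a time, carrying a hidden state forward."""
    hidden = 0.0
    for x in inputs:
        # The new state mixes the current input with the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden)
    return hidden


def attention_weights(inputs, query_index):
    """Softmax weights one position assigns to every position in the sequence."""
    query = inputs[query_index]
    scores = [query * key for key in inputs]
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - max_score) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]


# RNN: a signal at the first step, followed by 20 steps of silence.
faded = rnn_final_state([1.0] + [0.0] * 20)
print(f"RNN state after 1 step:   {rnn_final_state([1.0]):.4f}")
print(f"RNN state after 21 steps: {faded:.8f}")

# Attention: the last position looks at the first one directly.
tokens = [1.0] + [0.0] * 19 + [1.0]
weights = attention_weights(tokens, query_index=len(tokens) - 1)
print(f"Weight on first position: {weights[0]:.4f}")
print(f"Weight on last position:  {weights[-1]:.4f}")
```

In the RNN, the early signal is squeezed through the same small multiplication at every step and all but disappears by the end. The same repeated shrinking affects gradients during training, which is the vanishing gradient problem. In the attention sketch, the first and last positions receive equal weight even though 19 elements sit between them, because distance plays no role in the score. The loops here are for readability; in practice these scores are computed as matrix operations, which is what makes the parallelism possible.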

As a loose analogy, think of RNNs as a CPU core working through tasks one after another, and transformers as GPUs, which are great at parallel processing. Used in tandem, the two can offset each other’s weaknesses, which is what Sapient is attempting to build into its AI model.

Sapient’s approach combines transformer components with recurrent neural network structures, which, the company says, allows the model to evaluate its own solutions, consider various options, and assign rewards based on outcomes – similar to cognitive processes observed in humans. When presented with a problem, we humans also try to solve it by weighing multiple options as solutions and rewarding ourselves with a dopamine hit whenever we choose the right solution, right?

By emulating these brain functions, Sapient claims its model can iteratively refine its outputs until achieving accurate solutions, thereby improving its ability to handle complex, multi-step tasks. This brain-inspired design aims to create more adaptable and efficient AI systems, reflecting a broader trend in the AI research field where insights from neuroscience are informing the development of advanced AI models with greater capabilities.
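
The general idea of evaluating options, scoring them and repeating until the answer is right can be sketched with a simple search loop. To be clear, this is not Sapient’s architecture, whose details the company has not laid out here. It is a generic hill-climbing toy in which a hand-written `reward` function stands in for a learned reward model, and the task (putting a short list in order) is chosen only because it is easy to check. All function names are hypothetical.

```python
def reward(candidate):
    """Higher is better: 0 means no pair of items is out of order."""
    n = len(candidate)
    inversions = sum(
        1
        for i in range(n)
        for j in range(i + 1, n)
        if candidate[i] > candidate[j]
    )
    return -inversions


def options(candidate):
    """Every candidate reachable by swapping one adjacent pair."""
    result = []
    for i in range(len(candidate) - 1):
        swapped = list(candidate)
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
        result.append(swapped)
    return result


def refine(candidate, max_steps=100):
    """Keep picking the best-scoring option until the reward signals success."""
    steps = 0
    while reward(candidate) < 0 and steps < max_steps:
        candidate = max(options(candidate), key=reward)
        steps += 1
    return candidate, steps


solution, steps = refine([3, 1, 4, 2])
print(solution, steps)  # [1, 2, 3, 4] 3
```

The loop does not produce its answer in one pass. It proposes alternatives, scores each one, keeps the best and goes around again, stopping only when the reward says the solution is correct or the step budget runs out.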

Future of Sapient’s new AI model

What’s more, according to Sapient, its AI architecture is designed to enhance the foundation model’s flexibility and precision, which the company hopes will allow it to tackle a broad range of tasks with greater reliability. This approach isn’t too dissimilar from emerging reasoning models from industry leaders, such as OpenAI’s o1 series and Google’s Gemini 2.0. According to a company spokesperson, Sapient’s model has demonstrated superior performance in benchmarks such as Sudoku, achieving 95% accuracy without relying on intermediate tools or data – which is being hailed as a significant advancement over existing neural networks.

The company is also focusing on real-world applications, including autonomous coding agents and robotics. For instance, Sapient is deploying an AI coding agent within Sumitomo’s enterprise environment to learn and contribute to the company’s codebase. Unlike some existing models that require human oversight, Sapient’s agents aim to operate autonomously, continuously learning and improving through trial and error. AI agents are incoming!

Sapient’s advancements reflect a broader industry trend towards developing AI systems capable of complex reasoning and planning. OpenAI’s recent release of the o1 model, described as the “smartest model in the world,” signifies a shift from prediction-based models to those emphasising reasoning capabilities. Similarly, Google’s Gemini 2.0 aims to perform tasks and interact like a virtual personal assistant, showcasing improved multimodal capabilities.

These developments suggest that the AI industry is moving towards more autonomous and adaptable systems, capable of handling intricate tasks with minimal human intervention. Sapient’s approach, combining insights from machine learning, neuroscience, and mathematics, positions it as another player making its move in the rapidly evolving AI landscape.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.