Rise of NPU: The most important ingredient of AI PCs

A little over a decade ago, if you had mentioned Neural Processing Units (NPUs) at a tech conference or otherwise, you’d likely have been met with blank stares or polite nods at best. Fast forward to today, and NPUs have become a staple in the silicon architecture of almost every major chip maker, from Intel to NVIDIA, Qualcomm to MediaTek, and even Samsung. So, what changed? Why are NPUs now a critical component in our devices, and what does this mean for the future?

Let’s rewind to the early 2010s. The tech landscape was dominated by CPUs and GPUs, with the former handling general-purpose computing tasks and the latter excelling in parallel processing, crucial for gaming and graphics-intensive applications. But as artificial intelligence (AI) started to permeate every facet of personal technology, the limitations of the CPU-GPU duopoly became apparent. Enter NPUs, specialised processors designed to efficiently run and accelerate machine learning tasks.

Inspired by the human brain, the concept of NPUs arose from the need for specialised hardware to handle the demands of artificial neural networks. Think of the NPU as a highly specialised chef in a restaurant kitchen. While the CPU is the head chef, overseeing and managing all tasks, and the GPU is the sous-chef, best suited for handling and executing several large-scale tasks in the kitchen, the NPU is like the pastry chef, meticulously focused on creating the perfect dessert with a creative touch. Each chef plays a crucial role, but the NPU’s specialised skills make it indispensable for specific tasks like AI.

In fact, the trajectory of NPUs mirrors the rise of AI. As AI moved from research labs to everyday applications, the demand for efficient processing of AI tasks skyrocketed. The initial wave of AI applications, like image and voice recognition, required immense computational power. Traditional processors were not optimised for these tasks, which meant inefficiency, higher power consumption and higher operating costs.

For example, consider the rise of voice assistants like Siri, Alexa, and Google Assistant. These services rely heavily on natural language processing (NLP), a subset of AI that interprets and responds to human speech. Early versions of these assistants were sluggish, often taking several seconds to process a request. With the integration of NPUs, these tasks became significantly faster and more efficient, allowing real-time interactions that felt more natural and intuitive.

Smartphones are another perfect example. Released in 2017, the iPhone X featured Apple’s A11 Bionic chip, which included a dedicated “Neural Engine.” This NPU was specifically designed to accelerate AI tasks, enabling features like Face ID and Animoji to run near-instantly. Similarly, Google’s Pixel 2 and Pixel 3 phones used the Pixel Visual Core, a dedicated image-processing co-processor that also accelerated machine learning, to deliver stunning photos through advanced computational photography techniques.

In the broader tech ecosystem, NPUs have revolutionised various industries. Autonomous vehicles rely on NPUs to process data from sensors and cameras in real time, making split-second decisions critical for safety. Healthcare applications use NPUs to analyse medical images, assisting doctors in diagnosing conditions with higher accuracy. Even in entertainment, NPUs power recommendation engines on streaming platforms, tailoring content suggestions to individual preferences. From relative obscurity, NPUs are now driving the on-device AI boom, a fact that hasn’t been lost on chip and device manufacturers aiming to dominate the AI PC market.

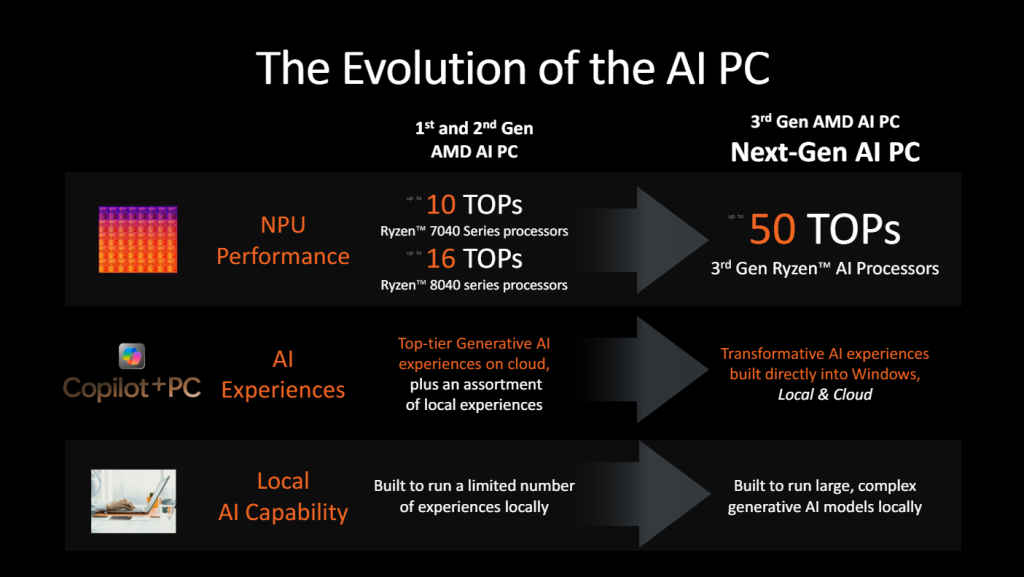

Just one look at the announcements from AMD, Intel, MediaTek and Qualcomm at Computex 2024 tells you everything about the heightened role of the NPU inside chips being made for AI PCs and other smart devices. AMD rates the XDNA2 NPU in its Zen 5-based chip at 50 TOPS (trillions of operations per second), higher than the 48 TOPS claimed for the NPU in MediaTek’s Dimensity 9300+ and the 45 TOPS offered by Qualcomm’s Hexagon NPU in the Snapdragon X Elite. Intel, meanwhile, is adding a new twist to the NPU race.

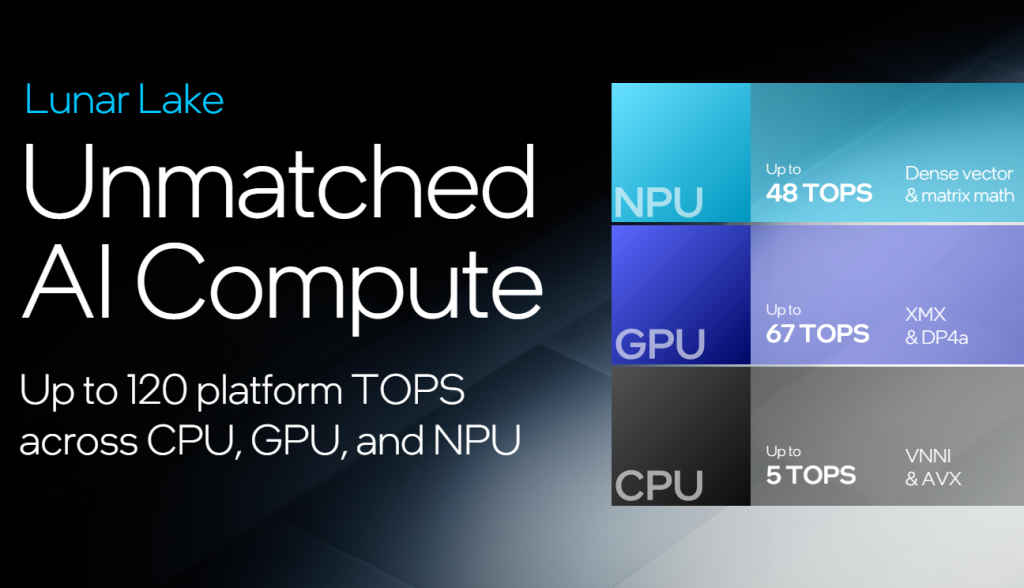

Intel’s NPU alone is rated at 48 TOPS, level with MediaTek’s, higher than Qualcomm’s but lower than AMD’s. Intel, however, emphasises a combined AI performance figure that adds in the GPU (67 TOPS) and CPU (5 TOPS) for a total of 120 TOPS, exceeding both AMD’s and Qualcomm’s offerings and aiming for a more holistic AI solution at the chip level. All of these NPUs clear the bar Microsoft has set for Copilot+ PCs, which requires an NPU capable of at least 40 TOPS of AI compute. From a broader perspective, the competition between these chipmakers highlights the growing importance of AI capabilities on various end-user computing platforms.
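For readers who like to see the arithmetic, here is a minimal Python sketch of the comparison above. It uses only the TOPS figures quoted in this column; the dictionary labels and the helper names `meets_copilot_plus` and `platform_total` are made up for illustration, and the numbers are vendor claims rather than measured results.

```python
# TOPS figures as quoted in this column (vendor claims, not benchmarks).
COPILOT_PLUS_MIN_NPU_TOPS = 40  # Copilot+ NPU threshold cited above

# Labels are illustrative shorthand, not official product names.
npu_tops = {
    "AMD XDNA2": 50,
    "Intel NPU": 48,
    "MediaTek Dimensity 9300+ NPU": 48,
    "Qualcomm Hexagon (Snapdragon X Elite)": 45,
}

# Intel's combined figure adds GPU and CPU throughput to the NPU's.
intel_platform_tops = {"NPU": 48, "GPU": 67, "CPU": 5}


def meets_copilot_plus(npu: int, minimum: int = COPILOT_PLUS_MIN_NPU_TOPS) -> bool:
    """Return True if an NPU rating reaches the Copilot+ threshold."""
    return npu >= minimum


def platform_total(parts: dict) -> int:
    """Sum the per-engine TOPS figures into one platform-level number."""
    return sum(parts.values())


# Rank the NPUs from highest to lowest claimed TOPS.
for name, tops in sorted(npu_tops.items(), key=lambda kv: kv[1], reverse=True):
    verdict = "meets" if meets_copilot_plus(tops) else "misses"
    print(f"{name}: {tops} TOPS ({verdict} the {COPILOT_PLUS_MIN_NPU_TOPS} TOPS bar)")

print(f"Intel combined (NPU + GPU + CPU): {platform_total(intel_platform_tops)} TOPS")
```

Note that the Copilot+ threshold applies to the NPU figure alone, so the combined 120 TOPS number is a separate, platform-level claim and not a substitute for the NPU rating.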

Not to forget NVIDIA and Samsung, who are also playing a decisive role in this broader industry shift towards AI-centric computing. These companies recognise that the future of technology hinges on AI, and NPUs are the key to unlocking its full potential. By embedding NPUs into their SoCs, they ensure that devices are equipped to handle the growing demands of AI applications, from edge computing to cloud services. As a result, NPUs are at the heart of every conversation, from AI-powered smartphones to the future of AI PCs.

This leaves no doubt in my mind that the evolution of NPUs is only going to accelerate. As AI models become more complex, the need for even more specialised and powerful NPUs will grow. We can expect future NPUs to offer greater efficiency, lower power consumption, and enhanced capabilities that we can only imagine for now. Innovations in chip design, such as integrating NPUs with other specialised processors like Tensor Processing Units (TPUs), will further propel AI advancements.

At the same time, the democratisation of AI tools and frameworks means that NPUs will become accessible to a broader range of developers and applications. In turn, as NPU adoption grows, there will be an increased push for standardisation across different chip makers and OEMs. This would allow developers to create AI applications that can run seamlessly on various devices with NPUs, fostering a more open and collaborative AI ecosystem.

Imagine a world where every device, from your smartwatch to your refrigerator, is equipped with a capable NPU, seamlessly processing AI tasks to offer smarter, more intuitive user experiences. Or picture an AI PC releasing in 2024, with its CPU and GPU reserved for general-purpose compute and graphics-intensive work, while the NPU does all the AI heavy lifting. Whether it’s AMD’s focus on raw NPU performance, Qualcomm’s slightly better performance-per-watt (exceeding that of even the Apple M3 MacBook Pro) or Intel’s combined approach, the future seems to hold increasingly intelligent features seamlessly integrated into our devices, with chip makers and developers together pushing the boundaries of AI possibilities.

This column was originally published in the June 2024 issue of Digit magazine.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.