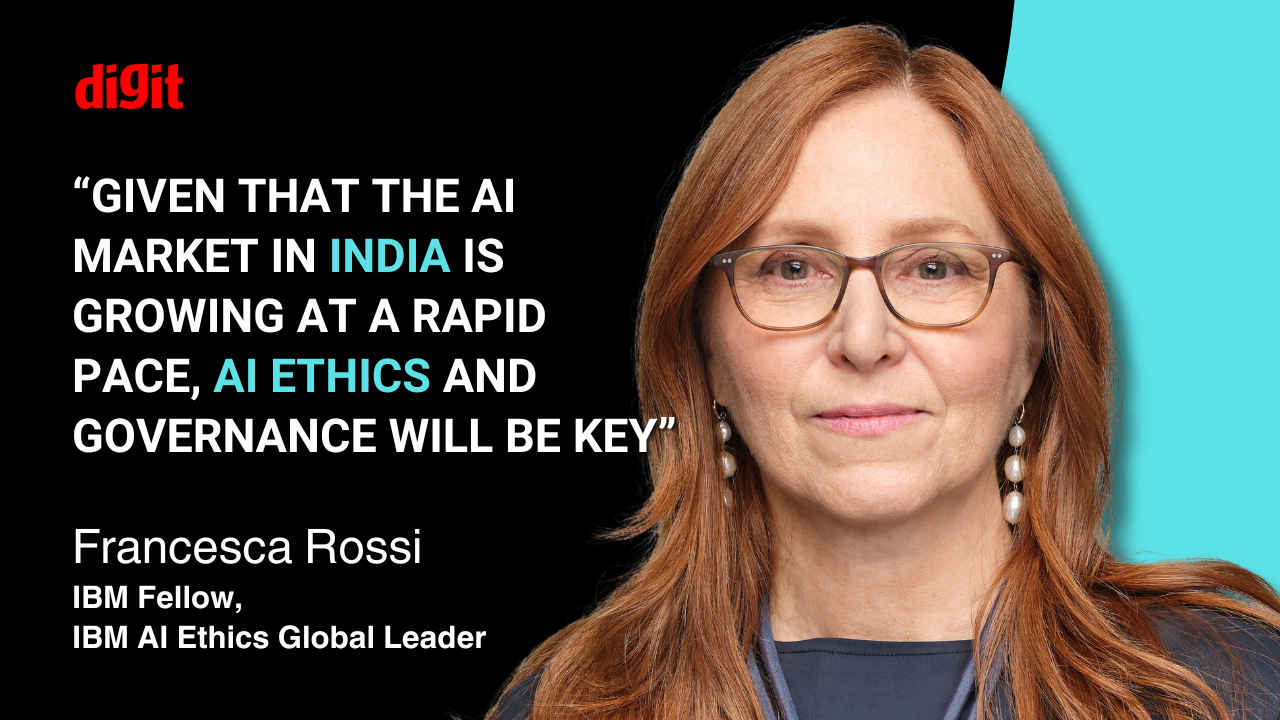

Navigating the Ethical AI maze with IBM’s Francesca Rossi

At a time when artificial intelligence is no longer the stuff of science fiction but an integral part of our daily lives, the ethical implications of AI deployments have moved from theoretical academic debates to pressing real-world concerns. As AI systems become more embedded in every aspect of our phygital existence, the question is no longer what AI can do, but what it should do responsibly. I had the opportunity to interview Francesca Rossi, IBM Fellow and Global Leader for AI Ethics at IBM, to delve into these complex issues.

Francesca Rossi is no stranger to the ethical quandaries posed by AI. With over 220 scientific articles under her belt and leadership roles in organisations like AAAI and the Partnership on AI, she’s at the forefront of shaping how we think about AI ethics today and of building AI we can all trust.

Ethical challenges of rapid AI growth

“AI is growing rapidly – it’s being used in many services that consumers interact with today. That’s why it’s so important to address the ethical challenges that AI can bring up,” Rossi started off. She highlighted the critical need for users to trust AI systems, emphasising that trust hinges on explainability and transparency.

“For users, it’s important from an ethical standpoint to be able to trust the recommendations of an AI system. Achieving this needs AI explainability and transparency,” she said. But trust isn’t the only concern. Rossi pointed out that data handling, privacy, and protecting copyrights are also significant ethical challenges that need to be tackled head-on.

When asked how IBM defines ‘Responsible AI,’ Rossi detailed a comprehensive framework that goes beyond mere principles to include practical implementations.

“IBM built a very comprehensive AI ethics framework, which includes both principles and their implementations, with the goal to guide the design, development, deployment, and use of AI inside IBM and for our clients,” she explained.

The principles are straightforward yet profound, according to Rossi:

- The purpose of AI is to augment human intelligence.

- Data and insights belong to their creator.

- New technology, including AI systems, must be transparent and explainable.

But principles alone aren’t enough. Rossi emphasised the importance of turning these principles into action: “The implementation of these principles includes risk assessment processes, education and training activities, software tools, developers’ playbooks, an integrated governance program, research innovation, and a centralised company-wide governance in the form of an AI ethics board.”

IBM’s commitment to open and transparent innovation is also evident. “We’ve released our family of Granite models to the open-source community under an Apache 2.0 licence for broad, unencumbered commercial usage, along with tools to monitor the model data – ensuring it’s up to the standards demanded by responsible enterprise applications,” Rossi added.

Collaboration with policymakers is key

The role of policymakers in AI ethics is a hot topic, and Rossi believes that collaboration between companies and governments is crucial.

“As a trusted AI leader, IBM sees a need for smart AI regulation that provides guardrails for AI uses while promoting innovation,” she said. IBM is urging governments globally to focus on risk-based regulation, prioritise liability over licensing, and support open-source AI innovation.

“While there are many individual companies, start-ups, researchers, governments, and others who are committed to open science and open technologies, more collaboration and information sharing will help the community innovate faster and more inclusively, and identify specific risks, to mitigate them before putting a product into the world,” Rossi emphasised.

One might wonder how these high-level principles translate into practical measures within IBM’s AI systems. Rossi provided concrete examples: “IBM has developed practitioner-friendly bias mitigation approaches, proposed methods for understanding differences between AI models in an interpretable manner, studied maintenance of AI models from the robustness perspective, and created methods for understanding the activation space of neural networks for various trustworthy AI tasks.”

She also mentioned that IBM has analysed adversarial vulnerabilities in AI models and proposed training approaches to mitigate such vulnerabilities. “We made significant updates to our AI Explainability 360 toolkit to support time series and industrial use cases, and have developed application-specific frameworks for trustworthy AI,” she added.

AI innovation within ethical boundaries

A common concern is whether strict ethical guidelines stifle innovation. On the contrary, Rossi sees ethics as an enabler rather than a hindrance. “AI can drive tremendous progress for business and society – but only if it’s trusted,” she stated.

She cited IBM’s annual Global AI Adoption Index, noting that while 42% of enterprise-scale companies have deployed AI, 40% are still exploring or experimenting without deployment. “Ongoing challenges for AI adoption in enterprises remain, including hiring employees with the right skill sets, data complexity, and ethical concerns,” Rossi said. “Companies must prioritise AI ethics and trustworthy AI to successfully deploy the technology and encourage further innovation.”

Building AI systems that prioritise ethical considerations is no small feat. Rossi acknowledged the hurdles: “We see a large percentage of companies stuck in the experimentation and exploration phase, underscoring a dramatic gap between hype around AI and its actual use.”

She pointed out that challenges like the skills gap, data complexity, and AI trust and governance are significant barriers. “IBM’s annual Global AI Adoption Index recently found that while around 85% of businesses agree that trust is key to unlocking AI potential, well under half are taking steps towards truly trustworthy AI, with only 27% focused on reducing bias,” she noted.

To address these challenges, IBM launched watsonx, an enterprise-ready AI and data platform. “It accelerates the development of trusted AI and provides the visibility and governance needed to ensure that AI is used responsibly,” Rossi explained.

India’s role in shaping global AI ethics

India is rapidly emerging as a major player in AI innovation, and Rossi believes the country has a significant role to play in shaping global AI ethics and governance.

“Given that the AI market in India is growing at a rapid pace, with some estimates suggesting it is growing at a CAGR of 25-35% and expected to reach 17 billion USD by 2027, AI ethics and governance will be key as the market continues to develop,” she said.

She highlighted recent initiatives like the Global IndiaAI Summit 2024 conducted by the Ministry of Electronics and Information Technology (MeitY), which focused on advancing AI development in areas like compute capacity, foundational models, datasets, application development, future skills, startup financing, and safe AI.

With India’s growing talent pool in AI and data science, education and training in AI ethics are paramount. Rossi mentioned that IBM researchers in India are focused on AI ethical challenges across IBM’s three labs in the country: IBM Research India, IBM India Software Labs, and IBM Systems Development Labs.

“These labs are closely aligned to our strategy, and their pioneering work in AI, Cloud, Cybersecurity, Sustainability, Automation is integrated into IBM products, solutions, and services,” she said.

Future of AI ethics

Looking ahead, Rossi is optimistic but cautious about the evolution of AI ethics over the next decade. “Investing in AI ethics is crucial for long-term profitability, as ethical AI practices enhance brand reputation, build trust, and ensure compliance with evolving regulations,” she asserted.

IBM is actively building a robust ecosystem to advance ethical, open innovation around AI. “We recently collaborated with Meta and more than 120 other open-source leaders to launch the AI Alliance, a group whose mission is to build and support open technology for AI and the open communities that will enable it to benefit all of us,” Rossi shared.

As AI becomes increasingly interconnected and embedded in our lives, new ethical challenges will arise. Rossi highlighted the importance of focusing on trust in the era of powerful foundation models.

“In keeping with our focus on trustworthy AI, IBM is developing solutions for the next challenges such as robustness, uncertainty quantification, explainability, data drift, privacy, and concept drift in AI models,” she said.

The TL;DR version of my interview with IBM’s Francesca Rossi underscores one fundamental truth: ethical considerations in AI are not optional. As Rossi aptly put it, “These considerations are not in opposition to profit but are rather essential for sustainable success.”

As AI’s influence is only set to grow, Francesca Rossi’s insights offer a roadmap for navigating the complex ethical landscape of AI. It’s a collective effort that demands transparency, collaboration, and an unwavering commitment to building systems that not only advance technology but also uphold the values that define us as a society, and one that involves policymakers, educators, and industry leaders here in India and around the world.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.