MIT: Linux will be able to keep up with multi-core development

Aaah, if ever there was vindication for open source, this might just be it… Three MIT professors and their students decided to research multi-core scalability: if you keep increasing the number of cores, can you make sure that performance keeps up?

They found that they could, but only by modifying the way the operating system worked, specifically by addressing "clogged counters." Of course, to modify the system legally, it had to be open source. Before we get ahead of ourselves, though, let us describe their experiment…

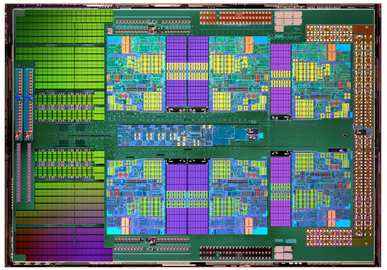

The team used eight six-core processors in tandem to simulate how a 48-core chip would behave, then ran a range of highly parallel applications on Linux, activating one core at a time and measuring the performance gain from each.

They found that at some point, each additional core that was activated ended up slowing the system down, instead of providing a performance boost. While this performance drag would have flummoxed many, MIT’s best identified the problem, and it was surprisingly obvious.

AMD 1090T BE six-core processor die shot


In a multi-core system, several cores often perform calculations on the same chunk of data, and as long as any core still needs that data, it must not be deleted from memory. So when a core starts working on the data, it increments a counter stored in a central location that all cores can see, and when it finishes its task, it decrements that counter. The counter thus keeps a running tally of how many cores are using the data; when the tally drops to zero, the OS knows it can delete the data and free that memory for other tasks.
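To make the mechanism concrete, here is a minimal Python sketch of a single shared reference counter. It is illustrative only: Python threads stand in for cores, a `threading.Lock` stands in for the hardware's atomic increment, and the class and callback names are hypothetical rather than anything taken from the Linux kernel. Because of Python's global interpreter lock, it shows the bookkeeping, not the performance behavior.

```python
import threading


class SharedRefCount:
    """One central reference count that every core must update.

    Hypothetical sketch: threads stand in for cores, and a lock makes each
    increment/decrement atomic, much as a real kernel uses an atomic counter.
    """

    def __init__(self, on_zero):
        self._count = 1            # the creator holds the first reference
        self._lock = threading.Lock()
        self._on_zero = on_zero    # called when the last reference is dropped
        self.updates = 0           # how many times a core touched the counter

    def acquire(self):
        with self._lock:
            self._count += 1       # "ratchet the counter up"
            self.updates += 1

    def release(self):
        with self._lock:
            self._count -= 1       # "ratchet the counter down"
            self.updates += 1
            if self._count == 0:
                self._on_zero()    # nobody uses the data any more: free it


freed = []
ref = SharedRefCount(on_zero=lambda: freed.append("data freed"))


def worker():
    ref.acquire()
    # ... the core works on the shared data here ...
    ref.release()


threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()

ref.release()  # the creator drops its reference; only now does the count hit zero
```

Every one of the eight workers has to touch the same counter twice, so the shared location sees 17 updates in total; on real hardware, each of those updates forces the counter's cache line to move between cores.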

All this is fine when the number of cores is small. As the number of cores grows, however, the tasks that depend on the same data get split into ever smaller chunks, so each core spends proportionally more of its time updating the shared counter. This results in the cores "spending so much time ratcheting the counter up and down that they weren't getting nearly enough work done."
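Why adding cores can eventually make things slower follows from a simple back-of-the-envelope model. The sketch below assumes, purely for illustration, that useful work divides evenly among the cores while every core's increment and decrement of the one shared counter is serialized; the function name and constants are invented and do not come from MIT's measurements.

```python
def toy_runtime(cores, total_work=1_000_000.0, counter_cost=1_250.0):
    """Hypothetical toy model, not data from the MIT paper.

    total_work: units of useful work, split evenly across the cores.
    counter_cost: time for one update of the shared counter; updates from
    different cores are assumed to be serialized, so they add up.
    """
    useful = total_work / cores            # parallel part: shrinks as cores are added
    counting = 2 * cores * counter_cost    # one increment + one decrement per core, serialized
    return useful + counting


best = min(range(1, 49), key=toy_runtime)

for n in (1, 6, 12, 20, 36, 48):
    print(f"{n:2d} cores: {toy_runtime(n):>12,.0f}")
```

With these made-up constants, the modeled runtime bottoms out at 20 cores. Past that point, each extra core adds more serialized counter traffic than it saves in useful work, which mirrors the slowdown the researchers observed.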

All they had to do then was rewrite the Linux code slightly, so that each core kept a local count that was only periodically synchronized with the central reference counter, which greatly improved overall performance.
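The per-core approach can be sketched the same way. This is a simplified Python illustration of the idea the article describes (local counts that are only occasionally folded into the central counter), not MIT's actual kernel patch; the class name, the `threshold` parameter, and the synchronization rule are all assumptions.

```python
class PerCoreRefCount:
    """Hypothetical, single-threaded simulation of per-core counting.

    Each core batches its increments and decrements in its own local slot
    and only pushes the accumulated delta to the shared central counter once
    that delta reaches `threshold` (an assumed tuning knob). The index passed
    as `core` stands in for a CPU; a real kernel would also need to
    synchronize with every core before trusting true_count().
    """

    def __init__(self, num_cores, threshold=4):
        self.central = 0
        self.central_updates = 0        # how often the shared counter was touched
        self.local = [0] * num_cores    # one private slot per core
        self.threshold = threshold

    def _sync(self, core):
        # Periodic synchronization: fold this core's local delta into the center.
        self.central += self.local[core]
        self.central_updates += 1
        self.local[core] = 0

    def acquire(self, core):
        self.local[core] += 1
        if abs(self.local[core]) >= self.threshold:
            self._sync(core)

    def release(self, core):
        self.local[core] -= 1
        if abs(self.local[core]) >= self.threshold:
            self._sync(core)

    def true_count(self):
        # The real count is the central value plus every core's pending delta;
        # only this full reconciliation may decide that the data can be freed.
        return self.central + sum(self.local)


rc = PerCoreRefCount(num_cores=4)
for _ in range(100):
    for core in range(4):
        rc.acquire(core)    # each core takes a reference...
        rc.release(core)    # ...and drops it when its task is done
```

Here 800 acquire/release operations never touch the central counter (`rc.central_updates` stays at 0), whereas a single shared counter would have been updated 800 times. The trade-off is that reading the exact count now means summing every core's local slot, which is acceptable because the "can we free it yet?" question is asked far less often than the counter is updated.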

Frans Kaashoek of MIT was quick to point out how much multi-core scalability has already improved, saying: "The fact that that is the major scalability problem suggests that a lot of things already have been fixed. You could imagine much more important things to be problems, and they're not. You're down to simple reference counts."

Kaashoek also noted that MIT's fixes aren't necessarily the ones the Linux community will adopt, and that the community is more than capable of devising its own, since the fixes themselves were not especially difficult. Instead, he emphasized that identifying the problem was the hardest part, and that now "there's a bunch of interesting research to be done on building better tools to help programmers pinpoint where the problem is. We have written a lot of little tools to help us figure out what's going on, but we'd like to make that process much more automated."

Remzi Arpaci-Dusseau, a professor of computer science at the University of Wisconsin, lauded MIT's paper as the "first to systematically address" the multi-core scalability question. He added that once the number of cores on a chip becomes "significantly beyond 48," new architectures and operating systems may have to be designed to address the issue. For now, and for the near future ("next five, eight years"), MIT's solutions will greatly help performance scale alongside multi-core development. He stressed that the "software techniques or hardware techniques or both" used to identify such multi-core problems will soon become a "rich new area" of research.

Of course, engineers at both Microsoft and Apple keep track of such research, and you can expect their future operating systems to work around clogged counters and other problems that inhibit multi-core performance. It's obvious, though, that Linux and its many distros will be able to address these problems more quickly, thanks to more frequent updates and countless programmers in the open-source community. MIT's paper will be presented on October 4 at the USENIX Symposium on Operating Systems Design and Implementation in Vancouver.