Microsoft Phi-4: How to use, AI applications, everything you should know

For years, Microsoft has been exploring artificial intelligence to reshape industries and improve user experiences. Phi-4, the latest addition to its suite of language models, continues that effort. Released through the Azure AI ecosystem, Phi-4 focuses on efficient and adaptable capabilities for specific applications, particularly completion tasks. While the model shows promise, a critical assessment of its design and applications is essential to understand its actual utility.


Phi-4 focuses on small-scale efficiency

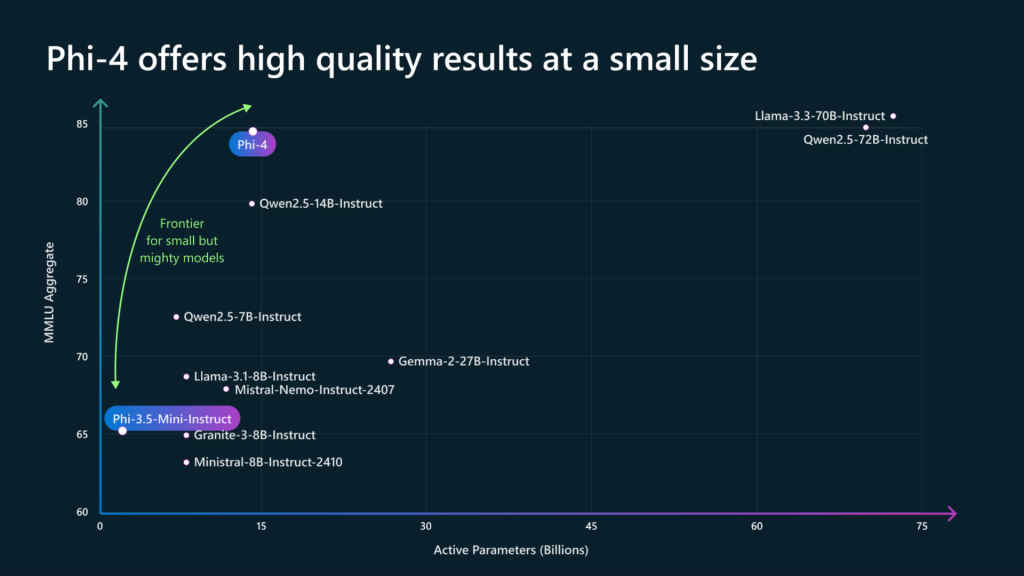

Phi-4 is positioned as a lightweight alternative to larger models like GPT-4, targeting tasks that demand precision without substantial computational resources. Microsoft describes it as a 14-billion-parameter small language model (SLM), and it is designed for applications such as auto-filling forms, text prediction, and tailored content generation. Microsoft's focus on completion-centric workflows suggests a deliberate choice to prioritise targeted efficiency over expansive generative capabilities. According to Microsoft, Phi-4 builds on the Phi series' design but refines its architecture for concise, contextually relevant outputs. By reducing latency and improving efficiency, Phi-4 addresses specific needs rather than attempting to be a one-size-fits-all solution, an approach that may appeal to businesses aiming to optimise performance within defined parameters.

Phi-4 is a dense, decoder-only transformer, and its comparatively compact size lets it run effectively on more constrained hardware than frontier-scale models require. Microsoft has emphasised its accessibility, suggesting it is suitable for industries like healthcare and finance, where compliance and quick insights are paramount. The model's compact size also reduces energy consumption, in line with Microsoft's sustainability goals. However, its smaller scale may limit its ability to handle more complex or generalised tasks, which remains a consideration for potential adopters.
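To put "constrained hardware" in numbers: the memory needed just to hold a model's weights is roughly its parameter count multiplied by the bytes stored per parameter. The sketch below assumes Phi-4's published size of about 14 billion parameters and counts weights only; the KV cache, activations and framework overhead come on top, so the results are lower bounds rather than real deployment requirements.

```python
# Rough weight-only memory estimate for a dense model.
# Assumption: Phi-4 has about 14 billion parameters. KV cache, activations
# and runtime overhead are NOT included, so real usage will be higher.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    phi4_params = 14e9  # approximate parameter count
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(phi4_params, precision)
        print(f"{precision:>9}: ~{gb:.0f} GB")
```

At 16-bit precision the weights alone need about 28 GB, while a 4-bit quantised copy needs about 7 GB, which is why quantisation is one common lever for fitting a model of this size onto a single GPU.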


Targeted applications and integration with Azure AI

Phi-4's specialisation sets it apart from general-purpose language models. Its training on carefully curated data, much of it synthetic, is intended to deliver domain-specific accuracy, making it more applicable for industries with precise requirements. For example, in customer service, Phi-4 can handle nuanced queries and deliver focused responses. This level of specialisation, while beneficial for particular use cases, may require additional fine-tuning effort for broader applications.
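When Phi-4's open weights are run locally rather than through a managed endpoint, a query such as the customer-service example above has to be laid out in the model's chat format. The sketch below follows the layout shown on the Phi-4 model card (`<|im_start|>role<|im_sep|>content<|im_end|>`, ending with an open assistant turn). The exact whitespace should be checked against the tokenizer's own chat template, which Hugging Face Transformers applies via `apply_chat_template`; the function name and the banking messages are hypothetical.

```python
# Sketch: lay out a conversation in the chat format shown on the Phi-4
# model card. Assumption: no extra newlines between turns; prefer the
# tokenizer's built-in chat template in real use.
from typing import Dict, List


def format_phi4_prompt(messages: List[Dict[str, str]]) -> str:
    """Join role/content messages into a single Phi-4-style prompt string."""
    parts = []
    for msg in messages:
        parts.append(f"<|im_start|>{msg['role']}<|im_sep|>{msg['content']}<|im_end|>")
    # Leave an assistant turn open so the model generates the reply next.
    parts.append("<|im_start|>assistant<|im_sep|>")
    return "".join(parts)


if __name__ == "__main__":
    # Hypothetical customer-service exchange.
    prompt = format_phi4_prompt([
        {"role": "system", "content": "You are a concise support agent for an online bank."},
        {"role": "user", "content": "Why was my card declined abroad?"},
    ])
    print(prompt)
```

The system turn is where a business would pin down tone and scope, which is the lightweight alternative to fine-tuning for narrowly defined tasks.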

Phi-4 is available through Microsoft's Azure AI Foundry, offering businesses a straightforward path to implementation. From there, the model can be deployed as an endpoint and called from natural language processing pipelines or custom AI applications. Its compatibility with other Azure tools, such as Azure AI services (formerly Cognitive Services), expands its usability. Microsoft has also highlighted the Phi family's role in multimodal AI, combining text and image inputs for enriched outputs, although Phi-4 itself accepts text only. While this direction broadens the family's scope, the effectiveness of such integration in real-world scenarios will depend on further user feedback and iterative updates.
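Calling a hosted Phi-4 deployment generally means sending a chat-style JSON body over HTTPS. The sketch below only builds the request with Python's standard library and does not send it. The endpoint URL, the `/chat/completions` path, the Bearer-token header and the API key are placeholders and assumptions to check against the deployment's own details in the Azure portal, not a confirmed Azure API contract.

```python
# Sketch: build (but do not send) a chat-completions request for a hosted
# Phi-4 deployment. ENDPOINT, the "/chat/completions" path and the Bearer
# auth header are ASSUMPTIONS; API_KEY is a placeholder.
import json
import urllib.request

ENDPOINT = "https://your-phi4-deployment.example.com"  # hypothetical URL
API_KEY = "YOUR_API_KEY"  # placeholder; load real keys from a secret store


def build_chat_request(question: str, max_tokens: int = 256) -> urllib.request.Request:
    """Return a POST request carrying a chat-style JSON body."""
    body = {
        "messages": [
            {"role": "system", "content": "Answer briefly and accurately."},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
        "temperature": 0.2,  # low temperature for focused, repeatable answers
    }
    return urllib.request.Request(
        url=f"{ENDPOINT}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarise our refund policy in two sentences.")
    print(req.get_method(), req.full_url)
    # Sending it against a real endpoint would be: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any real endpoint details are plugged in.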

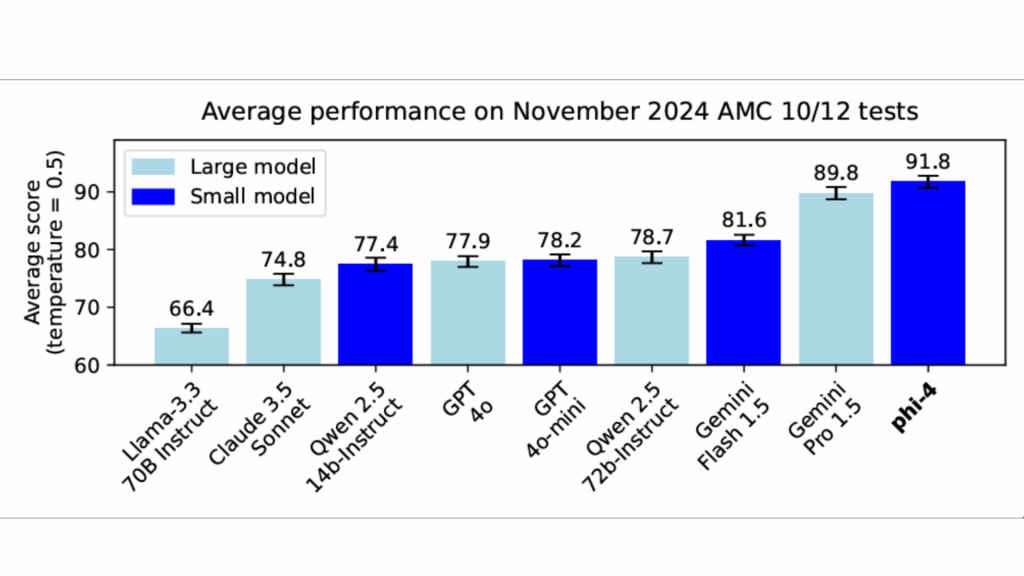

In a landscape dominated by larger models like OpenAI's GPT series and Google's Gemini (formerly Bard), Phi-4 carves out a niche by prioritising efficiency and specificity. Its ability to run on limited hardware makes it a cost-effective option, particularly for industries with constrained resources. However, these strengths are offset by its narrower focus, which may not cater to all use cases, so users should weigh them against the model's limitations in versatility and scalability.

Ethical design and challenges in the world of AI in 2024

Microsoft’s emphasis on responsible AI development is evident in Phi-4’s safeguards against biases and unethical use. The company’s approach includes rigorous testing and an invitation for collaboration with developers to address potential shortcomings. However, achieving true transparency and accountability in AI models remains an ongoing challenge, requiring consistent oversight. While Phi-4 reflects Microsoft’s commitment to ethical AI, questions about the long-term reliability and fairness of such models persist. These concerns underline the importance of critical scrutiny in the deployment of AI technologies.

The global AI market has grown rapidly and is projected to reach $1.5 trillion by 2030, driven by advances in natural language processing, machine learning, and autonomous systems. According to a 2023 McKinsey report, 50% of businesses have integrated AI into at least one function, underlining its role in shaping industries like healthcare, finance, and retail. Within this context, models like Phi-4 represent a growing trend of domain-specific solutions that prioritise efficiency and scalability.


Smaller AI models are gaining traction due to their reduced environmental footprint and cost-effective deployment. Because they need less computation for training and inference, models requiring fewer resources can significantly lower energy use and the associated carbon emissions compared with large-scale counterparts. As organisations prioritise sustainability, compact models like Phi-4 may align better with corporate social responsibility goals.

Future directions for Phi-4

Microsoft has announced plans to enhance Phi-4 with multilingual capabilities and extended APIs, aiming to broaden its appeal. These updates could improve the model’s versatility and adoption across diverse sectors. However, the success of these efforts will depend on how effectively they address user needs and market demands.

As the AI field evolves, the role of smaller, specialised models like Phi-4 will become clearer. Their ability to complement larger models while filling specific gaps could redefine how AI is leveraged across industries. Moreover, the push for ethical AI development and the integration of AI into regulatory frameworks will likely influence how businesses adopt technologies like Phi-4.

Satvik Pandey

Satvik Pandey is a self-professed Steve Jobs (not Apple) fanboy, a science & tech writer, and a sports addict. At Digit, he works as a Deputy Features Editor and manages the daily functioning of the magazine. He also reviews audio products (speakers, headphones, soundbars, etc.), smartwatches, projectors, and everything else that he can get his hands on. A media and communications graduate, Satvik is also an avid shutterbug, and when he's not working or gaming, he can be found fiddling with any camera within reach and helping produce videos, which means he spends an awful amount of time in our studio. His game of choice is Counter-Strike, and he's still attempting to turn pro. He can talk your ear off about the game, and we'd strongly advise you to steer clear of the topic unless you too are a CS junkie.