Intel’s Indian AI initiatives start bearing fruit

Intel’s AI Developer Program has trained 9,500 developers, students and professors across 90 organizations, out of the 15,000 target set earlier this year

At the Intel Cloud Developer Day held in Bangalore on October 9th, Prakash Mallya, MD Sales and Marketing Group, Intel India, shared the progress of Intel’s AI Developer Program, which was set in motion at Intel’s AI Day earlier this year. Intel and its partners have trained 9,500 developers across many industry verticals and have brought academicians and students on board as well. These include over 3,000 students and professors across 40 institutions. According to Intel, India is one of the leading nations in the AI revolution thanks to its massive startup ecosystem, most of which has adopted AI technologies in some form or the other.

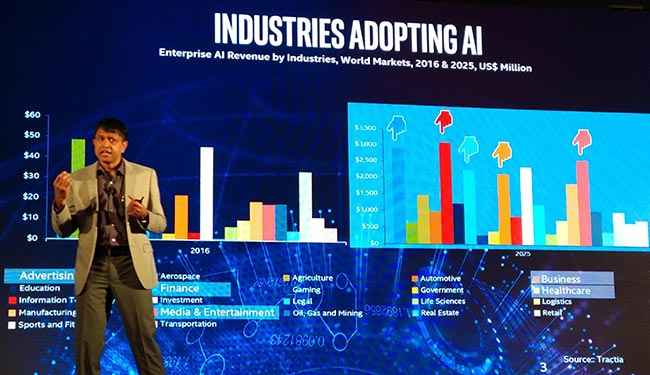

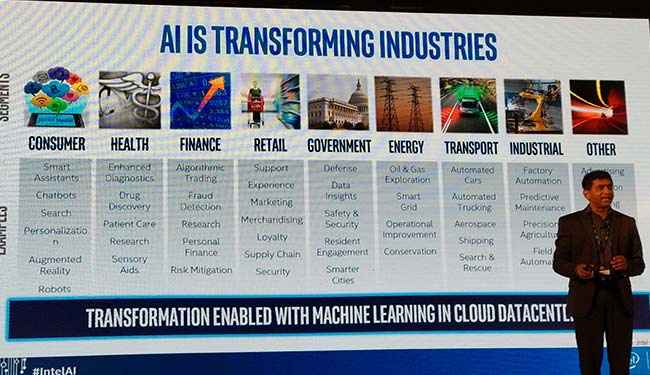

This is evidenced by the fact that 77% of server workloads in the country are related to AI. And it’s not just India: AI is spreading like wildfire across the world, with even governments stepping in to capitalize on it. Adoption begins with enterprise agencies first, as can be seen from the chart below.

Intel’s AI portfolio

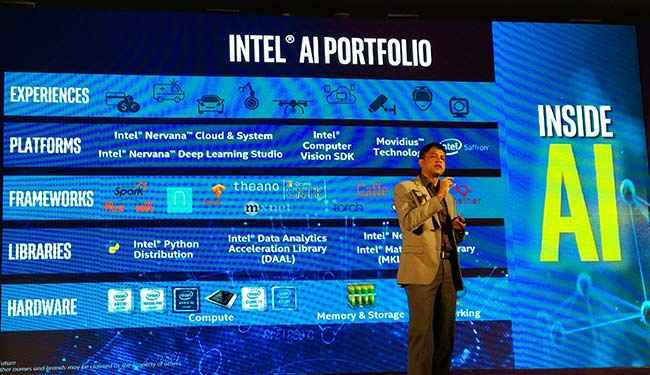

Given how countries across the world are engaging with the AI ecosystem, hardware and silicon manufacturers such as Intel and NVIDIA, among many others, have begun producing AI-specific hardware. Intel has a vast portfolio of such hardware, organised by usage scenario.

There is the newly announced Nervana NNP and the Loihi self-learning chip. If you want a solution that can be reprogrammed on the go to accommodate protocol changes, there is the FPGA lineup from Intel’s Altera. Aside from all these, there is the scalable Xeon lineup, which powers most data centres across the world. These are just some of the hardware options that Intel has for AI-related development and workloads. There’s a lot more when you consider the platforms, frameworks and libraries that are optimized for Intel hardware.

Intel in India

As mentioned previously, Intel has been working with developers, students and professors from more than 40 academic institutes across the country. At the Intel Cloud Developer Day, we were presented with a few AI projects that members of academia had successfully executed. One such project was the Swachhata app, part of the Janaagraha initiative, which uses AI-based image processing rather than traditional algorithms to identify garbage pile-ups around the city and inform the municipal authorities.

Then there are partnerships with companies such as NxtGen, which have resulted in initiatives like the AI Developer Cloud. NxtGen has a bevy of AI solutions and resources which are open to developers and academia. Those interested must pass a basic screening in which the feasibility of their project is gauged, after which NxtGen mentors these developers or developer groups. As of now, the training has been extended to more than 51 organisations, reaching more than 6,500 developers.

Intel also has a few engagements with Government departments to develop Government-to-Citizen services.

The FPGA revolution

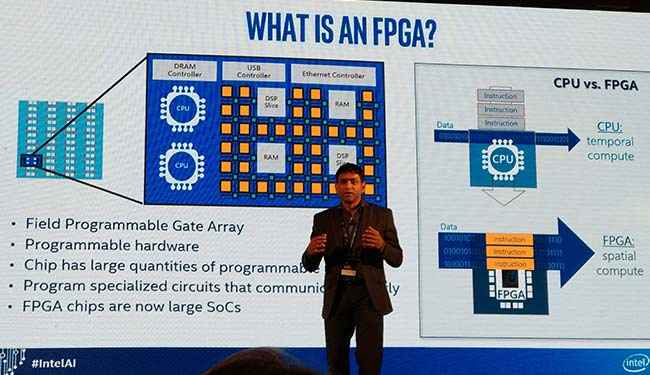

One of the aforementioned AI accelerator solutions from Intel’s stable is its FPGA lineup. FPGAs provide flexible, deterministic, low-latency, high-throughput and energy-efficient solutions. Coupled with optimized libraries, they can help developers build AI solutions in a much shorter time frame.

Building an AI-based system involves multiple stages, which are broadly classified into two phases: training and inference. These can be broken down further but, for the sake of simplicity, we’re going to stick to the two broad classifications. Often, you require different hardware for each phase of the development cycle. The training phase requires hardware which can traverse a diverse set of data and build markers. The inference phase requires a different set of hardware, which needs to provide results with much lower latency. Fortunately, FPGA solutions can do both. An Arria 10 discrete FPGA card coupled with a Xeon is apt for the training phase, while switching to a Stratix FPGA makes more sense for the inference phase.
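To make the split between the two phases concrete, here is a minimal sketch in plain Python. It is a toy example and not Intel’s tooling or anything FPGA-specific: the function names `train` and `infer`, the linear model and the synthetic data are all assumptions made for illustration. It shows why training is the heavy part (repeated passes over the whole data set) while inference is a single cheap calculation that needs to come back quickly.

```python
# Toy illustration of training vs inference.
# All names and data here are hypothetical, chosen only for illustration.

def train(samples, epochs=5000, lr=0.01):
    """Training phase: fit y = w*x + b by gradient descent.

    Every epoch walks the entire data set, which is why this phase
    is compute- and data-heavy.
    """
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in samples:
            err = (w * x + b) - y          # prediction error on one sample
            grad_w += 2.0 * err * x / n    # d(MSE)/dw
            grad_b += 2.0 * err / n        # d(MSE)/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference phase: one forward pass using the trained parameters."""
    w, b = model
    return w * x + b


# Synthetic data generated from y = 3x + 1 (an assumption for the demo).
data = [(float(x), 3.0 * x + 1.0) for x in range(10)]

model = train(data)               # slow: 5000 passes over the data
prediction = infer(model, 12.0)   # fast: a single multiply and add
print(model, prediction)
```

Since the data was generated from y = 3x + 1, the prediction for x = 12 should land very close to 37. The point is the asymmetry between the phases: `train` loops over the data thousands of times, whereas `infer` does a fixed, tiny amount of work per request, which is where low latency matters.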

FPGAs were always seen as versatile chips that lacked the raw compute power needed for data-heavy AI tasks. However, recent developments indicate that FPGAs have gained quite a bit of compute capability, which is why we have started seeing real-time AI systems being built around them. Microsoft, for instance, unveiled Project Brainwave, a deep-learning acceleration platform built using FPGAs.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 10 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep into unravelling what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.