Intel focuses on many-core computing: not just for ninjas

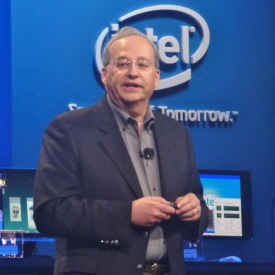

At his keynote at the Intel Developer Forum (IDF) this morning, Intel CTO Justin Rattner discussed the move to many-core computing. The shift is important not only for high-performance computing (HPC), but also for many standard tasks. The long-awaited Knights Corner chip will launch with more than 50 cores on 22nm, he said. Highlights of the keynote included new parallel extensions for JavaScript and demonstrations of both many-core applications and future designs that use much less power for both processing and memory.

Five years ago, Rattner introduced the Core architecture and the company’s first multi-core processors. But now, we are “just beginning the age of many-core processors,” Rattner said, and those processors often include heterogeneous cores.

We can soon expect to see general-purpose many-core products, as Intel pushes its Many Integrated Core (MIC) architecture, starting with Knights Corner. (A development version known as Knights Ferry is already available.) The MIC architecture shares the memory model and instruction set of existing Xeon processors and adds enhanced floating point. Intel’s Tera-Scale Computing Research Program is currently testing a 48-core single-chip cloud computer (SCC).

Many-core will not just be for HPC applications, Rattner assured the audience, showing a wide range of applications whose performance improved thirtyfold or more as the number of cores increased to 64.
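To put a thirtyfold gain on 64 cores in perspective, here is a minimal sketch that assumes Amdahl's law is a fair model for those applications (the keynote did not say so). It estimates how much of a program must run in parallel to reach that speedup. The function names are illustrative only.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's law for a given parallel fraction."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def required_parallel_fraction(speedup: float, cores: int) -> float:
    """Invert Amdahl's law: the parallel fraction needed to reach `speedup`."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / cores)


# Figures quoted in the keynote: a 30x improvement at 64 cores.
p = required_parallel_fraction(speedup=30.0, cores=64)
print(f"parallel fraction needed: {p:.3f}")
print(f"check: {amdahl_speedup(p, 64):.1f}x on 64 cores")
```

Under that assumption, roughly 98 percent of the work has to be parallelizable, which suggests why heavily data-parallel workloads are the ones that scale this well.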

Andrzej Nowak of CERN Open Lab talked about using many-core computing at the Large Hadron Collider, which produces 15 to 25 petabytes of data per year. Physics analysis at CERN uses distributed computing with about 250,000 Intel cores.

CERN has worked with Northeastern University to parallelize its software. The lab has seen a fortyfold performance improvement on a 40-core Xeon implementation. It is also using the compatible MIC architecture. Nowak ran an application on both a single core and a 32-core MIC, noting that the lab's heavily vectorized applications were getting nearly perfect scaling.

Programming for many cores is no longer difficult. “You don’t need to be a ninja programmer to do it,” Rattner said.

He demoed multi- and many-core computing for “mega data centers,” web apps, wireless communications, and PC security.

The first demonstration dealt with content in the cloud. A 48-core rack running an in-memory database (using MemCache) handled 800,000 queries per second.

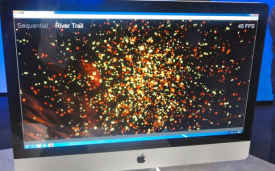

Mozilla CTO Brendan Eich, who created JavaScript in 1995, then joined Rattner on stage to show off “River Trail,” a set of parallel extensions to JavaScript. They demoed a 3D n-body simulation that ran at three frames per second in sequential mode, but at 45 frames per second with the parallel extensions. The extensions are available now at github.com/rivertrail.
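An n-body simulation suits this kind of speedup (45 versus 3 frames per second is a factor of 15) because each body's acceleration can be computed independently of the others. The sketch below illustrates that data-parallel structure in Python. It is not the River Trail API, and every name in it is hypothetical. It also uses threads only to show the shape of the computation: in standard CPython, threads are unlikely to speed up pure-Python arithmetic like this.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial
import math

# Each body is (x, y, z, mass); units are arbitrary and G is taken as 1.
Body = tuple[float, float, float, float]


def acceleration(i: int, bodies: list[Body], softening: float = 1e-3):
    """Elemental function: acceleration on body i due to every other body."""
    xi, yi, zi, _ = bodies[i]
    ax = ay = az = 0.0
    for j, (xj, yj, zj, mj) in enumerate(bodies):
        if j == i:
            continue
        dx, dy, dz = xj - xi, yj - yi, zj - zi
        # Softening keeps the force finite when two bodies get very close.
        dist_sq = dx * dx + dy * dy + dz * dz + softening * softening
        inv_dist_cubed = 1.0 / (dist_sq * math.sqrt(dist_sq))
        ax += mj * dx * inv_dist_cubed
        ay += mj * dy * inv_dist_cubed
        az += mj * dz * inv_dist_cubed
    return ax, ay, az


def accelerations_sequential(bodies: list[Body]):
    """One body after another, as in the sequential demo mode."""
    return [acceleration(i, bodies) for i in range(len(bodies))]


def accelerations_parallel(bodies: list[Body], workers: int = 4):
    """Same elemental function, mapped over body indices by a worker pool."""
    per_body = partial(acceleration, bodies=bodies)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(per_body, range(len(bodies))))


bodies = [(0.0, 0.0, 0.0, 1.0), (1.0, 0.0, 0.0, 1.0), (0.0, 2.0, 0.0, 3.0)]
assert accelerations_sequential(bodies) == accelerations_parallel(bodies)
```

Because the elemental function only reads shared data and writes its own result, the sequential and parallel versions return identical values; only the scheduling differs.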

Rattner displayed an LTE base station implemented on multi-core Intel hardware. It was developed in conjunction with China Mobile as part of the Cloud Radio Access Network (CRAN). Only the actual radio is at the physical base station location; all the base station computations are actually performed on a “base station in the cloud.”

Following that, a PC security demo addressed how much confidential information is now distributed online in the cloud. All the photos on a Web site were encrypted individually and then, when a user appeared before a web camera, they could be decrypted differently for each user. (Some people could see all of the photos; some could see photos just of themselves; and some could see none at all.) Each picture is encrypted separately, which uses multiple parts of the CPU, including processor graphics, AES encryption and others.

Looking ahead, Rattner talked about “extreme scale computing.” Intel’s ten-year goal is a 300-fold improvement in energy efficiency, cutting energy use to 20 picojoules per floating point operation (FLOP) at the system level.

Intel’s Shekhar Borkar, who works on the DARPA Ubiquitous High Performance Computing project, said today’s 100-gigaFLOPS computer uses 200 watts. By 2019, it should use about 2 watts, due to reductions in the power required not only by the cores, but by the whole system, including memory and storage. One way to get there, he said, is to run processors near the threshold voltage.
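Borkar's wattage figures can be converted to energy per operation with simple division. The sketch below does that arithmetic, assuming both systems sustain exactly 100 billion floating point operations per second. The helper name is made up for illustration.

```python
GIGA = 1e9
PICO = 1e-12


def joules_per_flop(watts: float, flops_per_second: float) -> float:
    """Energy per operation: power (J/s) divided by throughput (FLOP/s)."""
    return watts / flops_per_second


# Assumed sustained rate for both systems: 100 gigaFLOPS.
rate = 100 * GIGA
today = joules_per_flop(200.0, rate)   # today's 200-watt system
target = joules_per_flop(2.0, rate)    # the projected 2-watt system

print(f"today:  {today / PICO:.0f} pJ per FLOP")
print(f"target: {target / PICO:.0f} pJ per FLOP")
print(f"ratio:  {today / target:.0f}x")
```

On these numbers, the 2-watt system works out to 20 picojoules per FLOP, which matches the target Rattner quoted. The two wattage figures by themselves imply a hundredfold reduction; the article does not say what baseline the 300-fold goal is measured against.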

Next came a concept chip called Claremont, which can run near the threshold voltage and can ramp from full performance to low power. In the demo, it ran on less than ten milliwatts, powered by a small solar cell. Earlier in the week, Intel CEO Paul Otellini showed it running Windows; today’s demo ran both Windows and Linux. The chip can scale to over ten times the frequency when running at nominal voltage, so it could be both very fast and very low power. When it runs at full power, Rattner said, it uses less power than a current Atom chip on standby.

In conjunction with Micron, Intel also showed a “hybrid memory cube” that delivered both the lowest energy ever required for DRAM, at 8 picojoules per bit, and the fastest throughput, at about 128GB/sec.
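The two hybrid memory cube figures can be combined into a rough power estimate. This is a back-of-the-envelope sketch that assumes 1GB means 10^9 bytes and that the 8 picojoules per bit holds at the full 128GB/sec rate; the keynote stated neither.

```python
PICO = 1e-12
BITS_PER_BYTE = 8

energy_per_bit = 8 * PICO      # joules per bit, as quoted
throughput_bytes = 128e9       # bytes per second, assuming decimal gigabytes

# Power (watts) = energy per bit * bits transferred per second.
memory_power_watts = energy_per_bit * throughput_bytes * BITS_PER_BYTE
print(f"about {memory_power_watts:.1f} W at full throughput")
```

Under those assumptions, moving data at the peak rate would cost on the order of 8 watts.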

“Technology is no longer the limiting factor,” Rattner concluded. “If you can imagine it, we can create it.”