HP’s memristors are a whole new breed of processors and storage devices combined in a single unit

The whole world is looking for alternatives to silicon-based computational and storage devices. As circuits are squeezed into ever smaller spaces, they approach the theoretical limits of scale and heat dissipation envisioned decades ago, and below a certain tiny size the circuits would melt. Manufacturing silicon transistors on such a small scale is also extremely complex and expensive, and has its own limitation: the circuit features become smaller than the wavelength of the light used to create them. Newer manufacturing technologies (electron beam lithography, micro lasers) are being developed that can circumvent this second problem, but the resulting circuits, though incredibly minute, will still be affected by heat unless they can dissipate it fast enough. Researchers at HP Labs, however, believe that the answer lies elsewhere, ironically in a type of circuit element that was proposed way back in 1971 by Leon O. Chua, an electrical engineer at the University of California, Berkeley.

Memristors, or memory resistors, were deemed unfeasible in the 70s, when the concept was thought to rely on magnetic flux. But in 2008, researchers at HP Labs realised that memristors could be “read and written using applied voltages”, and did not depend on magnetic flux as much as had been thought. Now, those same researchers have developed working memory resistors that function at the same speeds as today’s conventional silicon transistors, and say the technology will progress much faster than Moore’s law previously dictated.

Why they are better than silicon transistors

One of the things that makes memristors so interesting is that they can “perform a full set of logic operations at the same time as they function as non-volatile memory”. This will enable designers to build circuits out of memristors that operate as both memory and processing units, removing the need for specialized units and the time-consuming communication between them. They are also simpler to manufacture than silicon transistors, much more energy-efficient (see why below) and virtually immune to radiation disruption.

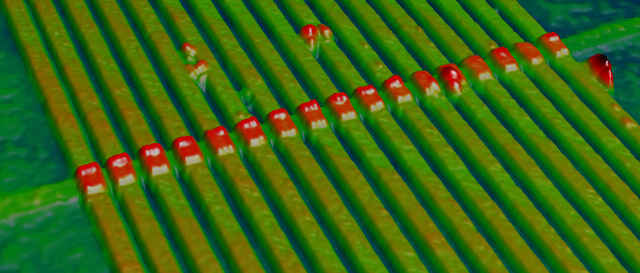

Memristors will also free designers to work in three dimensions, and to be able to “stack thousands of switches on top of one another in a high-rise fashion, permitting a new class of ultra-dense computing devices even after two-dimensional scaling reaches fundamental limits.” HP said in an official statement, “In five years, such chips could be used to create handheld devices that offer ten times greater embedded memory than exists today or to power supercomputers that allow work like movie rendering and genomic research to be done dramatically faster than Moore’s Law suggests is possible.”

Stan Williams, a physicist at HP, says “memristor technology really has the capacity to continue scaling for a very long time, and that’s really a big deal.” Today’s conventional silicon transistor technology is based on minimum feature sizes of 30 to 40 nanometers. By contrast, working 3-nanometer memristors have been designed that can switch on and off in about a nanosecond (a billionth of a second), much faster than ordinary silicon transistors.

Currently, memristors can store 100 gigabits on a single die of one square centimeter, much more than the 16 gigabits of a single flash chip. In the future, HP thinks it can get that up to a terabit or more per square centimeter. HP has also cited a figure of almost 20 gigabytes a square centimeter, which would outstrip projections of future flash memory device capacities by almost a factor of two.

Another amazing feature of memristors comes from their scalability. As silicon transistors get smaller, they face many problems, including heat and complexity issues. The exact opposite is true of memristors. The smaller they get, the stronger their memristance effect, allowing them to be scaled down for a long time to come, limited only by our nano-manufacturing capabilities.

How they work

Electronic circuit theory was traditionally based on three components: the resistor, the capacitor, and the inductor. The memristor is now considered the fourth. There are four fundamental circuit variables: electric current, voltage, charge, and magnetic flux. Each of the three components mentioned above links two of the four circuit variables, allowing calculations to be made: resistors relate current to voltage, capacitors relate voltage to charge, and inductors relate current to magnetic flux. What was missing was a way to relate charge to magnetic flux. This is where the memristor comes in.
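The four pairings can be written compactly as differential relations. The symbols here are conventional notation rather than anything taken from HP's statements: $v$ is voltage, $i$ current, $q$ charge, $\varphi$ magnetic flux, and $R$, $C$, $L$, $M$ are resistance, capacitance, inductance and memristance.

```latex
\begin{aligned}
\text{resistor:}  \quad & dv = R\,di \\
\text{capacitor:} \quad & dq = C\,dv \\
\text{inductor:}  \quad & d\varphi = L\,di \\
\text{memristor:} \quad & d\varphi = M\,dq
\end{aligned}
```

Because charge is the time integral of current ($dq = i\,dt$) and flux is the time integral of voltage ($d\varphi = v\,dt$), the last line can be rewritten as $v = M(q)\,i$. In other words, a memristor looks like a resistor whose value depends on how much charge has flowed through it.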

According to researchers at HP, “The fact that the magnetic field does not play an explicit role in the mechanism of memristance is one possible reason why the phenomenon has been hidden for so long; those interested in memristive devices were searching in the wrong places.” It has now been realised that the link between magnetic flux and charge shows up in practice as a variable resistance, one that is a function of the charge that has passed through the device.

The technology uses electrical currents to move atoms within an ultrathin film of titanium dioxide, part of which is ‘doped’ so that it has some missing oxygen atoms. It is these holes, or defects in the crystal, that allow an electric current to pass through titanium dioxide. The more holes, the lower the resistance. This is how resistance states can be created: they are based on the movement of oxygen atoms, and a single atom moving a single nanometer can be detected. Each movement can be read as a change in the resistance of the material, which persists even after the current is switched off. This makes for extremely energy-efficient devices when compared to silicon transistors, which require a constant electrical current (or lack thereof) to maintain their state.
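The behaviour described here can be explored with a small simulation. What follows is a minimal sketch of a linear dopant-drift model of the kind used in the memristor literature, not HP's actual device model. All parameter values (`R_ON`, `R_OFF`, `D`, `MU_V`), the function names, and the sign convention (positive current widens the doped region) are illustrative assumptions.

```python
# Illustrative (assumed) parameters, not measured HP values.
R_ON = 100.0       # ohms: resistance if the whole film were doped
R_OFF = 16_000.0   # ohms: resistance if the whole film were undoped
D = 10e-9          # metres: total film thickness
MU_V = 1e-14       # m^2 / (V s): assumed mobility of the oxygen vacancies


def memristance(w: float) -> float:
    """Resistance of a film whose doped region has width w (0 <= w <= D)."""
    x = w / D
    return R_ON * x + R_OFF * (1.0 - x)


def drive(w: float, volts: float, duration: float, dt: float = 1e-3) -> float:
    """Apply a constant voltage for `duration` seconds; return the new width."""
    steps = int(round(duration / dt))
    for _ in range(steps):
        current = volts / memristance(w)
        # Linear drift: the doped/undoped boundary moves in proportion
        # to the current flowing through the film.
        w += MU_V * R_ON / D * current * dt
        w = min(max(w, 0.0), D)  # the boundary cannot leave the film
    return w
```

With these assumed values, driving the device with a positive voltage lowers its resistance, leaving it at zero volts changes nothing, and a negative voltage raises the resistance again. The middle case is the non-volatile "memory" part of the name: the state only moves while charge is flowing.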

HP’s working memristors are capable of adopting either high or low-resistance states, which can be considered equivalent to the ‘on’ or ‘off’ states of conventional silicon transistors, where ‘1’ is the presence of the electron charge, and ‘0’ is the lack of charge. A certain positive voltage will flip the memristor to its high-resistance state, while a negative voltage of the same magnitude will flip it to its low-resistance state. These states are stable, and therefore allow the memristor to work as non-volatile memory that does not require an electrical current to maintain its state.
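Viewed purely as a storage element, this switching behaviour can be sketched as a one-bit cell. The class below is a hypothetical illustration: the resistance values, the switching and read voltages, and the choice to treat the low-resistance state as ‘1’ are all assumptions made for the example.

```python
from dataclasses import dataclass

R_LOW = 100.0      # ohms, assumed low-resistance state
R_HIGH = 16_000.0  # ohms, assumed high-resistance state
V_SWITCH = 1.0     # volts, assumed magnitude needed to flip the state
V_READ = 0.1       # volts, assumed read voltage, too small to flip the state


@dataclass
class MemristorCell:
    """A single non-volatile bit stored as a resistance state."""
    resistance: float = R_HIGH

    def apply(self, volts: float) -> None:
        """Positive switching voltage -> high resistance, negative -> low."""
        if volts >= V_SWITCH:
            self.resistance = R_HIGH
        elif volts <= -V_SWITCH:
            self.resistance = R_LOW
        # Anything smaller (including 0 V, i.e. power off) leaves the state alone.

    def read(self) -> int:
        """Sense the current at the read voltage; low resistance reads as 1."""
        current = V_READ / self.resistance
        threshold = V_READ / (R_LOW * R_HIGH) ** 0.5  # geometric midpoint
        return 1 if current > threshold else 0
```

Writing a bit means applying a pulse of at least the switching voltage, and reading uses a much smaller voltage so that the stored state is not disturbed. Nothing in the cell needs refreshing between the two.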

The memristor approach is very different from a type of storage developed by IBM, Intel and other companies, called “phase-change memory”. In phase-change memory, heat changes the phase or state of a glassy material from an amorphous to a crystalline state, which requires time and consumes lots of power, making it energy-inefficient and slow.

Because of their non-volatile nature, memristor-based systems can also be used to produce analog computing systems that function more like biological brains, as memristors work similarly to biological synapses. This is considered by many to be the holy grail of electronic technology.

A new kind of logic

Most silicon transistor circuits perform a full set of logic operations using combinations of NAND (not-and) gates. Previously, it was thought that memristors would not be able to perform a full set of logic operations. But it has now been found that a memristor can be made to transfer its state to other memristors, producing devices that can reprogram themselves in a manner that depends on the evaluation of other logic operations. Memristors can hence implement NAND for logical operations, but in a new way: with a combination of three memristors using a logical operation called “material implication”. For Boolean states p and q, material implication is “p implies q”: if p is true, then q must also be true, so the result is false only when p is true and q is false.

It is also possible to build an IMP (material implication) logic gate by using two memristors and combining them with a standard resistor. Adding a further memristor that performs a FALSE operation (it always returns false) delivers a complete set of logic operations. As the authors of the paper (published in the Proceedings of the National Academy of Sciences) put it, “the major lesson from this research is that when confronted with a new device, one needs to determine whether it has a natural basis for computation that is different from familiar paradigms.”
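To see why IMP plus FALSE is enough, it helps to build NAND from them, since NAND alone can express every other Boolean function. The sketch below works on plain Booleans rather than modelling any device physics. The function names are hypothetical, and it assumes that each IMP step overwrites the working value `s`, standing in for the third memristor.

```python
from itertools import product


def imp(p: bool, q: bool) -> bool:
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def nand_via_imp(p: bool, q: bool) -> bool:
    """NAND built from one FALSE and two IMP steps on a working value s."""
    s = False        # FALSE operation: clear the working memristor
    s = imp(q, s)    # s is now NOT q
    s = imp(p, s)    # s is now (NOT p) OR (NOT q), which is NAND(p, q)
    return s


if __name__ == "__main__":
    # Print the truth table for inspection.
    for p, q in product([False, True], repeat=2):
        print(p, q, imp(p, q), nand_via_imp(p, q))
```

The sequence uses three stored values (p, q and the working value s), which matches the three-memristor arrangement described above.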