Grokipedia vs Wikipedia: Key differences explained

AI-driven Grokipedia challenges human-edited Wikipedia’s open knowledge model

Grokipedia replaces editors with Grok LLM for real-time updates

Wikipedia’s transparency meets Grokipedia’s algorithmic control in truth battle

The launch of Grokipedia by Elon Musk’s xAI is more than just a new tech product; it’s an architectural challenge to the established model of online knowledge. It pits the decentralized, human-consensus model of Wikipedia against a proprietary, AI-driven synthesis engine.

For anyone who relies on the internet for quick, vetted information, understanding the core differences in how these platforms operate is crucial.

Content generation model: AI-led vs human-led

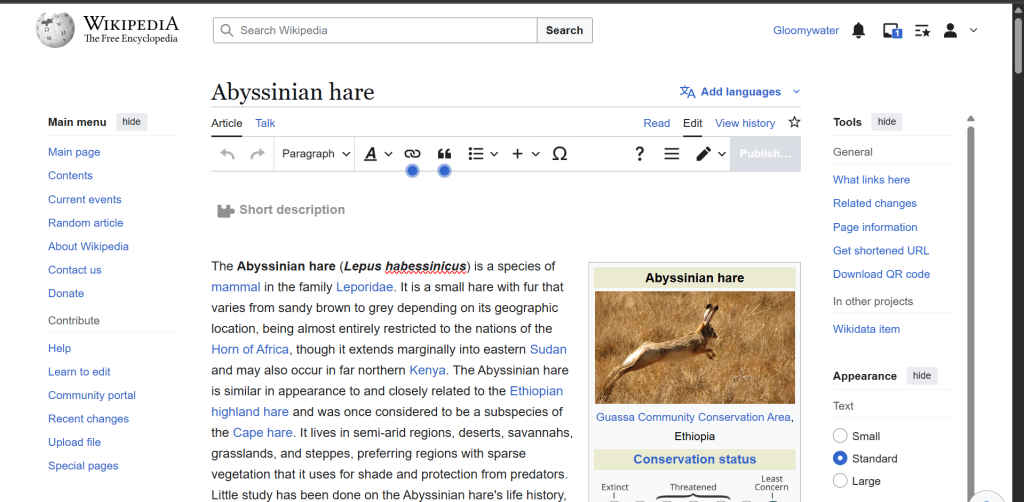

The most fundamental difference is who or what writes the articles. Wikipedia is the ultimate collaborative effort, with millions of volunteer human editors writing and refining articles in a process driven by debate, policy, and eventual consensus. It runs on the open-source MediaWiki software.

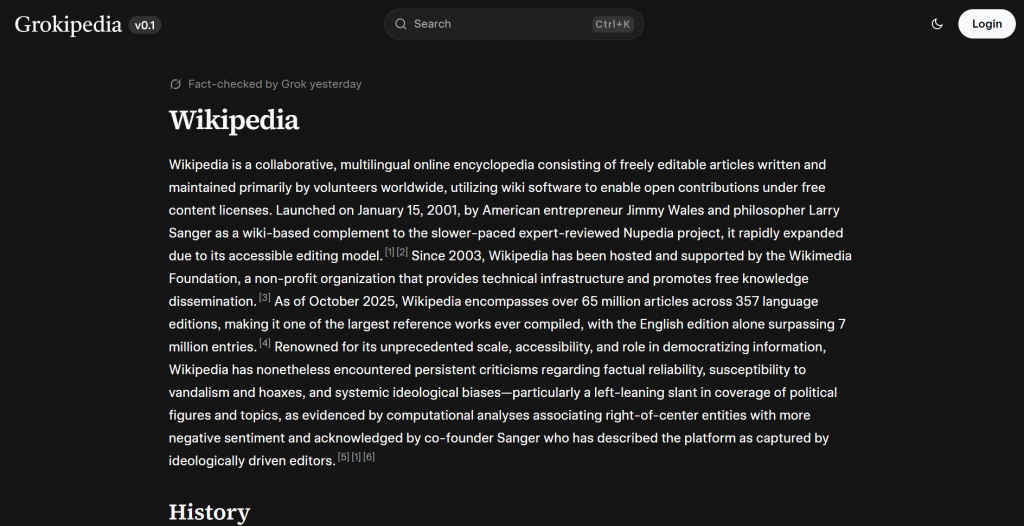

Grokipedia, by contrast, relies on Generative AI Synthesis. Its articles are primarily drafted, maintained, and corrected by the proprietary Grok Large Language Model (LLM). This AI is designed to read vast amounts of data, identify what is “true, partially true, false, or missing context,” and then automatically synthesize and rewrite the most accurate entry. The process is centralized and algorithmic, not collaborative.

Editing and oversight: Algorithm vs community

In a classic wiki model, anyone can be bold: if you see a typo or an omission on Wikipedia, you can log in and fix it directly. This “anyone can edit” ethos is Wikipedia’s core safety net and its greatest source of contention.

Grokipedia entirely eliminates this direct peer-to-peer editing. Users cannot manually edit an article. Instead, they can only submit corrections or flag inaccuracies through a reporting system (often compared to X’s Community Notes). The Grok AI holds the final editorial authority, processing the user feedback against its source analysis before autonomously deciding whether to rewrite the content. This shifts oversight from a transparent human community to an opaque algorithmic command chain.
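The contrast between the two editing models can be sketched as a toy example. This is an illustration only and does not reflect how either platform is actually implemented: the class names `OpenArticle` and `GatedArticle`, and the `reviewer` callback standing in for an automated decision-maker, are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class OpenArticle:
    """Wiki-style model: any edit applies immediately and is logged."""
    text: str
    history: List[str] = field(default_factory=list)

    def edit(self, user: str, new_text: str) -> None:
        # Record who changed what before applying the change.
        self.history.append(f"{user}: {self.text!r} -> {new_text!r}")
        self.text = new_text


@dataclass
class GatedArticle:
    """Feedback-style model: users only suggest; a reviewer decides."""
    text: str
    # Hypothetical stand-in for the automated reviewer:
    # (current_text, suggestion) -> accept or reject.
    reviewer: Callable[[str, str], bool]

    def suggest(self, suggestion: str) -> bool:
        accepted = self.reviewer(self.text, suggestion)
        if accepted:
            self.text = suggestion
        return accepted
```

In the first model the user's change lands directly and leaves a public trail; in the second, the same correction takes effect only if the reviewer accepts it, and the reasoning behind that decision is whatever the reviewer chooses to expose.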

Source data and updating: Real time vs vetted

Wikipedia maintains a standard of Verifiability that requires every claim to be supported by published, reliable, secondary sources (like academic journals or major news outlets). This ensures quality but makes the platform inherently slow to react to breaking events.

Grokipedia boasts Real-Time Integration. The Grok AI is connected to live data feeds, notably the stream of posts and information from X (formerly Twitter) and the general internet. In theory, this lets the platform generate and update articles in near real time as events unfold. That is a significant speed advantage, but it raises questions about how primary social media data is vetted.

Transparency: Black box vs open history

For two platforms that aim to be sources of objective truth, their approaches to transparency are diametrically opposed. On Wikipedia, every single change is logged, timestamped, and publicly attributed in the Full Revision History. The entire debate leading to the final article text is preserved on the “Talk Page” for all to see.
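Because Wikipedia runs on MediaWiki, this revision log can also be read programmatically through the MediaWiki Action API. The following is a minimal sketch, assuming the English Wikipedia endpoint and the `formatversion=2` JSON layout; the helper names and the User-Agent string are placeholders chosen for illustration.

```python
import json
from typing import List
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://en.wikipedia.org/w/api.php"


def revision_query_url(title: str, limit: int = 5) -> str:
    """Build a query URL for the most recent revisions of one page."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",
        "rvlimit": limit,
        "format": "json",
        "formatversion": 2,
    }
    return f"{API_URL}?{urlencode(params)}"


def summarize_revisions(payload: dict) -> List[str]:
    """Turn an API response into 'timestamp  user  comment' lines."""
    lines = []
    for page in payload.get("query", {}).get("pages", []):
        for rev in page.get("revisions", []):
            # Fields can be absent (for example, a hidden username).
            lines.append(
                f'{rev.get("timestamp", "?")}  '
                f'{rev.get("user", "?")}  '
                f'{rev.get("comment", "")}'
            )
    return lines


def fetch_revisions(title: str, limit: int = 5) -> List[str]:
    """Fetch and summarize recent revisions (requires network access)."""
    request = Request(
        revision_query_url(title, limit),
        # Placeholder User-Agent; use one that identifies your own tool.
        headers={"User-Agent": "revision-history-sketch/0.1 (example)"},
    )
    with urlopen(request, timeout=10) as response:
        return summarize_revisions(json.load(response))
```

Calling `fetch_revisions("Wikipedia")` would list who changed the article, when, and with what edit summary, which is the kind of audit trail the comparison here turns on.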

On Grokipedia, while the content is available, the internal logic of the AI is a proprietary black box. There is no public edit history showing why the Grok LLM prioritized one source over another or how it decided to rewrite a paragraph. This shifts public trust from verifiable human accountability to faith in the machine’s “maximum truth-seeking” design.

Risk focus: Hallucination vs bias

Every platform has flaws, but Grokipedia and Wikipedia face different existential threats. The primary technical risk for Grokipedia is Algorithmic Hallucination. As an LLM-driven platform, it is prone to generating sophisticated, coherent text that is factually incorrect, making it difficult for a casual user to spot a lie created at machine speed.

The primary risk for Wikipedia is Human Editorial Bias. Its weaknesses are concentrated in the human layer: ideological skirmishes, slow dispute resolution, and systemic bias inherited from the dominant demographics and established editorial traditions of its community.

Key differences

| Aspect | Grokipedia (xAI) | Wikipedia (Wikimedia Foundation) |
| --- | --- | --- |
| Content Creation | Generative AI (Grok LLM) | Human Volunteer Editors |
| Editorial Authority | Centralized to Grok Algorithm | Decentralized Community Consensus |
| Data Source | Real-Time, including X Data Stream | Vetted, Published Secondary Sources |
| Editing Rights | Users Submit Feedback (Indirect) | Anyone Edits Directly (Open) |
| Primary Risk | Algorithmic Hallucination | Human Editorial Bias/Stagnation |

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack.