Google Bard should address these 5 concerns about generative AI chatbots like ChatGPT

Google announced Bard on February 6, 2023.

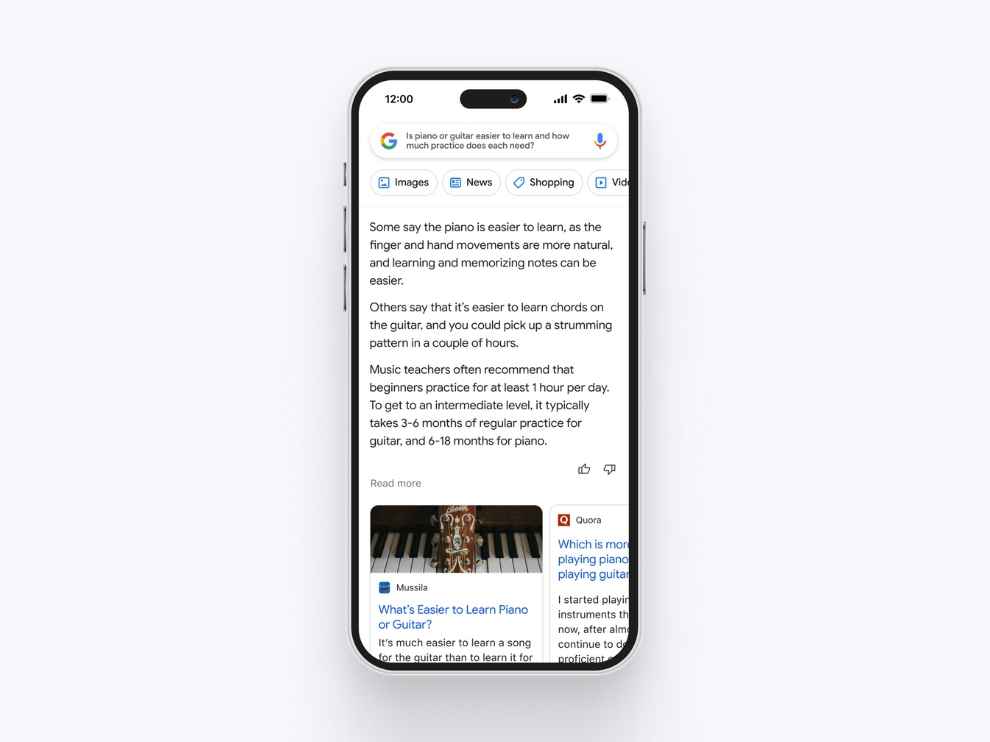

Bard is a conversational AI service that could be integrated into Google Search to make it a far stronger competitor to ChatGPT and similar tools.

However, Google should first address the big problems plaguing ChatGPT and other generative AI chatbots.

Google Bard is finally official. Google announced Bard as its "code red" response to OpenAI's ChatGPT. However, to come out on top, the Google chatbot has to address the various ethical, security, and financial concerns associated with ChatGPT and other generative AI chatbots.

But first, let’s answer some basic questions.

What is Google Bard and how is it related to Google LaMDA?

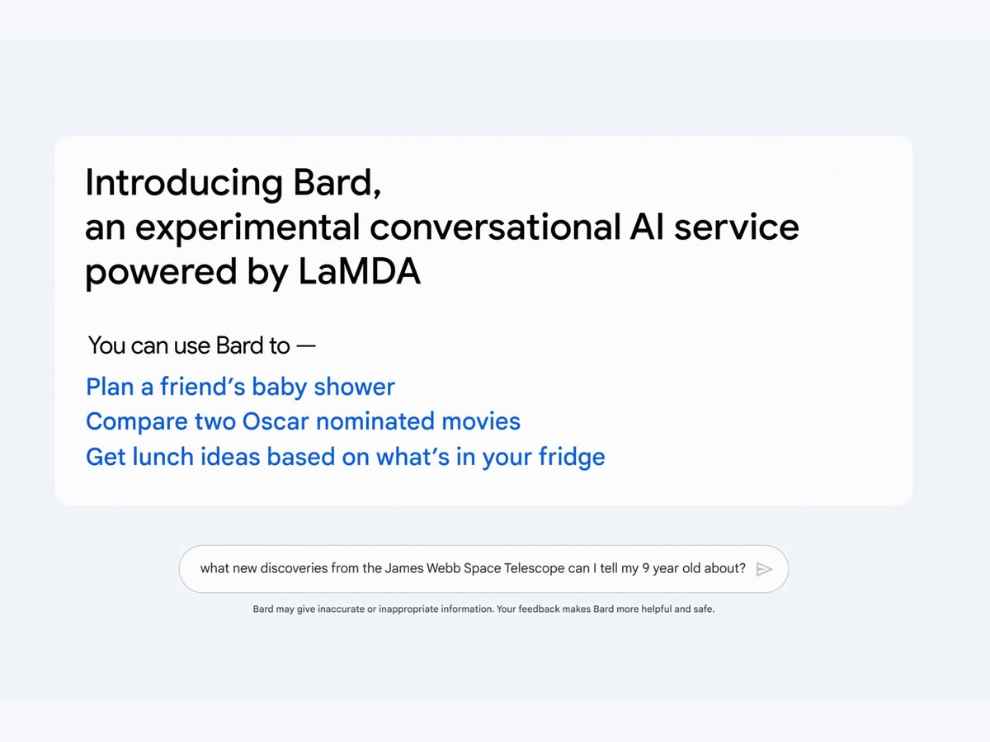

Google Bard is "an experimental conversational AI service, powered by LaMDA," which the company announced on February 6, 2023, as a rival to ChatGPT. In its blog post, Google says the chatbot is currently available only to a group of trusted testers but will be rolled out to a wider audience in the coming weeks.

For those unaware, LaMDA (Language Model for Dialogue Applications) is a large language model that Google unveiled in 2021. It is claimed to be capable of holding human-like, free-flowing conversations that can jump between unrelated topics, so convincingly that a Google engineer came to believe it was sentient.

These generative AI bots do not fetch information from the internet in real time. Instead, they work probabilistically, based on the data they were trained on. LaMDA was fed a massive corpus of text and trained to pick up on the nuances of dialogue using metrics such as quality (sensibleness, specificity, and interestingness), safety, and groundedness (verifiability). It thus supposedly understands the context of a conversation and the relationships between words well enough to predict what should come next.
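
To make "predicting what should come next" a little more concrete, here is a deliberately tiny sketch in Python. It is a toy under stated assumptions, not how LaMDA actually works: a bigram model that simply counts which word follows which in a small, made-up training text and turns those counts into probabilities. The corpus and the function names (train_bigrams, next_word_probs, predict_next) are hypothetical. Real large language models use neural networks over tokens and much longer context, but the basic idea of scoring possible continuations by probabilities learned from existing data, rather than looking anything up online, is similar in spirit.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real models are trained on billions of words.
CORPUS = (
    "google announced bard . bard is a chatbot . "
    "bard is experimental . chatgpt is a chatbot ."
)


def train_bigrams(text: str) -> dict:
    """Count how often each word is immediately followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(follows: dict, word: str) -> dict:
    """Turn the follow-up counts for `word` into probabilities."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # word never seen in training, so nothing to predict
    return {w: c / total for w, c in counts.items()}


def predict_next(follows: dict, word: str):
    """Greedily pick the most probable next word, or None if the word is unknown."""
    probs = next_word_probs(follows, word)
    return max(probs, key=probs.get) if probs else None


model = train_bigrams(CORPUS)

if __name__ == "__main__":
    print(next_word_probs(model, "is"))  # "a" followed "is" twice, "experimental" once
    print(predict_next(model, "is"))
```

Because the prediction comes only from counts in the training text, a model like this has no way to check whether a continuation is true or up to date; it only knows what was frequent in the data it saw.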

Note that Google is using a lightweight version of LaMDA to power Bard, because it reportedly requires less computing power and should help the company scale to more users. Google also expects that feedback from external testers (the general public, we suppose) will help its internal raters ensure Bard meets the quality, safety, and groundedness metrics mentioned above.

We expect to hear more about Google Bard and its integration with Google Search, Maps, and other Google apps and services at the February 8 event, which will be live-streamed on YouTube at 7:00 pm IST.

We will be tuning in to the event and keeping close tabs on everything Google does in the AI space. But, putting away the rose-tinted glasses for a minute, let's get to the reason you are here: the concerns about generative AI services like Bard and ChatGPT.

5 concerns about generative chatbots like OpenAI's ChatGPT that Google Bard should address

1. Confidently incorrect responses

OpenAI admits that "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers." Its knowledge is also dated: it was trained on data only up to 2021, so it lacks information on events or developments after that. And because it is not human, has no real understanding of the world, and does not pull information from the web or an expert in real time, there is no guarantee that its confident answers are factually accurate.

2. Biases

Since all these generative AI services are trained on human-written text in the form of websites, articles, books, etc., the inherent biases of the authors unintentionally seep into the AI systems. ChatGPT itself agrees that AI chatbots carry the "existing biases and discriminatory attitudes in society," including foul language, stereotypes, and misinformation. This could be exacerbated by carelessness on the part of developers and testers, as well as by the algorithms in use.

3. Harm to the quality of learning and work

If students and employees can get an AI chatbot to proofread, or worse, write their assignments, reports, and other writing tasks for schools, colleges, and workplaces, their motivation to learn anything or to work on their own could decline over time.

4. Cybercrimes

We have already discussed in detail how cybercriminals are using these chatbots to cook up malicious code and programs.

5. Unfair competition and antitrust

All AI chatbots rely on massive computing power and cloud infrastructure. Not every AI startup can afford that, so many end up in long, complex, and lopsided agreements with the tech giants that dominate the market. These cloud partners could one day become competitors or even swallow the startups altogether.

Antitrust regulators around the world must therefore keep a close eye on self-preferential and monopolistic moves by the big players through investments and M&As such as Google-Anthropic, Amazon-Stability AI, and Microsoft-OpenAI.

The Silicon Valley giant had long been cautious about publicly releasing its AI smarts, citing "reputational risks," since Search is its biggest driver of revenue and mindshare. Let's see how the brains at Google address all these concerns.

G. S. Vasan

Vasan is a word weaver and tech junkie who is currently geeking out as a news writer at Digit.