Facebook’s secret content deletion rules revealed in documents

A German broadsheet newspaper has obtained confidential and highly controversial Facebook documents that set out the company’s rules for content deletion. Is Facebook playing us all?

Saying “migrants are dirty” is allowed, while “migrants are dirt” isn’t!

With this, Facebook finds itself in yet another pickle this year. The German newspaper Süddeutsche Zeitung (SZ) claims to have accessed internal documents from the social network, which has millions of users across Facebook, Instagram, WhatsApp and Messenger. For a while now, Facebook has faced the wrath of lawmakers in Germany and the European Union over the deletion of hateful content from its platform. Until now, the rules Facebook uses to delete such content had never been made public, and those are the very documents SZ acquired. They paint a bleak picture of the social media giant’s grip on content.

According to the leaked documents, Facebook has a department that sets the rules for content deletion. These rules are then enforced by third-party service providers, whose staff go through thousands of posts and videos to delete any content that includes hate speech. In the documents, the company says, “It (hate speech) creates an environment of intimidation and exclusion that limits people's willingness to share freely and represent themselves authentically.” While this may seem like a noble sentiment, Facebook’s guidelines for deleting such content are extremely blurry and shed light on how the company presides over content on its platform.

For instance, Facebook has a list of protected categories and subcategories, such as sex, religious affiliation, national origin, gender identity, race, ethnicity, sexual orientation, disability, serious illness, age, employment status, continent of origin, social status, appearance, political affiliation and more. It also covers followers of religions such as Islam, Catholicism and Scientology. However, while individuals belonging to a religious group are protected, attacks on the religion itself are allowed. The same rule applies to countries. For example, saying “India is a dirty nation” is allowed, while saying “Indians are dirty people” is not.

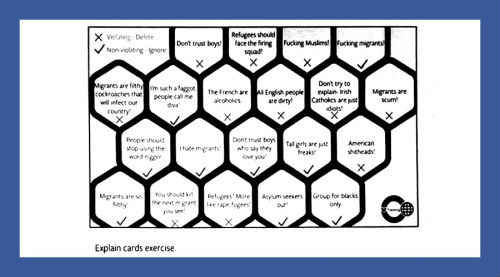

An image from the internal documents accessed by SZ

The results are even more alarming when it comes to categories like migrants. As SZ reports, “Saying ‘fucking Muslims’ is not allowed, as religious affiliation is a protected category. However, the sentence ‘fucking migrants’ is allowed, as migrants are only a ‘quasi protected category’ – a special form that was introduced after complaints were made in Germany. This rule states that promoting hate against migrants is allowed under certain circumstances: statements such as ‘migrants are dirty’ are allowed, while ‘migrants are dirt’ isn’t.”

The documents go on to suggest that while Facebook frowns upon bullying, it has no restrictive rules on self-destructive behaviour. Pictures of anything from tattoos to open wounds, extreme anorexia and self-torture are therefore allowed, as long as they don’t carry encouraging captions or statements such as “try this at home”. According to Facebook’s documents, such content should not be deleted so that the user’s friends can recognise it as a “cry for help”.

There are also different rules for public figures and ordinary Facebook users. Certain derogatory pictures (for example, of urinating or vomiting) are allowed when posted by public figures but not when posted by ‘normal’ users. According to SZ, Facebook has outsourced content deletion in Germany to a company called Arvato. Employees of the company told the newspaper that workers at the bottom of the ladder have to go through more than 2,000 posts a day, while those at the top have about 8 seconds to decide whether a video should be removed from the social network.

Never mind the highly confusing rules on content moderation: Facebook’s grip on what we see, write and experience raises many disturbing questions. Are more than a billion of us being led by a social media company? What started as a fun way to share updates with friends is now our main source of news and information. With Facebook’s algorithms designed to show us posts similar to the ones we click on, are we being subjected to tunnel vision without our knowledge? Let us know your thoughts on the subject in the comments section below.