ElevenLabs AI voice technology faces scrutiny for alleged exploitation in influence campaigns

The rise of artificial intelligence has been nothing short of revolutionary, but its rapid adoption has also raised critical ethical concerns. ElevenLabs, a company celebrated for its advanced AI voice-generation tools, has recently found itself in the eye of a storm. Reports suggest that its technology may have been exploited in a Russian influence operation, sparking debates about the responsibility of AI developers in preventing misuse.

The technology behind ElevenLabs

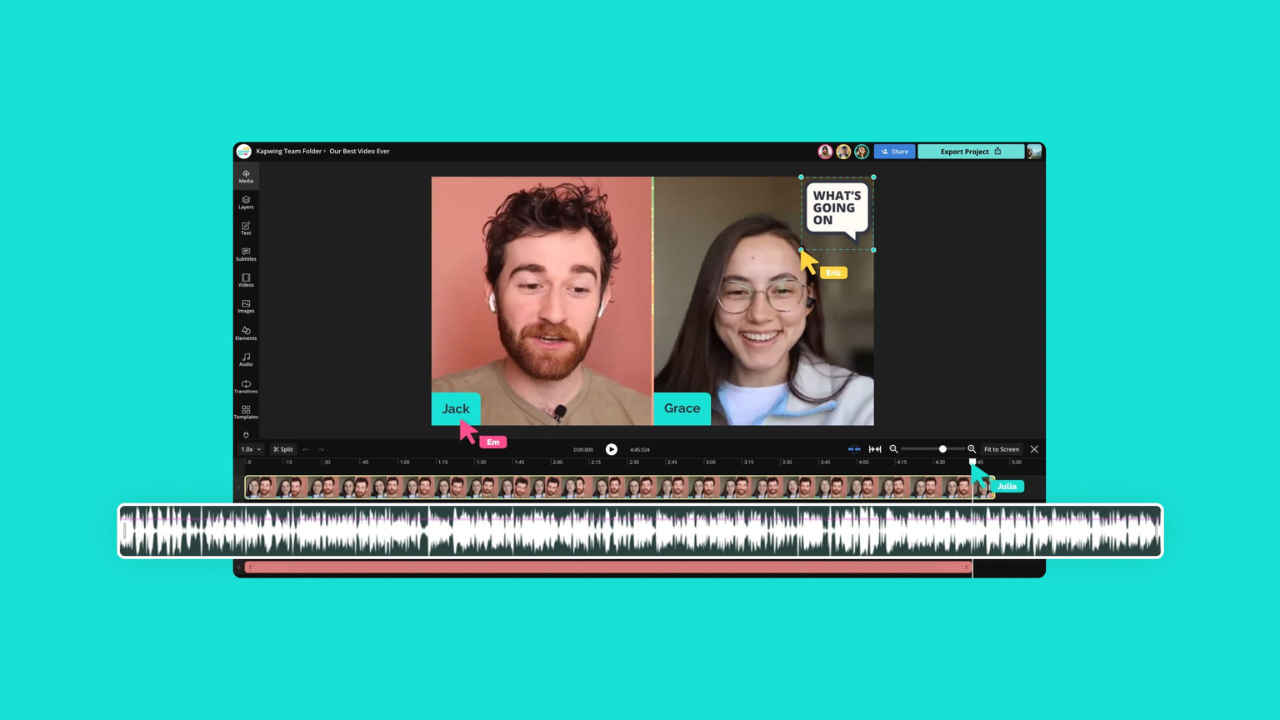

ElevenLabs is at the forefront of AI voice synthesis, offering tools capable of generating hyper-realistic human voices from simple text prompts. Earlier this year, the company introduced a feature called Voice Design, which allows users to create a completely unique voice from scratch. This innovation enables the generation of personalized voices tailored for applications such as audiobooks, virtual assistants, and gaming characters. The system’s ability to mimic accents, emotions, and tones with incredible accuracy has made it extremely popular among creators and developers.

Yet, with great power comes great responsibility, or in this case, vulnerability. The same tools that enable creative possibilities can also be misused for nefarious purposes, as recent events have demonstrated.

Allegations of misuse in Russian campaigns

Reports indicate that the platform’s realistic voice capabilities were exploited in a Russian influence operation aimed at spreading misinformation. This campaign allegedly involved crafting fake audio messages to manipulate public opinion and disseminate propaganda. The use of AI-generated voices added a layer of authenticity to the fabricated messages, making it harder for audiences to discern fact from fiction.

While ElevenLabs has not officially confirmed these allegations, the reports underscore the potential for such technology to be weaponized. This incident has reignited discussions about the ethical implications of AI and the measures companies must take to safeguard their tools from misuse.

Balancing innovation and security

ElevenLabs’ vision has always been to democratise voice technology, making it accessible to anyone with a creative idea. Its tools are widely used by audiobook narrators, content creators, and educators to enhance their work. However, the alleged exploitation of these tools for misinformation highlights the fine line between accessibility and security.

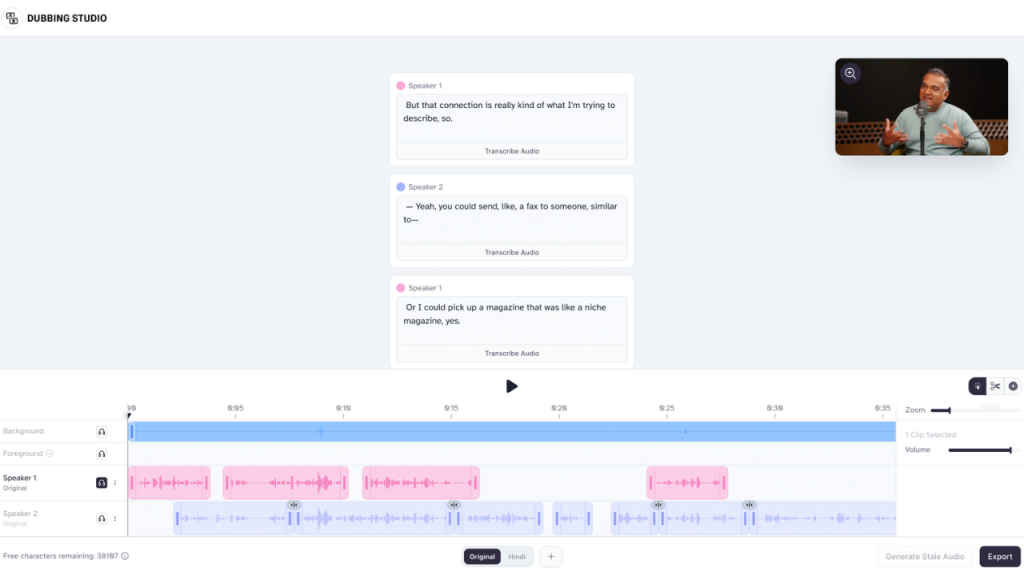

In response to growing concerns, ElevenLabs has taken steps to mitigate misuse. The company is strengthening its security protocols and collaborating with stakeholders, and it now requires users to verify their identities before accessing advanced features like Voice Design. It has also developed a detection tool capable of identifying audio generated by its AI.

These steps aim to balance user accessibility with safeguards against unethical applications. However, experts argue that industry-wide guidelines are essential to curb malicious applications of such technology.

The broader implications

The controversy surrounding ElevenLabs is not an isolated incident but a reflection of broader challenges in the AI industry. AI-driven tools like ElevenLabs’ voice generator are increasingly being used for personal and professional tasks, such as creating narrations for YouTube videos or generating custom voiceovers for podcasts. These legitimate use cases highlight the transformative potential of the technology.

However, as tools become more sophisticated, their potential for misuse grows with them. Deepfake technology, for example, has already been used to create convincing but false audio and video recordings. The stakes are even higher when such tools fall into the hands of bad actors seeking to manipulate public opinion or spread misinformation.

In the absence of comprehensive legislation, companies like ElevenLabs are stepping up to self-regulate. Still, the road ahead is challenging. Governments and organizations must work together to create robust frameworks that protect users while fostering innovation. Initiatives like public awareness campaigns and AI literacy programs can also help mitigate risks by empowering individuals to critically evaluate digital content.

What can be done?

Industry experts suggest a multi-pronged approach to addressing these challenges. Firstly, companies like ElevenLabs must continue to innovate on security features. Identity verification, watermarking AI-generated content, and real-time detection algorithms are crucial in preventing misuse. Secondly, regulatory frameworks need to catch up with technological advancements. Governments and international bodies should collaborate to establish guidelines for the ethical use of AI.

Education also plays a pivotal role. Users and consumers must be made aware of the existence and potential misuse of AI-generated content. Encouraging media literacy can help individuals critically assess the authenticity of the information they encounter.

A cautionary tale for the AI industry

The alleged misuse of ElevenLabs’ technology serves as a cautionary tale for the AI industry at large. It highlights the unintended consequences of innovation and the importance of proactive measures to prevent abuse. While ElevenLabs’ tools offer incredible possibilities for creative expression and productivity, their potential for misuse cannot be ignored.

For ElevenLabs, this controversy is a stark reminder of the challenges that come with leading in an emerging field. The company’s response to this crisis will likely set a precedent for other AI firms navigating similar issues.

The road ahead

Despite the controversy, the future of AI voice technology remains bright. Tools like ElevenLabs’ voice generator have the potential to revolutionize several industries, from entertainment to education. However, realising this potential will require a delicate balance between fostering innovation and ensuring ethical use.

As the debate over AI ethics continues, it is clear that the responsibility for preventing misuse lies not just with developers but with society as a whole. That may sound like a lofty, utopian goal, but collaboration between tech companies, regulators, and the public will be essential in shaping the future of AI.

In the case of ElevenLabs, the coming months will be critical. How the company addresses these allegations and strengthens its safeguards will not only impact its reputation but also influence the trajectory of AI voice technology as a whole.

Deepak Singh

Deepak is Editor at Digit. He is passionate about technology and has been keeping an eye on emerging technology trends for nearly a decade. When he is not working, he likes to read and to spend quality time with his family.