The origins of Artificial Intelligence

The proliferation of artificial intelligence (AI) in the 21st century might suggest that its history does not reach far into the past. However, the concept of mechanized 'humans' is often traced back to around 380 BC, and over the centuries that followed numerous mathematicians, theologians, professors, philosophers, and authors pondered calculating machines and numeral systems that could 'think' like humans.

Today, we know AI as the broad branch of computer science concerned with 'smart' or intelligent machines capable of performing cognitive tasks that typically require human intelligence. It is based on the principle that human thought processes can be both replicated and mechanized. Machine learning (ML) is a popular subset of AI in which machines parse data, learn from that data, and then apply what they have learned to make informed decisions. In this article, we delve into the origins of AI and how it has grown through the years.

The early days

As mentioned above, the history of AI dates all the way back to antiquity, when intellectuals mulled over the idea of mechanical 'men' and automatons that could someday exist in some fashion. Such beings appeared in Greek myths, with examples including the golden robots of Hephaestus and Pygmalion's Galatea. Fast forward to the early 1700s: Jonathan Swift's renowned novel 'Gulliver's Travels' included a device termed 'the engine', which was akin to today's computers. The device's intended purpose was to improve knowledge and operations by lending its assistance to unskilled people. In 1872, the author Samuel Butler wrote 'Erewhon', which toyed with the idea of machines possessing consciousness in the future.

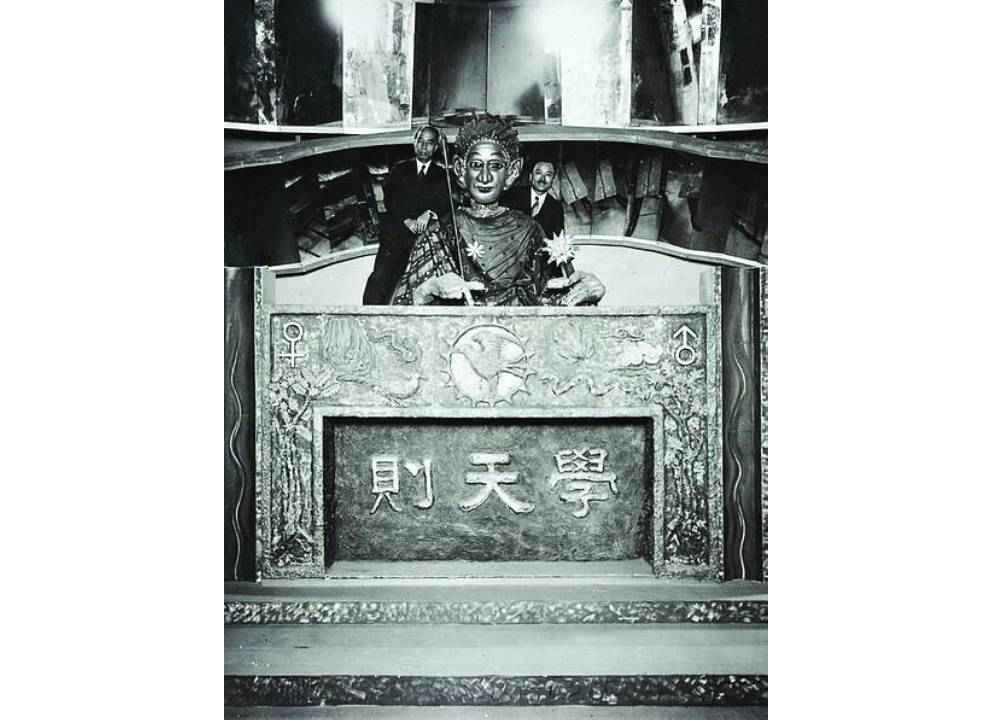

Inspired by these early thinkers, later philosophers, mathematicians, and logicians set out to develop mechanized 'humans'. In 1929, Makoto Nishimura, a Japanese biologist and professor, built the first robot in Japan, called 'Gakutensoku', which translates to 'learning from the laws of nature'. The name implied that the robot could derive knowledge from people and nature.

These advances led to the invention of the Atanasoff-Berry Computer (ABC), an early electronic digital computer, by John Vincent Atanasoff, a physicist and inventor, alongside his assistant Clifford Berry in 1939. The ABC was not programmable in the modern sense, but it could solve up to 29 linear equations simultaneously, and it further inspired scientists to create an 'electronic brain', or an artificially intelligent non-living entity.

The decade that truly started it all

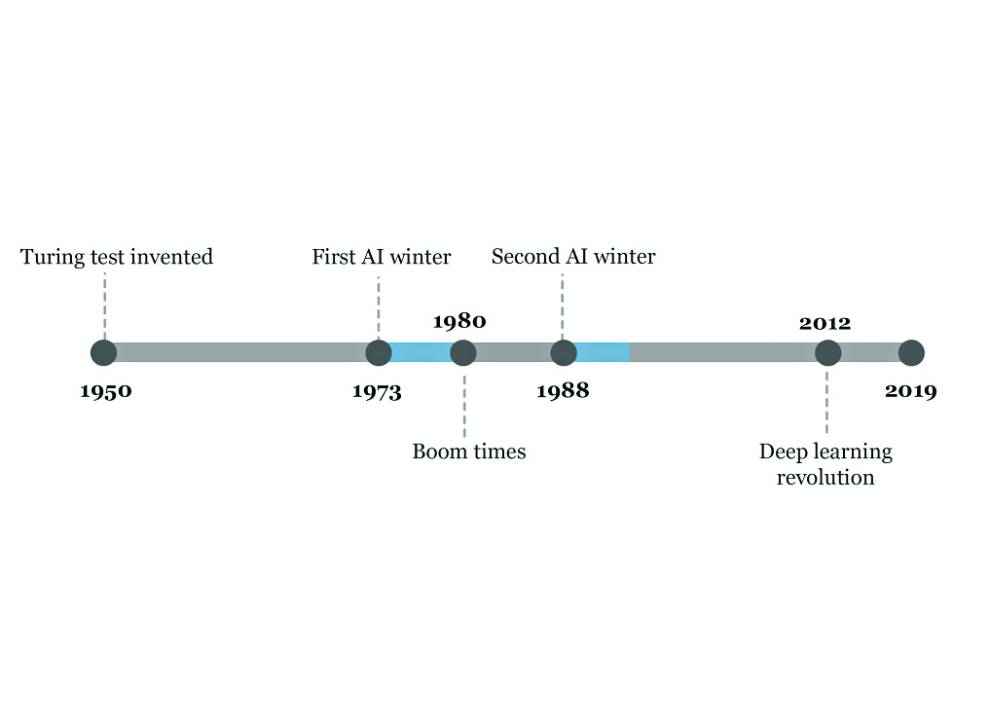

The 1950s saw AI kick into high gear, as many advances in the field came to fruition during this decade. The first major milestone came when Alan Turing, a famed mathematician, proposed 'The Imitation Game'. According to Turing, a machine that could converse with human beings without them realizing that it was a machine would win this 'imitation game' and could be considered 'intelligent'. This proposal went on to become 'The Turing Test', which became a measure of machine (artificial) intelligence.

In 1955, Herbert Simon (economist), Allen Newell (researcher), and Cliff Shaw (programmer) jointly authored 'Logic Theorist', which is widely regarded as the very first AI computer program. Funded by the Research and Development (RAND) Corporation, it was designed to imitate the problem-solving skills of humans.

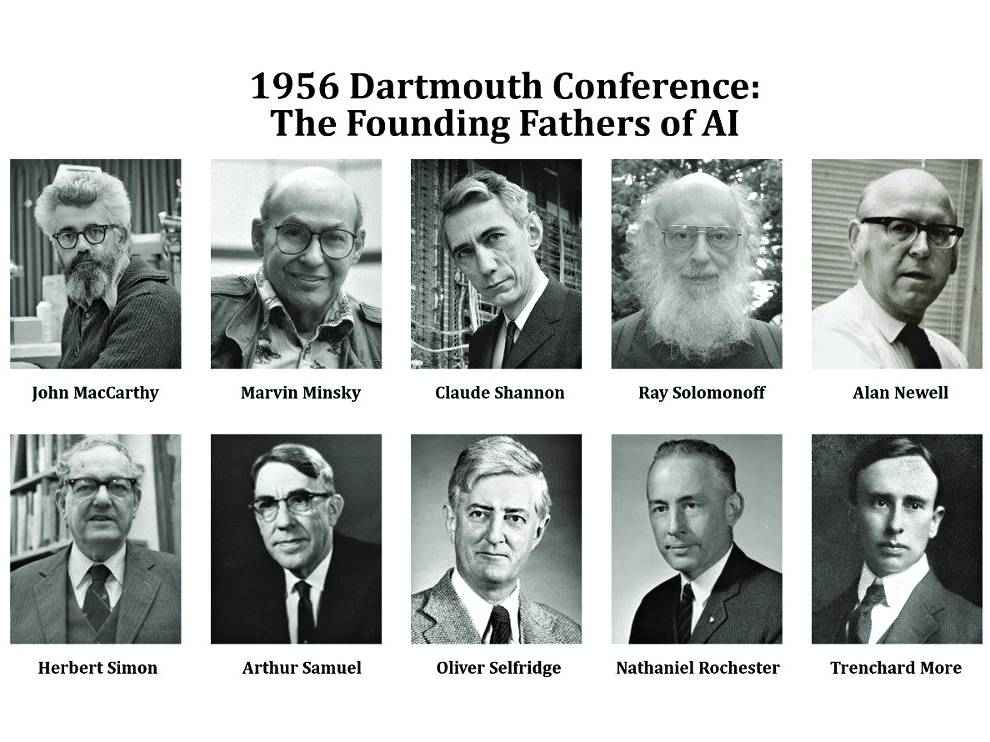

However, arguably the greatest success of this decade came when computer scientist John McCarthy organized the Dartmouth Summer Research Project on Artificial Intelligence at Dartmouth College in 1956. McCarthy coined the term 'Artificial Intelligence' for this conference. 'Logic Theorist', mentioned above, was unveiled at the Dartmouth Conference. Following this, several research centers popped up across the US to explore AI and its potential.

In 1958, McCarthy also developed Lisp, which became one of the most popular programming languages for AI research and is still in use today. A year later, Arthur Samuel, a computer scientist, coined the term 'machine learning' while describing how a computer could be programmed to play a game of checkers better than the person who programmed it.

Rapid advancements

From 1957 all the way through the 1960s, AI research and the innovations around it flourished. Computers were now faster and could store more information. They also became cheaper and more easily accessible. Innovators in the field of ML created better algorithms and grew better at matching the right algorithm to the right problem. Thanks to the influx of capital into the field, programs such as SAINT, a heuristic program for symbolic integration in calculus, and STUDENT, an AI program that solved algebra word problems, became more prevalent. Newell and Simon's 'General Problem Solver' and Joseph Weizenbaum's 'ELIZA' showed promise towards the goals of machine problem-solving and the interpretation of spoken language.

The government seemed to be especially interested in machines that could transcribe and translate spoken languages, as well as those capable of high-throughput data processing. Films such as 2001: A Space Odyssey and Star Wars began featuring sentient machines, HAL and C-3PO respectively, which were largely based on the concept of AI. In 1970, Marvin Minsky, an American cognitive scientist, confidently told Life magazine, "(In) three to eight years we will have a machine with the general intelligence of an average human being". However, even though the basic proof of concept was present, AI still had a long way to go before natural language processing (NLP), abstract thinking, and self-recognition in machines could be perfected, or even made usable on a mass scale.

Obstacles leading to 'AI Winters'

Once the fever around AI started to subside, the obstacles in the field began to reveal themselves. Computer scientists were finding it increasingly difficult to instill human-like intelligence in machines. The most prominent obstacle was the lack of computational power. Computers at the time (around the 1980s) were not advanced enough to store and process the large volumes of data required to bring AI applications such as vision learning to fruition. As governments' and corporations' patience dwindled, so did the funding. As a result, from the mid-70s to the mid-90s, there was an acute shortage of funding for AI research. These years were termed the 'AI Winters'.

The AI project in Britain was partially revived by the government in the 1980s to compete with Japanese AI innovation in the field of expert systems, which mimicked the decision-making processes of human experts. Additionally, John Hopfield (scientist) and David Rumelhart (psychologist) popularized 'deep learning' techniques during this period, which allowed computers to learn from experience. However, the ambitious goals of this phase weren't met, and the field experienced yet another 'winter' from approximately 1988 to 1993, which coincided with the collapse of the market for specialized AI hardware such as Lisp machines.

The revival of AI

Despite the lack of government funding and public hype, AI research and innovation continued. In the 1990s, American corporations' interest in AI applications was revitalized. In 1995, Richard Wallace, a computer scientist, developed the AI chatbot ALICE (Artificial Linguistic Internet Computer Entity), largely inspired by Weizenbaum's ELIZA. The difference was that ALICE also drew on natural language sample datasets.

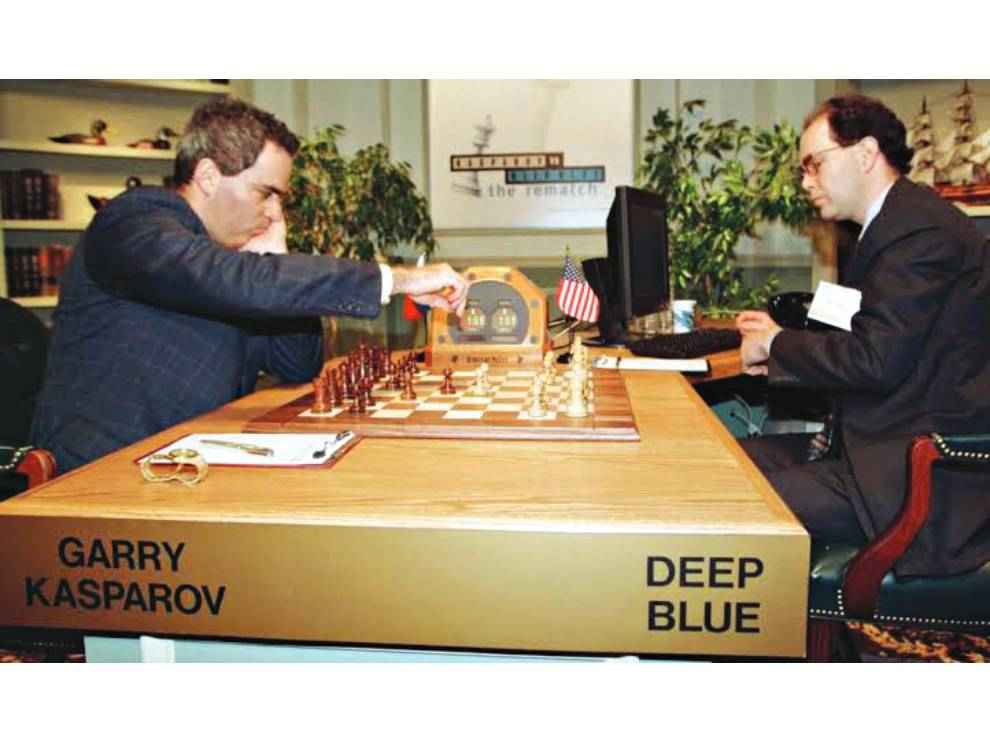

The most celebrated development of this decade came in 1997, when 'Deep Blue', a chess-playing computer built by IBM, beat the reigning world chess champion and grandmaster Garry Kasparov. The match was highly publicized, and this pushed AI into the limelight. AI was also becoming more capable of handling NLP and of recognizing and displaying human-like emotions.

Even though AI funding saw a slight setback in the early 2000s due to the bursting of the dot-com bubble and issues surrounding Y2K, ML kept going strong owing to improvements in computer hardware. Governments and corporations were successfully using ML methods in narrow domains at this time.

The fundamental limit of computer storage, which had held AI back 30 years earlier, was no longer an issue. By this time, the memory and speed of computers had caught up with, and in some cases even surpassed, the needs of AI applications. Computational power had been the primary obstacle, and it was becoming less pressing in the 2000s. Today, the issue has been overcome for the most part.

This period saw innovations such as Kismet, a robot that could recognize emotions and express them on its face (2000); ASIMO by Honda, an AI humanoid robot (2000); iRobot's Roomba, an autonomous robot vacuum that can avoid obstacles (2002); NASA's robotic exploration rovers, Spirit and Opportunity, which navigated the surface of Mars without human intervention (2004); and more. In 2007, Fei-Fei Li (computer science professor) and her colleagues created ImageNet, a database of annotated images intended to aid research into object recognition software. Google was secretly developing a driverless car at the end of this decade, which passed Nevada's self-driving test in 2012.

AI in the 2010s and the possible future

The 2010s were an extremely significant decade for AI innovation. AI became embedded in our day-to-day lives more than ever, and the devices we carry around every day, such as our smartphones, tablets, and smartwatches, began to have AI built into them. With the rise of 'big data', collecting and processing large volumes of data became too cumbersome for humans. AI has been of huge assistance in this regard, and it began to be used fruitfully in industries such as banking, marketing, and technology.

Even though algorithms did not improve substantially, big data and massive computing power allowed AI to learn more through brute force. Tech giants such as Google, Amazon, Baidu, and others began storing vast quantities of data for the very first time. They leveraged ML to gain massive commercial advantages by processing user data to understand consumer behavior and tailoring their marketing and recommendations to these observations. NLP and other AI applications also became prevalent, and ML became embedded in almost all the online services we use. Voice assistants such as Siri, Google Assistant, Amazon Alexa, and more used NLP to interpret requests, answer questions, and make recommendations to the human user. Anthropomorphized to create a better user experience, voice assistants began offering hyper-individualized experiences.

So, what's the future of AI going to look like? Language processing in AI still has a long way to go, and capabilities such as Google Assistant making reservations for you should become more commonplace. Driverless cars are yet another avenue that is constantly seeing developments and obstacles alike. However, the next decade could see them proliferate in a handful of cities in developed countries. The long-term goal seems to be creating machines that can surpass human cognitive abilities in almost every task. This will likely take much more than a decade, and ethical concerns regarding AI could serve as further obstacles. Conversations and regulations regarding machine policy and ethics should steadily increase. However, one thing is for sure: AI is not going anywhere, and with time, its applications will only mushroom.

Dhriti Datta

Perpetually sporting a death stare, this one can be seen tinkering around with her smartphone which she holds more dear than life itself and stuffing her face with copious amounts of bacon.