Demystifying smartphone AI

In the smartphone ecosystem, AI is everywhere. In the chipsets, in the camera, the interface, the apps and even in ringtones and charging. What exactly are the technologies behind these features? Let's find out.

Now that spec-sheet wars are over, smartphone companies have shifted focus to the experience of using a phone. Software is the new battleground and is one of the few ways of differentiating oneself, now that hardware is the same in almost every phone in its respective price segment. The software of a phone includes the user interface, something that phone makers have consistently attempted to reinvent. But more than that, it’s the features that make a phone smarter than the rest.

These days, smartphone companies love to call these differentiating features ‘Artificial Intelligence’. AI is the latest buzzword, a catchall phrase that can mean anything from virtual voice assistants to battery-saving trickery.

As a term, Artificial Intelligence has seen a resurgence in the past two years. We are increasingly being told that AI is going to be life-changing and ground-breaking. As a technology, it is poised to disrupt the underlying framework of our society, from automated workplaces to self-driving cars. But those are still quite some time away. What we have in the name of AI in everyday use right now are these smart features on our phones. But are these features really as path-breaking as they are hyped up to be? More importantly, can we even call these features AI?

In the smartphone ecosystem, AI is everywhere. The chipsets that power the smartphones now have dedicated co-processors to handle the AI workloads. The apps that we use are made smarter using AI. The user interfaces of phones come baked with AI. Every aspect of a phone now has AI infused in it. Internet giants like Google, Microsoft and Facebook have restructured themselves to be AI-first companies, while phone makers are using every platform available to make users aware of how smart their devices have become.

John McCarthy, an American computer scientist, coined the term Artificial Intelligence and was quite influential in the early development of the technology. The term first appeared in his proposal for a conference on the topic, held in 1956, to which he invited a group of researchers. The proposal said, “the study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” In general, the term AI can be easily defined. It’s when machines start thinking like humans. Achieving that is far more complex. We hardly have any knowledge of how we think consciously, let alone how to make machines think like us. The human brain is marvelously complex and mysterious. We can go to space and come back, understand the workings of the cosmos, realise that an apple falls from a tree because of gravity, and search for extraterrestrial life in outer space. However, no brain in the universe has understood how the brain can understand such things. Emulating that thought process is a whole different goal. So why then are these smart smartphone features hailed as artificial intelligence?

Do machines dream of electric sheep?

Google’s DeepMind has beaten the best Go player on the planet. IBM’s Watson has beaten the best trivia minds in a game of Jeopardy. But ask the Go-playing AI to play Monopoly and it would be just as dumb as a monkey trying to play it. Have a heart-to-heart conversation with Watson and you might not come out convinced. The catch here is that the most powerful AI programs in the world are experts only in their specific domains: the tasks they have been trained to do. And to train them, what’s needed is a huge pile of data. Both DeepMind and Watson rely heavily on raw data to learn the things they are good at. Data is the building block of AI. It’s what books are to humans seeking knowledge. It’s only thanks to the large amount of data flowing in through myriad connected devices, along with advances in deep learning algorithms, that such AI programs have been made possible in recent times.

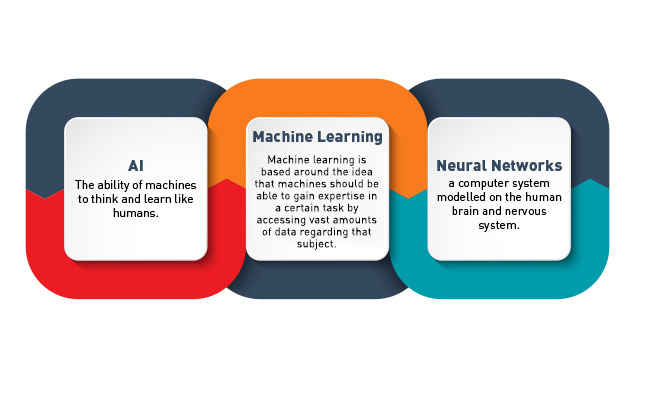

For now, the definition of AI is restricted to programs that use raw data to draw inferences. These inferences are the features that make our smartphones smarter. Machine learning is the key to making these programs work. It’s one of the tools that powers AI as we know it right now, along with computer vision and natural language processing. Google, Facebook and the like use these terms a lot. Machine learning is based around the idea that machines should be able to gain expertise in a certain task by accessing vast amounts of data regarding that subject. It’s similar to how we are taught to do things. The flight attendant performs a demo of emergency situations every time a flight is about to take off, so that we know what to do in case of an emergency. We process the visual data of the actions with our eyes. Similarly, AI uses computer vision to understand digital images. The visual data that we acquire is usually converted into an action, or at least forms the basis of taking an action. Likewise, a device empowered by ‘AI’ will unlock by scanning your face, looking for things it was taught to look for. Now, a flight attendant also speaks out instructions in the language you know while performing the actions, which we parse into actions when required. Similarly, an AI-powered phone uses natural language processing to understand you every time you say ‘ok Google’. Thus, in these specific areas where an AI program has been deployed, it behaves more or less like an intelligent being, processing the data it has available and performing actions based on decisions made from that data.

That’s where the similarities end. To the end user, it may seem like Google Assistant understands everything you’re saying. In reality, Google Assistant is simply picking out the keywords it has been trained to recognise, by listening to hours of real conversations, from the rest of the gibberish, and replying in a natural tone. The Google Photos app knows the faces in your photos because it’s been trained to recognise faces from thousands of photos available on the internet. And it’s not just Google apps and services. AI, as we know it right now, relies almost completely on data and techniques like machine learning, computer vision and natural language processing. These are the fundamentals, the building blocks that are used to build deep neural networks, which is another step towards imitating the human brain. The human brain is a vast criss-cross of neurons, which carry the impulses that trigger the responses from our hands and legs. Similarly, neural networks are algorithms or software that take in raw data, filter out the parts they don’t need and apply the parts they do, to perform actions like translating text or speech from one language to another, recognising handwriting, taking better photos and what not.

Deep neural networks require a large number of processes to be computed in parallel and arranged in tiers. The CPU on the SoC can run the processes, but that would mean allocating fewer resources to the usual tasks it performs. That is why Huawei, MediaTek and Apple have come out with chips that have a dedicated co-processor only for AI-centric tasks. Qualcomm has taken a different approach. It has designed its chipset in such a way that it can distribute the compute load between the CPU, GPU and the DSP. These AI-ready chipsets can crunch the computing load required to power the neural networks within the device itself. It’s called on-device AI. Chipsets that don’t have a dedicated processor either use the CPU (which slows the phone down) or perform the actions in the cloud and send the output back. This requires a lot of internet data and, consequently, a lot of battery as well.

AI as we know it

Machines thinking like humans are far off, but that doesn’t mean our devices aren’t getting smarter. The proliferation of raw data and the democratisation of machine learning have resulted in smarter smartphones. The mobile ecosystem is now consistently serviced by ancillary AI companies like Face++, Viv Labs, SenseTime and more that develop AI-powered applications used by smartphones. Google and Microsoft are two big players in this segment. Almost all of Google’s apps are infused with machine learning. Google Maps uses deep learning to form better Street View images by combining satellite data and photos clicked by people. Google Photos relies heavily on computer vision and deep neural networks to identify photos based on subjects and places. If you had gone hiking in the mountains, searching for mountains in Google Photos will bring those photos up. Faces that have a Google Plus account automatically get tagged by their names. Google Translate uses computer vision to recognise text through the camera and natural language processing to translate it. Then there is the Google Assistant which, in its current version, uses WaveNet, a machine learning-based speech synthesis technique, to sound more human. Microsoft has its own suite of AI-centric apps as well. Seeing AI is one of the newer ones; it tells you what’s around you using the camera, becoming a reliable companion for visually impaired users.

These apps and features are quickly becoming mainstream, indicating a shift in the R&D of smartphones. The hardware powering phones has plateaued in importance and AI has become the next frontier. The silicon used to power smartphones has also evolved to support on-device AI. Huawei’s Kirin 970, MediaTek’s Helio P60 and Qualcomm’s Snapdragon 845 and 710 are all optimised to handle AI workloads without consuming much power. Here’s how the emerging technology is changing the smartphone landscape:

Face Unlock

It’s the authentication method replacing good ol’ fingerprints. Phones like the Realme 1 and Oppo Find X don’t have a fingerprint reader and rely solely on facial recognition algorithms to unlock. Companies like Face++ and SenseTime supply this technology to most smartphone makers, while some, like Apple and Samsung, have developed their technology in-house. The face recognition algorithm scans for various unique facial features like the distance between the eyes, the shape of your face, facial hair density, the colour of the eyes and more, and stores them as information in the phone. Every time a face is held up against the front camera, the face unlock software scans for the same features and, if they match the data stored, it unlocks the phone. Most phones rely on two-dimensional scanning, which isn’t as secure as a 3D scan. The iPhone X uses its TrueDepth camera to make a 3D scan of your face, mapping every inch using an infra-red dot projector and storing the data within the phone itself. Samsung, on the other hand, uses an iris scanner to do the same.
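
The store-then-compare step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four-number feature vector, the `MATCH_THRESHOLD` value and the function names are hypothetical, and real face unlock systems compare much richer features learned by a neural network.

```python
import math

# Hypothetical stored features, each normalised to the 0..1 range:
# eye distance, face shape, facial hair density, eye colour.
ENROLLED_FACE = [0.42, 0.61, 0.18, 0.75]

# Assumed tolerance: a smaller value makes the match stricter.
MATCH_THRESHOLD = 0.1


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def should_unlock(candidate, enrolled=ENROLLED_FACE, threshold=MATCH_THRESHOLD):
    """Unlock only if the scanned face is close enough to the stored one."""
    return distance(candidate, enrolled) <= threshold


# Small differences, as you would get from lighting or angle.
same_person = [0.43, 0.60, 0.20, 0.74]
# Very different measurements.
stranger = [0.55, 0.40, 0.60, 0.30]

print(should_unlock(same_person))  # True
print(should_unlock(stranger))     # False
```

The threshold is the trade-off between convenience and security: loosen it and the phone unlocks more readily for you, but also for people who look like you.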

Voice Assistants

Unlike face unlock software that stores the data locally, voice assistants like Samsung’s Bixby, Google Assistant, Cortana and Siri send voice data over to their respective servers, where the voice input is semantically processed to understand what you’re asking for. Voice assistants use natural language processing, which converts speech into sounds and words. On the server, your voice input is broken down into sounds, which are then matched against a database of pronunciations to find which word matches each sound best. Once it figures out what you’re saying, the voice assistant looks for keywords like ‘weather’ and ‘traffic’ and performs the required actions. If you’ve noticed, voice assistants take a few seconds to answer back. This is the time it takes to process what you’ve said and come back with a suitable reply. Google and Amazon have outperformed all their rivals in this category. Both have confident voice assistants that have been deployed in people’s living rooms in the form of Google Home and Echo devices. You can almost have a breezy conversation with them. Samsung’s Bixby is contextually aware as well. It understands the scenario in which it is invoked and takes the appropriate action. This year, Google took voice assistants a notch higher by demonstrating its Duplex technology, where an AI was able to make a reservation for two at a restaurant without sounding robotic, while understanding all the context around making such a phone call.
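
The last step, spotting a keyword in the recognised text and mapping it to an action, can be sketched as below. The `INTENTS` table and the action names are hypothetical, and the sketch assumes the speech has already been converted to text; real assistants use trained language models rather than a fixed lookup table.

```python
# Hypothetical keyword-to-action table, for illustration only.
INTENTS = {
    "weather": "show_weather_forecast",
    "traffic": "show_traffic_report",
    "alarm": "set_alarm",
}


def pick_action(transcript):
    """Return the action for the first known keyword, or None if none is found."""
    for word in transcript.lower().split():
        # Drop punctuation stuck to the word, e.g. "traffic?" -> "traffic".
        word = word.strip(".,?!")
        if word in INTENTS:
            return INTENTS[word]
    return None


print(pick_action("What's the weather like today?"))  # show_weather_forecast
print(pick_action("How is the traffic?"))             # show_traffic_report
print(pick_action("Tell me a joke"))                  # None
```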

AI Camera

This is one of the most popular AI features seen in smartphones. AI-powered cameras rely on computational photography, where software processing rather than optics is used to make photographs better. Companies like Oppo, Vivo, Xiaomi and Honor are the biggest evangelists of AI cameras. But the best implementation once again comes from Google. The HDR+ feature in the Google Pixel phones relies on deep learning and neural networks. The camera app takes multiple underexposed shots, which preserves the highlights of the frame. The shadows are then colourised and denoised using simple mathematics. For Portrait Mode, Google uses a technology it calls “Semantic Image Segmentation.” It’s how Google’s Portrait Mode works without the need for a secondary sensor. Companies like Oppo, Vivo and Xiaomi pre-train their camera apps against hundreds of different scenarios: Sunset, Beach, Restaurant, Food, Pets, Portraits, Group Portraits, and what not. The camera app already knows what to look for when shooting a scene and applies the camera settings on the fly to improve the image.
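
The “simple mathematics” behind merging a burst of underexposed shots can be illustrated with a toy example. This is not Google’s HDR+ pipeline, which also aligns the frames and applies far more careful tone mapping; it is a minimal sketch that assumes the frames are already aligned, treats each frame as a short list of brightness values, and uses hypothetical function names and a made-up gain.

```python
def merge_frames(frames):
    """Average aligned frames pixel by pixel to reduce random noise."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


def brighten_shadows(image, gain=2.0, max_value=255):
    """Apply a uniform gain so dark areas become visible, clipping at white."""
    return [min(max_value, value * gain) for value in image]


# Three noisy, underexposed captures of the same three pixels.
burst = [
    [10, 52, 118],
    [14, 48, 122],
    [12, 50, 120],
]

merged = merge_frames(burst)
print(merged)                    # [12.0, 50.0, 120.0]
print(brighten_shadows(merged))  # [24.0, 100.0, 240.0]
```

Averaging is what makes the trick work: the noise differs from frame to frame and partly cancels out, so the merged image can be brightened without the grain that a single dark frame would show.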

Portrait Mode

The portrait mode in smartphones also relies on computational photography to improve portrait shots. Selfie-centric phones are a big draw these days, with the front camera becoming a primary feature in smartphones. The front camera is mainly used to take selfies and that’s where companies like Oppo, Vivo, Honor and Xiaomi deploy their AI capabilities. All four companies claim the camera can recognise hundreds of different features of a person’s face and apply the relevant settings to beautify them. The result is mostly a washed-out face that looks like a creepy doll, but in phones like the Redmi Note 5 Pro and the Mi 8, the results are pleasantly surprising. More often than not, the effects are applied in post-production after you have taken the selfie. The depth of field is calculated by taking multiple photos with the focus on different areas, which are then merged to bring out a shallow depth of field and the bokeh effect. The facial beautification is then done by scanning the contours of the face, facial hair density and the like.

Text Prediction and Smart replies

An early instance of AI in smartphones is the numerous keyboard apps like SwiftKey, Swype and the like that flooded app stores a couple of years back. These apps also use neural networks and natural language processing to predict the next word you are likely to type and to autocorrect and punctuate your sentences. An evolved form of that is the new ‘Smart Replies’ feature in Gmail and Android, which uses the same technology to predict what you’d reply to a conversation.
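
Next-word prediction can be illustrated with a much simpler stand-in for the neural networks these keyboards use: counting which word most often follows another in what you have typed before. The sketch below is that simplified counting approach, not how any particular keyboard app works, and the function names and sample history are made up.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count which word follows which across the training sentences."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it is unseen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical typing history.
history = [
    "see you soon",
    "see you tomorrow",
    "see you soon then",
    "thank you very much",
]
model = train_bigrams(history)

print(predict_next(model, "you"))    # soon
print(predict_next(model, "see"))    # you
print(predict_next(model, "zebra"))  # None
```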

AI-based smart interfaces

A relatively new application of AI has been to speed up the user interface in smartphones. Honor and Asus are two of the primary adopters of this feature. Both EMUI and ZenUI claim to use machine learning to learn from your activities: the app you’re likely to launch in the morning, the game you’re likely to play while commuting and the like. The interface then keeps those apps pre-loaded in memory so they launch faster. However, more often than not, companies are simply using probability and estimation algorithms to do this. Smart app recommendations are nothing new. Most phones keep a count of the apps you open frequently and optimise them so that they launch quicker. Features like AI-ringtone and AI-charging on the new Asus Zenfone 5z simply use the available sensors to gather data and apply rudimentary estimation programs to, respectively, keep the phone from ringing out loud in a noisy environment and decide when to trickle charge your phone.
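
Keeping a count of the apps you open at a given time of day takes very little code, which is why it is hard to call it intelligence. The sketch below is a hypothetical illustration of that counting approach; the `LaunchPredictor` class and the app names are made up and do not reflect how EMUI or ZenUI are actually implemented.

```python
from collections import Counter, defaultdict


class LaunchPredictor:
    """Counts app launches per hour of day and suggests apps to pre-load."""

    def __init__(self):
        # Maps hour of day (0-23) to a counter of app launches in that hour.
        self._counts = defaultdict(Counter)

    def record_launch(self, hour, app):
        self._counts[hour][app] += 1

    def apps_to_preload(self, hour, limit=2):
        """Return up to `limit` apps most often launched in this hour."""
        return [app for app, _ in self._counts[hour].most_common(limit)]


predictor = LaunchPredictor()
for app in ["news", "mail", "news", "game", "news", "mail"]:
    predictor.record_launch(8, app)   # morning habits
for app in ["game", "game"]:
    predictor.record_launch(18, app)  # evening commute

print(predictor.apps_to_preload(8))            # ['news', 'mail']
print(predictor.apps_to_preload(18, limit=1))  # ['game']
print(predictor.apps_to_preload(3))            # []
```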

There are more specific, narrow applications of machine learning and deep learning that are making smartphones wiser and ushering in an AI-centric future. But is there a cost to pay for it?

Privacy in the times of AI

The sudden burst of AI-centric features and devices has its origin in the massive amounts of data collected by internet giants like Google, Facebook, Amazon and the like. Images uploaded on the internet serve as the raw data for training image recognition programs, while videos and audio files serve as training material for voice assistants. Essentially, your personal data is used to build the features you have come to rely on. Naturally, there’s a rising concern about one’s digital privacy in the age of AI.

In a survey conducted by Genpact last year among 5,000 respondents, over 59 percent said more should be done to protect personal data.

“To encourage adoption, the key is to have visibility into AI decisions, and be able to track and explain the logic behind them. Companies need to break through the 'black box' to drive better insights for their business and give consumers the assurance they need,” said Sanjay Srivastava, Chief Digital Officer, Genpact.

There is a definite lack of transparency from companies when it comes to disclosing where and how data is collected for AI. According to an NPR report, researchers from two US universities conducted a study among 500 volunteers who were asked to sign up for a new social networking site. The site had a privacy policy which stated “payment would be satisfied by surrendering your first-born child.” 98 percent of the participants accepted the terms anyway. It’s clear nobody reads the terms of use when signing up for a new service.

That’s exactly how companies take your consent while collecting data. Google, Microsoft and Facebook have become more proactive in making the process more transparent after recent privacy breaches. But there’s a lot more that needs to be done.

Huawei went on record to state that it doesn’t collect any sort of private data to deploy its AI features. Instead, it uses publicly available images to train its algorithms.

Asus CEO Jerry Shen said, “data privacy is very, very important to us. To train our AI camera, we use data available in the open environment, not from private sources. We don’t buy other’s privacy.”

Google maintains a massive database of tagged images and videos that are used by AI companies to train their AI modules. There are other open-source platforms as well that make attributed and tagged data available for companies looking to dabble in AI. Data collection is an inevitable cost of an AI-ready future. Since AI isn’t going anywhere, there needs to be an active effort from the device manufacturers and software developers to educate users about AI and its hunger for raw data.

Prof. Mayank Vatsa, Head of the Infosys Centre for Artificial Intelligence at IIIT-Delhi, believes apps and features won’t work without users giving up their data.

“When you purchase a smartphone, there has to be acceptance of “terms and conditions” where some conditions says that your phone will record data and it will be used for quality improvement purposes. Similarly, different apps on your smartphones record and collect data – otherwise you will not be able to use the application (it will not install the application). Different applications collect data to continuously improve the features and enhance user experience. For example, if you book your ticket using your Gmail account, you would get a calendar notification about your travel; Google uses your data to enhance user experience and in this process, they collect your data,” explains Prof. Vatsa.

There need to be stringent privacy and data protection laws. The European Union set things rolling with the introduction of GDPR, which requires companies to explicitly inform users when they are collecting private data. In India, however, there is no such protection available for digital citizens yet, though India’s telecom regulator TRAI is working on a policy to better protect the personal data of Indian netizens.

AI Winter

Privacy aside, another aspect that plagues us is the chance of an AI winter setting in that could hinder the progress and adoption of AI. The features being touted as AI are simply scratching the surface. It’s just the tip of the iceberg. The AI features on a smartphone are insignificant in the larger scheme of things. Who would care about AI beautifying your selfies once the technology is successfully used to detect cancer? Or power autonomous vehicles? The hype being generated around such rudimentary applications may reduce the bigger impact of AI when it actually happens.

Something similar has happened before. In the early days of AI research in the 1950s and 1960s, there was a lot of optimism around the subject. Computers were learning to speak English, playing chess and proving logical theorems. Early AI researchers, including economist Herbert Simon, predicted that “machines will be capable, within 20 years, of doing any work a man can do.” Naturally, that was far from becoming a reality, and the excess optimism and a lack of understanding led funding to dry up in the 1970s. An “AI winter” set in.

Fast forward to the 21st century, and the success of Amazon Alexa and Google Home has given birth to hundreds of AI startups around the world. But as machine learning matures, the number of startups receiving funding is already plateauing in the United States. According to Crunchbase, funding of AI startups has already hit an S-shaped curve, showing signs of slowing down. Early-stage funding was high between 2014 and 2016, and since 2017, VCs have become more wary of funding AI startups. There seem to be signs of fatigue setting in, similar to the one that set in after the 1960s.

Machine learning and deep learning can only get us so far in the larger scheme of things. Artificial General Intelligence or AGI, which is what many are afraid will take away our jobs and enslave us, is far from being real. An IIT professor who has researched Artificial Intelligence and Computer Vision for the past 30 years believes the key to unlocking the next step lies in answering some key philosophical and epistemological questions.

“AI right now is just estimation theory. It's not intelligence in any way. We are not even going in that direction right now. In the bigger scheme of things regarding AI, we are exactly where we were during the times of Aristotle. We've made no progress. It will require philosophical breakthroughs of the kind we are not. Nobody knows how to make machines learn like we do. Machines can estimate a probability distribution better than humans, but can it feel jealousy and love? Not yet. You are the only creature in the cosmos that even understands there is a cosmos. And how we understand that? Nobody knows. What people talk about AI today are primarily engineering systems and the methods are no different from what we have been using. The techniques are more sophisticated, yes, but it's nothing new,” he explained, asking not to be named.

Technology is always a work in progress. AI has only started to catch on and become mainstream. The hype, however, will make you believe we have already achieved the pinnacle of AI. Don't fall for it. Artificial Intelligence, in its truest sense, is still a pipe dream and there will be a long wait for us to experience it, fear of losing jobs and getting enslaved by a Skynet-like entity included. For now, though, smart cameras and voice assistants will have to suffice.