Cybersecurity in Age of AI: Black Hat 2024’s top 3 LLM security risks

Last week, at Black Hat 2024, one of the major cybersecurity events in the world, a few disconcerting revelations about the potential unsecure nature of GenAI and LLM implementations came to the fore – and how they can be exploited by hackers and malicious actors to steal user data and critical business intelligence. Here are the top highlights:

Survey

SurveyMicrosoft Copilot AI’s potential data leak

One of the most shocking revelations to emerge from Black Hat 2024 was the vulnerability of Microsoft’s AI assistant, Copilot. Security researcher Michael Bargury, CTO, Zenity, and his colleague Avishai Efrat, exposed critical security loopholes in Microsoft’s Copilot Studio offering that could allow malicious actors to hack into corporate networks and access sensitive data.

Also read: CrowdStrike BSOD error: Risking future of AI in cybersecurity?

Microsoft unveiled Copilot Studio back in February 2023, giving businesses the power to create their own custom AI assistants. This tool empowers them to teach these assistants how to handle specific business processes using internal data within Microsoft’s suite of applications. Copilot understands everything from typed instructions to images and even spoken commands, and it can be deployed directly onto new Windows 11 laptops and AI PCs natively. Bargury demonstrated that Copilot Studio is rife with security risks. By exploiting insecure default settings, plugins with broad permissions, and fundamental design flaws, attackers could potentially access and steal sensitive enterprise data, according to Bargury. The researcher also warned that the ease with which these vulnerabilities can be exploited makes data leakage highly probable.

Furthermore, Bargury unveiled a tool, Copilot Hunter, which is capable of scanning for publicly accessible Copilot instances and extracting valuable information. Needless to say this discovery highlights the urgent need for organisations to prioritise the security of their AI systems and implement safeguards to protect sensitive data. Incidentally, Johann Rehberger, popular cybersecurity researcher, has recently outlined a blog post on preventing data leaks in Copilot Studio.

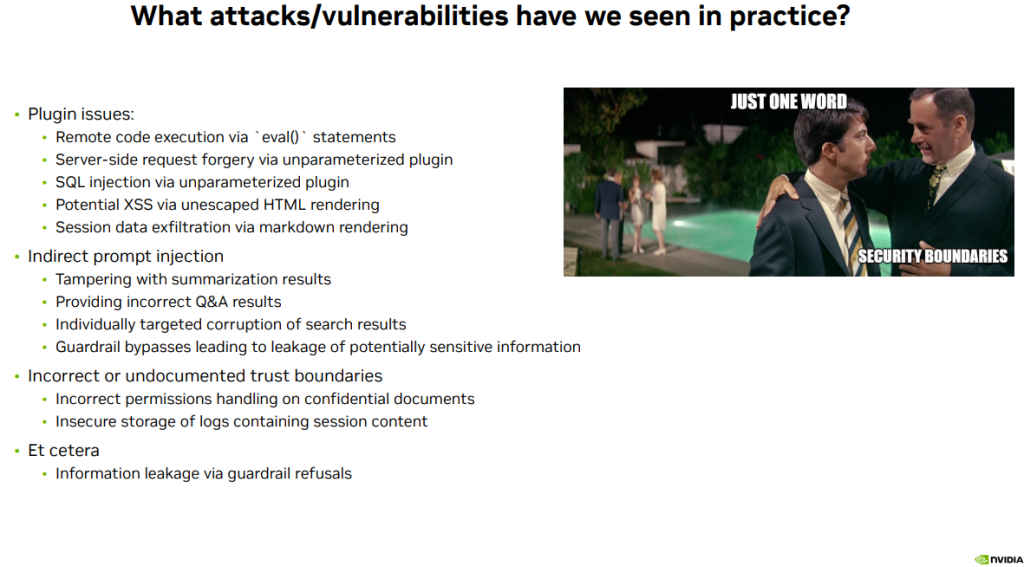

NVIDIA AI Red Team on indirect prompt injection

The NVIDIA AI Red Team also highlighted critical vulnerabilities in large language models (LLMs) at Black Hat 2024. Among the most concerning are indirect prompt injections, where an LLM responds to manipulated input from a third-party source, and security issues related to plugins, which could be exploited to gain unauthorised access to the LLM.

In his presentation, Richard Harang, Principal Security Architect (AI/ML), NVIDIA, further highlighted different types of prompt injections, including direct and second-order (indirect) injections in LLMs, and their cybersecurity implications. The indirect variant, for example, can allow attackers to alter the behaviour of an LLM by inserting malicious instructions into data sources that the model later processes, potentially leading to misinformation or data leakage, according to Harang.

Also read: McAfee’s Pratim Mukherjee on fighting deepfake AI scams in 2024 and beyond

To delve deeper into these topics, NVIDIA has detailed these issues in several articles on its developer blogs and forum. They describe how attackers can exploit vulnerabilities in LLM plugins, such as those in the LangChain library – a popular open-source framework for building apps and plugins on LLMs – to manipulate LLM responses, leading to potentially severe consequences like remote code execution or SQL injection.

Harang’s presentation underlined an important nature of AI security with respect to LLMs. That LLMs don’t reason, they make statistical predictions on what words are most likely to follow some other words, in which case “Hallucinations and prompt injection naturally follow,” he emphasised. Food for thought.

Deep backdoors in AI agents

Another AI advancement taken to task at Black Hat 2024 was Deep Reinforcement Learning (DRL), a powerful technique for training AI agents to make decisions, which has seen rapid adoption across various industries in recent months. However, this technology is not without its risks, according to a recent study from The Alan Turing Institute which highlights a critical cybersecurity vulnerability: backdoors.

Unlike traditional software backdoors that exploit code vulnerabilities, these backdoors are embedded within the neural network itself, according to a group of researchers presenting their findings at Black Hat 2024. According to the study, the backdoors act as malicious triggers, designed to blend seamlessly into normal data, and can manipulate an agent’s behaviour when activated.

Also read: AI impact on cybersecurity: The good, bad and ugly

Imagine a sophisticated LLM or GenAI model designed to provide accurate and unbiased information. It’s trained on vast amounts of text data to generate human-quality text and answer your questions in an informative way. But what if this model has been secretly manipulated to produce harmful or misleading information under specific conditions? This is essentially a backdoor in an LLM. It’s like a hidden trigger that, when activated, causes the model to deviate from its intended behaviour. For example, a language model might be programmed to generate hateful content when presented with a seemingly innocuous but specific phrase. This backdoor could be incredibly difficult to detect, as it would blend seamlessly into the model’s normal output.

Existing defence mechanisms often prove ineffective against these sophisticated backdoors in Deep Reinforcement Learning. Hence, the researchers propose a novel approach to detect these malicious patterns by analysing the neural network’s internal activations during runtime. Through experiments, they demonstrated the potential of this method in identifying hidden backdoors. This research underscores the urgent need for robust security measures to protect DRL systems from malicious attacks.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant. View Full Profile