ByteDance’s OmniHuman-1 AI transforms photos and audio into lifelike human videos

ByteDance, the parent company of TikTok, has introduced OmniHuman-1, an artificial intelligence model designed to generate hyper-realistic videos from minimal input, such as a single image and an audio clip.

The tool aims to transform industries like digital media and entertainment by producing lifelike human movements, synchronised speech, and realistic gestures. Unlike earlier AI systems that struggled with fluid motion replication, OmniHuman-1 integrates advanced machine-learning techniques to improve lip-syncing accuracy and facial expressions.

How is it different?

| Feature | OmniHuman-1 | Competitors (Sora, Veo, Synthesia) |
| --- | --- | --- |
| Input flexibility | Audio, video, text, or pose signals | Limited to specific modalities (e.g., text) |
| Body motion | Full-body gestures and gait | Focus on facial/upper-body animations |
| Aspect ratios | Portrait and widescreen | Fixed formats |
| Training data | 18,700 hours of mixed-condition data | Smaller, filtered datasets |

OmniHuman-1 distinguishes itself from other AI video generation tools through its focus on hyper-realistic human animation and its integration of multimodal inputs. Compared with platforms like Synthesia or Runway Gen-3, which are widely used for creating professional videos or transforming inputs into stylised outputs, OmniHuman-1 produces notably lifelike human movements, at least in ByteDance's published demonstrations.


For instance, Synthesia specialises in creating studio-quality videos using AI avatars that narrate scripts in over 140 languages. While it is ideal for corporate training or marketing videos, it lacks the advanced motion synthesis capabilities of OmniHuman-1. Similarly, Runway Gen-3 offers fast video generation and supports text-to-video transformations, but it focuses on creative storytelling and cinematic effects rather than on replicating natural human behaviour.

Other tools like Kling and Veed provide features such as motion control and text-to-video generation but are limited in their ability to produce highly realistic human animations. Kling’s strength lies in its precision for storytelling and motion control; however, its slower processing times can be a drawback.

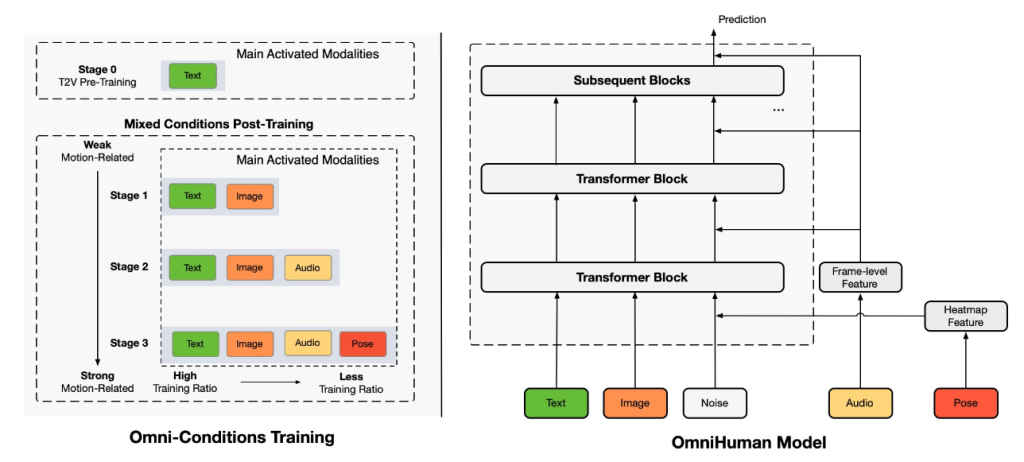

The training process

OmniHuman-1’s development involved training on 18,700 hours of video footage, according to ByteDance researchers. The process includes compressing movement data from inputs like images, audio, and text descriptions, followed by refining outputs against real-world footage.


While ByteDance has not publicly released full details of the dataset, the methodology aligns with common practices in AI video synthesis. Early demonstrations show the model animating stylised avatars and adapting to different aspect ratios (e.g., portrait or widescreen formats).

Multimodal input integration

The tool processes text prompts, audio signals, and motion cues to create animations. For example, it can synchronise body movements with speech, a feature demonstrated in reference videos.


However, claims about replicating intricate dance routines remain unverified, as no public third-party tests have been conducted. ByteDance emphasises the model’s adaptability for industries like animation and virtual influencer creation, though practical applications are still in development.

Ethical considerations

Viral demonstrations, such as a digital Albert Einstein delivering a speech, highlight OmniHuman-1’s potential but raise ethical concerns about deepfake misuse. While ByteDance states it is refining the technology for ethical deployment, independent experts note the lack of transparent safeguards. The tool is not yet publicly available, and challenges like handling low-quality input data remain unresolved.

Sagar Sharma

A software engineer who happens to love testing computers, which sometimes crash. While his crashed system is being revived, you can find him reading literature or manga, or watering plants.