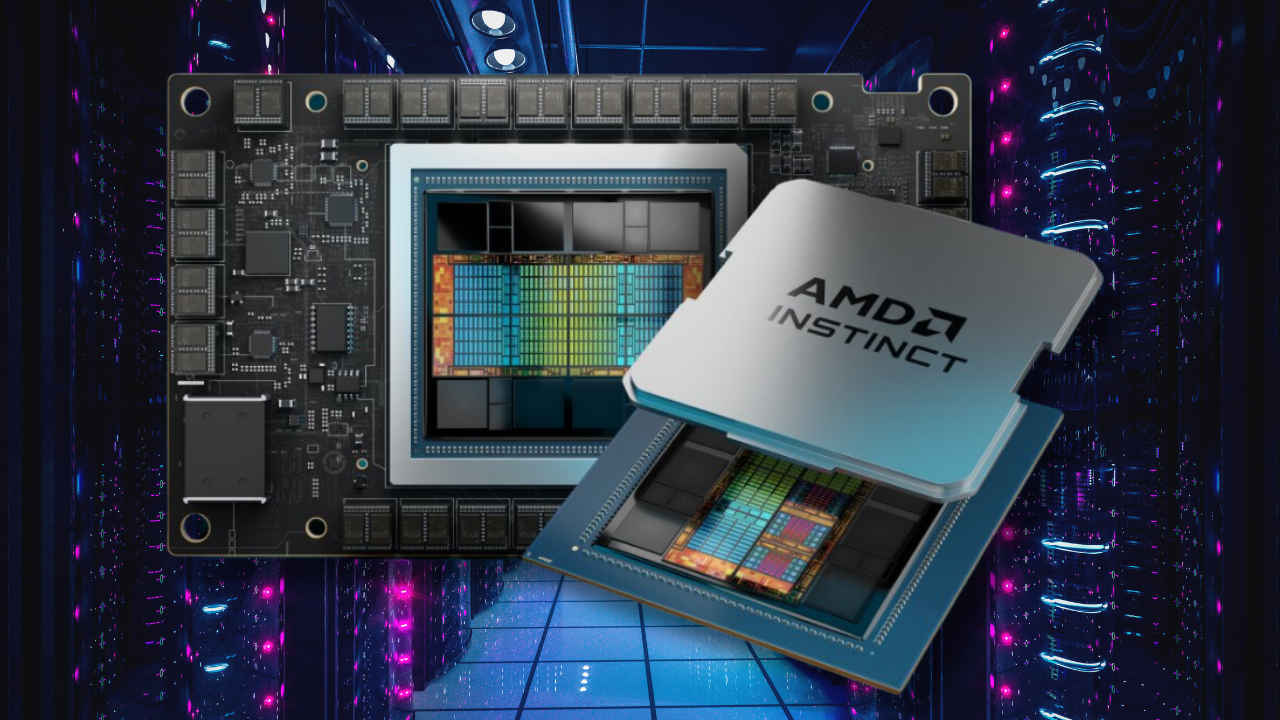

AMD unveils Instinct MI325X AI chip, challenging Intel and NVIDIA

Aiming to strengthen its position in the AI and data center markets, AMD has unveiled its latest AI accelerator, the Instinct MI325X, designed to challenge the dominance of NVIDIA and Intel. The new accelerator promises enhanced performance and energy efficiency, reinforcing AMD’s commitment to delivering cutting-edge AI solutions and setting new standards for generative AI models and data centers.


This significant product launch comes at a time when competition in the semiconductor industry is intensifying, with rival companies making aggressive moves in the AI space. AMD’s latest offering aims to give customers more choices and set new performance benchmarks for AI infrastructure.

AMD Instinct MI325X: What’s new

The Instinct MI325X is built on the AMD CDNA 3 architecture. AMD claims it is a big step up from its predecessors, with performance and efficiency gains for demanding AI tasks such as foundation model training, fine-tuning, and inferencing. The accelerator carries 256 gigabytes (GB) of HBM3E memory with 6.0 terabytes per second (TB/s) of bandwidth. According to AMD, that is 1.8x the capacity and 1.3x the bandwidth of NVIDIA’s H200.
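The capacity and bandwidth multiples are simple ratios, and they can be checked in a few lines. This is a rough sketch: the MI325X figures come from the paragraph above, while the H200 figures (141GB and 4.8TB/s) are an assumption taken from NVIDIA’s public spec sheet and do not appear in AMD’s announcement as quoted here.

```python
# MI325X figures are quoted in the article.
MI325X = {"memory_gb": 256, "bandwidth_tbs": 6.0}
# Assumption: H200 figures from NVIDIA's public spec sheet, not from the article.
H200 = {"memory_gb": 141, "bandwidth_tbs": 4.8}


def ratio(metric):
    """Return how many times larger the MI325X figure is than the H200 figure."""
    return MI325X[metric] / H200[metric]


capacity_ratio = ratio("memory_gb")
bandwidth_ratio = ratio("bandwidth_tbs")

print(f"capacity: {capacity_ratio:.2f}x, bandwidth: {bandwidth_ratio:.2f}x")
# prints: capacity: 1.82x, bandwidth: 1.25x
```

Under these assumed H200 numbers, the quoted 1.8x and 1.3x are the same ratios rounded to one decimal place.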

AMD also says the MI325X offers 1.3x the peak theoretical FP16 and FP8 compute performance of the H200. In the company’s own inference benchmarks, that translates to up to 1.3x the performance on Mistral 7B at FP16, 1.2x on Llama 3.1 70B at FP8, and 1.4x on Mixtral 8x7B at FP16 compared to the H200.

AMD says the Instinct MI325X is designed to handle compute-intensive workloads more efficiently and to meet the performance demands of AI from edge to data center and cloud environments. With its high memory capacity and bandwidth, it is particularly well-suited to large-scale AI models whose weights would otherwise have to be split across several accelerators.
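To see why memory capacity matters for large models, a back-of-the-envelope estimate helps. The sketch below counts model weights only, at 2 bytes per parameter for FP16 and 1 byte for FP8, and ignores the KV cache, activations, and runtime overhead, so real deployments need more headroom. The parameter counts are nominal sizes chosen for illustration, and the helper names are hypothetical.

```python
import math

MI325X_MEMORY_GB = 256  # per-accelerator HBM3E capacity quoted in the article


def weights_gb(params_billion, bytes_per_param):
    """Approximate weight storage in GB (1e9 params x bytes, divided by 1e9 bytes per GB)."""
    return params_billion * bytes_per_param


def accelerators_needed(params_billion, bytes_per_param):
    """Minimum number of 256GB accelerators needed to hold the weights alone."""
    return math.ceil(weights_gb(params_billion, bytes_per_param) / MI325X_MEMORY_GB)


# Nominal model sizes in billions of parameters (illustrative assumptions).
for name, params in [("7B", 7), ("70B", 70), ("405B", 405)]:
    for precision, nbytes in [("FP16", 2), ("FP8", 1)]:
        size = weights_gb(params, nbytes)
        count = accelerators_needed(params, nbytes)
        print(f"{name} @ {precision}: ~{size} GB of weights, at least {count} accelerator(s)")
```

By this estimate, a 70B-parameter model at FP16 needs about 140GB for its weights and fits on a single 256GB accelerator, while a 405B-parameter model at FP16 needs about 810GB and would span at least four.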

Set to begin production shipments in Q4 2024, the AMD Instinct MI325X accelerators are expected to have widespread system availability from a broad range of platform providers, including Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro, starting in Q1 2025.


Continuing its commitment to an annual roadmap cadence, AMD also previewed the next-generation Instinct MI350 series accelerators. Based on the upcoming AMD CDNA 4 architecture, the MI350 series is, according to AMD, designed to deliver a 35x improvement in inference performance compared to CDNA 3-based accelerators. It will carry up to 288GB of HBM3E memory per accelerator and is on track to be available in the second half of 2025.

Impact on AI applications in the Cloud

The introduction of the AMD Instinct MI325X accelerator is poised to significantly impact AI applications in the cloud by enabling faster processing, improved efficiency, and the ability to handle more complex models. There are several ways this new AMD chip can make a difference:

Cloud-based AI services like virtual assistants, chatbots, and language translation tools rely heavily on natural language processing (NLP) models, which require substantial computational power. The MI325X’s increased memory capacity and bandwidth allow for more efficient training and inference of large language models like OpenAI’s GPT series or Meta’s Llama 3.1 and 3.2. In practice, that means providers can serve larger models with lower latency, so virtual assistants can respond to user queries more swiftly. Similarly, cloud-based surveillance systems stand to benefit from the MI325X’s compute performance when processing video feeds in real time.


Data centers consume significant amounts of energy, and AMD says the MI325X’s energy-efficient design helps reduce this consumption. On the software side, the MI325X supports popular AI frameworks like PyTorch and integrates with AMD’s ROCm open software stack, making it easier for developers to deploy and scale AI applications in the cloud. This could allow startups and tech companies to bring AI products to market faster, using existing tools and models without extensive reconfiguration.
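As an illustration of what reusing existing tools looks like, ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface that CUDA builds use, so device-selection code typically does not need to change. The sketch below is a minimal check, not AMD’s documented procedure; the function name `describe_accelerator` is hypothetical, and the output depends entirely on the machine it runs on.

```python
def describe_accelerator():
    """Report which GPU backend, if any, the installed PyTorch build can see."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds of PyTorch reuse the torch.cuda namespace for AMD GPUs.
    if not torch.cuda.is_available():
        return "no GPU visible; falling back to CPU"

    # torch.version.hip is set on ROCm builds and is None on CUDA builds.
    backend = "ROCm/HIP" if getattr(torch.version, "hip", None) else "CUDA"
    return f"{backend}: {torch.cuda.get_device_name(0)}"


print(describe_accelerator())
```

Code that selects its device with `torch.device("cuda")` runs on either vendor’s hardware under this arrangement, which is the kind of portability the paragraph above refers to.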

AMD vs Intel vs NVIDIA: Who’s winning the AI race?

To put AMD’s advancements into perspective, the data center market is currently dominated by NVIDIA and Intel. NVIDIA’s GPUs are widely used for AI workloads, and Intel’s Xeon processors power a significant portion of data centers. However, with the introduction of the Instinct MI325X accelerator, AMD is positioning itself as a formidable contender in the AI space.

The MI325X is designed to compete directly with NVIDIA’s H200 and Intel’s Gaudi 3 AI accelerators. By offering higher memory capacity, bandwidth, and compute performance, AMD aims to capture a larger share of the AI accelerator market. For example, the MI325X’s 256GB of HBM3E memory and 6.0TB/s of bandwidth are an advantage for large-scale AI models that require substantial memory and data throughput.

Furthermore, the upcoming MI350 series, with its projected 35x improvement in inference performance, signals AMD’s commitment to leading in AI hardware innovation. This aggressive roadmap underscores AMD’s dedication to meeting the evolving needs of AI workloads and staying ahead of competitors.

Amid intense competition and rapid advancements in AI technology, it’s clear AMD is making bold moves to assert its position in the industry. By delivering faster products like the Instinct MI325X accelerator, AMD is addressing the growing demands of AI workloads and offering customers alternative solutions to those provided by NVIDIA and Intel. As businesses increasingly seek powerful and efficient AI infrastructure, AMD’s comprehensive suite of products positions the company as a key player in shaping the future of AI and data center technologies.


Team Digit

Team Digit is made up of some of the most experienced and geekiest technology editors in India!