AI: One step closer to world domination

If 2016 was the year of VR, then 2017 is the year of AI

Movies primarily picture Artificial Intelligence (AI) as an evil entity that threatens all of mankind, but that future seems a long way from what we see today. Current AI systems are simply decision makers, very good decision makers, with millions upon millions of data inputs factored in to build the elaborate models that form their brains. And with hardware pushing the boundaries, we’re enabling these AIs to become smarter at an unprecedented pace. The growth of AI has been so rapid that some of the smartest minds on the planet have rung the alarm bells. So how do these simple decision-making AIs pose such a threat to mankind? To understand that, we need to look at the state of AI so far.

A primer on Machine Learning

Machine Learning has been around for ages, and in the simplest sense, it is about building machines that can learn without being explicitly programmed. It boils down to creating a set of conditions for pattern recognition and then feeding the computer a lot of data, which it uses to hone those preset conditions and increase its accuracy. The data sets are also monitored during the learning phase to ensure that the system learns the right kind of conditions. This is the training phase, and the data fed to the system is handpicked. Once the training phase is over, we provide a few fresh data sets to see how well the system has trained itself; this is the validation phase. Based on the validation results, the system might need to be tweaked again to improve accuracy. Lastly, we have testing, wherein real-world data sets are given to see if the classification performed is up to human standards. The system is then put into production.
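To make the three phases concrete, here is a minimal Python sketch under deliberately toy assumptions: a made-up data set with one numeric feature, a hidden rule (the label is 1 when x > 5), and a “model” that is nothing more than a learned threshold. The function names (split, fit_threshold, accuracy) are illustrative, not from any library. Training learns the threshold, validation picks the best tweak, and the test set is scored only once at the end.

```python
import random

def split(data, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle labelled examples and split them into training, validation and test sets."""
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

def fit_threshold(train):
    """Training: learn a decision threshold halfway between the two class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(threshold, examples):
    """Fraction of examples where 'x > threshold' matches the true label."""
    return sum((x > threshold) == (y == 1) for x, y in examples) / len(examples)

# Toy data (hypothetical): one feature per example; the hidden rule is "label is 1 when x > 5".
rng = random.Random(42)
data = [(x, int(x > 5)) for x in (rng.uniform(0, 10) for _ in range(200))]

train, val, test = split(data)

# Training phase.
threshold = fit_threshold(train)

# Validation phase: tweak the model (here, nudge the threshold) and keep
# whichever setting scores best on the validation set.
shifts = [-1.0, -0.5, 0.0, 0.5, 1.0]
best_shift = max(shifts, key=lambda s: accuracy(threshold + s, val))
final_threshold = threshold + best_shift

# Testing phase: score once on data the model has never seen.
print(f"test accuracy: {accuracy(final_threshold, test):.2f}")
```

Keeping the test set untouched until the very end is the point of the exercise: if it were also used for tweaking, the final score would overstate how well the system handles unseen, real-world data.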

The Revolution. Credit: Fonytas

A relatable example is recommender systems, which can be found on e-commerce websites and on multimedia portals like Netflix. Suppose you show interest in certain products or movies by watching them or by regularly browsing sections related to them. Soon enough, every movie or product in the recommended section piques your interest. That’s a very benign implementation of machine learning.
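One simple way such recommendations can work is item-based collaborative filtering: two titles count as similar if largely the same people watched them, and you are shown unseen titles that are similar to what you already watched. The sketch below uses a tiny, invented viewing history and cosine similarity; it illustrates the idea only and is not how Netflix or any particular store actually builds its recommendations.

```python
from math import sqrt

# Hypothetical viewing history: user -> set of titles watched.
history = {
    "asha": {"Inception", "Interstellar", "The Matrix"},
    "ben": {"Inception", "Interstellar", "Arrival"},
    "chen": {"The Matrix", "John Wick"},
    "dev": {"Inception", "Arrival"},
}

def similarity(a, b):
    """Cosine similarity between two titles, based on which users watched each."""
    fans_a = {user for user, seen in history.items() if a in seen}
    fans_b = {user for user, seen in history.items() if b in seen}
    if not fans_a or not fans_b:
        return 0.0
    return len(fans_a & fans_b) / sqrt(len(fans_a) * len(fans_b))

def recommend(user, k=2):
    """Rank titles the user hasn't seen by total similarity to titles they have seen."""
    seen = history[user]
    unseen = {title for titles in history.values() for title in titles} - seen
    scores = {title: sum(similarity(title, s) for s in seen) for title in unseen}
    # Highest score first; ties broken alphabetically so the output is deterministic.
    return sorted(scores, key=lambda title: (-scores[title], title))[:k]

print(recommend("dev"))  # ['Interstellar', 'The Matrix']
```

Interstellar wins for dev because the people who watched Inception (which dev watched) mostly watched Interstellar too; John Wick scores zero because nobody who shares dev’s tastes has watched it.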

Milestones in 2016

They can beat you at your own game

2016 began with a flurry of news articles lauding the numerous victories of AlphaGo, a Google DeepMind computer program that plays the board game Go. It started by taking baby steps, learning the game and its different strategies, and then moved on to beating a human professional, European champion Fan Hui, without handicaps in October 2015. Five months down the line, in March 2016, it won a five-game match against Lee Sedol, four games to one. For those coming across this name for the first time, Lee Sedol is a 9-dan professional player, 9 dan being the highest rank in professional Go. In just five months, using current-gen technology, an AI had gone from beating a professional to beating one of the very best players in the world.

The match: AlphaGo vs Lee Sedol

They can speak your language

Another key development was Google Neural Machine Translation, which now powers Google’s Translate service. With a little help from the Google Brain team, the folks behind the project were able to reduce translation errors in complex languages like Mandarin by 60 percent compared to their previous phrase-based system. This was in September 2016, and just two months later, the researchers announced work on Zero-Shot Translation. Until then, Google Translate’s 103 languages had relied on separate translation systems for each language pair. With Zero-Shot Translation, they aim to do away with all of them and replace them with a single multilingual system, one that can even translate between language pairs it was never explicitly trained on (hence “zero-shot”). They’ve already put the system into practice for a few languages and are scaling it up slowly.
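Google’s multilingual research describes training one shared model on many language pairs by adding an artificial token to the start of each input that names the desired output language. The sketch below shows only that data-preparation idea; the “<2xx>” token spelling, the tiny romanised corpus and the helper names (tag, is_zero_shot) are illustrative assumptions, and no actual translation model is included.

```python
# Hypothetical parallel data: (source language, target language, source text, target text).
# Romanised placeholders stand in for real Japanese and Korean text.
corpus = [
    ("en", "ja", "hello", "konnichiwa"),
    ("ja", "en", "arigatou", "thank you"),
    ("en", "ko", "hello", "annyeonghaseyo"),
    ("ko", "en", "gamsahamnida", "thank you"),
]

def tag(source_text, target_lang):
    """Prepend an artificial token telling the shared model which language to produce."""
    return f"<2{target_lang}> {source_text}"

# Every language pair is pooled into ONE training set for ONE model.
training_pairs = [(tag(src, tgt_lang), tgt) for _, tgt_lang, src, tgt in corpus]

# Directions the model has actually seen during training.
seen_directions = {(s, t) for s, t, _, _ in corpus}

def is_zero_shot(src_lang, tgt_lang):
    """True if the model would be asked to translate in a direction it never trained on."""
    return (src_lang, tgt_lang) not in seen_directions

print(training_pairs[0])         # ('<2ja> hello', 'konnichiwa')
print(is_zero_shot("ja", "ko"))  # True: Japanese -> Korean never appeared in training
print(tag("arigatou", "ko"))     # <2ko> arigatou  (same input format, unseen direction)
```

Because a single model learns from every pair at once, a Japanese-to-Korean request uses the same input format and the same model even though no Japanese-Korean examples were ever seen, which is exactly the “zero-shot” case.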

Google Translate can instantly translate words and phrases into over 100 different languages

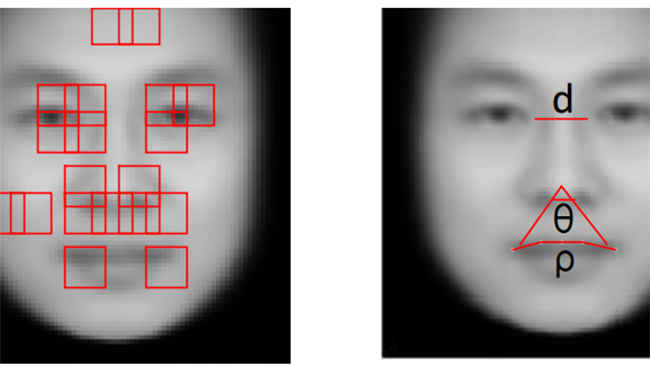

They can single you out

We’ve covered games and languages, both key domains for ML. Another such key domain is visual recognition. Industrial applications such as security, where hundreds of cameras are tied into one computer that analyses each video feed frame by frame, have been around for a while. These systems are now learning human behavioural traits in order to single individuals out from the crowd. There’s even a startup called AllGoVision that has already put into production a system that can detect loitering, tailgating, suspicious objects and more.
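AllGoVision’s actual method isn’t described in the article, but a loitering rule can be sketched simply, assuming an upstream person tracker (not shown) already turns each video feed into time-stamped positions per person. The Zone class, the is_loitering function and the 60-second limit are hypothetical; the rule just flags anyone who stays inside a watched area longer than a set time.

```python
from dataclasses import dataclass

@dataclass
class Zone:
    """Axis-aligned rectangle in image coordinates (a hypothetical monitored area)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1

def is_loitering(track, zone: Zone, max_seconds: float) -> bool:
    """track: time-ordered (timestamp_s, x, y) positions of one person from a tracker.

    Returns True if the person stays inside the zone continuously for longer than max_seconds.
    """
    entered_at = None
    for t, x, y in track:
        if zone.contains(x, y):
            if entered_at is None:
                entered_at = t          # just stepped into the zone: start the clock
            if t - entered_at > max_seconds:
                return True
        else:
            entered_at = None           # left the zone: reset the clock
    return False

entrance = Zone(100, 100, 200, 200)
lingerer = [(t, 150, 150) for t in range(90)]        # one position per second for 90 s
passer_by = [(0, 50, 50), (1, 150, 150), (2, 250, 250)]
print(is_loitering(lingerer, entrance, max_seconds=60))   # True
print(is_loitering(passer_by, entrance, max_seconds=60))  # False
```

A learned system would replace the fixed zone and time limit with behaviour patterns inferred from data, but the output is the same kind of per-person alert.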

Using facial recognition, computers can now understand human behaviours

To take things to the extreme, scientists have developed a neural network that, they claim, can identify criminals just by looking at photos. Researchers at Shanghai Jiao Tong University fed 1,586 photographs of men, half of whom were criminals, to a system that then learned to identify criminals with 89.5 percent accuracy. No background data was provided whatsoever, so the only input was their faces.

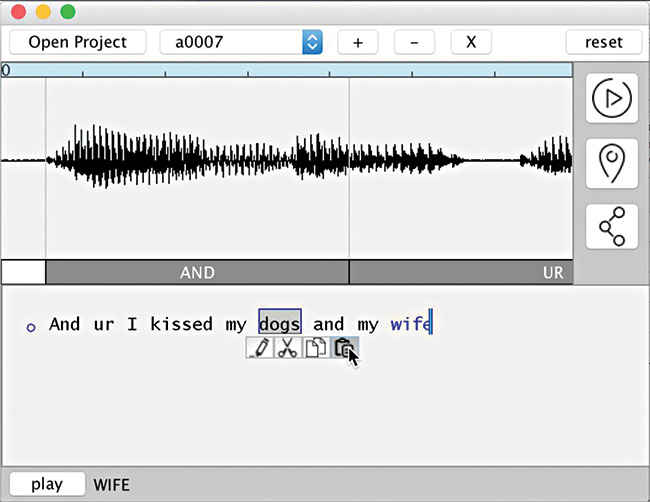

They can read your lips

The folks at the University of Oxford seem to have grown so tired of BBC anchors’ voices that they came up with the ‘Watch, Listen, Attend and Spell’ neural model, trained on BBC television footage. Whether it’s given plain audio, video or both, this machine learning model can transcribe speech into characters, and working from video alone it is more accurate than professional lip-readers. The system was so good that it surpassed all previous lip-reading benchmarks on the same data sets.
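Transcription accuracy of this kind is commonly reported as a character error rate (CER): the number of character edits (insertions, deletions, substitutions) needed to turn the model’s output into the correct transcript, divided by the transcript’s length. A minimal Python sketch of that metric follows; the sample sentences are invented.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance: minimum insertions, deletions and substitutions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution (free if equal)
        prev = cur
    return prev[-1]

def cer(reference, hypothesis):
    """Character error rate: edits needed, relative to the length of the true transcript."""
    return edit_distance(reference, hypothesis) / len(reference)

reference = "good evening"
hypothesis = "good evenin"   # hypothetical model output with one missing character
print(f"CER = {cer(reference, hypothesis):.3f}")  # CER = 0.083
```

Passing word lists instead of strings, e.g. edit_distance(reference.split(), hypothesis.split()), gives the word-level equivalent, since the function works on any sequences.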

Lip reading accuracy has improved

They can speak for you

Google’s DeepMind has been busy too: it recently published a paper introducing WaveNet, a deep neural network for generating raw audio waveforms. Used for text-to-speech, it no longer sounds robotic. Concatenative systems, the ones you’re all quite familiar with, be it announcements at airports or your operating system’s friendly voice, stitch together snippets of recorded audio to form words. These sound very monotonous, but when you get a neural network to analyse recordings of real speech, it can generate the same sentence one audio sample at a time, at least 16,000 samples per second, and at a much higher resolution.

Imagine current-gen systems as having an individual audio file for each letter. Based on the letter’s position in a word or sentence, a particular audio file would be played. This is the equivalent of a highly pixelated image. Use more audio files per letter and you’d have tonality. Now go one level deeper, with even more files per sound. You now have WaveNet, which speaks very much like a human being.
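Under the hood, the WaveNet paper builds each new audio sample from the samples before it, using stacks of “dilated causal convolutions” whose dilation doubles from layer to layer (1, 2, 4 and so on up to 512). The toy Python sketch below shows why that doubling matters. It uses fixed weights of 1 and leaves out everything WaveNet actually learns (trained weights, gated activations, residual connections), so treat it as an illustration of the receptive-field idea only.

```python
def causal_dilated_conv(x, weights, dilation):
    """1-D causal convolution: out[t] depends only on x[t], x[t - d], x[t - 2d], ...
    Samples before the start of the signal are treated as zero."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            idx = t - i * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size, dilations):
    """How many samples (the current one plus past ones) a stack of layers can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

# Feed a single click (an impulse at t = 0) through four layers with dilations 1, 2, 4, 8.
signal = [1.0] + [0.0] * 16
for d in (1, 2, 4, 8):
    signal = causal_dilated_conv(signal, weights=[1.0, 1.0], dilation=d)

print(signal)                                           # 1.0 for t = 0..15, then 0.0 at t = 16
print(receptive_field(2, [1, 2, 4, 8]))                 # 16
print(receptive_field(2, [2 ** i for i in range(10)]))  # 1024 (dilations 1 to 512)
```

Four small layers already let each output “hear” 16 past samples, and ten layers reach 1,024; with plain (undilated) layers, reaching that far back would need around a thousand of them.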

One thing politicians will be blaming each time they’re “misquoted” is Adobe’s VoCo. Why? Well, it’s Photoshop … for audio. Just like WaveNet, it synthesises speech, but it can also take an audio stream from a person, transcribe it, let you edit the sentence and play it back … in their own voice! This technology was expected, but no one expected it to materialise this quickly. While it does raise several ethical concerns, including whether digital media could still be used as evidence in the courtroom, it sure makes for a really cool piece of technology. The advent of photo manipulation tools raised similar concerns, but that hasn’t screwed up the legal system, and we don’t expect this to either. Adobe itself has said that it is working on ways to detect use of its software, so if any fraudulent evidence is presented, it will be possible to tell whether it was made using VoCo.

Adobe's VoCo is Photoshop for audio

They can learn on their own

We’ve seen how far machine learning algorithms have come and how hardware advances, especially in GPUs, have pushed machine learning to new extremes. However, one thing has remained constant: a human expert is always required to perform certain key tasks that set a machine learning system on its path. The human element is required to preprocess the data, select the key parameters, choose the most apt ML algorithm for the learning to begin, tweak the system during the training phase to ensure accuracy and, lastly, analyse precisely whether the system is doing what it set out to do.

Computer systems are now able to perform tasks that normally require human intelligence

With AutoML, you can say goodbye to the humans. The rapid growth of ML in the last few years has led to increased demand for ML solutions that work out of the box and require next to no expertise to set up. AutoML does exactly that. It provides an array of tools that any novice can apply to a data set, from meta-learning to traditional algorithms that use Bayesian classification. It won’t be long before complex AI can finally build and improve itself over time.
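At its core, AutoML automates the “select the most apt algorithm and tweak it” steps described above. The Python sketch below does that by brute force: it fits every candidate in a small, hypothetical search space on training data and keeps whichever scores best on validation data. Real AutoML tools search far more cleverly (for example with meta-learning or Bayesian optimisation), so the exhaustive loop here is a deliberately simple stand-in, and the data set is invented.

```python
import random
from math import dist

def knn(train, k):
    """k-nearest-neighbour classifier: majority vote of the k closest training points."""
    def predict(p):
        nearest = sorted(train, key=lambda ex: dist(ex[0], p))[:k]
        return int(sum(label for _, label in nearest) * 2 > k)
    return predict

def nearest_centroid(train):
    """Predict whichever class has the closer mean point."""
    def mean(points):
        return tuple(sum(c) / len(points) for c in zip(*points))
    c0 = mean([p for p, y in train if y == 0])
    c1 = mean([p for p, y in train if y == 1])
    return lambda p: int(dist(p, c1) < dist(p, c0))

# Hypothetical search space: (algorithm, setting) combinations an expert would otherwise try by hand.
CANDIDATES = {f"knn(k={k})": (lambda tr, k=k: knn(tr, k)) for k in (1, 3, 5, 7)}
CANDIDATES["nearest_centroid"] = nearest_centroid

def accuracy(model, data):
    return sum(model(p) == y for p, y in data) / len(data)

def auto_select(train, val):
    """Fit every candidate on the training set, score it on the validation set, keep the best."""
    scores = {name: accuracy(build(train), val) for name, build in CANDIDATES.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]

# Toy data: 2-D points labelled 1 when x + y > 0.
rng = random.Random(0)

def sample(n):
    points = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    return [(p, int(p[0] + p[1] > 0)) for p in points]

train, val = sample(150), sample(50)
best_name, best_score = auto_select(train, val)
print(best_name, round(best_score, 2))
```

The novice only supplies data; choosing between algorithms and settings happens inside auto_select. Scaling the same loop to hundreds of algorithms and settings is what makes smarter search strategies necessary.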

They can keep secrets

Another one from the folks at Google Brain, this experiment set up three neural networks. Two of them, Alice and Bob, shared a secret key, which was used to encrypt communication between the two. The third neural network, called Eve, was the snoop who tried to eavesdrop on the conversation between Alice and Bob. Eve’s success was measured by how accurately she could reconstruct the secret messages. Alice and Bob’s success was measured by how accurately Bob could decrypt the messages and how little of them Eve could decrypt.
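This scoring can be written down as two loss functions that each side tries to minimise. The Python sketch below follows the general shape described in the Google Brain paper, assuming bits encoded as 0/1 so that a network’s error is simply the number of wrong bits; the paper’s exact encoding and normalisation may differ, and no networks are trained here.

```python
def reconstruction_error(plaintext, guess):
    """L1 distance between the true bits and a network's guess (bits as 0/1 numbers)."""
    return sum(abs(p - g) for p, g in zip(plaintext, guess))

def eve_loss(plaintext, eve_guess):
    # Eve just wants to recover the plaintext: fewer wrong bits is better.
    return reconstruction_error(plaintext, eve_guess)

def alice_bob_loss(plaintext, bob_guess, eve_guess):
    n = len(plaintext)
    bob_err = reconstruction_error(plaintext, bob_guess)
    eve_err = reconstruction_error(plaintext, eve_guess)
    # Random guessing gets about n/2 bits wrong. Alice and Bob are penalised whenever Eve
    # does better OR worse than chance (a consistently wrong Eve could just flip her bits).
    return bob_err + (n / 2 - eve_err) ** 2 / (n / 2) ** 2

message = [1, 0, 1, 1, 0, 0, 1, 0]
print(alice_bob_loss(message, bob_guess=message, eve_guess=message))                   # 1.0
print(alice_bob_loss(message, bob_guess=message, eve_guess=[0, 1, 0, 0, 0, 0, 1, 0]))  # 0.0
```

The squared term is why Alice and Bob are not simply rewarded for making Eve wrong: an Eve who got every bit wrong could flip her answers and read everything, so the target is for her to do no better than a coin toss.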

Computers are getting better at breaking encryption

This set up an adversarial contest between the three networks, with each improving over time. Alice and Bob eventually became very good at producing encryption that Eve could not crack. That’s right: neural networks created an encryption method that another neural network could not crack. Nobody taught them any cryptographic algorithm; they arrived at these methods entirely on their own.

All aboard the Open Source train

2016 was a huge year for AI, and quite a lot of it has gone the open source way. Google, which had open-sourced TensorFlow in late 2015, started supporting Keras, a deep learning library for Python. Microsoft open-sourced CNTK (the Microsoft Cognitive Toolkit), its deep learning toolkit. The Chinese web-services company Baidu also open-sourced its deep learning platform under the name PaddlePaddle. Amazon has opted to use MXNet in its AWS ML platform and, lastly, Facebook is backing Torch and Caffe.

Google open-sourced TensorFlow

2017 will be bigger

While 2016 accomplished so much, 2017 looks to be even bigger, with leaps of advancement predicted in every single domain. But two things stand out: chatbots and self-driving automobiles. While chatbots had a few setbacks in 2016, namely Microsoft’s Tay going on racist rants thanks to hundreds of thousands of Twitter users feeding it racist diatribes, 2017 seems a lot more promising. Google’s Allo, Amazon’s Alexa and Apple’s Siri are all paving the way forward, interpreting natural requests better and responding more intuitively and naturally. Given the rapid pace of improvements, we hope to see a lot more human-like chatbots in 2017.

Google's self-driving car

Self-driving automobiles are doing well enough, but there have been a few legal setbacks and they have yet to gain mainstream acceptance. 2017 should probably be the year when public transportation systems gain a level of autonomy. The consumer segment should follow right behind, but given the pushback from the traditional automotive industry, this could take a few more years. Nevertheless, 2017 will see a huge leap, at least in industrial applications of self-driving vehicles.

Closer to the singularity

One of the prime reasons why Stephen Hawking and Elon Musk are worried is that once AI achieves the ability to communicate secretly and teach itself, it will give rise to a runaway effect resulting in a superintelligence. In an interview with the BBC, Mr. Hawking said, “It would take off on its own, and redesign itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

HAL 9000 pretty much embodied everything that could go wrong with AI

Elon Musk, on the other hand, is worried about one particular company, which he hasn’t named, and he decided to do something about it. And thus, OpenAI was born. It’s a non-profit AI research company that aims to develop friendly AI, which hopefully doesn’t end up like HAL 9000 from Arthur C. Clarke’s 2001: A Space Odyssey.

Whether machines supersede us as the dominant race or we form a peaceful society where AI and humans co-exist remains to be seen, but one thing’s for certain: one or the other will happen in our lifetime.

This article was first published in the January 2017 issue of Digit magazine.

Mithun Mohandas

Mithun Mohandas is an Indian technology journalist with 10 years of experience covering consumer technology. He is currently employed at Digit in the capacity of a Managing Editor. Mithun has a background in Computer Engineering and was an active member of the IEEE during his college days. He has a penchant for digging deep to unravel what makes a device tick. If there's a transistor in it, Mithun's probably going to rip it apart till he finds it. At Digit, he covers processors, graphics cards, storage media, displays and networking devices aside from anything developer related. As an avid PC gamer, he prefers RTS and FPS titles, and can be quite competitive in a race to the finish line. He only gets consoles for the exclusives. He can be seen playing Valorant, World of Tanks, HITMAN and the occasional Age of Empires or being the voice behind hundreds of Digit videos.