AI hallucination in LLMs and beyond: Will it ever be fixed?

Despite going mainstream two years ago, generative AI products and services are arguably still in their infancy, and it’s hard not to marvel at their potent, transformative power. Even this early in its adoption curve, GenAI continues to impress. With broad consensus that GenAI is the best thing since sliced bread, capable of indulging our whims and fancies beyond our wildest imagination, the honeymoon period is well and truly on. It seems these AI chatbots and text-to-image generators can do no wrong. Unless, of course, they do, at which point the honeymoon ends rather abruptly.

Just like us mere mortals, GenAI isn’t without its flaws, sometimes subtle, sometimes glaringly obvious. In its myriad attempts to conjure up text and images out of thin air, AI tends to make factual mistakes. In other words, it hallucinates. These are instances where GenAI models produce incorrect, illogical or purely nonsensical output that amounts to beautifully wrapped gibberish.

From Google Gemini’s historically inaccurate images to Meta AI’s gender-biased pictures, from ChatGPT’s imaginary academic citations to Microsoft’s Bing Copilot giving erroneous information, these mistakes are noteworthy. Call it inference failure or Woke AI, they’re all shades of AI hallucination on display. Needless to say, these AI hallucinations have been shocking, embarrassing and deeply concerning, giving even the most ardent GenAI evangelists and gung-ho AI fans some serious pause. In fact, take any LLM out there (LLMs being one of the pillars of GenAI currently) and it’s all but guaranteed to make mistakes in something as simple as document summarisation. No jokes!

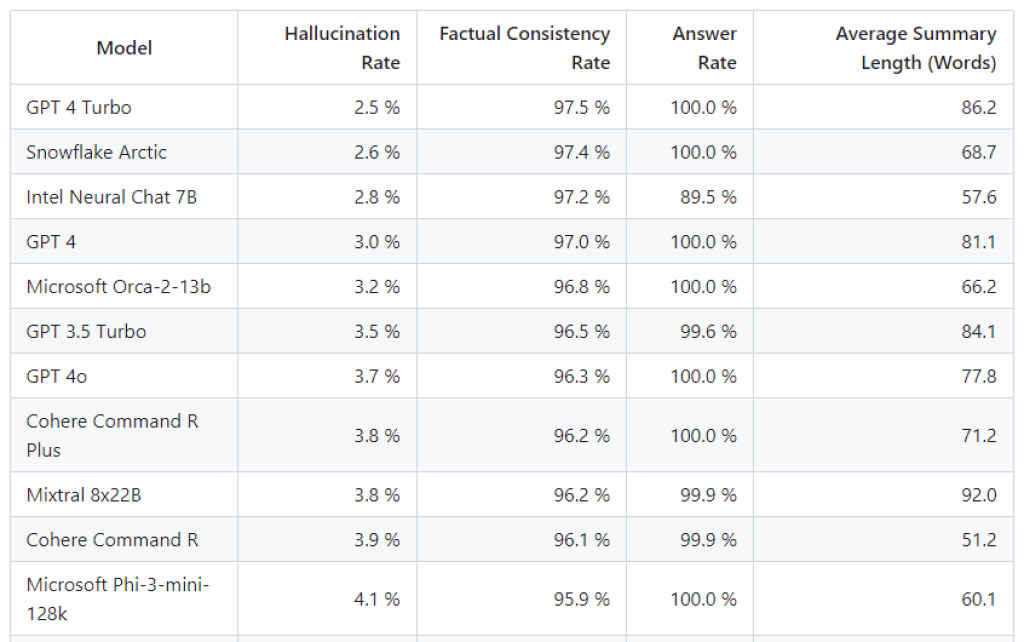

Researchers have created a public leaderboard on GitHub to track the hallucination rates of popular LLMs. They built an AI model to detect hallucinations in LLM outputs, then fed 1,000 short documents to various LLMs and measured the rate of factual consistency and hallucination in the summaries they produced. The models were also scored on their answer rate and average summary length. According to the leaderboard, some of the LLMs with the lowest hallucination rates are GPT-4 Turbo, Snowflake Arctic, and Intel Neural Chat 7B. The researchers are also building a leaderboard on the citation accuracy of LLMs, a crucial hurdle to overcome in improving factual consistency.
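To make those four columns concrete, here is a minimal sketch of how such scores could be tallied. It is not the leaderboard’s own code: the `SummaryResult` record and the `leaderboard_metrics` function are hypothetical names, and the `consistent` flag is assumed to come from whatever hallucination detector is used to judge each summary.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class SummaryResult:
    """One document's outcome (hypothetical record, not the leaderboard's format)."""
    summary: Optional[str]  # None when the model declined to summarise
    consistent: bool        # verdict from an assumed hallucination detector


def leaderboard_metrics(results: List[SummaryResult]) -> Dict[str, float]:
    """Tally answer rate, factual consistency, hallucination rate and summary length."""
    answered = [r for r in results if r.summary is not None]
    if not answered:
        raise ValueError("no answered documents to score")
    consistency = sum(r.consistent for r in answered) / len(answered)
    avg_words = sum(len(r.summary.split()) for r in answered) / len(answered)
    return {
        "answer_rate": len(answered) / len(results),
        "factual_consistency_rate": consistency,
        # Hallucination rate is simply the complement of factual consistency.
        "hallucination_rate": 1 - consistency,
        "avg_summary_words": avg_words,
    }
```

In this sketch, rates are computed only over the documents a model actually summarised, so a model cannot lower its hallucination rate just by refusing to answer; the refusals show up in the answer rate instead.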

Why does AI hallucinate?

AI hallucinations in popular LLMs like Llama 2, GPT-3.5 and Claude Sonnet are all ultimately linked to their training data. However gigantic that data may be, if it carries built-in bias of some kind, the LLM’s output can contain hallucinated facts that reinforce and pass on that bias in some form or another, similar to the Google Gemini blunders, for example. On the other end of the spectrum, a lack of sufficiently varied data on a given subject can also lead to hallucinations whenever the LLM is prompted on a topic it isn’t well-versed enough to answer with authority.

If an LLM is trained on a mix of code and natural language data, it’s very likely to hallucinate nonsensical code when it encounters a programming concept outside its training dataset. Similarly, if the training data of image generation models like Midjourney or Stable Diffusion, which learn from vast collections of images, consisted mostly of Western architecture, the models will struggle to generate realistic or believable images of traditional Indian architecture. Instead, they will invent, or hallucinate, a mish-mash of architectural variations that doesn’t pass muster.

Generative AI video models like MovieGAN and OpenAI Sora, which aim to generate realistic videos from text input, suffer from similar issues right now. If their training data doesn’t capture the full range of human motion, they will generate human forms performing physically impossible movements, as AI-generated videos of human gymnasts have amply demonstrated.

Last year, a TikTok user self-released a song called “Heart On My Sleeve,” with vocals that sounded eerily similar to Drake and The Weeknd. The song clocked over 15 million views on TikTok, not to mention hundreds of thousands more on Spotify and YouTube. If the viral hit was generated using an AI-based sound generation tool, chances are the tool was trained heavily on Western hip-hop music, and that it wouldn’t be great at generating vocals that sound like Lata Mangeshkar. A lack of quality training data is probably why we haven’t yet heard an AI-generated song in the voice of a famous Indian singer.

Could AI hallucinations also be linked to a lack of effort? Because the AI genie is well and truly out of the bottle, and there’s no going back to non-GenAI times, companies and startups are locked in a furious race to release half-baked AI products to gain first-mover advantage and capture market share. These are among the key findings of a recent report from Aporia, which surveyed about 1,000 AI and ML professionals from North America and the UK, all working at companies with 500 to 7,000 employees across sectors such as finance, insurance, healthcare and travel.

Aporia’s findings reveal a noteworthy trend among engineers working with LLMs and generative AI. A shocking 93 percent of machine learning engineers reported encountering issues with AI-based production models on a daily or weekly basis, while 89 percent acknowledged encountering hallucinations in these AI systems. According to the survey, these distortions often materialise as factual inaccuracies, biases, or potentially harmful content, underscoring the importance of robust monitoring and control mechanisms to mitigate AI hallucination.

Can AI hallucination be detected and stopped?

University of Oxford researchers seem to have made significant progress in ensuring the reliability of AI-generated information, with an approach that tackles AI hallucination head-on. Their study, published in Nature, introduces a novel method for detecting when LLMs hallucinate by inventing plausible-sounding but imaginary facts. The method analyses the statistics behind a model’s answers, looking specifically at uncertainty in the meaning of what is generated rather than in its exact wording. That lets it determine whether the model is genuinely unsure about the answer it gives to a prompt, or merely has several ways of phrasing the same answer. According to the researchers, the new method outperformed existing ones at detecting incorrect answers from LLMs, paving the way for more reliable deployment of GenAI in contexts where errors can have serious consequences, such as legal or medical question-answering.
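The idea of measuring uncertainty over meanings can be sketched in a few lines: ask the model the same question several times, group the answers that mean the same thing, and compute the entropy over those groups. The code below is a simplified illustration and not the researchers’ implementation. The function names are hypothetical, and `same_meaning` is an assumed stand-in for whatever judges two answers to be equivalent (a real system would need something far more capable than string matching).

```python
import math
from typing import Callable, List


def cluster_by_meaning(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily group answers that the supplied judge says mean the same thing."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if same_meaning(cluster[0], answer):
                cluster.append(answer)
                break
        else:
            clusters.append([answer])  # no match found: start a new meaning cluster
    return clusters


def semantic_entropy(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> float:
    """Entropy over meaning clusters: near zero when answers agree, high when they scatter."""
    clusters = cluster_by_meaning(answers, same_meaning)
    total = len(answers)
    return -sum(
        (len(c) / total) * math.log(len(c) / total) for c in clusters
    )


def toy_same_meaning(a: str, b: str) -> bool:
    """Toy judge (assumption for illustration): compare the final word, ignoring case and punctuation."""
    last_word = lambda s: s.lower().strip(" .!?").split()[-1]
    return last_word(a) == last_word(b)
```

With the toy judge, the samples "Paris", "It is Paris." and "The capital is Paris" fall into a single cluster and score zero, while four samples naming four different cities score log(4), which flags the answer as one the model is likely making up.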

Microsoft also claims to tackle AI hallucinations through new tools in its Azure AI Studio suite for enterprise customers, according to a report by The Verge. The tools block malicious prompts that trick a customer’s AI into deviating from its training data, analyse the model’s responses to check whether they contain fabricated information, and assess potential vulnerabilities in the model itself. These features readily integrate with popular GenAI models like GPT-4 and Llama, according to Microsoft, giving its Azure cloud users more control in preventing unintended and potentially damaging AI outputs.

Other big tech players aren’t sitting idle in the face of AI hallucinations. Beyond recognising the importance of high-quality training data for LLMs, Google Cloud Platform employs techniques like regularisation, which penalises GenAI models for making extreme predictions and so prevents them from overfitting to the training data and generating hallucinated outputs. Amazon uses a similar approach in its online cloud empire, with AWS (Amazon Web Services) also exploring approaches like Retrieval-Augmented Generation (RAG). RAG combines the LLM’s text generation capabilities with a retrieval system that searches for relevant information, which helps the LLM stay grounded in fact while generating text and reduces the chances of AI hallucination.
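The retrieval half of RAG can be illustrated with a toy example. The sketch below is not how AWS or any other vendor implements it: the function names are hypothetical, the word-overlap scoring is a deliberately crude assumption standing in for a proper search index, and the final call to an LLM is left out entirely. It only shows the shape of the technique, which is to fetch relevant passages first and then hand them to the model alongside the question.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most words with the query (toy scoring)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_grounded_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved passages."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

The instruction to admit ignorance matters as much as the retrieved context: it gives the model a sanctioned alternative to inventing an answer when the retrieval step comes back empty-handed.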

Long story short, it appears there’s no single solution for stopping AI hallucinations. With GenAI deployments across various industries still accelerating, the problem of AI hallucination remains an ongoing area of research for all major tech players and academia. In fact, one research paper from the National University of Singapore asserts that AI hallucination is inevitable due to an innate limitation of LLMs. The study offers a mathematical proof that no matter how advanced an LLM may be, it cannot learn everything, and so it will inevitably generate inaccurate outputs or hallucinate when faced with certain real-world scenarios.

If it’s an unintended feature and not a bug, there’s an argument to be made that AI hallucination is actually good for some use cases, according to IBM. AI can create dreamlike visuals and inspire new artistic styles, or reveal hidden connections and offer fresh perspectives on complex information, which can be great for data analysis. Mind-bending virtual worlds hallucinated by AI can enrich gaming and VR experiences as well.

Depending on how you look at it, the phenomenon of AI hallucination seems to be both a curse and a blessing in disguise (but it’s mostly a curse). It mirrors the complexities of the human brain and cognitive thought, a process shrouded in mystery that neither medical researchers nor computer scientists fully understand. Just as our brains can sometimes misinterpret or fill gaps in information, creating illusions or mistaken perceptions, AI systems too run into limits when interpreting data. While efforts are underway to enhance their accuracy and reliability, these occasional AI hallucinations also present opportunities for creativity and innovation, for thinking out of the box, similar to how our minds can unexpectedly spark new ideas.

The realisation that GenAI isn’t too dissimilar from us when it comes to brainfarts should make you appreciate your LLM’s output even more. Until the experts lobotomise the problem, keep double- and triple-checking your favourite LLM’s responses.

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.