Using Gesture Recognition as a Differentiation Feature on Android

Overview

Sensors found in mobile devices typically include an accelerometer, a gyroscope, a magnetometer, a pressure sensor, and an ambient light sensor. Users generate motion events when they move, shake, or tilt the device, and we can use a sensor's raw data to implement motion recognition. For example, you could mute an incoming call by flipping the phone over, or launch the camera application by lifting the device. Convenient sensor-based features like these help deliver a better user experience.

Version 1.6.7 of the Intel® Context Sensing SDK for Android* adds several new context types, such as device position, ear touch, flick gesture, and glyph gesture. In this paper, we introduce how to extract useful information from sensor data, and then use an Intel Context Sensing SDK example to demonstrate flick, shake, and glyph detection.

Introduction

At the hardware layer, a common question is how to connect sensors to the application processor (AP). Figure 1 shows three ways to connect sensors to the AP: direct attach, a discrete sensor hub, and an integrated sensor hub (ISH).

When sensors are connected directly to the AP, this is called direct attach. The drawback of direct attach is that the AP must consume power to detect changes in sensor data. The next evolution is the discrete sensor hub, which overcomes this power problem and lets the sensors work in an always-on mode. Even if the AP enters the S3[1] state, the sensor hub can use an interrupt signal to wake up the AP. The next evolution is the integrated sensor hub. Here, the AP itself contains the sensor hub, which holds down the BOM cost of the whole device.

A sensor hub is built around a microcontroller unit (MCU), so you can write your algorithm in a supported language such as C/C++, compile it, and then download the binary to the MCU. In 2015, Intel will release the Cherry Trail-T platform for tablets and the Skylake platform for 2-in-1 devices, both of which employ sensor hubs. See [2] for more information about the use of integrated sensor hubs.

Figure 2, illustrating the sensor coordinate system, shows that the accelerometer measures acceleration along the x, y, and z axes, while the gyroscope measures the rate of rotation around the x, y, and z axes.

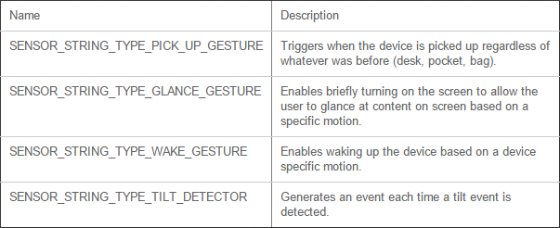
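Because the gyroscope reports an angular rate (Android's gyroscope sensor reports radians per second) rather than an angle, the amount the device has rotated is obtained by integrating that rate over time. The following minimal sketch does this with the trapezoidal rule; the function name and the synthetic samples are illustrative assumptions, and real code would also have to correct for gyroscope drift.

```python
import math
from typing import List, Sequence


def integrate_rotation(timestamps_s: Sequence[float],
                       rates_rad_s: Sequence[float]) -> List[float]:
    """Integrate angular-rate samples (rad/s) into a cumulative angle in degrees.

    Uses the trapezoidal rule between consecutive samples. Timestamps are in
    seconds and must be strictly increasing.
    """
    if len(timestamps_s) != len(rates_rad_s):
        raise ValueError("timestamps and rates must have the same length")
    if not timestamps_s:
        return []
    angle_rad = 0.0
    angles_deg = [0.0]
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        # The mean of two neighbouring rates times the interval approximates the area.
        angle_rad += 0.5 * (rates_rad_s[i] + rates_rad_s[i - 1]) * dt
        angles_deg.append(math.degrees(angle_rad))
    return angles_deg


if __name__ == "__main__":
    # Hypothetical data: a constant pi/2 rad/s rotation about z for 1 s at 100 Hz.
    t = [i / 100 for i in range(101)]
    rate = [math.pi / 2] * len(t)
    print(round(integrate_rotation(t, rate)[-1], 3))  # prints 90.0
```

A 90° turn followed by a turn back, as in a later figure, shows up as the integrated angle rising to about 90 and returning to about 0.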

Table 1 shows new gestures included in the Android Lollipop release.

Table 1: Android* Lollipop’s new gestures

These gestures are defined in the Android Lollipop source tree, in hardware/libhardware/include/hardware/sensors.h.

Gesture recognition process

The gesture recognition process consists of three stages: preprocessing, feature extraction, and template matching. Figure 4 shows the process.

The following sections analyze each stage.

Preprocessing

Preprocessing starts once the raw data has been collected. Figure 5 shows gyroscope data for a single right flick of the device, and Figure 6 shows accelerometer data for a single right flick.

To plot such graphs, we can write an Android application that sends sensor data over a network interface, and a Python* script that runs on a PC to receive it. This lets us view the sensor graphs from the device in real time.

This setup requires the following:

- A PC running a Python script that receives the sensor data.

- An application running on the DUT (device under test) that collects sensor data and sends it over the network.

- The Android adb command that forwards the receive and send ports: `adb forward tcp:<pc_port> tcp:<device_port>`.
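The PC side can be a short Python script. After running, for example, `adb forward tcp:5000 tcp:5000`, connections to port 5000 on the PC are forwarded to port 5000 on the device, so the PC script acts as a TCP client while the DUT application listens on the device port. The sketch below assumes a hypothetical wire format of one text line per sample, `sensor,timestamp_ns,x,y,z`; the port number, format, and function names are all assumptions to adapt to whatever your sender application actually emits.

```python
import socket
from typing import Iterable, Iterator, NamedTuple


class Sample(NamedTuple):
    """One reading in the hypothetical 'sensor,timestamp_ns,x,y,z' format."""
    sensor: str
    timestamp_ns: int
    x: float
    y: float
    z: float


def parse_sample(line: str) -> Sample:
    """Parse one line; raises ValueError if the line is malformed."""
    sensor, ts, x, y, z = line.strip().split(",")
    return Sample(sensor, int(ts), float(x), float(y), float(z))


def read_samples(lines: Iterable[str]) -> Iterator[Sample]:
    """Yield samples from any iterable of text lines, skipping bad lines."""
    for line in lines:
        try:
            yield parse_sample(line)
        except ValueError:
            continue  # blank, partial, or garbage line


def receive(host: str = "127.0.0.1", port: int = 5000) -> None:
    """Connect to the adb-forwarded port and print samples as they arrive."""
    with socket.create_connection((host, port)) as sock:
        # makefile() wraps the socket so it can be read line by line.
        with sock.makefile("r", encoding="utf-8", newline="\n") as stream:
            for sample in read_samples(stream):
                print(sample)
```

Calling `receive()` after the forward is in place prints samples live; feeding them into a plotting library instead of `print` gives graphs like those in Figures 5 and 6.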

In this stage, we remove singular points (outliers) and, as is common, apply a filter to suppress noise. The graph in Figure 8 shows the device being turned 90° and then turned back to its initial position.
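The source does not say which filters it uses, so the sketch below assumes two common choices: a sliding median filter to remove isolated spikes (singular points), followed by a first-order low-pass (exponential moving average) filter to smooth the remaining noise. The window size, `alpha`, and function names are illustrative assumptions to be tuned for the sensor's sampling rate.

```python
from statistics import median
from typing import List, Optional, Sequence


def remove_spikes(values: Sequence[float], window: int = 5) -> List[float]:
    """Replace each sample with the median of its neighbourhood.

    A sliding median suppresses isolated outliers while keeping step edges
    mostly intact. The window is truncated at both ends of the signal.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1])
            for i in range(len(values))]


def low_pass(values: Sequence[float], alpha: float = 0.2) -> List[float]:
    """First-order IIR low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: List[float] = []
    y: Optional[float] = None
    for x in values:
        y = x if y is None else y + alpha * (x - y)
        out.append(y)
    return out


def preprocess(values: Sequence[float]) -> List[float]:
    """Spike removal followed by smoothing."""
    return low_pass(remove_spikes(values))
```

Running the median first matters: a low-pass filter alone would smear a single large spike across several samples instead of discarding it.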

Feature extraction

A sensor signal may contain noise that affects recognition results, which are commonly measured by the false acceptance rate (FAR) and the false rejection rate (FRR). By fusing data from different sensors, we can get more accurate recognition results. Sensor fusion[5] has been applied in many mobile devices; Figure 9 shows an example that uses the accelerometer, magnetometer, and gyroscope to obtain device orientation. Feature extraction commonly uses FFT and zero-crossing methods to obtain feature values. The accelerometer and magnetometer are easily affected by EMI, so these sensors usually need to be calibrated.
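The two feature methods named above can be sketched in a few lines. The zero-crossing count measures how often a signal changes sign, and an FFT exposes its dominant frequency. To stay dependency-free, this sketch assumes a naive O(n²) DFT in place of an FFT (they compute the same bins; `numpy.fft.rfft` would be the faster choice on longer windows); the function names are illustrative.

```python
import cmath
import math
from typing import Sequence, Tuple


def zero_crossings(values: Sequence[float]) -> int:
    """Count sign changes between consecutive non-zero samples."""
    count = 0
    prev_sign = 0
    for v in values:
        sign = (v > 0) - (v < 0)
        if sign == 0:
            continue  # exact zeros neither start nor end a crossing
        if prev_sign != 0 and sign != prev_sign:
            count += 1
        prev_sign = sign
    return count


def dominant_frequency(values: Sequence[float],
                       sample_rate_hz: float) -> Tuple[float, float]:
    """Return (frequency_hz, magnitude) of the strongest non-DC DFT bin."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = sum(values) / n
    centered = [v - mean for v in values]  # drop the DC offset (e.g. gravity)
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):
        acc = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        if abs(acc) > best_mag:
            best_k, best_mag = k, abs(acc)
    return best_k * sample_rate_hz / n, best_mag
```

For a 5 Hz sine sampled at 100 Hz for one second, `dominant_frequency` reports 5.0 Hz.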

Typical features include the maximum and minimum values, peaks, and valleys; we extract these features and pass them to the next step.
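A minimal sketch of extracting these features from one axis of preprocessed data follows. It assumes a peak is a local maximum above `+threshold` and a valley a local minimum below `-threshold`, so that small ripples are ignored; the threshold value, the dictionary layout, and the `"VPV"`-style sequence string are illustrative choices, not the SDK's representation.

```python
from typing import Dict, List, Sequence, Tuple


def find_extrema(values: Sequence[float],
                 threshold: float) -> List[Tuple[int, str]]:
    """Return (index, 'peak' | 'valley') for local extrema beyond +/-threshold.

    The strict comparison on the left neighbour reports a flat top or
    bottom only once, at its first sample.
    """
    extrema = []
    for i in range(1, len(values) - 1):
        prev_v, v, next_v = values[i - 1], values[i], values[i + 1]
        if v > threshold and v > prev_v and v >= next_v:
            extrema.append((i, "peak"))
        elif v < -threshold and v < prev_v and v <= next_v:
            extrema.append((i, "valley"))
    return extrema


def extract_features(values: Sequence[float],
                     threshold: float) -> Dict[str, object]:
    """Collect max/min plus peak and valley counts and their order."""
    if not values:
        raise ValueError("values must not be empty")
    extrema = find_extrema(values, threshold)
    return {
        "max": max(values),
        "min": min(values),
        "peaks": sum(1 for _, kind in extrema if kind == "peak"),
        "valleys": sum(1 for _, kind in extrema if kind == "valley"),
        # Order of extrema, e.g. "VPV" for valley, peak, valley.
        "sequence": "".join("P" if kind == "peak" else "V"
                            for _, kind in extrema),
    }
```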

Template matching

By simply analyzing the graph of the accelerometer sensor, we find that:

- A typical left flick gesture contains two valleys and one peak

- A typical double left flick gesture contains three valleys and two peaks

This implies that we can design a very simple state machine for flick gesture recognition. Compared with gesture recognition based on a hidden Markov model (HMM)[6], this approach is more robust and has higher precision.
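The state machine idea can be sketched as follows, under stated assumptions: each excursion of one accelerometer axis beyond a hypothetical `threshold` becomes a `V` (valley) or `P` (peak) event, and a transition table walks valley, peak, valley for a single left flick and valley, peak, valley, peak, valley for a double flick, matching the patterns listed above. Which axis and sign correspond to "left" depends on the device, and a production detector would also enforce timing limits between events; both are omitted here.

```python
from enum import Enum, auto
from typing import Iterable, List


class State(Enum):
    IDLE = auto()
    V = auto()      # valley
    VP = auto()     # valley, peak
    VPV = auto()    # single flick matched
    VPVP = auto()   # single flick plus a trailing peak
    VPVPV = auto()  # double flick matched


# (state, event) -> next state; any other pair restarts the match.
TRANSITIONS = {
    (State.IDLE, "V"): State.V,
    (State.V, "P"): State.VP,
    (State.VP, "V"): State.VPV,
    (State.VPV, "P"): State.VPVP,
    (State.VPVP, "V"): State.VPVPV,
}


def threshold_events(values: Iterable[float], threshold: float) -> List[str]:
    """Emit one 'P' or 'V' per excursion above +threshold or below -threshold."""
    events = []
    region = 0  # +1 above +threshold, -1 below -threshold, 0 in between
    for v in values:
        current = 1 if v > threshold else -1 if v < -threshold else 0
        if current != 0 and current != region:
            events.append("P" if current > 0 else "V")
        region = current
    return events


def classify_left_flick(events: Iterable[str]) -> str:
    """Run the state machine; return 'none', 'flick', or 'double_flick'."""
    state = State.IDLE
    for event in events:
        nxt = TRANSITIONS.get((state, event))
        if nxt is None:
            # Unexpected event: restart, letting a valley begin a new match.
            nxt = State.V if event == "V" else State.IDLE
        state = nxt
    if state == State.VPVPV:
        return "double_flick"
    if state in (State.VPV, State.VPVP):
        return "flick"  # a trailing peak without a closing valley stays a single flick
    return "none"
```

For example, `classify_left_flick(threshold_events(samples, 1.0))` on a window containing one valley, peak, valley excursion returns `"flick"`. The table-driven form keeps each gesture pattern explicit, which is what makes this approach cheap enough to run on a sensor hub.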

Case study: Intel® Context Sensing SDK

The Intel Context Sensing SDK[7] uses sensors as providers that deliver sensor data to the context sensing services. Figure 11 shows the detailed architecture.

Currently, the SDK supports glyph, flick, and ear_touch gesture recognition. You can find more information in the latest release notes[8], and the documentation explains how to develop applications. The following shows a device running the ContextSensingApiFlowSample sample application.

The Intel® Context Sensing SDK supports flicks along the accelerometer's x-axis and y-axis directions; z-axis flicks are not supported.

Summary

Sensors are widely used in modern computing devices, and motion recognition is a significant differentiating feature for attracting users to mobile devices. Making good use of sensors is an important way to improve the mobile user experience. The Intel Context Sensing SDK v1.6.7 makes it simpler to build the kind of sensor-based features that users are looking for.


Source: https://software.intel.com/en-us/articles/using-gesture-recognition-as-differentiation-feature-on-android