The Small Batches Principle – Building Big One Piece at a Time

The concept of “Agile” is usually associated with software development, and much of what is being said about Agile is really tailored for pure software development. Still, people are trying to apply Agile to system and hardware development, but the analogy and terminology always feel a bit off. Rapid releases of half-finished designs and quick patches are just not how chips are built.

However, I found a better way to talk about “non-waterfall” development (iterative and incremental development): “the small batches principle”, as described in a Communications of the ACM article from 2016 by Tom Limoncelli. The term might not be as catchy, but I think it is a better guiding principle for how to do effective and efficient system development. The small batches principle comes from the DevOps community, which takes a broader look at how to quickly develop and deploy software to users.

Incidentally, I think the principle makes it much easier to explain the benefits of simulation in hardware and system development: it lets us do work in small batches. Once you do small batches, agility, speed, and quality follow.

In this blog post, I will look at the overall principle, and how it works for software – and eventually also for hardware. In my next blog post, I will look more deeply into how we can get agile for hardware and hardware-software systems using simulation to implement the small batches principle in practice.

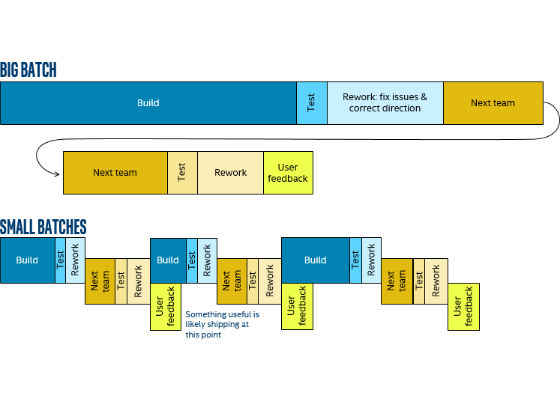

This is the “Small Batches Principle”:

[…] the small batches principle: it is better to do work in small batches than big leaps. Small batches permit us to deliver results faster, with higher quality and less stress.

I really like the “small batches” way to describe the idea of agile system development. It is the natural way to do things when you are a bit unsure of the method and the outcome – you build a little, evaluate the results, and adjust the direction if needed.

I recognize this instinctively – I always get nervous when I have to do something that involves a long sequence of actions with no good way to check the general direction during the process; it seems like a risky approach to things. I’d much rather break things down into small steps where continuous measurement and adjustment are possible. “Starting big” is almost always the wrong way to do something new.

For example, when writing code, I tend to break up the creation process into many small steps, making sure that each step works on its own before continuing. This builds my trust in the code and simplifies debugging, as the delta in each case is small. In scientific experiments, you strive to vary one parameter at a time in order to understand what goes on – you do not change everything at once.

When deploying a new technology in a company, you tend to start with a pilot to prove it out and make sure it works. It is like orienteering – you don’t just grab a direction and try to stick to it. Rather, you regularly stop and check your position and adjust the path through the woods depending on the lay of the land and where you are relative to the target.

In product development, the benefits of small batches are immediate. You get less waste since you get feedback quickly and can adjust. There is less risk of doing a lot of work that is useless.

You get lower latency, since when you have dependencies between teams, downstream teams can get started earlier. Instead of waiting for the first team to do their entire task and deliver it, you produce a stream of smaller releases that downstream teams can pick up and work from. In the end, ideally, the users of the system (whatever it is) get a stream of small releases that incrementally make it more complete and valuable. But the key is that they get something early and get in the feedback loop early.

When you apply this to software development, you get the frequent releases of a subset of the functionality that is typical for Agile methods and DevOps, as illustrated above. Note that I did not draw any overlap in the work of the teams, just a bounce back and forth as small pieces of functionality are iterated through multiple teams. In many cases, the upstream teams will keep working on their next piece of functionality while the downstream teams do their work – which is another optimization. Here, our focus is on the core idea to quickly get small pieces done in order to let downstream teams and users provide feedback.
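To put rough numbers on the latency argument, here is a minimal sketch of the arithmetic. It is my own toy model, not something taken from Limoncelli's article: it assumes two teams (stages), one time unit of effort per unit of work in each stage, no per-batch overhead, and the hypothetical helper names `first_feedback_time` and `total_time`.

```python
def first_feedback_time(batch_size, time_per_unit=1, stages=2):
    """Time until the first batch has passed through every stage."""
    return batch_size * time_per_unit * stages


def total_time(total_units, batch_size, time_per_unit=1, stages=2, overlap=False):
    """Time until all the work has passed through every stage.

    Without overlap, only one team works at a time (the bounce back and forth).
    With overlap, upstream starts its next batch while downstream is working.
    A final partial batch is counted as a full one, so this is an upper bound.
    """
    batches = -(-total_units // batch_size)  # ceiling division
    batch_time = batch_size * time_per_unit
    if not overlap:
        return batches * stages * batch_time
    # Pipeline: the first batch takes `stages` steps, each later batch adds one.
    return (stages + batches - 1) * batch_time


# Illustrative numbers only: 12 units of work, three different batch sizes.
for size in (12, 3, 1):
    print(size,
          first_feedback_time(size),
          total_time(12, size),
          total_time(12, size, overlap=True))
```

In this toy model, the total effort without overlap is the same for every batch size, but the first feedback arrives much earlier with small batches. Once the teams overlap, the total elapsed time shrinks as well. A real project pays some overhead per release, which this sketch deliberately ignores.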

Working in small batches and with low latency between teams reduces the time to fix bugs. The total amount of work done that can contain bugs is smaller, and the work is more recent in the minds of developers. Both factors make bugs quicker and cheaper to fix.

If we look beyond code-level bugs to concept-level bugs, working in small batches lowers the risk in development – by testing only small changes at each step, we quickly discover when we go wrong. At a high level, small batches is another way of saying that you build the minimum viable product that allows a concept, idea, or change to be tested and evaluated.

Working in small batches also tends to get things moving – a classic big-batch waterfall approach is heavy and offers plenty of opportunity for procrastination and delays. Completing a huge set of up-front requirements and stakeholder analysis can be exhausting and it easily gets to the point where it never feels complete enough to release. By working in small batches, each step is lighter and much easier to get done.

This is all great stuff. Where and how can we apply it?

Not Just Software Development…

What is really cool is that the small batches principle can be applied to big problems that are not just pure software development. For example, Tom Limoncelli describes how the small batches principle was applied to the failover management at Stack Overflow. There, they went from “testing” their failover management once per year as a result of a real disaster, to testing it very often as a deliberate action. This means that the number of changes that could introduce problems each time went down – that’s the batch size. The more often and regularly they did a (practice) failover, the easier it went.

I think this is a brilliant way to think about Agile and efficient development methods when applied to problems that do not look like software development.

Another example in the article deals with setting up a monitoring system for an IT infrastructure. Here, success was achieved by starting out small – monitoring a few things in a simple way, and then building on the success and learning from the small deployment. First, they tried to build the system by gathering all the requirements and getting everyone on board with a clear (and presumably huge) specification. This process basically never got anywhere. By instead starting small and building out what worked and what was useful, they got into a learning loop.

Small systems are more flexible and malleable; therefore, experiments are easier. Some experiments would work well, others wouldn't. Because they would keep things small and flexible, however, it would be easy to throw away the mistakes.

Great idea, that’s how I want to work! But how do we translate this to hardware development?

… But what about Hardware Development?

Limoncelli’s article talks about deploying functionality that is still based on software (even if the projects are not quite traditional software development projects). With software as the delivery, you can get usable, but bare-bones, systems in place quickly.

With software, the manufacturing cost is zero. There does not need to be any shipping of physical goods, and thus delivery to users can be very fast (at least for users close to the development team), or even entirely automatic in an ideal DevOps setting. It is possible to take a deployed system and patch and tweak it on the fly. In addition, when a mistake is discovered downstream, you can roll out a fix just as easily as the release.

On the other hand, hardware is hard. When designing new hardware or a new hardware-software system, deploying a half-finished system isn’t really an option. If a hardware designer tried to use the software model of “code a little, test a little” naively, progress would be painfully slow. There are real costs and significant latency involved in manufacturing, testing, shipping and deploying physical units of electronic systems – even if it is “just” a board using existing chips. If a mistake is made, an entirely new batch must be manufactured and shipped, or hardware recalled and patched up in the lab.

For chip development, the cost of a spin (a release in software terms) can easily get to millions of dollars – and the lead time to get from an idea to working silicon is very long.

So how can we get small batches into the development of hardware, chips, and systems? One answer is to use simulation, since simulation basically turns physical things into software code.

Simulation has been a breakthrough technology in many areas of engineering and development. A classic example is the development of safety features in cars. Once upon a time, ideas had to be tested by building a physical prototype and smashing it into a wall. Rather expensive and time-consuming. Today, designers build models in CAD tools and smash them virtually using sophisticated physical simulations. This shrinks the batch size and provides a much faster feedback loop, resulting in better products that get to market faster. It makes it possible to try more things since each test costs less time and effort.

In the next part of this blog series, we will look at some concrete examples of how computer simulation is used to allow small batches in silicon, hardware, and system design.

Closing Notes

It should be noted that there are cases when we build physical simulators of physical phenomena, like I discussed in my blog post on the Schiaparelli mission. Such simulation is typically more about validating a design and making sure the hardware turned out right, than exploring the design space.

Another thing worth noting is that it is fairly easy to prototype many types of control systems and embedded systems today using “maker”-style hardware kits. Such prototyping in a way simulates a product – but getting from such a prototype to an actual custom board design or custom chip design is rather more difficult. Hardware is still hard, unless you entirely use something someone else built.


Source: https://software.intel.com/en-us/blogs/2017/08/18/small-batches-with-simulation