Offload over Fabric to Intel Xeon Phi Processor: Tutorial

The OpenMP* 4.0 device constructs supported by the Intel® C++ Compiler can be used to offload a workload from an Intel® Xeon® processor-based host machine to Intel® Xeon Phi™ coprocessors over Peripheral Component Interconnect Express* (PCIe*). Offload over Fabric (OoF) extends this offload programming model to support the 2nd generation Intel® Xeon Phi™ processor: the Intel® Xeon® processor-based host machine uses OoF to offload a workload to 2nd generation Intel Xeon Phi processors over a high-speed network such as Intel® Omni-Path Architecture (Intel® OPA) or Mellanox InfiniBand*.

This tutorial shows how to install OoF software, configure the hardware, test the basic configuration, and enable OoF. Sample source code is provided to illustrate how OoF works.

Hardware Installation

In this tutorial, two machines are used: an Intel® Xeon® processor E5-2670 2.6 GHz serves as the host machine and an Intel® Xeon Phi™ processor serves as the target machine. Both host and target machines are running Red Hat Enterprise Linux* 7.2, and each has Gigabit Ethernet adapters to enable remote log in. Note that the hostnames of the host and target machines are host-device and knl-sb2 respectively.

First we need to set up a high-speed network. We used InfiniBand in our lab due to the hardware availability, but Intel OPA is also supported.

Prior to the test, both host and target machines are powered off to set up a high-speed network between the machines. Mellanox ConnectX*-3 VPI InfiniBand adapters are installed into PCIe slots in these machines and are connected using an InfiniBand cable with no intervening router. After rebooting the machines, we first verify that the Mellanox network adapter is installed on the host:

[host-device]$ lspci | grep Mellanox

84:00.0 Network controller: Mellanox Technologies MT27500 Family [ConnectX-3]

And on the target:

[knl-sb2 ~]$ lspci | grep Mellanox

01:00.0 Network controller: Mellanox Technologies MT27500 Family [ConnectX-3]

Software Installation

The host machine and target machines are running Red Hat Enterprise Linux 7.2. On the host, you can verify the current Linux kernel version:

[host-device]$ uname -a

Linux host-device 3.10.0-327.el7.x86_64 #1 SMP Thu Oct 29 17:29:29 EDT 2015 x86_64 x86_64 x86_64 GNU/Linux

You can also verify the current operating system kernel running on the target:

[knl-sb2 ~]$ uname -a

Linux knl-sb2 3.10.0-327.el7.x86_64 #1 SMP Thu Oct 29 17:29:29 EDT 2015 x86_64 x86_64 x86_64 GNU/Linux

On the host machine, install the latest OoF software here to enable OoF. In this tutorial, the OoF software version 1.4.0 for Red Hat Enterprise Linux 7.2 (xppsl-1.4.0-offload-host-rhel7.2.tar) was installed. Refer to the document “Intel® Xeon Phi™ Processor x200 Offload over Fabric User’s Guide” for details on the installation. In addition, the Intel® Parallel Studio XE 2017 is installed on the host to enable the OoF support, specifically support of offload programming models provided by the Intel compiler.

On the target machine, install the latest Intel Xeon Phi processor software here. In this tutorial, the Intel Xeon Phi processor software version 1.4.0 for Red Hat Enterprise Linux 7.2 (xppsl-1.4.0-rhel7.2.tar) was installed. Refer to the document “Intel® Xeon Phi™ Processor Software User’s Guide” for details on the installation.

On both host and target machines, the Mellanox OpenFabrics Enterprise Distribution (OFED) for Linux driver MLNX_OFED_LINUX 3.2-2 for Red Hat Enterprise Linux 7.2 is installed to set up the InfiniBand network between the host and target machines. This driver can be downloaded from www.mellanox.com (navigate to Products > Software > InfiniBand/VPI Drivers, and download Mellanox OFED Linux).

Basic Hardware Testing

After you have installed the Mellanox driver on both the host and target machines, test the network cards to ensure the Mellanox InfiniBand HCAs are working properly. To do this, bring the InfiniBand network up, and then test the network link using the ibping command.

First start InfiniBand and the subnet manager on the host, and then display the link information:

[host-device ~]$ sudo service openibd start

Loading HCA driver and Access Layer: [ OK ]

[host-device ~]$ sudo service opensm start

Redirecting to /bin/systemctl start opensm.service

[host-device ~]$ iblinkinfo

CA: host-device HCA-1:

0x7cfe900300a13b41 1 1[ ] ==( 4X 14.0625 Gbps Active/ LinkUp)==> 2 1[ ] "knl-sb2 HCA-1" ( )

CA: knl-sb2 HCA-1:

0xf4521403007d2b91 2 1[ ] ==( 4X 14.0625 Gbps Active/ LinkUp)==> 1 1[ ] "host-device HCA-1" ( )

Similarly, start InfiniBand and the subnet manager on the target, and then display the link information of each port in the InfiniBand network:

[knl-sb2 ~]$ sudo service openibd start

Loading HCA driver and Access Layer: [ OK ]

[knl-sb2 ~]$ sudo service opensm start

Redirecting to /bin/systemctl start opensm.service

[knl-sb2 ~]$ iblinkinfo

CA: host-device HCA-1:

0x7cfe900300a13b41 1 1[ ] ==( 4X 14.0625 Gbps Active/ LinkUp)==> 2 1[ ] "knl-sb2 HCA-1" ( )

CA: knl-sb2 HCA-1:

0xf4521403007d2b91 2 1[ ] ==( 4X 14.0625 Gbps Active/ LinkUp)==> 1 1[ ] "host-device HCA-1" ( )
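A listing like the one above can also be checked mechanically. The following is a minimal sketch, assuming the output layout shown in this tutorial (port lines that begin with a 0x GUID, and a healthy link reported as Active/LinkUp). The function name links_not_up is hypothetical and is not part of any InfiniBand tool:

```shell
# Count port lines in iblinkinfo output (read from stdin) that are NOT Active/LinkUp.
# Assumes the layout shown in this tutorial: each port line starts with a 0x GUID.
links_not_up() {
    awk '$1 ~ /^0x/ && $0 !~ /Active\/ *LinkUp/ { n++ } END { print n + 0 }'
}

# On a live system (requires the InfiniBand tools installed earlier):
#   iblinkinfo | links_not_up
```

A result of 0 means every listed port reports an active link.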

iblinkinfo reports the link information for all the ports in the fabric, one at the target machine and one at the host machine. Next, use the ibping command to test the link (it is equivalent to the ping command for Ethernet). Start the ibping server on the host machine using:

[host-device ~]$ ibping -S

From the target machine, ping the port GUID of the host (the 0x value that iblinkinfo lists under host-device):

[knl-sb2 ~]$ ibping -G 0x7cfe900300a13b41

Pong from host-device.(none) (Lid 1): time 0.259 ms

Pong from host-device.(none) (Lid 1): time 0.444 ms

Pong from host-device.(none) (Lid 1): time 0.494 ms

Similarly, start the ibping server on the target machine:

[knl-sb2 ~]$ ibping -S

This time, ping the port GUID of the target from the host:

[host-device ~]$ ibping -G 0xf4521403007d2b91

Pong from knl-sb2.jf.intel.com.(none) (Lid 2): time 0.469 ms

Pong from knl-sb2.jf.intel.com.(none) (Lid 2): time 0.585 ms

Pong from knl-sb2.jf.intel.com.(none) (Lid 2): time 0.572 ms
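The port GUIDs passed to ibping -G above are copied from the iblinkinfo listing. As a convenience, they can be extracted with a small helper. This is a sketch that assumes the "CA: <name> ..." header layout shown in this tutorial; port_guid_for is a hypothetical name:

```shell
# Print the port GUID listed under "CA: <name> ..." in iblinkinfo output read from stdin.
port_guid_for() {
    awk -v name="$1" '
        /^CA:/             { want = ($2 == name); next }
        want && $1 ~ /^0x/ { print $1; exit }
    '
}

# On a live system, ping the host's port from the target without copying the GUID by hand:
#   ibping -G "$(iblinkinfo | port_guid_for host-device)"
```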

IP over InfiniBand (IPoIB) Configuration

So far we have verified that the InfiniBand network is functional. Next, to use OoF, we must configure IP over InfiniBand (IPoIB). This configuration provides the target IP address that is used to offload computations over fabric.

First verify that the ib_ipoib driver is installed:

[host-device ~]$ lsmod | grep ib_ipoib

ib_ipoib 136906 0

ib_cm 47035 3 rdma_cm,ib_ucm,ib_ipoib

ib_sa 33950 5 rdma_cm,ib_cm,mlx4_ib,rdma_ucm,ib_ipoib

ib_core 141088 12 rdma_cm,ib_cm,ib_sa,iw_cm,mlx4_ib,mlx5_ib,ib_mad,ib_ucm,ib_umad,ib_uverbs,rdma_ucm,ib_ipoib

mlx_compat 16639 17 rdma_cm,ib_cm,ib_sa,iw_cm,mlx4_en,mlx4_ib,mlx5_ib,ib_mad,ib_ucm,ib_addr,ib_core,ib_umad,ib_uverbs,mlx4_core,mlx5_core,rdma_ucm ib_ipoib

If the ib_ipoib driver is not listed, you need to add the module to the Linux kernel using the following command:

[host-device ~]$ modprobe ib_ipoib
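Note that grep ib_ipoib also matches other modules that merely list ib_ipoib among their users, as the ib_cm and ib_core lines in the output above show. A stricter check compares only the first column. The sketch below assumes the usual lsmod column layout; module_loaded is a hypothetical helper name:

```shell
# Succeed only if lsmod output (read from stdin) has a row whose module name is exactly $1.
module_loaded() {
    awk -v mod="$1" '$1 == mod { found = 1 } END { exit !found }'
}

# On a live system, load the driver only when it is missing:
#   lsmod | module_loaded ib_ipoib || sudo modprobe ib_ipoib
```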

Next list the InfiniBand interface ib0 on the host using the ifconfig command:

[host-device ~]$ ifconfig ib0

ib0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 2044

Infiniband hardware address can be incorrect! Please read BUGS section in ifconfig(8).

infiniband A0:00:02:20:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00 txqueuelen 1024 (InfiniBand)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Configure 10.0.0.1 as the IP address on this interface:

[host-device ~]$ sudo ifconfig ib0 10.0.0.1/24

[host-device ~]$ ifconfig ib0

ib0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 2044

inet 10.0.0.1 netmask 255.255.255.0 broadcast 10.0.0.255

Infiniband hardware address can be incorrect! Please read BUGS section in ifconfig(8).

infiniband A0:00:02:20:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00 txqueuelen 1024 (InfiniBand)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10 bytes 2238 (2.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Similarly on the target, configure 10.0.0.2 as the IP address on this InfiniBand interface:

[knl-sb2 ~]$ ifconfig ib0

ib0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 2044

Infiniband hardware address can be incorrect! Please read BUGS section in ifconfig(8).

infiniband A0:00:02:20:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00 txqueuelen 1024 (InfiniBand)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[knl-sb2 ~]$ sudo ifconfig ib0 10.0.0.2/24

[knl-sb2 ~]$ ifconfig ib0

ib0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 2044

inet 10.0.0.2 netmask 255.255.255.0 broadcast 10.0.0.255

Infiniband hardware address can be incorrect! Please read BUGS section in ifconfig(8).

infiniband A0:00:02:20:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00 txqueuelen 1024 (InfiniBand)

RX packets 3 bytes 168 (168.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10 bytes 1985 (1.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Finally, verify the new IP address 10.0.0.2 of the target using the ping command on the host to test the connectivity:

[host-device ~]$ ping 10.0.0.2

PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=0.443 ms

64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.410 ms

<CTRL-C>

Similarly, from the target, verify the new IP address 10.0.0.1 of the host:

[knl-sb2 ~]$ ping 10.0.0.1

PING 10.0.0.1 (10.0.0.1) 56(84) bytes of data.

64 bytes from 10.0.0.1: icmp_seq=1 ttl=64 time=0.313 ms

64 bytes from 10.0.0.1: icmp_seq=2 ttl=64 time=0.359 ms

64 bytes from 10.0.0.1: icmp_seq=3 ttl=64 time=0.375 ms

<CTRL-C>
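The /24 suffix in the ifconfig commands above is what produces the netmask 255.255.255.0 and the broadcast address 10.0.0.255 in the interface listings. The relationship can be sketched in plain shell arithmetic. The helper names are hypothetical, and the sketch assumes a POSIX shell with 64-bit arithmetic:

```shell
# Render a 32-bit integer as a dotted quad.
int_to_quad() {
    printf '%d.%d.%d.%d\n' \
        $(( ($1 >> 24) & 255 )) $(( ($1 >> 16) & 255 )) \
        $(( ($1 >> 8) & 255 ))  $(( $1 & 255 ))
}

# Netmask for a prefix length: the top $1 bits set, the rest clear.
netmask_for() {
    int_to_quad $(( (4294967295 << (32 - $1)) & 4294967295 ))
}

# Broadcast address for a dotted-quad address ($1) and prefix length ($2):
# the address with all host bits set.
broadcast_for() {
    mask=$(( (4294967295 << (32 - $2)) & 4294967295 ))
    old_ifs=$IFS; IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    int_to_quad $(( addr | (4294967295 ^ mask) ))
}

netmask_for 24              # 255.255.255.0
broadcast_for 10.0.0.1 24   # 10.0.0.255
```

Both addresses used in this tutorial, 10.0.0.1 and 10.0.0.2, fall in the same /24 network, which is why the two machines can reach each other directly over ib0.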

SSH Password-Less Setting (Optional)

When offloading a workload to the target machine, Secure Shell (SSH) requires the target’s password to log on to the target and execute the workload. To let this happen without manual intervention, enable SSH login without a password. First generate a pair of authentication keys on the host without entering a passphrase:

[host-device ~]$ ssh-keygen -t rsa

Then append the host’s new public key to the target’s list of authorized keys using the ssh-copy-id command:

[host-device ~]$ ssh-copy-id @10.0.0.2

Offload over Fabric

At this point, the high-speed network is enabled and functional. To enable OoF functionality, you need to install Intel® Parallel Studio XE 2017 for Linux on the host. Next set up your shell environment using:

[host-device]$ source /opt/intel/parallel_studio_xe_2017.0.035/psxevars.sh intel64

Intel(R) Parallel Studio XE 2017 for Linux*

Copyright (C) 2009-2016 Intel Corporation. All rights reserved.

Below is the sample program used to test the OoF functionality. This sample program allocates and initializes a constant A and buffers x, y, z on the host, and then offloads the computation to the target using OpenMP device construct directives (#pragma omp target map…).

The target directive creates a device data environment (on the target). At runtime, values for the variables x, y, and A are copied to the target before the computation begins, and values of variable y are copied back (to the host) when the target completes the computation. In this example, the target parses CPU information from the lscpu command, and spawns a team of OpenMP threads to compute a vector scalar product and add the result to a vector.

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <omp.h>

int main(int argc, char* argv[])

{

int i, num = 1024;

float A = 2.0f;

float *x = (float*) malloc(num*sizeof(float));

float *y = (float*) malloc(num*sizeof(float));

float *z = (float*) malloc(num*sizeof(float));

for (i=0; i<num; i++)

{

x[i] = i;

y[i] = 1.5f;

z[i] = A*x[i] + y[i];

}

printf("Workload is executed in a system with CPU information:\n");

#pragma omp target map(to: x[0:num], A) \

map(tofrom: y[0:num])

{

char command[64];

strcpy(command, "lscpu | grep Model");

system(command);

int done = 0;

#pragma omp parallel for

for (i=0; i<num; i++)

{

y[i] = A*x[i] + y[i];

if ((omp_get_thread_num() == 0) && (done == 0))

{

int numthread = omp_get_num_threads();

printf("Total number of threads: %d\n", numthread);

done = 1;

}

}

}

/* Pass only if every element matches the host reference */

int passed = 1;

for (i=0; i<num; i++)

if (z[i] != y[i]) passed = 0;

if (passed == 1)

printf("PASSED!\n");

else

printf("FAILED!\n");

free(x);

free(y);

free(z);

return 0;

}

Compile this OpenMP program with the Intel compiler option -qoffload-arch=mic-avx512 to indicate that the offload portion is built for the 2nd generation Intel Xeon Phi processor. Prior to executing the program, set the environment variable OFFLOAD_NODES to the IP address of the target machine, in this case 10.0.0.2, to indicate that the high-speed network is to be used.

[host-device]$ icc -qopenmp -qoffload-arch=mic-avx512 -o OoF-OpenMP-Affinity OoF-OpenMP-Affinity.c

[host-device]$ export OFFLOAD_NODES=10.0.0.2

[host-device]$ ./OoF-OpenMP-Affinity

Workload is executed in a system with CPU information:

Model: 87

Model name: Intel(R) Xeon Phi(TM) CPU 7250 @000000 1.40GHz

PASSED!

Total number of threads: 268
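Because export leaves OFFLOAD_NODES set for the rest of the shell session, it can be convenient to set it for a single run only. A minimal sketch, using a hypothetical wrapper named run_on_target and relying only on the standard env utility:

```shell
# Run a command with OFFLOAD_NODES set just for that command.
# $1 is the target address; the remaining arguments are the command line.
run_on_target() {
    node=$1
    shift
    env OFFLOAD_NODES="$node" "$@"
}

# With the binary built above:
#   run_on_target 10.0.0.2 ./OoF-OpenMP-Affinity
```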

Note that the offload processing is internally handled by the Intel® Coprocessor Offload Infrastructure (Intel® COI). By default, the offload code runs with all OpenMP threads available in the target. The target has 68 cores, and the Intel COI daemon running on one core of the target leaves the remaining 67 cores available; the total number of threads is 268 (4 threads/core). You can use the coitrace command to trace all Intel COI API invocations:

[host-device]$ coitrace ./OoF-OpenMP-Affinity

COIEngineGetCount [ThID:0x7f02fdd04780]

in_DeviceType = COI_DEVICE_MIC

out_pNumEngines = 0x7fffc8833e00 0x00000001 (hex) : 1 (dec)

COIEngineGetHandle [ThID:0x7f02fdd04780]

in_DeviceType = COI_DEVICE_MIC

in_EngineIndex = 0x00000000 (hex) : 0 (dec)

out_pEngineHandle = 0x7fffc8833de8 0x7f02f9bc4320

Workload is executed in a system with CPU information:

COIEngineGetHandle [ThID:0x7f02fdd04780]

in_DeviceType = COI_DEVICE_MIC

in_EngineIndex = 0x00000000 (hex) : 0 (dec)

out_pEngineHandle = 0x7fffc88328e8 0x7f02f9bc4320

COIEngineGetInfo [ThID:0x7f02fdd04780]

in_EngineHandle = 0x7f02f9bc4320

in_EngineInfoSize = 0x00001440 (hex) : 5184 (dec)

out_pEngineInfo = 0x7fffc8831410

DriverVersion:

DeviceType: COI_DEVICE_KNL

NumCores: 68

NumThreads: 272

<truncate here>
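The trace reports 68 cores and 272 hardware threads, while the program printed 268. The difference follows from the core reserved for the Intel COI daemon. The arithmetic can be sketched as follows; the helper name is hypothetical, and the reserved-core count of 1 is taken from this tutorial's explanation rather than queried from the runtime:

```shell
# Derive the default offload thread count from the NumCores / NumThreads
# lines of a coitrace listing read from stdin. $1 = cores reserved (default 1).
offload_threads_from_trace() {
    awk -v reserved="${1:-1}" '
        $1 == "NumCores:"   { cores = $2 }
        $1 == "NumThreads:" { threads = $2 }
        END {
            if (cores > 0) print (cores - reserved) * (threads / cores)
        }
    '
}

printf 'NumCores: 68\nNumThreads: 272\n' | offload_threads_from_trace   # 268
```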

OpenMP* Thread Affinity

The result from the above program shows the default number of threads (268) that run on the target; however, you can set the number of threads that run on the target explicitly. One method uses environment variables on the host to modify the target’s execution environment.

First, define a target-specific environment variable prefix, and then add this prefix to the OpenMP thread affinity environment variables. For example, the following environment variable settings configure the offload runtime to use 8 threads on the target:

[host-device]$ export MIC_ENV_PREFIX=PHI

[host-device]$ export PHI_OMP_NUM_THREADS=8
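To see which settings would reach the target under this scheme, the prefix handling can be modeled in a few lines of shell. This is only an illustrative sketch of the behavior described above, under the assumption that a variable named <prefix>_NAME is passed to the target as NAME; target_view is a hypothetical helper and not part of the offload runtime:

```shell
# Given `env`-style NAME=value lines on stdin, print the ones carrying the
# prefix $1, with "<prefix>_" removed, i.e. as the target is assumed to see them.
target_view() {
    sed -n "s/^${1}_\([A-Za-z_][A-Za-z0-9_]*=\)/\1/p"
}

# On the host, after the exports above:
#   env | target_view "$MIC_ENV_PREFIX"
```

Variables without the prefix, such as HOME or PATH, do not appear in the output of this model.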

The Intel OpenMP runtime extension environment variables KMP_PLACE_THREADS and KMP_AFFINITY can be used to bind threads to physical processing units, that is, cores. Refer to the section Thread Affinity Interface in the Intel® C++ Compiler User and Reference Guide for more information. For example, the following environment variable settings configure the offload runtime to use 8 threads close to each other:

[host-device]$ export PHI_KMP_AFFINITY=verbose,granularity=thread,compact

[host-device]$ ./OoF-OpenMP-Affinity

You can also control affinity with the standard OpenMP OMP_PROC_BIND environment variable. For example, to reproduce the previous example and run 8 threads close to each other using OMP_PROC_BIND, use the following:

[host-device]$ export MIC_ENV_PREFIX=PHI

[host-device]$ export PHI_KMP_AFFINITY=verbose

[host-device]$ export PHI_OMP_PROC_BIND=close

[host-device]$ export PHI_OMP_NUM_THREADS=8

[host-device]$ ./OoF-OpenMP-Affinity

Or run with 8 threads and spread them out using:

[host-device]$ export PHI_OMP_PROC_BIND=spread

[host-device]$ ./OoF-OpenMP-Affinity

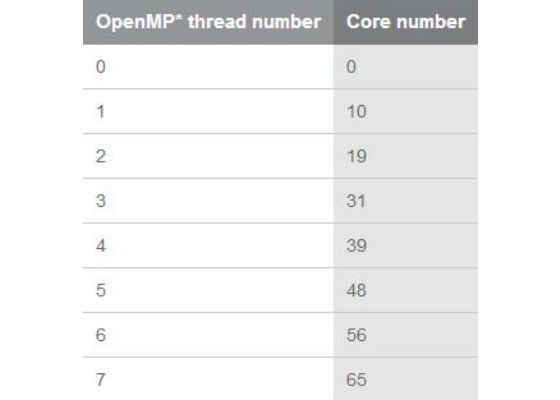

The result is shown in the following table:

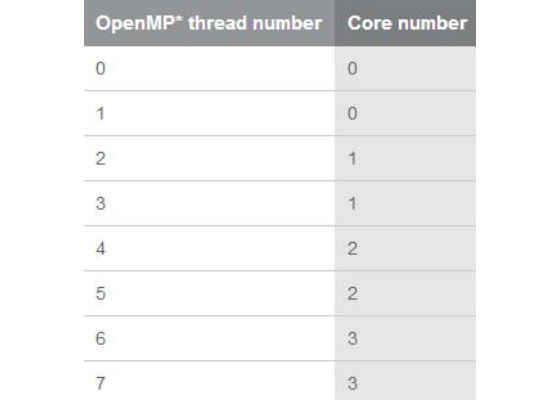

To run 8 threads, 2 threads/core (4 cores total) use:

[host-device]$ export PHI_OMP_PROC_BIND=close

[host-device]$ export PHI_OMP_PLACES="cores(4)"

[host-device]$ export PHI_OMP_NUM_THREADS=8

[host-device]$ ./OoF-OpenMP-Affinity

The result is shown in the following table:

Summary

This tutorial shows details on how to set up and run an OoF application. Hardware and software installations were presented. Mellanox InfiniBand Host Channel Adapters were used in this example, but Intel OPA can be used instead. The sample code was an OpenMP offload programming model application that demonstrates running on an Intel Xeon processor host and offloading the computation to an Intel Xeon Phi processor target using a high-speed network. This tutorial also showed how to compile and run the offload program for the Intel Xeon Phi processor and control the OpenMP Thread Affinity on the Intel Xeon Phi processor.


Source:https://software.intel.com/en-us/articles/offload-over-fabric-to-intel-xeon-phi-processor-tutorial