Intro to Device Side AVC Motion Estimation

Introduction

This article introduces the new device-side H.264/Advanced Video Coding (AVC) motion estimation extension for OpenCL*, available in the implementation for Intel Processor Graphics GPUs. The video motion estimation (VME) hardware has been accessible for many years as part of the Intel® Media SDK video codecs, and through built-in functions invoked from the host. The new cl_intel_device_side_avc_motion_estimation extension (available in Linux driver SRB4) provides a fine-grained interface for accessing the hardware-supported AVC VME functionality from within kernels.

VME access with Intel extensions to the OpenCL* standard was previously described in:

- Intro to Motion Estimation Extension for OpenCL*

- Intro to Advanced Motion Estimation Extension for OpenCL*

Motion Estimation Overview

Motion estimation is the process of searching, block by block, for the local translations that best explain the temporal differences between one frame and another. Without hardware acceleration this search can be expensive, and having it accelerated in hardware opens up new ways of approaching many algorithms.
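To make that cost concrete, here is a minimal CPU sketch in C of exhaustive block matching with a sum of absolute differences (SAD) criterion. It is a generic illustration, not the VME algorithm; the function names, the 8-bit row-major luma layout, and the square search range are assumptions made for this example.

```c
#include <assert.h>
#include <limits.h>
#include <stdlib.h>

/* Sum of absolute differences (SAD) between the bsize x bsize block at
 * (sx, sy) in src and the block displaced by (dx, dy) in ref. Both frames
 * are 8-bit luma, row-major, with row stride `width`. */
static unsigned block_sad(const unsigned char *src, const unsigned char *ref,
                          int width, int sx, int sy, int dx, int dy, int bsize)
{
    unsigned sad = 0;
    for (int y = 0; y < bsize; ++y)
        for (int x = 0; x < bsize; ++x) {
            int a = src[(sy + y) * width + (sx + x)];
            int b = ref[(sy + dy + y) * width + (sx + dx + x)];
            sad += (unsigned)abs(a - b);
        }
    return sad;
}

/* Exhaustive integer-pixel search over [-range, range] in x and y for one
 * block. Candidates that would read outside the reference frame are
 * skipped; on ties the first candidate in scan order wins. */
static unsigned full_search(const unsigned char *src, const unsigned char *ref,
                            int width, int height, int sx, int sy, int bsize,
                            int range, int *best_dx, int *best_dy)
{
    assert(sx >= 0 && sy >= 0 && sx + bsize <= width && sy + bsize <= height);
    unsigned best = UINT_MAX;
    *best_dx = 0;
    *best_dy = 0;
    for (int dy = -range; dy <= range; ++dy)
        for (int dx = -range; dx <= range; ++dx) {
            int rx = sx + dx, ry = sy + dy;
            if (rx < 0 || ry < 0 || rx + bsize > width || ry + bsize > height)
                continue;
            unsigned sad = block_sad(src, ref, width, sx, sy, dx, dy, bsize);
            if (sad < best) {
                best = sad;
                *best_dx = dx;
                *best_dy = dy;
            }
        }
    return best;
}
```

With 16x16 blocks and a hypothetical ±16 pixel range, each block evaluates 33 × 33 = 1089 candidates of 256 pixels each, about 279,000 absolute differences per macroblock, or roughly 2.3 billion for the 8160 macroblocks of a 1920x1088 frame.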

For 6th Generation Core (Skylake) processors, the Compute Architecture of Intel® Processor Graphics Gen9 guide provides more hardware details. As a quick overview:

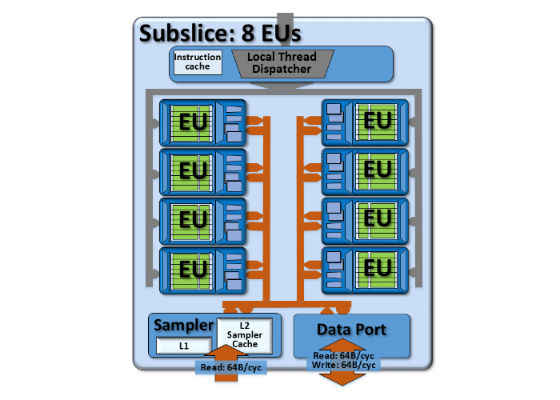

- The VME hardware is part of the sampler blocks included in every subslice (bottom left of the image above). Intel subgroups operate at the subslice level, and the device-side VME extension is also built on subgroups.

- Gen9 hardware usually contains 3 subslices per slice (8 EUs per subslice, 24 EUs per slice).

- Processors contain varying numbers of slices. To determine the number, combine information from ark.intel.com (which gives the name of the processor graphics GPU) with sites such as notebookcheck.com (which give more detail on that processor graphics model).
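The slice and subslice figures above reduce to simple arithmetic. The C sketch below derives nominal subslice and EU counts from a slice count, assuming the Gen9 layout stated here (3 subslices and 24 EUs per slice) and one VME-capable sampler per subslice; actual parts can differ from this nominal layout, and the names are hypothetical.

```c
#include <assert.h>

/* Nominal Gen9 layout from the text: 3 subslices and 24 EUs per slice. */
enum { GEN9_SUBSLICES_PER_SLICE = 3, GEN9_EUS_PER_SLICE = 24 };

typedef struct {
    int subslices;        /* assumed: one sampler (and VME unit) per subslice */
    int eus;              /* execution units in total */
    int eus_per_subslice;
} gen9_config;

/* Nominal counts for a part with the given number of slices. */
static gen9_config gen9_from_slices(int slices)
{
    assert(slices > 0);
    gen9_config c;
    c.subslices = slices * GEN9_SUBSLICES_PER_SLICE;
    c.eus = slices * GEN9_EUS_PER_SLICE;
    c.eus_per_subslice = GEN9_EUS_PER_SLICE / GEN9_SUBSLICES_PER_SLICE;
    return c;
}
```

For example, a 3-slice part would nominally provide 9 subslices, each with its own sampler, and 72 EUs.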

Motion search is one of the core operations of many modern codecs, and it has many other applications. In video processing it can be used in filters such as video stabilization and frame interpolation. It can also be a helpful pre-processing step for encoding, providing information about motion complexity, where in the frame motion occurs, and so on.

From a computer vision perspective, the motion vectors can serve as a starting point for algorithms based on optical flow.

Currently available Intel® Processor Graphics GPU hardware only supports AVC/H.264 motion estimation. HEVC/H.265 motion estimation is significantly more complex. However, this does not mean that AVC motion estimation is relevant only to AVC: the candidates returned by VME from the simpler AVC motion search can be used to narrow the search space for a second, more detailed pass where needed.
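A minimal sketch of that two-pass idea, assuming a CPU second pass written in C: a coarse vector (which in practice would come from VME) seeds a small window search around it. The window radius, the function names, and the frame layout are illustrative assumptions; a real HEVC-oriented second pass would also consider other block sizes.

```c
#include <assert.h>
#include <limits.h>
#include <stdlib.h>

/* SAD between the bsize x bsize block at (sx, sy) in src and the block at
 * (sx + dx, sy + dy) in ref; 8-bit row-major frames with stride `width`. */
static unsigned sad_at(const unsigned char *src, const unsigned char *ref,
                       int width, int sx, int sy, int dx, int dy, int bsize)
{
    unsigned sad = 0;
    for (int y = 0; y < bsize; ++y)
        for (int x = 0; x < bsize; ++x)
            sad += (unsigned)abs(src[(sy + y) * width + sx + x] -
                                 ref[(sy + dy + y) * width + sx + dx + x]);
    return sad;
}

/* Second pass: search only the (2r+1) x (2r+1) window centered on a coarse
 * candidate (cand_dx, cand_dy), e.g. one reported by a first VME pass.
 * Candidates that fall outside the reference frame are skipped. */
static unsigned refine_around(const unsigned char *src, const unsigned char *ref,
                              int width, int height, int sx, int sy, int bsize,
                              int cand_dx, int cand_dy, int r,
                              int *best_dx, int *best_dy)
{
    assert(sx >= 0 && sy >= 0 && sx + bsize <= width && sy + bsize <= height);
    unsigned best = UINT_MAX;
    *best_dx = cand_dx;
    *best_dy = cand_dy;
    for (int dy = cand_dy - r; dy <= cand_dy + r; ++dy)
        for (int dx = cand_dx - r; dx <= cand_dx + r; ++dx) {
            int rx = sx + dx, ry = sy + dy;
            if (rx < 0 || ry < 0 || rx + bsize > width || ry + bsize > height)
                continue;
            unsigned sad = sad_at(src, ref, width, sx, sy, dx, dy, bsize);
            if (sad < best) {
                best = sad;
                *best_dx = dx;
                *best_dy = dy;
            }
        }
    return best;
}
```

With r = 2, the second pass evaluates 25 candidates per block, compared with 1089 for a full ±16 pixel search.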

The Device-Side Interface

Previous implementations focused on built-in functions called from the host. The device-side implementation requires kernel code for several phases of setup:

1. General initialization (intel_sub_group_avc_ime_initialize)

2. Operation configuration (including inter and intra costs and other properties where needed)

(from vme_basic.cl)

```c
// srcCoord, partition_mask, sad_adjustment and refCoord are kernel
// variables/arguments defined elsewhere in the sample.
intel_sub_group_avc_ime_payload_t payload =
    intel_sub_group_avc_ime_initialize( srcCoord, partition_mask, sad_adjustment );

payload = intel_sub_group_avc_ime_set_single_reference(
    refCoord, CLK_AVC_ME_SEARCH_WINDOW_EXHAUSTIVE_INTEL, payload );

// Motion vector cost: penalty relative to cost_center (0 = zero vector)
ulong cost_center = 0;
uint2 packed_cost_table = intel_sub_group_avc_mce_get_default_medium_penalty_cost_table();
uchar search_cost_precision = CLK_AVC_ME_COST_PRECISION_QPEL_INTEL;

payload = intel_sub_group_avc_ime_set_motion_vector_cost_function(
    cost_center, packed_cost_table, search_cost_precision, payload );
```
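Conceptually, the cost function trades distortion against how far a vector strays from cost_center. The C sketch below uses a simple linear penalty, cost = SAD + lambda * (|mvx - cx| + |mvy - cy|) with vectors in quarter-pel units, purely to illustrate that trade-off. It is an assumption for illustration: the hardware derives its penalty from the packed cost table, and `mv_cost` and `lambda` are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Illustrative rate-distortion style cost, NOT the VME formula:
 * distortion plus a linear penalty on the distance (in quarter pels)
 * between the candidate vector and the cost center (cx, cy). */
static unsigned mv_cost(unsigned sad, int mvx, int mvy, int cx, int cy,
                        unsigned lambda)
{
    unsigned dist = (unsigned)(abs(mvx - cx) + abs(mvy - cy));
    return sad + lambda * dist;
}
```

With lambda = 2 and the center at zero, a vector at (40, 0) with SAD 100 costs 180, while a vector at (4, 0) with SAD 120 costs 128, so the shorter vector wins despite its higher distortion. Penalties of this kind discourage noisy, far-reaching vectors in flat or ambiguous regions.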

Next comes an evaluation phase (intel_sub_group_avc_ime_evaluate*), after which results can be extracted with the intel_sub_group_avc_ime_get* functions.

```c
// Evaluate: run the integer motion search on the VME hardware
intel_sub_group_avc_ime_result_t result =
    intel_sub_group_avc_ime_evaluate_with_single_reference(
        srcImg, refImg, vme_samp, payload );

// Process results
long mvs = intel_sub_group_avc_ime_get_motion_vectors( result );           // packed motion vectors
ushort sads = intel_sub_group_avc_ime_get_inter_distortions( result );     // distortion (SAD)
uchar major_shape = intel_sub_group_avc_ime_get_inter_major_shape( result );
uchar minor_shapes = intel_sub_group_avc_ime_get_inter_minor_shapes( result );
uchar2 shapes = (uchar2)( major_shape, minor_shapes );                     // OpenCL C vector literal
uchar directions = intel_sub_group_avc_ime_get_inter_directions( result );
```
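Once the motion vectors are copied back to the host, they have to be unpacked. The C sketch below assumes, for illustration only, that the low 32 bits of each value hold one vector as two signed 16-bit components (x in bits 0-15, y in bits 16-31) counted in quarter pixels; confirm the actual layout against the extension specification before relying on it. The names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* ASSUMED layout: x in bits 0-15, y in bits 16-31, each a signed 16-bit
 * quarter-pel count. Check the extension specification for the real one. */
typedef struct { int x, y; } mv_qpel;

/* Portable sign extension of the low 16 bits of v. */
static int sign_extend16(uint64_t v)
{
    v &= 0xFFFFu;
    return v >= 0x8000u ? (int)v - 0x10000 : (int)v;
}

static mv_qpel unpack_mv(uint64_t packed)
{
    mv_qpel mv;
    mv.x = sign_extend16(packed);
    mv.y = sign_extend16(packed >> 16);
    return mv;
}

/* Quarter-pel units to pixels. */
static double qpel_to_pixels(int q) { return q / 4.0; }
```

Under this assumed layout, a packed value of 0xFFFA000A decodes to (10, -6) quarter pels, i.e. (2.5, -1.5) pixels.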

The sample also implements sub-pixel refinement of the motion estimates, using similar steps:

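```c
// Sub-pixel refinement seeded with the IME results above. pixel_mode is
// defined elsewhere in the sample and selects the refinement precision
// (for example CLK_AVC_ME_SUBPIXEL_MODE_QPEL_INTEL). Distinct names avoid
// redeclaring payload/result with a different type in the same scope.
intel_sub_group_avc_ref_payload_t ref_payload =
    intel_sub_group_avc_fme_initialize(
        srcCoord, mvs, major_shape, minor_shapes,
        directions, pixel_mode, sad_adjustment );

ref_payload = intel_sub_group_avc_ref_set_motion_vector_cost_function(
    cost_center, packed_cost_table, search_cost_precision, ref_payload );

intel_sub_group_avc_ref_result_t ref_result =
    intel_sub_group_avc_ref_evaluate_with_single_reference(
        srcImg, refImg, vme_samp, ref_payload );

// Replace the integer-pel results with the refined ones
mvs = intel_sub_group_avc_ref_get_motion_vectors( ref_result );
sads = intel_sub_group_avc_ref_get_inter_distortions( ref_result );
```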
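The refinement step can also be illustrated on the CPU. This C sketch starts from an integer-pel vector expressed in quarter-pel units and tests every quarter-pel offset within ±2 (half a pixel) in each axis, sampling the reference with bilinear interpolation. Note the substitution: H.264 itself derives half-pel samples with a 6-tap filter and averages neighbors for quarter-pel positions, so bilinear sampling is a simplification here, and the function names and search pattern are assumptions rather than a description of how VME refines.

```c
#include <assert.h>
#include <limits.h>
#include <stdlib.h>

/* Bilinear sample of ref at quarter-pel position (qx, qy). The caller must
 * keep qx/4 + 1 < width and qy/4 + 1 inside the frame. */
static int sample_qpel(const unsigned char *ref, int width, int qx, int qy)
{
    assert(qx >= 0 && qy >= 0);
    int x = qx >> 2, y = qy >> 2, fx = qx & 3, fy = qy & 3;
    const unsigned char *p = ref + y * width + x;
    int v = (4 - fx) * (4 - fy) * p[0] + fx * (4 - fy) * p[1]
          + (4 - fx) * fy * p[width] + fx * fy * p[width + 1];
    return (v + 8) >> 4; /* weights sum to 16; round to nearest */
}

/* SAD of the bsize x bsize block at integer (sx, sy) in src against ref
 * displaced by (mvx, mvy) quarter pels. */
static unsigned sad_qpel(const unsigned char *src, const unsigned char *ref,
                         int width, int sx, int sy, int bsize, int mvx, int mvy)
{
    unsigned sad = 0;
    for (int y = 0; y < bsize; ++y)
        for (int x = 0; x < bsize; ++x) {
            int r = sample_qpel(ref, width, 4 * (sx + x) + mvx, 4 * (sy + y) + mvy);
            sad += (unsigned)abs(src[(sy + y) * width + sx + x] - r);
        }
    return sad;
}

/* Refine (*mvx, *mvy), given in quarter pels, over +/-2 quarter pels in
 * each axis; keeps the first lowest-SAD candidate in scan order. */
static unsigned refine_qpel(const unsigned char *src, const unsigned char *ref,
                            int width, int sx, int sy, int bsize,
                            int *mvx, int *mvy)
{
    int cx = *mvx, cy = *mvy;
    unsigned best = UINT_MAX;
    for (int dy = -2; dy <= 2; ++dy)
        for (int dx = -2; dx <= 2; ++dx) {
            unsigned s = sad_qpel(src, ref, width, sx, sy, bsize, cx + dx, cy + dy);
            if (s < best) {
                best = s;
                *mvx = cx + dx;
                *mvy = cy + dy;
            }
        }
    return best;
}
```

Searching quarter-pel offsets only around an already-found integer vector keeps the refinement cheap (25 candidates here), which mirrors the IME-then-refine structure of the kernel code above.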

Expected Results

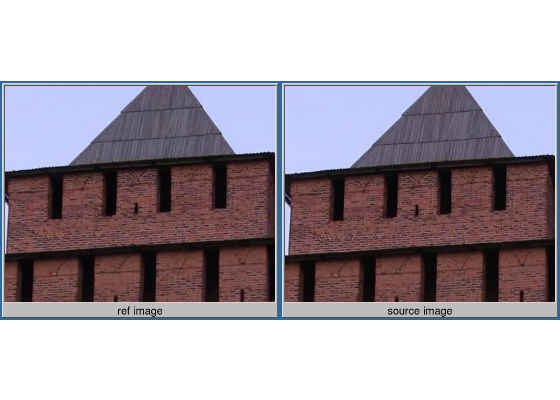

Note: only a small region of the input/output frames is used below.

The inputs are a source frame and a reference (previous) frame.

The test clip included with the sample contains a "zoom out" motion. The image below shows where the major differences are located (from an ImageMagick comparison of the source and reference images).

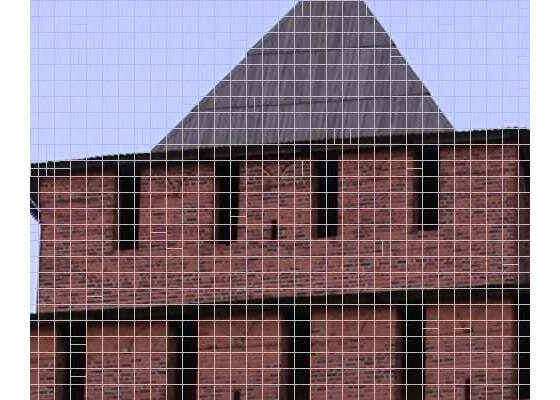

The output of the sample is an overlay of motion vectors, as shown here. Note that most of them fit the radial pattern expected from a zoom.
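The radial pattern follows from a simple model. Assume a uniform zoom by factor s about a center c, with s < 1 for a zoom out, and take a motion vector to be the offset from a source block to its match in the reference frame. Then the block at source position p matches reference position c + (p - c)/s, so its expected vector is (p - c)(1/s - 1), which lies along the line through the center. The C sketch below computes that vector and checks whether a measured vector points roughly outward from the center; the model, the sign convention, and the names are assumptions, and real clips usually mix zoom with other motion.

```c
#include <assert.h>

/* Expected vector (pixels) for the block centered at (px, py) in the source
 * frame under a uniform zoom by factor s about (cx, cy); s < 1 is a zoom out.
 * Assumed convention: source position -> matching reference position. */
static void zoom_mv(double px, double py, double cx, double cy, double s,
                    double *mvx, double *mvy)
{
    assert(s > 0.0);
    double k = 1.0 / s - 1.0;
    *mvx = (px - cx) * k;
    *mvy = (py - cy) * k;
}

/* Returns 1 if (mvx, mvy) is within the angle whose cosine is min_cos of
 * the outward radial direction (px - cx, py - cy). Squared form avoids sqrt. */
static int is_outward_radial(double px, double py, double cx, double cy,
                             double mvx, double mvy, double min_cos)
{
    assert(min_cos >= 0.0 && min_cos <= 1.0);
    double rx = px - cx, ry = py - cy;
    double dot = rx * mvx + ry * mvy;
    double norms2 = (rx * rx + ry * ry) * (mvx * mvx + mvy * mvy);
    return norms2 > 0.0 && dot > 0.0 && dot * dot >= min_cos * min_cos * norms2;
}
```

Counting how many measured vectors pass such a test gives a rough numeric counterpart to the visual check of the overlay.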

Additional outputs include AVC macroblock shape choices (shown below) and residuals.
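In AVC, the major shape describes how a 16x16 macroblock is divided into partitions. The C sketch below tabulates the four standard divisions; the enum is hypothetical, since the extension defines its own major-shape constants whose values should be checked in its specification. For 8x8, each 8x8 block may be split further into 8x4, 4x8, or 4x4 sub-partitions, which is presumably what the minor shapes describe.

```c
#include <assert.h>

/* Hypothetical enum for the four AVC macroblock partitionings; the
 * extension's own constants may use different values. */
typedef enum { SHAPE_16x16, SHAPE_16x8, SHAPE_8x16, SHAPE_8x8 } mb_major_shape;

/* Partition count and size (width x height, in pixels) within a 16x16 macroblock. */
typedef struct { int count, width, height; } mb_partitions;

static mb_partitions partitions_for(mb_major_shape s)
{
    mb_partitions p = { 1, 16, 16 };
    switch (s) {
    case SHAPE_16x16: break;
    case SHAPE_16x8: p.count = 2; p.width = 16; p.height = 8;  break;
    case SHAPE_8x16: p.count = 2; p.width = 8;  p.height = 16; break;
    case SHAPE_8x8:  p.count = 4; p.width = 8;  p.height = 8;  break;
    }
    return p;
}
```

Every division covers the full 256 pixels of the macroblock; finer shapes carry more motion vectors, which lets the search follow motion boundaries at the price of more vector data.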

Conclusion

Video motion estimation is a powerful feature that can enable new ways of approaching many algorithms for video codecs and computer vision. The search for the most representative motion vectors, which is computationally expensive on the CPU, can be offloaded to specialized hardware in the image samplers of the Intel Processor Graphics architecture. The new device-side interface (called from within a kernel) enables more flexibility and customization while potentially avoiding some of the performance costs of host-side launches.


Source: https://software.intel.com/en-us/articles/intro-ds-vme