Getting to Small Batches in Hardware Design using Simulation

In the previous part of this two-part blog, I discussed the general principle of doing work in small batches, the great benefits that it brings, and how the principle can be applied outside the traditional software development domain. In this part, I will discuss some more concrete examples of how we apply simulation to enable small batches, early adjustment, and better efficiency in hardware and system design.

I will start with large systems such as IoT networks, and then look at chip and computer design.

System-Level Small Batches

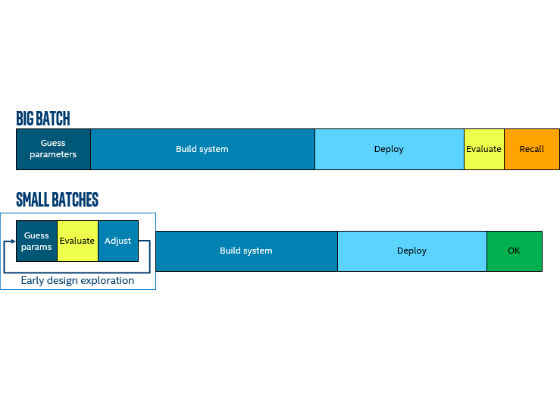

Imagine that you have decided to build a monitoring network using IoT technology. You select what looks like a useful piece of hardware for each sensor node and buy 100 of them. You develop some software to go on the IoT nodes that collects sensor readings and reports them back to your cloud server. You develop the cloud server data gathering and analysis system. Finally, the system is deployed, and data starts to flow in. Sometimes the data flow turns out to be a bit erratic, with data missing or coming in later than expected. The culprit turns out to be that purchasing went for the cheapest-possible network connection, GPRS… and you needed more bandwidth from the nodes. This wasn’t obvious until the system was deployed. Fixing such a mistake amounts to a complete recall: develop a new version of the nodes, update the software, and then go out, bring back the nodes, change their wireless subsystem, and redeploy. Not cheap, fast, easy, or popular.

You really needed that feedback about the data connection and system performance earlier, in the initial design and architecture phase.

You want to reduce the batch size of the architecture and design work, from “build entire system” to “quick feedback in the design phase”. One way to achieve that is to simulate the system with a model at a higher level of abstraction.

Instead of physical hardware boards that run actual code, you build a simulation with abstracted nodes that generate data at a certain rate. There is no need to move actual data from the nodes to the cloud; the important properties are the volume of data and its path through the various networks. This kind of model is a natural match for Intel® CoFluent™ Technology for IoT.

Using CoFluent, it is easy to set up models that let you explore the design space before committing to actual hardware and before developing the software. This provides a development model that turns designing and sizing the system into a series of small batches, getting the parameters right before moving on to detailed implementation.

The architectural feedback loop comes early, and changes are quick and easy to make since you are only changing an abstract model. The model is much smaller than the actual code, and there is no hardware to change or reconfigure. In this way, simulation enables small-batch-size work for a large-scale hardware-software system, without having to build and deploy hardware iterations.
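To give a feel for how small such an abstract model can be, here is a minimal sketch in plain Python. It is not CoFluent and does not use any CoFluent API. The function name, the report size, the reporting interval, and the link throughputs are all made-up assumptions for illustration. The only things modeled are how much data a node produces and how fast its link can drain it.

```python
def simulate_node(bytes_per_report, report_interval_s, link_bytes_per_s, duration_s):
    """Step through time in one-second ticks and track unsent data on one node.

    The node queues one report every `report_interval_s` seconds, and the link
    drains up to `link_bytes_per_s` bytes per second. No real data is moved;
    only volumes are tracked. Returns (peak_backlog, final_backlog) in bytes.
    """
    backlog = 0.0
    peak = 0.0
    for t in range(duration_s):
        if t % report_interval_s == 0:
            backlog += bytes_per_report          # a new report is queued
        peak = max(peak, backlog)
        backlog = max(0.0, backlog - link_bytes_per_s)  # link drains the queue
    return peak, backlog


if __name__ == "__main__":
    # Hypothetical link options; the throughput figures are illustrative only.
    candidate_links = {"slow link": 5_000, "faster link": 20_000}
    for name, rate in candidate_links.items():
        peak, final = simulate_node(
            bytes_per_report=60_000,
            report_interval_s=10,
            link_bytes_per_s=rate,
            duration_s=100,
        )
        print(f"{name}: peak backlog {peak:.0f} B, final backlog {final:.0f} B")
```

With these invented numbers, the slow link falls further behind with every report and ends the run with data still queued, while the faster link empties its queue between reports. Changing a parameter and rerunning takes seconds, which is the small-batch feedback loop you want in the architecture phase.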

Computer Architecture and Small Batches

Next, let’s look at how processors, systems-on-chip (SoCs), and other chips are designed. Here, simulation is basically the standard way of working, and has been for a long time for anything even moderately complex. Why? It is all about how hard it is to design and build hardware that works.

The cost of manufacturing a test chip depends on the process node, but it is generally estimated to be in the millions of dollars for a leading-edge process. Not to mention the very long time it takes to implement all the details of the hardware and get working silicon out of manufacturing. Thus, if a designer relied on the software model of “code a little, test a little”, we would never finish anything.

In many ways, physically building a chip is the definition of a “big batch” – especially with the increased level of integration we are seeing for each new generation. All the components have to be in place before a chip can be manufactured and tested. Not the ideal way to do design exploration. Chip design is a long and complex process if you look at it all the way from designing individual IP blocks to the integration of a complete SoC. So let’s break it up a bit.

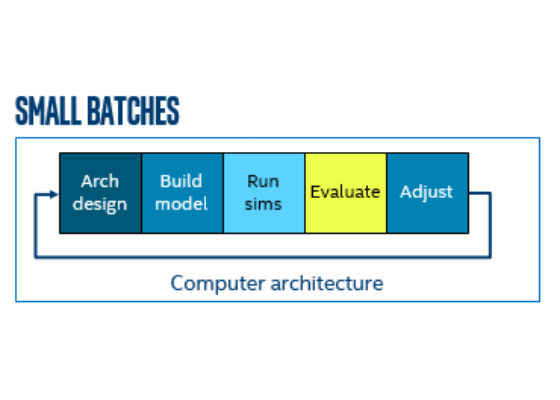

In general, the architecture of computer processor cores and processing blocks is done by using “cycle accurate” architecture simulators.

Indeed, the software simulator is often the primary tool for computer architects. By using a simulator, you get small batches very naturally – the design is now just software and can be changed as easily as software changes.

Changes are made to an architecture, the model is updated to reflect the change, and then simulations are run based on traces, software, benchmarks, or whatever other inputs can be obtained and executed against the model. This provides information to the architects, who can then rework the design, update the model, and repeat. Using computer-based simulation for computer architecture work has a long history, going back 60 years or so to the late 1950s when IBM* used a simulator to architect the world’s first pipelined processor design for the famous 7030 “Stretch” supercomputer. There are many different approaches to computer architecture simulation, which I will not go into in detail here. More analysis and reading can be found here, here, and here (and in the various resources linked from those blog posts).
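The change-model-rerun loop can be illustrated with a toy example. The sketch below is a minimal trace-driven model of a direct-mapped cache in Python. It is far simpler than a real cycle-accurate simulator and only counts hits. The function name, the synthetic trace, and the cache sizes are assumptions chosen for illustration, not taken from any real design.

```python
def hit_rate(trace, num_lines, line_bytes=64):
    """Replay a list of byte addresses through a direct-mapped cache model.

    Each cache line remembers which memory block it currently holds.
    Returns the fraction of accesses that hit in the cache.
    """
    if not trace:
        raise ValueError("trace must contain at least one address")
    lines = [None] * num_lines           # block number held by each line
    hits = 0
    for address in trace:
        block = address // line_bytes    # which memory block is accessed
        index = block % num_lines        # the single line it can map to
        if lines[index] == block:
            hits += 1
        else:
            lines[index] = block         # miss: fill the line
    return hits / len(trace)


if __name__ == "__main__":
    # Synthetic trace: four sweeps over an 8 KiB array in 8-byte steps.
    trace = [i * 8 for i in range(1024)] * 4
    for num_lines in (64, 128, 256):     # candidate cache sizes to compare
        print(f"{num_lines} lines: hit rate {hit_rate(trace, num_lines):.3f}")
```

On this synthetic trace, the 64-line configuration gets a hit rate of 0.875 because the array does not fit and every sweep evicts its own data, while 128 and 256 lines both reach about 0.969. An architect would read that as "doubling once helps, doubling again does not", and would learn it from a parameter change rather than from a new chip.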

Hardware-Software Interface Small Batches

In addition to the architecture design that determines just how an IP block should do its work for maximum performance and minimal power consumption, there is also design work needed on the software interface of the block. The drivers and other support software have to be written in order to make the IP blocks and the chip actually useful and valuable.

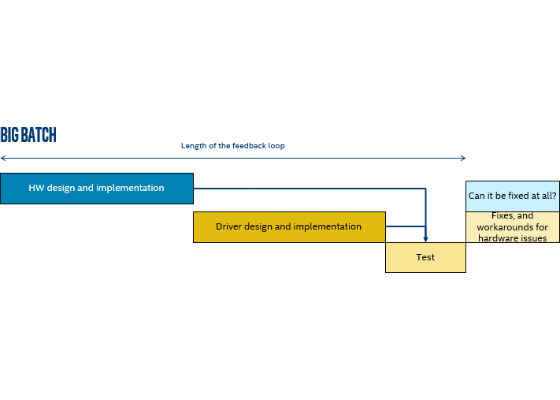

Once upon a time (and sometimes even today), the hardware-software integration was done as a big batch.

This approach leads to late feedback or no feedback on a hardware design from the software team, which in turn might result in hardware that is hard for the software stack to program and use. Here is one example from a blog post I did a few years ago on the topic of iterative hardware-software interface design, quoting the Windows driver team:

If every hardware engineer just understood that write-only registers make debugging almost impossible, our job would be a lot easier.

Many products are designed with registers that can be written, but not read. This makes the hardware design easier, but it means there is no way to snapshot the current state of the hardware, or do a debug dump of the registers, or do read-modify-write operations.

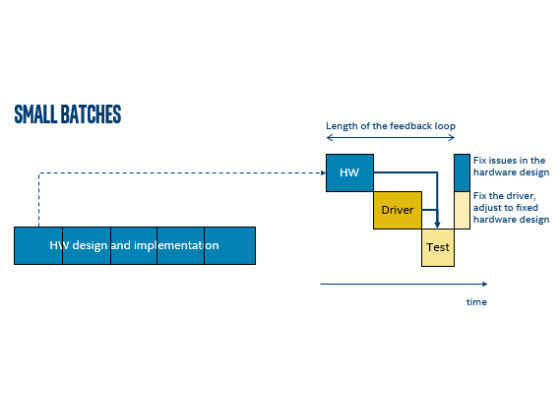

If a piece of hardware is difficult to program, it is often less successful in the market – without supporting software, how is the value exposed to users? Thus, we need to get into small-batch mode for the hardware-software integration and get the feedback loop going as early as possible.

Using a simulator, it is possible to build a little, program a little, and have a short feedback loop with plenty of opportunities to adjust both the hardware and the software to create a better system.

Such simulations are not necessarily cycle-accurate like the aforementioned architecture simulators. Indeed, for work at the hardware-software interface, it is often better to use a fast functional simulator such as Simics, one that can run large software loads and provide the full BIOS + OS context for drivers and applications.
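To make the write-only register complaint concrete, here is a minimal sketch of a functional register model and a driver helper in Python. It does not use the Simics API. The `Register` class, the `set_bits` helper, and the bit values are hypothetical. The model also raises an error on a read of a write-only register, which is a modeling choice made here to surface the problem early; real hardware might simply return zeros or stale data.

```python
class Register:
    """Functional model of one 32-bit device register (behavior only, no timing)."""

    def __init__(self, readable=True, reset_value=0):
        self.readable = readable
        self.value = reset_value

    def write(self, value):
        self.value = value & 0xFFFFFFFF  # keep the stored value to 32 bits

    def read(self):
        if not self.readable:
            # Modeling choice (an assumption): fail loudly instead of
            # returning an undefined value as real hardware might.
            raise PermissionError("register is write-only")
        return self.value


def set_bits(register, mask):
    """Driver helper: read-modify-write that sets bits without clearing others."""
    register.write(register.read() | mask)


if __name__ == "__main__":
    control = Register(readable=True)
    control.write(0x1)
    set_bits(control, 0x4)
    print(hex(control.read()))           # both bits are now set

    write_only_control = Register(readable=False)
    write_only_control.write(0x1)
    try:
        set_bits(write_only_control, 0x4)
    except PermissionError as error:
        print("driver cannot update the register safely:", error)
```

The driver author hits the problem the first time the helper runs against the model, while the register map is still cheap to change. That is the kind of feedback the hardware team would otherwise get only after silicon arrives.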

Integrating in Small Batches

The next step after building a set of IP blocks and their respective software stacks is integrating them all into a system (usually manufactured as a system-on-a-chip, an SoC). Instead of a big-bang, big-batch integration where everything comes together at the end of the project, integration should be performed, tested, and validated in small steps. Integrate a few blocks, check that they work, and then build out with more and more blocks over time. How to do that with a virtual platform was described in some depth in my previous blog post here on the Intel® Developer Zone.

Summary

In this pair of blog posts, I discussed the small batches principle and how doing work in small batches improves product quality, reduces waste, and makes development less risky. It is an alternative formulation of “Agile” that is easier to apply as a guiding principle to areas outside of software. Looking specifically at hardware, hardware-software, and systems development, simulation at various levels of abstraction can be used to get to small batches. Simulation removes some of the “hard” from hardware, and makes it easier to build things in stages and quickly iterate on a design.

For more Intel IoT resources and tools, please visit the Intel® Developer Zone.

Source: https://software.intel.com/en-us/blogs/2017/08/24/small-batches-in-hardware-design-using-simulation