DeepMask Installation and Annotation Format for Satellite Imagery Project (2)

When we look at satellite images of refugee camps, we can easily distinguish between shelters and other different objects. If we want to do analysis on the basis of this information, it becomes a really laborious task due to the presence of hundreds of different objects and thus results in a considerable decrease in efficiency. To avoid this, we want to train our computers so that they can recognize different objects in the given images efficiently.

For machines, an image is just an array of numbers. Helping them recognize the different variations of a single object is a really complicated task. Objects and scenes vary almost without limit in real-world scenarios. A single object can have different shapes and appearances, colors and textures, sizes and positions. Combined with the inherent complexity of real-world settings (such as variable backgrounds and lighting conditions), this makes it really difficult for machines to recognize different objects in images.

Deep learning can serve our purpose here. It is a branch of machine learning built on algorithms inspired by the human nervous system, known as artificial neural networks. Rather than requiring hand-defined rules for each variation of an object, neural networks are relatively simple architectures with millions of parameters, and these parameters are trained rather than defined.

The training is done by giving these networks millions of example images together with annotations or labels that describe the content of each image. After seeing a large number of example images, the networks can start recognizing unseen variations of objects too.

But there is a catch here!

We don't have millions of images of refugee camps. No problem, transfer learning can be our solution.

Let's move ahead by understanding: what is transfer learning?

Let's start with a real-world example. A teacher has years of experience in a particular subject and, in each lecture, tries to convey a precise overview of it to the students. The teacher is transferring knowledge to the students, so the subject becomes relatively easy for them and they don't have to work everything out from scratch by themselves. Neural networks can do something similar. Say we have a network or model that has been trained on a massive dataset. The knowledge the network gained from this data is stored as "weights" or "parameters". We can extract these parameters and transfer them to another model. In this way we transfer the learned features, so we don't have to train our new neural network from scratch.
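To make the idea of transferring parameters concrete, here is a minimal sketch in plain Python. It is a toy illustration, not how Torch or DeepMask stores weights: the models are hypothetical dicts that map a layer name to a list of numbers, and the layer names (`conv1`, `conv2`, `head`) are made up for the example. The task-specific last layer is deliberately not copied, because the new task usually needs its own.

```python
import copy

def transfer_parameters(pretrained, target, skip_prefixes=("head",)):
    """Copy matching parameters from `pretrained` into a copy of `target`.

    Both models are plain dicts: layer name -> list of floats (a toy stand-in
    for real weight tensors). Layers whose name starts with one of
    `skip_prefixes` keep the target's own values, because they are specific
    to the new task.
    """
    result = copy.deepcopy(target)
    transferred = []
    for name, values in pretrained.items():
        if name.startswith(tuple(skip_prefixes)):
            continue  # task-specific layer: do not transfer
        if name in result and len(result[name]) == len(values):
            result[name] = list(values)
            transferred.append(name)
    return result, transferred

# Hypothetical models: the pretrained one has learned values, the new one has not.
pretrained = {"conv1": [0.2, -0.5, 0.1], "conv2": [0.7, 0.3], "head": [1.0, 2.0, 3.0]}
new_model = {"conv1": [0.0, 0.0, 0.0], "conv2": [0.0, 0.0], "head": [0.0, 0.0]}

new_model, transferred = transfer_parameters(pretrained, new_model)
print(transferred)         # names of the layers that were copied
print(new_model["head"])   # still the new model's own values
```

After the transfer, only the layers that were skipped need to be learned from scratch on the new data; the copied layers start from what the pretrained model already knows.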

After reading various research articles and consulting with my supervisor, I am starting by evaluating Facebook's pretrained DeepMask object proposal model.

DeepMask was proposed by Pedro O. Pinheiro, Ronan Collobert and Piotr Dollár in the research article "Learning to Segment Object Candidates".

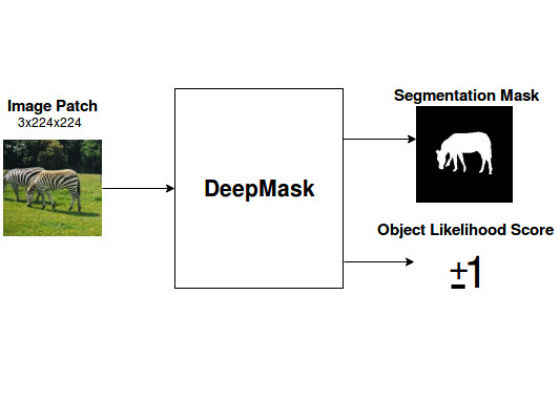

In DeepMask, the authors proposed a new discriminative, convolutional-network-based approach for segmenting object masks. DeepMask consists of two parts and is trained on patches of sample images. The first part of the model outputs a segmentation mask around an object without telling which class that mask belongs to. The second part outputs a score that tells us whether the given patch contains an object. Compared to previous approaches, DeepMask can detect a large number of real objects and generalizes to unseen categories more efficiently. Further, it does not rely on low-level segmentation cues such as edges or groups of connected pixels with similar color or grey levels.

Fig 1: Overview of DeepMask

Given an input patch, the algorithm predicts a segmentation mask together with an object likelihood score.

A score of 1 is assigned if an object is present at the center of the patch and is fully, not just partially, contained within the patch. Otherwise a score of -1 is assigned. In practice, most objects are slightly offset from a precisely centered position, so to increase tolerance and robustness each centered position is randomly jittered during training. At test time, the model is applied to the whole test image, which generates segmentation masks with corresponding object likelihood scores.

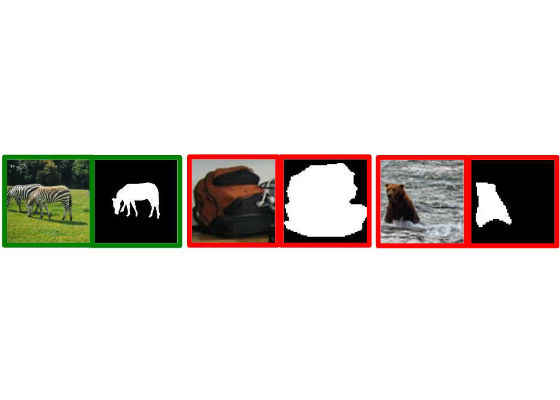

Fig 2: The green patch satisfies the constraints above and is therefore given a score of 1. In the first red patch the object is only partially present, and in the second red patch the object is not centered, so both are assigned a score of -1.
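The scoring rule and the jitter can be sketched in a few lines of Python. This is only my reading of the rule as described above, not code from DeepMask: boxes are `(x, y, width, height)` tuples, and the values of `center_tolerance` and `max_shift` are arbitrary numbers chosen for illustration.

```python
import random

def patch_label(patch, obj, center_tolerance=4):
    """Return 1 if `obj` lies fully inside `patch` and is roughly centered, else -1.

    Both boxes are (x, y, width, height) in pixels. `center_tolerance` is a
    hypothetical value, not the one used by DeepMask.
    """
    px, py, pw, ph = patch
    ox, oy, ow, oh = obj
    fully_inside = (ox >= px and oy >= py and
                    ox + ow <= px + pw and oy + oh <= py + ph)
    dx = abs((ox + ow / 2) - (px + pw / 2))
    dy = abs((oy + oh / 2) - (py + ph / 2))
    centered = dx <= center_tolerance and dy <= center_tolerance
    return 1 if (fully_inside and centered) else -1

def jitter(patch, max_shift=4, rng=random):
    """Shift a patch by a small random offset, as done for training patches."""
    px, py, pw, ph = patch
    return (px + rng.randint(-max_shift, max_shift),
            py + rng.randint(-max_shift, max_shift), pw, ph)

shelter = (40, 40, 20, 20)                       # object centered at (50, 50)
print(patch_label((0, 0, 100, 100), shelter))    # 1: fully inside and centered
print(patch_label((30, 0, 100, 100), shelter))   # -1: inside but off-center
print(patch_label((45, 0, 100, 100), shelter))   # -1: only partially inside
print(patch_label(jitter((0, 0, 100, 100)), shelter))  # small jitter keeps the label 1
```

Because the jitter here never exceeds the centering tolerance, a jittered positive patch stays positive, which is the kind of robustness the random jitter is meant to teach the network.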

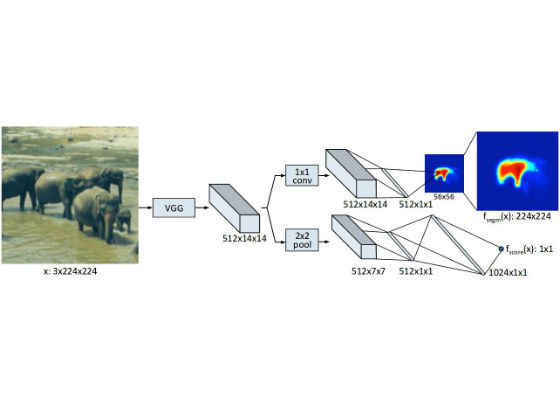

The two tasks, mask prediction and score prediction, share most of the layers of the network; only the last, task-specific layers differ. Using the same layers for both tasks reduces the capacity of the model considerably and also increases the speed of full-scene inference at test time. The architecture overview is given below:

Fig 3: Architecture of DeepMask

More implementation and architecture details can be found in the DeepMask paper.
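The idea of one shared trunk feeding two task-specific heads can be shown with a toy network in plain Python. This is not the DeepMask architecture (which is convolutional and far larger); the fully connected layers, the sizes and the class name `TwoHeadNet` are all assumptions made for the sketch. The point is only that the shared features are computed once and reused by both heads.

```python
import random

def linear(weights, inputs):
    """Multiply a weight matrix (list of rows) by an input vector."""
    return [sum(w * v for w, v in zip(row, inputs)) for row in weights]

def relu(values):
    return [max(0.0, v) for v in values]

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

class TwoHeadNet:
    """Toy network: a shared trunk followed by a mask head and a score head."""

    def __init__(self, n_in, n_hidden, mask_side, seed=0):
        rng = random.Random(seed)
        self.trunk = random_matrix(n_hidden, n_in, rng)                     # shared layers
        self.mask_head = random_matrix(mask_side * mask_side, n_hidden, rng)
        self.score_head = random_matrix(1, n_hidden, rng)

    def forward(self, patch):
        features = relu(linear(self.trunk, patch))       # computed once
        mask_logits = linear(self.mask_head, features)   # one value per mask pixel
        score = linear(self.score_head, features)[0]     # single objectness score
        return mask_logits, score

net = TwoHeadNet(n_in=16, n_hidden=8, mask_side=4)
mask_logits, score = net.forward([0.5] * 16)
print(len(mask_logits), "mask values and one score:", score)
```

Only the two small head matrices are specific to a task; everything in `trunk` is paid for once per patch, which is where the saving at inference time comes from.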

Here are some example results produced by DeepMask.

Fig 4: DeepMask Results

Figures 2, 3 and 4 are taken from the DeepMask research article.

The rest of this post covers how to use the pretrained DeepMask model and what you need to prepare before starting training.

Installation:

Prerequisites:

First install torch following this link

Then clone the coco api repository:

git clone https://github.com/pdollar/coco.git

After cloning, run this command under coco/:

luarocks make LuaAPI/rocks/coco-scm-1.rockspec

Install other packages too by running the following commands:

luarocks install image

luarocks install tds

luarocks install json

luarocks install nnx

luarocks install optim

luarocks install inn

luarocks install cutorch

luarocks install cunn

luarocks install cudnn

After installing dependencies:

Create a directory for deepmask:

mkdir deepmask

Store its absolute path in a variable (the path below is the one on my machine; use your own):

DEEPMASK=/data/mabubakr/deepmask

Clone the DeepMask repository into that path:

git clone https://github.com/facebookresearch/deepmask.git $DEEPMASK

Create a directory for the pretrained DeepMask model and go to that directory:

mkdir -p $DEEPMASK/pretrained/deepmask; cd $DEEPMASK/pretrained/deepmask

Download pretrained deepmask.

wget https://s3.amazonaws.com/deepmask/models/deepmask/model.t7

In the data directory of DeepMask, create a new directory for your training samples (the -p flag also creates the data directory if it does not exist yet):

mkdir -p $DEEPMASK/data/train2014

Copy all your training data here.

In a similar way, create another directory in the data directory of DeepMask:

mkdir $DEEPMASK/data/val2014

Copy all your validation samples here.

Then create an annotations directory for your labels in the data directory:

mkdir $DEEPMASK/data/annotations

Copy all your annotation files, for both training and validation samples, into the annotations directory. The annotation files should be named:

instances_train2014.json

instances_val2014.json
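Before starting a long training run, it can save time to check that everything is where it should be. The following is a small sketch of such a check, assuming the directory layout created above and the COCO-style file names `instances_train2014.json` and `instances_val2014.json`; the helper name `missing_paths` is my own and not part of DeepMask.

```python
import os

# Paths expected under the DeepMask root, relative to $DEEPMASK.
EXPECTED = (
    "data/train2014",
    "data/val2014",
    "data/annotations/instances_train2014.json",
    "data/annotations/instances_val2014.json",
)

def missing_paths(deepmask_root):
    """Return the expected paths that do not exist under `deepmask_root`."""
    return [rel for rel in EXPECTED
            if not os.path.exists(os.path.join(deepmask_root, rel))]

if __name__ == "__main__":
    # Falls back to the current directory if DEEPMASK is not set.
    root = os.environ.get("DEEPMASK", ".")
    for rel in missing_paths(root):
        print("missing:", rel)
```

Run it with the DEEPMASK variable exported; if it prints nothing, the data layout is in place.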

Both annotation files must follow the annotation format that DeepMask expects, which is the COCO instance annotation format:

{

"info" : info,

"images" : [images],

"licenses" : [licenses],

"annotations" : [annotation],

"categories" : [categories]

}

info {

"description" : str,

"url" : str,

"version" : str,

"year" : int,

"contributor" : str,

"date_created" : datetime,

}

images{

"license" : int,

"file_name" : str,

"coco_url" : str,

"height" : int,

"width" : int,

"date_captured" : datetime,

"flickr_url" : str,

"id" : int,

}

licenses{

"url" : str,

"id" : int,

"name" : str,

}

annotations{

"segmentation" : [segmentation],

"area" : float,

"iscrowd" : int,

"image_id" : int,

"bbox" : [x, y, width, height],

"category_id" : int,

"id" : int,

}

segmentation{

"counts" : array,

"size" : array,

}

categories{

"supercategory" : str,

"id" : int,

"name": str,

}
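To show how these pieces fit together, here is a minimal Python sketch that builds one annotation from a binary mask and checks that the ids in the file are consistent. It follows the field layout listed above and my understanding of the COCO conventions: `counts` holds run lengths read column by column, starting with a run of zeros, `size` is `[height, width]`, and `bbox` is `[x, y, width, height]`. The helper names, the toy mask and the example file name are my own, and I have not verified which segmentation encodings DeepMask's loader accepts, so treat this as an illustration of the structure rather than a guaranteed-working input.

```python
import json

def rle_encode(mask):
    """Uncompressed run-length encoding of a 0/1 mask given as a list of rows.

    Pixels are read column by column; the first count is the number of zeros.
    """
    height, width = len(mask), len(mask[0])
    counts, previous, run = [], 0, 0
    for x in range(width):
        for y in range(height):
            if mask[y][x] != previous:
                counts.append(run)
                run, previous = 0, mask[y][x]
            run += 1
    counts.append(run)
    return {"counts": counts, "size": [height, width]}

def make_annotation(ann_id, image_id, category_id, mask, iscrowd):
    """Build one annotation entry; assumes the mask has at least one object pixel."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return {
        "segmentation": rle_encode(mask),
        "area": sum(map(sum, mask)),          # number of object pixels
        "iscrowd": iscrowd,
        "image_id": image_id,
        "bbox": [xs[0], ys[0], xs[-1] - xs[0] + 1, ys[-1] - ys[0] + 1],
        "category_id": category_id,
        "id": ann_id,
    }

def check_references(dataset):
    """Return a list of annotations that point to unknown images or categories."""
    image_ids = {img["id"] for img in dataset["images"]}
    category_ids = {cat["id"] for cat in dataset["categories"]}
    problems = []
    for ann in dataset["annotations"]:
        if ann["image_id"] not in image_ids:
            problems.append("annotation %d: unknown image_id" % ann["id"])
        if ann["category_id"] not in category_ids:
            problems.append("annotation %d: unknown category_id" % ann["id"])
    return problems

# Toy 3x4 image with a 2x2 shelter in the middle (hypothetical data).
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
dataset = {
    "info": {"description": "toy example", "version": "1.0", "year": 2017},
    "images": [{"id": 1, "file_name": "camp_0001.jpg", "height": 3, "width": 4}],
    "licenses": [],
    "annotations": [make_annotation(1, 1, 1, mask, iscrowd=0)],
    "categories": [{"id": 1, "name": "shelter", "supercategory": "structure"}],
}
print(json.dumps(dataset["annotations"][0]))
print(check_references(dataset))   # an empty list means all ids line up
```

Writing the result with `json.dump(dataset, f)` to a file named `instances_train2014.json` in the annotations directory would then produce a file with the structure described above.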

After completing the steps above, run this command under deepmask/ to start training from the pretrained DeepMask model:

th train.lua -dm /path/to/pretrained/deepmask

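This starts the training and, as it runs, saves the corresponding model, which you can use for evaluation, in the following directory. (The directory is named sharpmask because, in the DeepMask repository, passing -dm to train.lua trains the SharpMask refinement stage on top of the given DeepMask model.)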

/deepmask/exps/sharpmask/corresponding directory

To evaluate the results during training, run a command like this, again under deepmask/, with the image that you want to test:

th computeProposals.lua /deepmask/exps/sharpmask/corresponding directory -img /path/to/testing/image.jpg

The evaluation result will be produced as res.jpg in the same directory.

In my next blog post I will show how I created my annotation file from the given shapefile and sample images. I will also describe the problems I faced during installation and training of my model, and how I solved them. Keep following, and if you have any questions do not hesitate to ask in the comments section.


Source:https://software.intel.com/en-us/blogs/2017/08/25/deepmask-installation-and-annotation-format-for-satellite-imagery-project